Breast Cancer Computer-Aided Detection

The QuData team developed an AI-based solution for early breast cancer detection using a deep learning model that automatically classifies mammography images according to the BI-RADS system. The algorithm includes localization of suspicious areas, image enhancement, contrast windowing, and advanced data augmentation. The system improves the accuracy of detecting pathologies at early stages, even in complex clinical cases, while reducing the workload on medical staff and providing a reliable tool to support decision-making.

Business Challenge

Breast cancer remains a significant global health concern, affecting millions of women every year. The existing landscape of breast cancer diagnosis is marked by challenges that necessitate innovative solutions. Traditional methods often rely on subjective human interpretation, leading to inconsistencies and the potential for missed diagnoses. Moreover, the increasing volume of mammogram screenings strains healthcare systems, demanding faster and more reliable ways to interpret results.

The QuData team set out to develop an intelligent system that could analyze mammograms with high accuracy, reduce interpretation time, and give healthcare professionals a dependable tool to enhance patient care.

Solution Overview

Our response to the pressing challenge of breast cancer diagnosis is an AI-powered system that combines deep learning algorithms and advanced image analysis. This innovative solution is designed to streamline the diagnosis process, minimize subjectivity, and expedite accurate interpretations.

Using deep learning, our system learns feature representations directly from mammograms, enabling rapid and precise analysis. The AI-driven tool categorizes findings according to the established BI-RADS categories, providing medical practitioners with consistent and standardized insights. This approach not only enhances diagnostic accuracy but also helps healthcare professionals make informed decisions quickly.

The practical impact of our solution is profound: faster diagnoses, reduced human error, and ultimately, improved patient outcomes. Through the seamless integration of AI, we aspire to redefine breast cancer diagnosis and set a new benchmark for medical innovation.

Technical Details

In our research, we noticed that most existing studies on breast cancer diagnosis focus on distinguishing between malignant and benign mammogram exams, and only a limited number of methods can assign mammograms to more than two categories. This motivated us to develop a deep learning system that classifies mammograms into the five BI-RADS categories (Breast Imaging-Reporting and Data System; BI-RADS 1-5).

We trained our neural network on the VinDr dataset, which is notable for being the most extensive publicly accessible collection in its field, with a total of 20,000 scans. The dataset, used solely for non-commercial purposes, was central to the development of our neural network. Its class distribution across BI-RADS categories is as follows: BI-RADS 1 (67.03%), BI-RADS 2 (23.38%), BI-RADS 3 (4.65%), BI-RADS 4 (3.81%), and BI-RADS 5 (1.13%).
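To make the imbalance concrete, the short calculation below converts the reported percentages into approximate image counts and majority-to-class ratios. It assumes the percentages apply to all 20,000 images; the names `BIRADS_SHARE`, `approx_counts`, and `imbalance_ratios` are hypothetical and used only for illustration.

```python
# Reported BI-RADS class shares for the VinDr dataset, in percent
BIRADS_SHARE = {1: 67.03, 2: 23.38, 3: 4.65, 4: 3.81, 5: 1.13}
TOTAL_IMAGES = 20_000  # assumption: the shares apply to all 20,000 images


def approx_counts(shares, total):
    """Convert percentage shares into approximate integer image counts."""
    return {c: round(total * p / 100) for c, p in shares.items()}


def imbalance_ratios(counts):
    """How many times larger the majority class is than each class."""
    majority = max(counts.values())
    return {c: majority / n for c, n in counts.items()}


counts = approx_counts(BIRADS_SHARE, TOTAL_IMAGES)
# counts → {1: 13406, 2: 4676, 3: 930, 4: 762, 5: 226}
ratios = imbalance_ratios(counts)
# ratios[5] ≈ 59.3: roughly 59 BI-RADS 1 images for every BI-RADS 5 image
```

Under this assumption, a model that always predicted BI-RADS 1 would already be right about two times in three, which is why per-class metrics and imbalance handling matter here.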

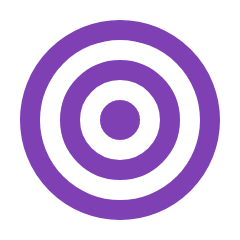

Mammograms typically contain large areas of black background that carry no relevant information, which makes training on full scans resource-intensive. To address this, we used OpenCV, a computer vision library, to identify and isolate the breast region in each original scan. Cropping to this region significantly reduced the computational cost of training and focused the model on the diagnostically relevant area.

We applied windowing to enhance the contrast and brightness of the images. Windowing selects a specific range (window) of pixel values and remaps it to a new output range, which improved the visual quality of the images and highlighted important features and details for interpretation and analysis.
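The windowing step can be sketched with NumPy as follows: values inside a window defined by a center and a width are linearly stretched to 0-255, and values outside it are clipped. The function name `apply_window` and the example center and width are hypothetical; the window settings used in the project are not stated.

```python
import numpy as np


def apply_window(image: np.ndarray, center: float, width: float) -> np.ndarray:
    """Map pixel values in [center - width/2, center + width/2] to 0..255.

    Values below the window become 0 and values above it become 255,
    which stretches the contrast of the selected intensity range.
    """
    if width <= 0:
        raise ValueError("window width must be positive")
    low = center - width / 2.0
    high = center + width / 2.0
    clipped = np.clip(image.astype(np.float32), low, high)
    scaled = (clipped - low) / (high - low) * 255.0
    return np.round(scaled).astype(np.uint8)


# Example with raw 12-bit-style values and a hypothetical window
raw = np.array([0, 1000, 1500, 3000, 4000])
windowed = apply_window(raw, center=2000, width=2000)
# windowed → [0, 0, 64, 255, 255]
```

A narrower window increases contrast within the chosen intensity range at the cost of saturating everything outside it.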

To address class imbalance, we applied several data augmentation techniques: CutMix, dropout, and affine transformations.
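Of the three augmentations, CutMix is the least self-explanatory, so a minimal NumPy sketch of the standard formulation follows: a random box from one image is pasted into another, and the one-hot labels are mixed in proportion to the pasted area. The function name, the Beta parameter `alpha=1.0`, and the per-pair interface are assumptions; how CutMix was configured in the project is not stated, and dropout and affine transforms are not shown.

```python
import numpy as np


def cutmix(img_a, label_a, img_b, label_b, alpha=1.0, rng=None):
    """Paste a random box from img_b into img_a and mix one-hot labels.

    Images must share the same H x W (x C) shape; labels are one-hot vectors.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = img_a.shape[:2]
    lam = rng.beta(alpha, alpha)  # target share of img_a that is kept
    cut_h = int(h * np.sqrt(1.0 - lam))
    cut_w = int(w * np.sqrt(1.0 - lam))
    cy, cx = rng.integers(h), rng.integers(w)  # random box center
    y1, y2 = np.clip(cy - cut_h // 2, 0, h), np.clip(cy + cut_h // 2, 0, h)
    x1, x2 = np.clip(cx - cut_w // 2, 0, w), np.clip(cx + cut_w // 2, 0, w)
    mixed = img_a.copy()
    mixed[y1:y2, x1:x2] = img_b[y1:y2, x1:x2]
    # Recompute the mixing weight from the actual box (it may be clipped)
    lam_adj = 1.0 - ((y2 - y1) * (x2 - x1)) / (h * w)
    label = lam_adj * label_a + (1.0 - lam_adj) * label_b
    return mixed, label
```

Because the label weight is recomputed from the pasted area, the mixed label always matches how much of each source image is actually visible.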

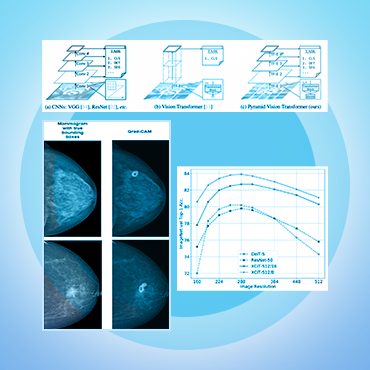

The model is built on ConvNeXt-Small (ConvNeXt V1), a state-of-the-art convolutional architecture pre-trained on the ImageNet dataset.

We used stochastic gradient descent (SGD) with momentum = 0.9 as the optimizer and cross-entropy as the loss function. Model performance on the 5-class BI-RADS classification task was evaluated with the F1-score. The model was trained for 60 epochs with a batch size of 8 on an NVIDIA Tesla T4 GPU.
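The training setup described above can be sketched in PyTorch as follows. SGD with momentum 0.9, cross-entropy loss, a batch size of 8, and 60 epochs come from the text; the learning rate of 0.01 is a placeholder assumption (it is not stated), and so is macro averaging of the F1-score, since the source does not say how per-class scores were combined. All function names are hypothetical.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, Dataset

BATCH_SIZE = 8   # batch size reported in the text
NUM_EPOCHS = 60  # number of epochs reported in the text


def make_loader(dataset: Dataset, shuffle: bool = True) -> DataLoader:
    return DataLoader(dataset, batch_size=BATCH_SIZE, shuffle=shuffle)


def make_optimizer(model: nn.Module, lr: float = 0.01) -> torch.optim.Optimizer:
    # SGD with momentum 0.9 as described; lr=0.01 is an assumed placeholder
    return torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)


def train_one_epoch(model, loader, optimizer, device="cpu") -> float:
    """Run one pass over the data and return the mean cross-entropy loss."""
    criterion = nn.CrossEntropyLoss()
    model.train()
    total_loss, seen = 0.0, 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        optimizer.zero_grad()
        loss = criterion(model(images), labels)  # labels: class indices 0..4
        loss.backward()
        optimizer.step()
        total_loss += loss.item() * labels.size(0)
        seen += labels.size(0)
    return total_loss / seen


@torch.no_grad()
def predict(model, loader, device="cpu"):
    """Return (true labels, predicted labels) as Python lists."""
    model.eval()
    y_true, y_pred = [], []
    for images, labels in loader:
        logits = model(images.to(device))
        y_pred.extend(logits.argmax(dim=1).tolist())
        y_true.extend(labels.tolist())
    return y_true, y_pred


def macro_f1(y_true, y_pred, num_classes: int = 5) -> float:
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    scores = []
    for c in range(num_classes):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / num_classes
```

A full run would call `train_one_epoch` once per epoch for `NUM_EPOCHS` epochs and then score the validation predictions with `macro_f1`. Macro averaging weights every BI-RADS class equally, so the rare BI-RADS 4 and 5 classes count as much as BI-RADS 1; plain accuracy would hide poor performance on them.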

The classification model can also be used to identify and localize lesion regions. For this purpose, we apply the Grad-CAM technique to generate heat maps that highlight the areas of interest in mammograms.
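A minimal Grad-CAM sketch in PyTorch is given below. It records the activations of a chosen convolutional layer and their gradients with respect to the target class score, weights each feature map by its average gradient, applies ReLU, and upsamples the result to the input size. The class name and interface are hypothetical, and the choice of target layer is an assumption; for a torchvision ConvNeXt, the last feature stage (`model.features[-1]`) is a natural candidate.

```python
from typing import Optional

import torch
import torch.nn as nn
import torch.nn.functional as F


class GradCAM:
    """Grad-CAM heat maps for one convolutional layer of a classifier."""

    def __init__(self, model: nn.Module, target_layer: nn.Module):
        self.model = model
        self.activations = None
        self.gradients = None
        target_layer.register_forward_hook(self._forward_hook)

    def _forward_hook(self, module, inputs, output):
        # Keep the feature maps and attach a hook that captures their gradient
        self.activations = output.detach()
        output.register_hook(self._save_gradient)

    def _save_gradient(self, grad):
        self.gradients = grad.detach()

    def __call__(self, image: torch.Tensor, class_idx: Optional[int] = None) -> torch.Tensor:
        """image: (1, C, H, W). Returns an (H, W) heat map scaled to [0, 1]."""
        self.model.eval()
        logits = self.model(image)
        if class_idx is None:
            class_idx = int(logits.argmax(dim=1))  # explain the predicted class
        self.model.zero_grad()
        logits[0, class_idx].backward()
        # Channel weights: spatially averaged gradients, shape (1, K, 1, 1)
        weights = self.gradients.mean(dim=(2, 3), keepdim=True)
        cam = F.relu((weights * self.activations).sum(dim=1, keepdim=True))
        cam = F.interpolate(cam, size=image.shape[-2:], mode="bilinear",
                            align_corners=False)
        cam = cam - cam.min()
        cam = cam / (cam.max() + 1e-8)
        return cam[0, 0]
```

The returned map can be overlaid on the (cropped, windowed) mammogram to show which regions drove the predicted BI-RADS category. The hook assumes the target layer's output is not modified in place afterward (for example, by `nn.ReLU(inplace=True)`).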

Technology Stack

OpenCV

PyTorch