Integrated AI development platform for drones

The QuData team created an open hardware and software platform for testing and developing AI algorithms for drone control by combining the AirSim simulator with a compact Raspberry Pi module. The solution provides a complete environment for real-time debugging of visual odometry, computer vision, and navigation systems.

Business Challenge

One of the main challenges arose during the development of a solution for autonomous flight control: ready-made drone-plus-AI systems with an open architecture for developing and testing control systems turned out not to be available for purchase or use as pre-built packages.

Developing and testing solutions built on computer vision and machine learning tools required a platform for testing and debugging data processing and drone control systems. The platform had to be mobile (compact), support various architectures and libraries, deliver enough performance for real-time computation, remain stable under the power conditions of extreme operation, and support a range of peripheral equipment. It also had to support various external controllers and their integration protocols.

It was also crucial for the solution to provide maximum flexibility and easy access to test data for real-time monitoring of the performance metrics of control algorithms. Furthermore, it had to be budget-friendly, with the components readily available for order without restrictions.

Solution Overview

Developing comprehensive solutions of this kind requires both a hardware and a software environment. For initial testing of algorithms and solutions used in robotics, we chose AirSim, a cross-platform, open-source simulator for unmanned aerial vehicles built on Unreal Engine 4, as a flexible platform for AI research. At this stage, we developed and tested, in a virtual environment, solutions for controlling flying devices, for visual odometry, and for searching for, classifying, and identifying objects on the ground. Development and testing were conducted using emulated sensors and vehicles.
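Visual odometry typically estimates the relative motion between consecutive camera frames and then chains those relative motions into a trajectory. The minimal sketch below shows only that chaining step in 2D (x, y, heading). The per-frame motion estimates are hypothetical inputs, and `Pose2D`, `compose`, and `integrate` are illustrative names, not part of AirSim or the team's code.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position in metres, heading in radians (assumed convention)."""
    x: float
    y: float
    theta: float


def compose(pose: Pose2D, delta: Pose2D) -> Pose2D:
    """Apply a relative motion `delta`, expressed in the body frame of `pose`."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    heading = pose.theta + delta.theta
    return Pose2D(
        x=pose.x + c * delta.x - s * delta.y,
        y=pose.y + s * delta.x + c * delta.y,
        # Wrap heading to (-pi, pi] so it does not grow without bound.
        theta=math.atan2(math.sin(heading), math.cos(heading)),
    )


def integrate(deltas: list[Pose2D], start: Pose2D = Pose2D(0.0, 0.0, 0.0)) -> list[Pose2D]:
    """Chain frame-to-frame motion estimates into a trajectory (dead reckoning)."""
    trajectory = [start]
    for d in deltas:
        trajectory.append(compose(trajectory[-1], d))
    return trajectory


if __name__ == "__main__":
    # Hypothetical estimates: 1 m forward, turn left 90 degrees, 1 m forward.
    steps = [Pose2D(1.0, 0.0, 0.0), Pose2D(0.0, 0.0, math.pi / 2), Pose2D(1.0, 0.0, 0.0)]
    final = integrate(steps)[-1]
    print(f"x={final.x:.2f} y={final.y:.2f} theta={math.degrees(final.theta):.1f} deg")
```

Because every step builds on the previous estimate, small per-frame errors accumulate as drift. This is one reason to check visual odometry against exact ground truth, which a simulator can provide, before moving to real flights.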

For hardware support, we needed a mobile platform that met these requirements and was compatible with the basic usage constraints. Initially, we considered Nvidia's Jetson line of microcomputers as the base platform, but testing revealed several shortcomings that ruled these products out as the foundation of the testing system: the Jetson line proved limited in performance, not very compact, power-hungry, and subject to ordering restrictions. We ultimately settled on Raspberry Pi. This platform meets essentially all the requirements for a test platform, although it does not offer options suited to small-scale production.

Using the test platform allowed us to integrate the solution into an actual drone and gain access to real-time metric tracking.
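Real-time metric tracking for a control algorithm often comes down to watching the loop rate and per-iteration latency. The following is a minimal sketch of a rolling-window tracker; the class name `LoopMetrics`, the window size, and the choice of metrics are assumptions for illustration, not the platform's actual telemetry format.

```python
from collections import deque
from statistics import mean


class LoopMetrics:
    """Rolling statistics over the most recent control-loop iterations (hypothetical helper)."""

    def __init__(self, window: int = 100) -> None:
        # Bounded buffers: old samples are discarded automatically.
        self.latencies_s = deque(maxlen=window)
        self.timestamps_s = deque(maxlen=window)

    def record(self, timestamp_s: float, latency_s: float) -> None:
        """Store when an iteration started and how long its processing took."""
        self.timestamps_s.append(timestamp_s)
        self.latencies_s.append(latency_s)

    def loop_rate_hz(self) -> float:
        """Average iteration rate across the window (0.0 until two samples exist)."""
        if len(self.timestamps_s) < 2:
            return 0.0
        span = self.timestamps_s[-1] - self.timestamps_s[0]
        return (len(self.timestamps_s) - 1) / span if span > 0 else 0.0

    def mean_latency_ms(self) -> float:
        """Mean processing time per iteration, in milliseconds."""
        return mean(self.latencies_s) * 1000.0 if self.latencies_s else 0.0

    def worst_latency_ms(self) -> float:
        """Slowest iteration in the window, in milliseconds."""
        return max(self.latencies_s) * 1000.0 if self.latencies_s else 0.0


if __name__ == "__main__":
    metrics = LoopMetrics(window=50)
    # Simulated samples: an iteration every 10 ms, each taking 2-4 ms of processing.
    for i, latency in enumerate([0.002, 0.003, 0.004, 0.002, 0.003]):
        metrics.record(timestamp_s=i * 0.010, latency_s=latency)
    print(f"{metrics.loop_rate_hz():.1f} Hz, mean {metrics.mean_latency_ms():.2f} ms, "
          f"worst {metrics.worst_latency_ms():.2f} ms")
```

Tracking the worst case alongside the mean matters on a drone: a single slow iteration can destabilize control even when the average looks healthy.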

Technical Details

To implement the virtual testing environment, we deployed an AirSim-based system and used it for initial verification of recognition algorithms on visual streams from virtual sensors.

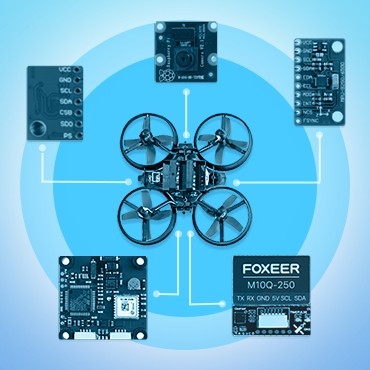
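When recognition algorithms are verified in a simulator, the true object positions are known exactly, so detections can be scored automatically. One common measure is intersection over union (IoU) between a predicted and a ground-truth bounding box. The sketch below assumes axis-aligned boxes given as (x_min, y_min, x_max, y_max) in pixels and a hypothetical 0.5 match threshold; it illustrates the metric and is not the evaluation code used on this project.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding box in pixel coordinates (assumed format)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Clamp to zero so an "inverted" box (no overlap) has no area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter = Box(max(a.x_min, b.x_min), max(a.y_min, b.y_min),
                min(a.x_max, b.x_max), min(a.y_max, b.y_max)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


def recall_at(ground_truth: list[Box], detections: list[Box], threshold: float = 0.5) -> float:
    """Fraction of ground-truth boxes hit by any detection with IoU >= threshold.

    Simplified: matching is not one-to-one, so a single detection may count
    for several ground-truth boxes.
    """
    if not ground_truth:
        return 1.0
    hits = sum(1 for gt in ground_truth if any(iou(gt, d) >= threshold for d in detections))
    return hits / len(ground_truth)


if __name__ == "__main__":
    truth = [Box(0, 0, 10, 10), Box(20, 20, 30, 30)]
    found = [Box(1, 0, 11, 10)]  # overlaps the first object only
    print(f"IoU={iou(truth[0], found[0]):.3f}, recall={recall_at(truth, found):.2f}")
```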

An additional virtual environment for AirSim was developed, significantly expanding the capabilities for emulating drone behavior. This was necessary because ready-to-use virtual environments offer limited functionality and restrict modification of their template settings. For the hardware platform, after a series of stress tests, we selected the Raspberry Pi 5 (8GB) single-board computer with a set of external sensors: a barometer (MS5611) and a gyroscope/accelerometer (MPU9250). One of the main factors in choosing these sensors was their support for the SPI interface, which allows sensor states to be polled at a higher frequency than I2C. The Raspberry Pi Camera Module 2 was chosen as the primary camera. Unlike the Camera Module 3, it has no physical autofocus mechanism, which makes it less susceptible to the vibrations that degraded the visual data captured during test missions.
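The SPI advantage can be made concrete with a rough estimate of how many burst reads of the IMU's measurement registers each bus could carry per second. This is a back-of-the-envelope sketch, not a measurement. It assumes a 14-byte read (accelerometer, temperature, gyroscope), ideal bus timing without clock stretching or gaps between bytes, I2C fast mode at 400 kHz, and a conservative 1 MHz SPI clock (the MPU-9250 allows faster SPI clocks when reading sensor registers). In practice, the sensor's own output data rate and host software overhead also cap the polling frequency.

```python
"""Rough bus-time estimate for one IMU burst read over I2C vs SPI.

Assumptions (illustrative only): 14-byte burst read, ideal timing,
I2C fast mode at 400 kHz, SPI at 1 MHz.
"""

BURST_BYTES = 14  # accel XYZ (6) + temperature (2) + gyro XYZ (6)


def i2c_read_bits(n_bytes: int) -> int:
    """Bit times for an I2C register read: write address + register, repeated start, read.

    START, repeated START and STOP are counted as one bit time each (an approximation).
    """
    start, restart, stop = 1, 1, 1
    byte_with_ack = 9  # 8 data bits + 1 ACK/NACK bit
    addr_write = reg_addr = addr_read = byte_with_ack
    data = n_bytes * byte_with_ack
    return start + addr_write + reg_addr + restart + addr_read + data + stop


def spi_read_bits(n_bytes: int) -> int:
    """Bit times for an SPI burst read: one register/command byte, then the data."""
    return 8 + n_bytes * 8


def max_read_rate_hz(bits: int, clock_hz: float) -> float:
    """Upper bound on reads per second if the bus did nothing else."""
    return clock_hz / bits


if __name__ == "__main__":
    i2c_bits = i2c_read_bits(BURST_BYTES)
    spi_bits = spi_read_bits(BURST_BYTES)
    print(f"I2C @ 400 kHz: {i2c_bits} bits -> {max_read_rate_hz(i2c_bits, 400e3):.0f} reads/s")
    print(f"SPI @ 1 MHz:   {spi_bits} bits -> {max_read_rate_hz(spi_bits, 1e6):.0f} reads/s")
```

Under these assumptions, the script reports 156 bits and about 2564 reads/s for I2C versus 120 bits and about 8333 reads/s for SPI. That is roughly a 3x margin even at a conservative SPI clock, and the gap widens as the SPI clock increases.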

A separate power module was dedicated to supplying the 5 A current required for stable operation of the onboard module across flight modes, since supply levels in the drone's power circuit fluctuate significantly. Support for a more powerful external WiFi module and an external communication system was added for online telemetry monitoring. The overall status of the sensors and the test module, including telemetry and full logging, was managed by a custom build of Betaflight, with visual control through an extended version of the Betaflight Configurator.
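The 5 A requirement translates into a power budget that the dedicated module has to draw from the flight battery. The back-of-the-envelope sketch below uses a 5 V rail, matching the Raspberry Pi 5's supply voltage. The battery voltages (a hypothetical 4S LiPo, fully charged and sagging under load) and the 90% converter efficiency are assumptions chosen only for illustration.

```python
def converter_input_current(out_volts: float, out_amps: float,
                            in_volts: float, efficiency: float) -> float:
    """Battery-side current drawn by a DC-DC converter delivering out_volts x out_amps."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    # Input power = output power / efficiency; input current = input power / input voltage.
    return (out_volts * out_amps) / (efficiency * in_volts)


if __name__ == "__main__":
    OUT_V, OUT_A = 5.0, 5.0  # onboard module rail (5 A as in the text)
    EFFICIENCY = 0.90        # assumed converter efficiency
    # Hypothetical 4S LiPo: full charge vs. a heavily loaded, sagging pack.
    for label, battery_v in [("full 4S (16.8 V)", 16.8), ("sagging 4S (13.2 V)", 13.2)]:
        amps = converter_input_current(OUT_V, OUT_A, battery_v, EFFICIENCY)
        print(f"{label}: {OUT_V * OUT_A:.0f} W out -> {amps:.2f} A from the battery")
```

Because a step-down converter holds output power roughly constant, battery-side current rises as the pack voltage sags (about 1.65 A versus 2.10 A here). The converter therefore has to accept the full input voltage range seen in flight while still delivering a steady 5 A.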

This solution allows for testing complex algorithms and visual odometry systems on real flight missions with minimal time and cost.

Technology Stack

OpenCV

C++

Python

Betaflight