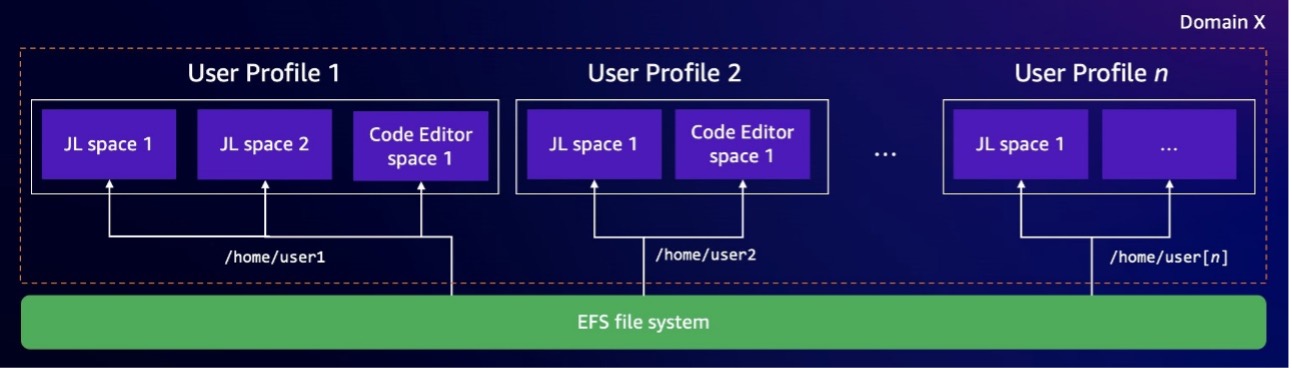

Amazon SageMaker Studio offers integrated IDEs like JupyterLab and RStudio for efficient ML workflows. Users can set up private spaces with Amazon EFS for seamless data sharing and centralized management, enabling individual storage and cross-instance file access.

Google plans to power AI datacentres with nuclear reactors, raising economic, ethical, and environmental concerns. Elon Musk donated $75m to a pro-Trump group, highlighting his role in the 2024 US election.

Ambience Healthcare, founded by Mike Ng MBA ’16 and Nikhil Buduma ’17, offers an AI-powered platform embedded in EHRs to automate tasks for clinicians, saving 2-3 hours per day on documentation and improving patient care. Used in 40+ institutions like UCSF Health, Ambience helps clinicians focus on patients, not paperwork, leading to lower burnout and better relationships.

Dictatorships are using AI and fake newsreaders to create disinformation videos. Actor Dan Dewhirst discusses his unwitting participation in a deepfake made for an authoritarian regime.

Monitoring vegetation health is crucial but challenging. Amazon SageMaker's geospatial capabilities offer a streamlined solution, mapping global vegetation in under 20 minutes.

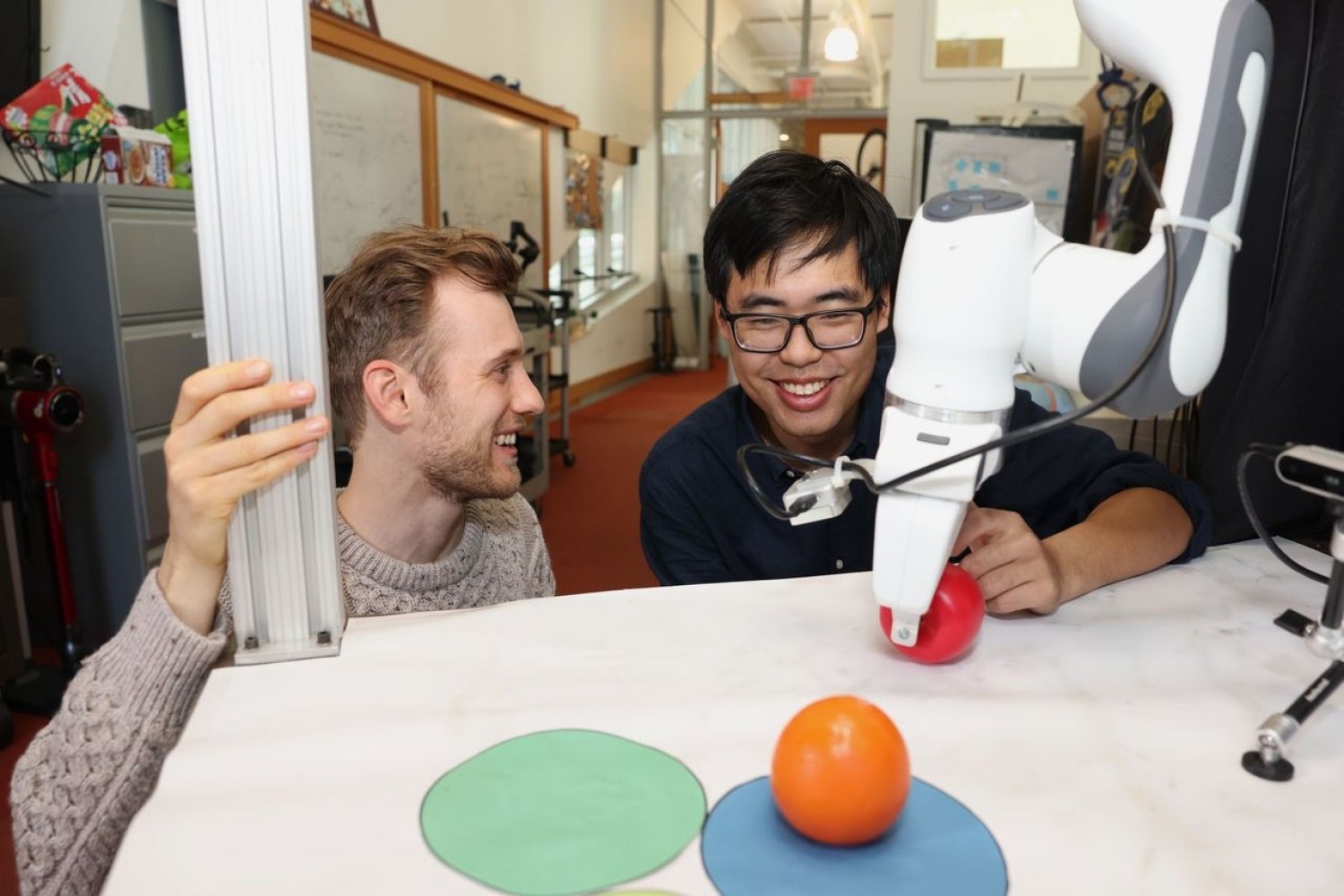

MIT researchers propose Diffusion Forcing, a new training technique that combines next-token and full-sequence diffusion models for flexible, reliable sequence generation. This method enhances AI decision-making, improves video quality, and aids robots in completing tasks by predicting future steps with varying noise levels.
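The "varying noise levels" idea can be illustrated with a minimal sketch: each token in a sequence gets its own independently sampled noise level, instead of one level shared by the whole sequence. This is not the researchers' implementation. The function name `noise_sequence`, the linear schedule, and the `num_levels` parameter are assumptions chosen for illustration.

```python
import math
import random

def noise_sequence(tokens, num_levels=10, rng=None):
    """Noise each token with its own independently sampled noise level.

    Hypothetical helper for illustration only; the linear schedule below
    is an assumption, not taken from the Diffusion Forcing paper.
    """
    rng = rng or random.Random()
    noisy, levels = [], []
    for x in tokens:
        k = rng.randint(0, num_levels)   # per-token level: 0 = clean, num_levels = pure noise
        alpha = 1.0 - k / num_levels     # fraction of signal kept (assumed linear schedule)
        eps = rng.gauss(0.0, 1.0)        # standard Gaussian noise
        noisy.append(math.sqrt(alpha) * x + math.sqrt(1.0 - alpha) * eps)
        levels.append(k)
    return noisy, levels

if __name__ == "__main__":
    noisy, levels = noise_sequence([1.0, 2.0, 3.0, 4.0], rng=random.Random(0))
    for x, k in zip(noisy, levels):
        print(f"level={k:2d} value={x:+.3f}")
```

A model trained on such sequences would receive the noisy tokens together with their levels and learn to recover the clean ones, so at generation time earlier steps can be kept nearly clean while later steps stay noisy.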

Synthesia's technology has been used to make deepfake videos promoting authoritarian regimes. One video shows a young man voicing support for Burkina Faso's dictator after a military coup.

Imogen Heap merges pop with tech in her daring data-mining project, showcasing her innovative 'Imogenation'. The musician and technologist captivates with her eccentricity and passion for progress, unveiling a mysterious black device that promises to change lives.

Tech barons are pushing new technologies as the answer to the climate crisis, even though fixes already exist. A former Google CEO advocates prioritizing AI over climate goals, sparking debate about tech's role in addressing climate change.

AI lobbyists resist regulation due to bipartisan voter distrust; Governor Newsom opposes SB1047 for excluding smaller AI models, advocating for comprehensive regulations instead. Journalist Garrison Lovely questions the decision, highlighting the need for proper oversight in the AI sector.

An AI chatbot impersonates a boss; a job seeker recounts the struggles of job hunting and a perfect job application.

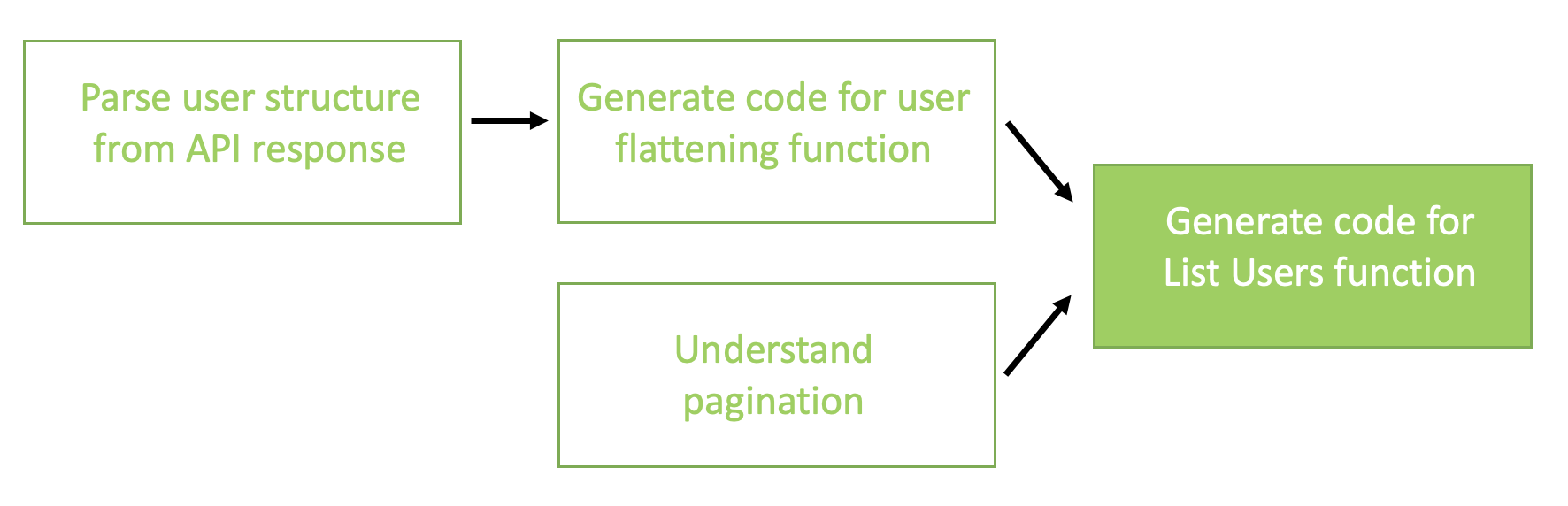

Generative AI transforms programming by offering intelligent assistance and automation. AWS and SailPoint collaborate to build a coding assistant using Anthropic’s technology on Amazon Bedrock to accelerate SaaS connector development. SailPoint specializes in enterprise identity security solutions, ensuring the right access to resources at the right times.

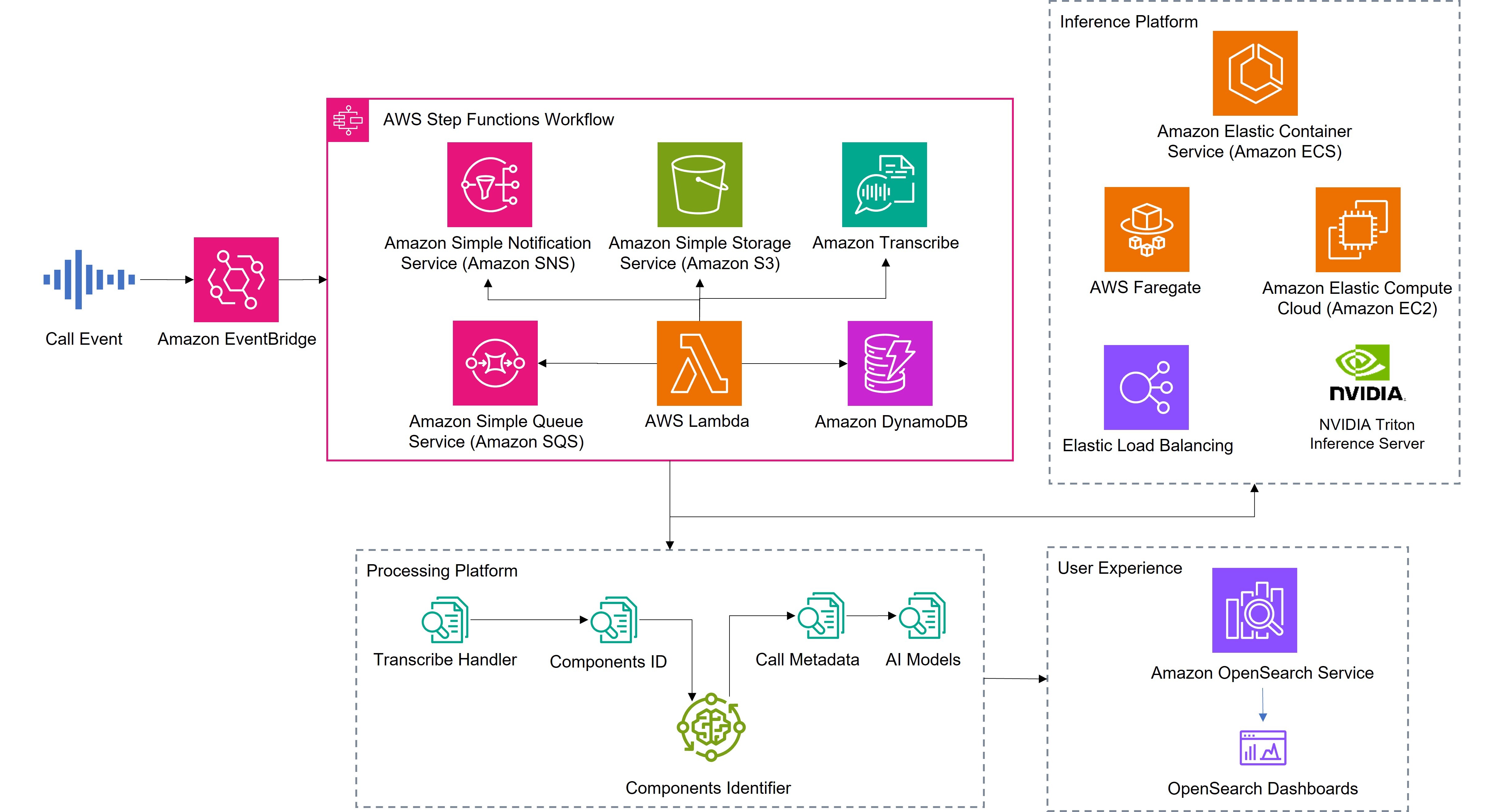

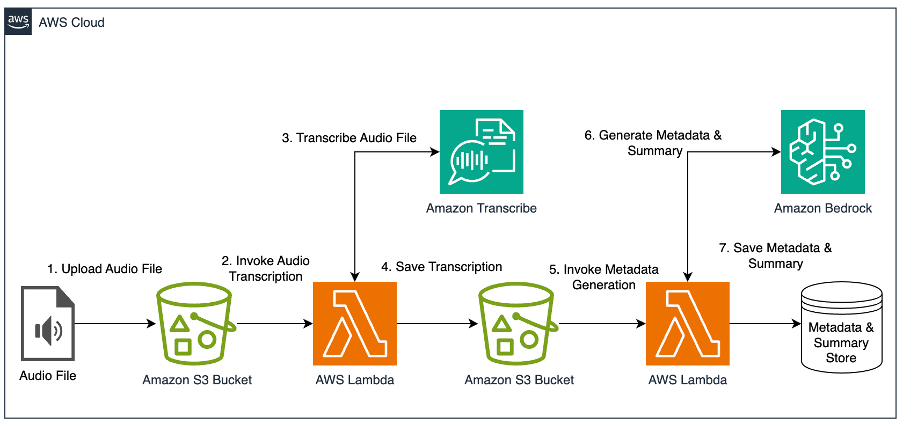

Intact Financial Corporation implements AI-powered Call Quality (CQ) solution using Amazon Transcribe to handle 1,500% more calls, reduce agent handling time by 10%, and gain valuable customer insights efficiently. Amazon Transcribe's deep learning capabilities and scalability were key factors in Intact's decision, allowing for accurate speech-to-text transcription and versatile post-call analy...

DPG Media enhances video metadata with AI, improving content recommendations and the consumer experience. The AI-powered processes were introduced in just 4 weeks, moving the company toward more automated annotation systems.

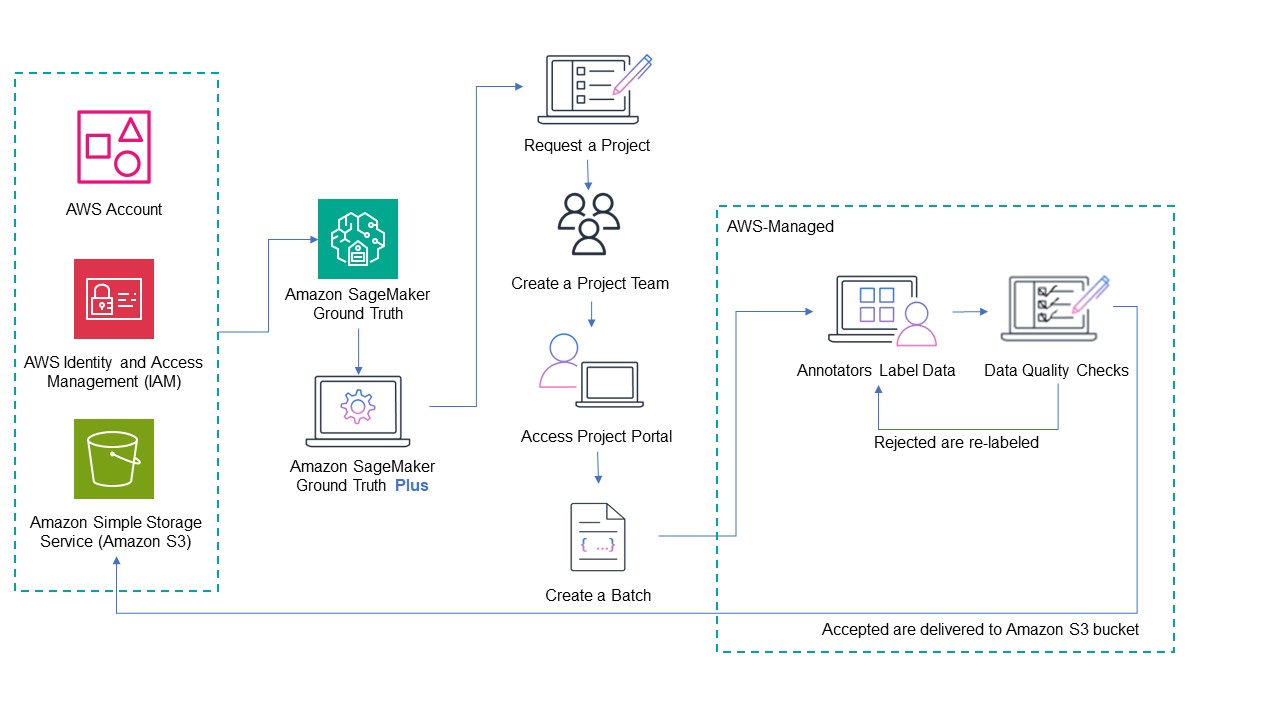

Amazon SageMaker Ground Truth is a data labeling service by AWS for various data types, supporting generative AI. It offers a self-serve option and SageMaker Ground Truth Plus for managing projects efficiently.