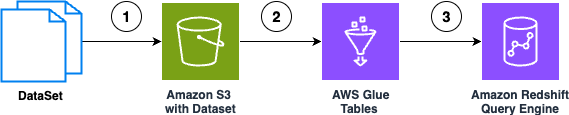

Amazon Bedrock Knowledge Bases simplifies natural language interactions with structured data sources, enabling accurate queries without requiring users to write SQL. The solution empowers organizations to quickly build conversational data interfaces, transforming data access capabilities and decision-making...

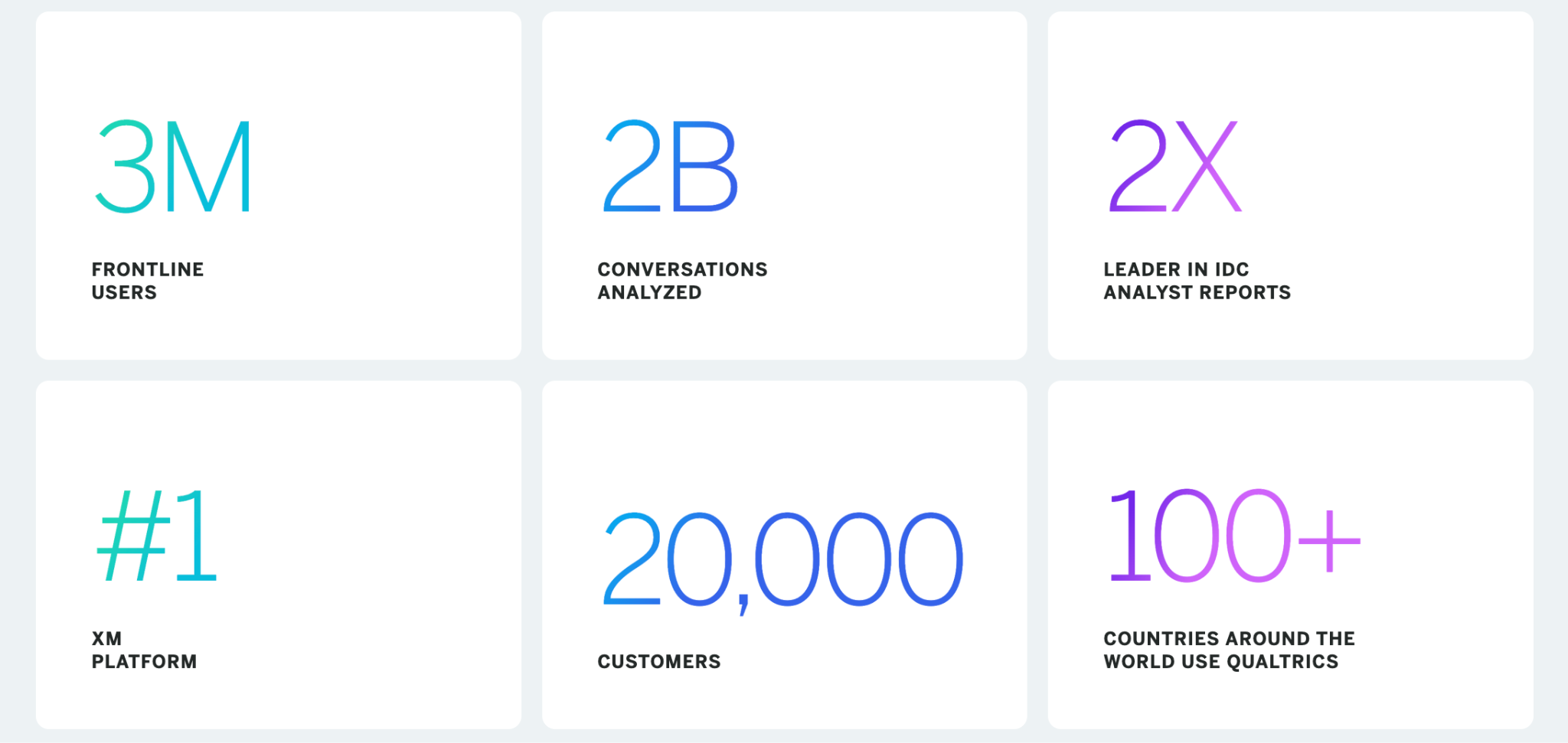

Qualtrics pioneers Experience Management (XM) with AI, ML, and NLP capabilities, enhancing customer connections and loyalty. Qualtrics's Socrates platform, powered by Amazon SageMaker, drives innovation in experience management with advanced ML...

The Air Mobility Command's 618th AOC is enhancing mission planning with AI-powered chat tools developed by Lincoln Laboratory. Natural language processing enables quick trend analysis and intelligent search capabilities for critical decision-making in the U.S. Air...

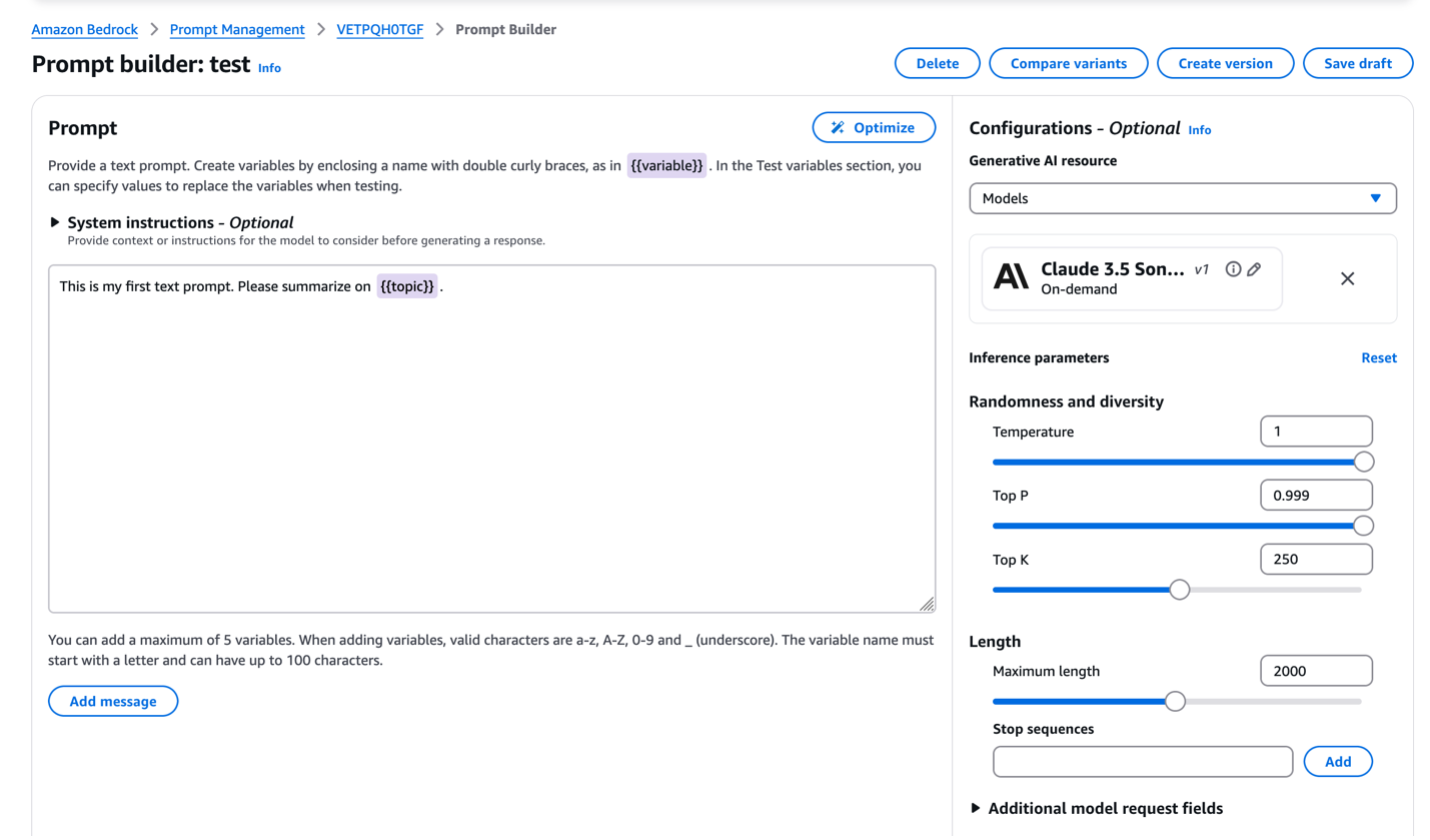

Yuewen Group is expanding its global influence through its WebNovel platform, adapting web novels into films and animations. Prompt Optimization on Amazon Bedrock improves the performance of large language models for intelligent text processing at Yuewen Group, overcoming challenges in prompt engineering and improving capabilities in specific use...

LettuceDetect, a lightweight hallucination detector for RAG pipelines, surpasses prior models, offering efficiency and open-source accessibility. Large Language Models face hallucination challenges, but LettuceDetect helps spot and address inaccuracies, enhancing reliability in critical...

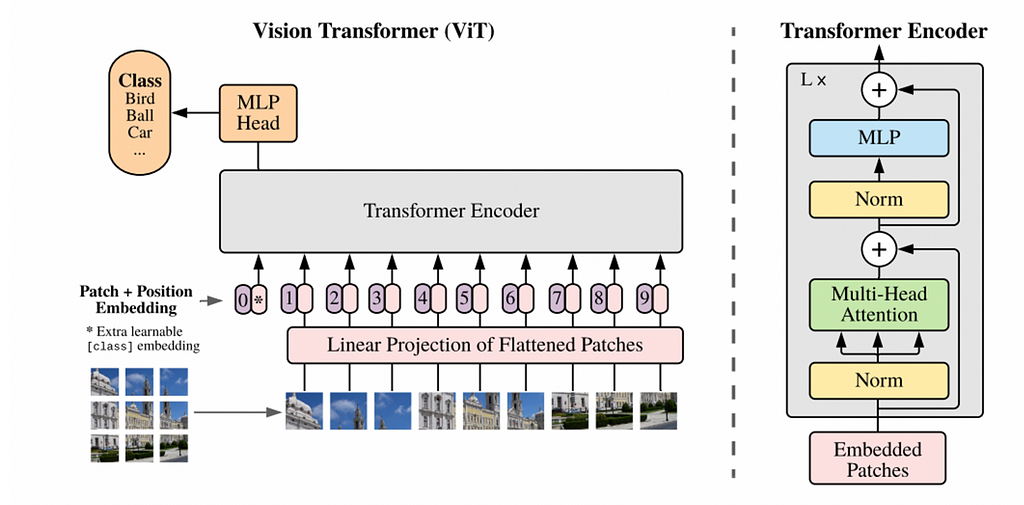

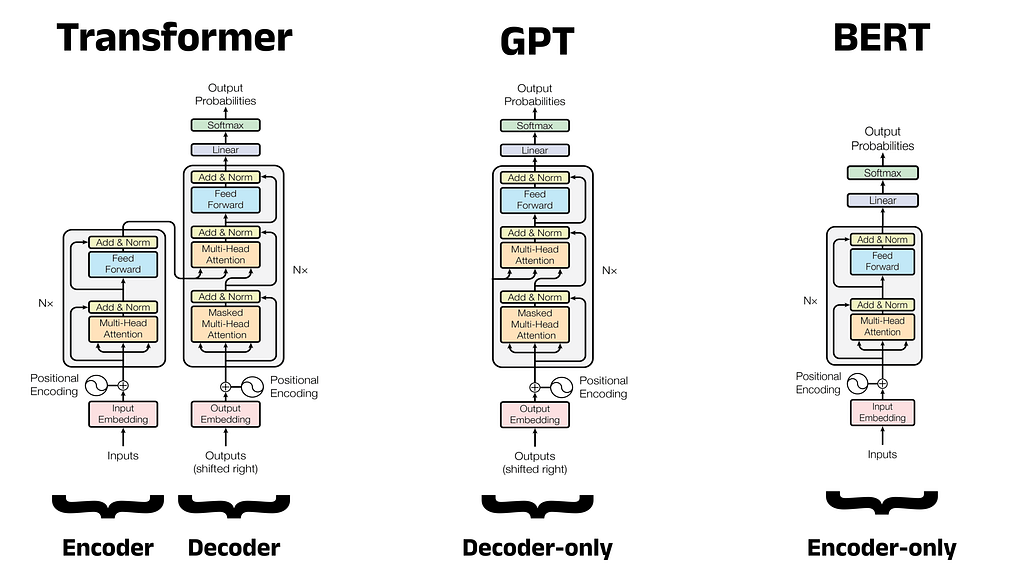

Transformers are revolutionizing NLP with efficient self-attention mechanisms. Integrating transformers in computer vision faces scalability challenges, but promising breakthroughs are on the...
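
The self-attention mechanism these summaries refer to can be sketched as scaled dot-product attention, softmax(QK^T / sqrt(d))V. This is a minimal pure-Python illustration, assuming toy 2-dimensional token vectors and omitting the learned query/key/value projection matrices a real Transformer applies; the function names are illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    q, k and v are lists of row vectors, one row per token.
    """
    d = len(k[0])
    out = []
    for qi in q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        # Output row = attention-weighted sum of the value vectors.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


# Self-attention: queries, keys and values all come from the same toy sequence.
# Real Transformers first apply learned projection matrices; omitted here for brevity.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(x, x, x))
```

Because every output row is a weighted average of value rows, each token's new representation mixes information from the whole sequence in one step. That all-pairs comparison is also why cost grows quadratically with sequence length, which is the scalability issue that arises when images are split into many patches.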

Advances such as the Transformer architecture, ChatGPT, and RAG are reshaping the tech landscape. Understanding how NLP has evolved is key for aspiring data...

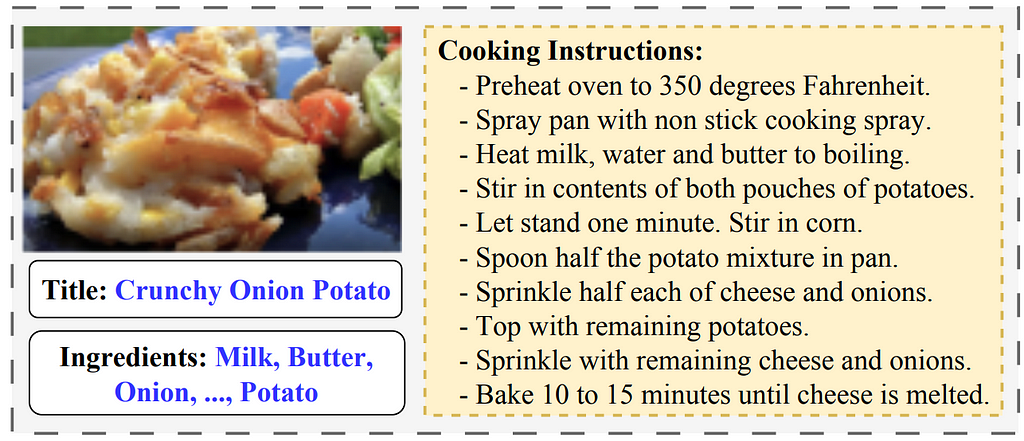

AI advancements have merged NLP and computer vision, leading to image captioning models like the one in "Show and Tell." This model combines a CNN for image processing with an RNN for text generation, using GoogLeNet and...

Language models excel at many tasks but struggle to interpret and create ASCII art. Tokenization prevents LLMs from grasping the big picture, resulting in comical failures like a smiley face mistaken for a mathematical...

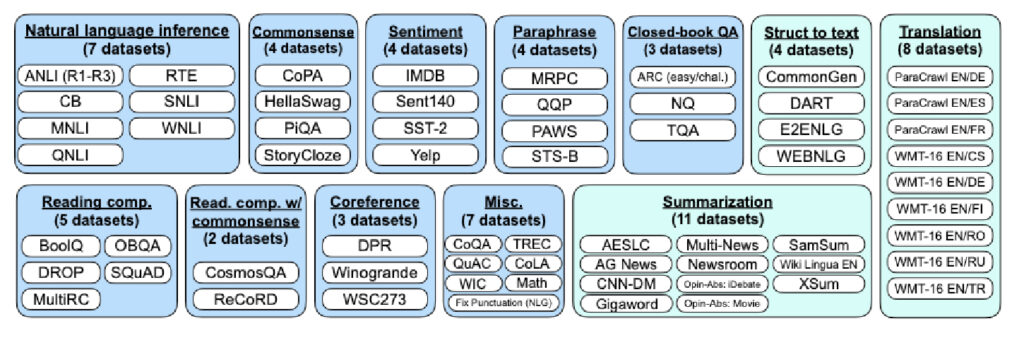

Current best practices for training LLMs include diverse model evaluations on tasks like question answering, translation, and reasoning. Evaluation methods like n-shot learning with prompting are crucial for assessing model performance...
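
n-shot evaluation usually means prepending n worked examples to each test question and scoring the model's answer. Below is a minimal sketch, assuming a simple "Q:/A:" prompt layout and exact-match scoring. Both the layout and the helper names are hypothetical, and the call to an actual model is left out.

```python
def build_n_shot_prompt(examples, query, n):
    """Prepend the first n (question, answer) demonstrations to the query.

    n = 0 yields a zero-shot prompt.
    """
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples[:n]]
    blocks.append(f"Q: {query}\nA:")
    return "\n\n".join(blocks)


def exact_match_accuracy(predictions, references):
    """Fraction of predictions equal to the reference after trimming and lowercasing."""
    hits = sum(p.strip().lower() == r.strip().lower() for p, r in zip(predictions, references))
    return hits / len(references)


demos = [("Translate 'chat' to English.", "cat"), ("Translate 'chien' to English.", "dog")]
print(build_n_shot_prompt(demos, "Translate 'oiseau' to English.", n=2))
```

Varying `n` while holding the test set fixed shows how much a model relies on in-context demonstrations versus what it learned during training.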

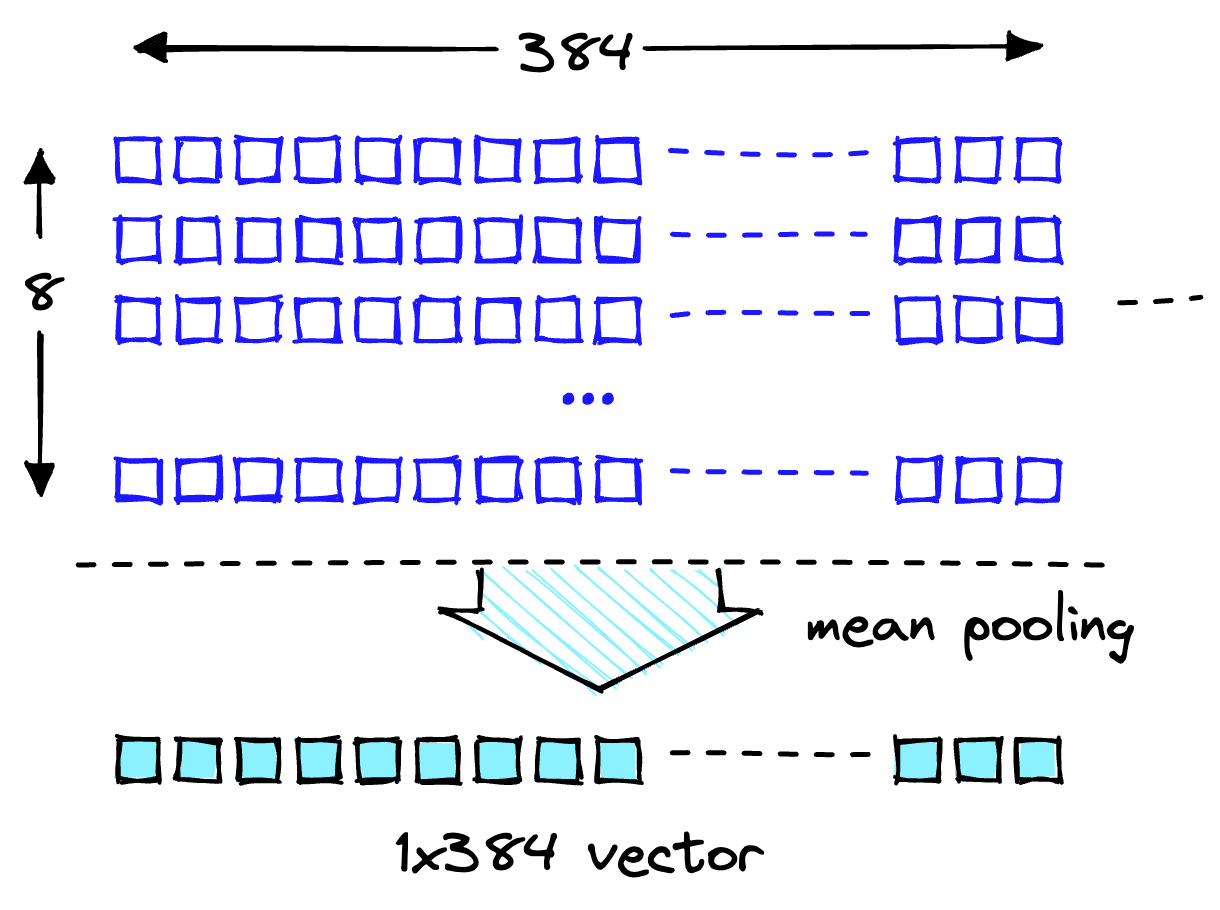

Multimodal embeddings merge text and image data into a single model, enabling cross-modal applications like image captioning and content moderation. CLIP aligns text and image representations for zero-shot image classification, showcasing the power of shared embedding...
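
CLIP-style zero-shot classification comes down to comparing an image embedding with the text embeddings of candidate label prompts and picking the most similar one. This sketch assumes the embeddings have already been produced. The 3-dimensional vectors below are made-up stand-ins for real CLIP encoder outputs, which are much higher-dimensional.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(image_emb, label_embs):
    """Return the label whose text embedding is most similar to the image embedding."""
    return max(label_embs, key=lambda label: cosine(image_emb, label_embs[label]))


# Hypothetical embeddings standing in for text-encoder outputs of label prompts.
label_embs = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_emb = [0.8, 0.2, 0.1]  # hypothetical image-encoder output
print(zero_shot_classify(image_emb, label_embs))  # a photo of a cat
```

No classifier is trained here. New classes are added simply by writing new label prompts, and the shared embedding space makes this possible.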

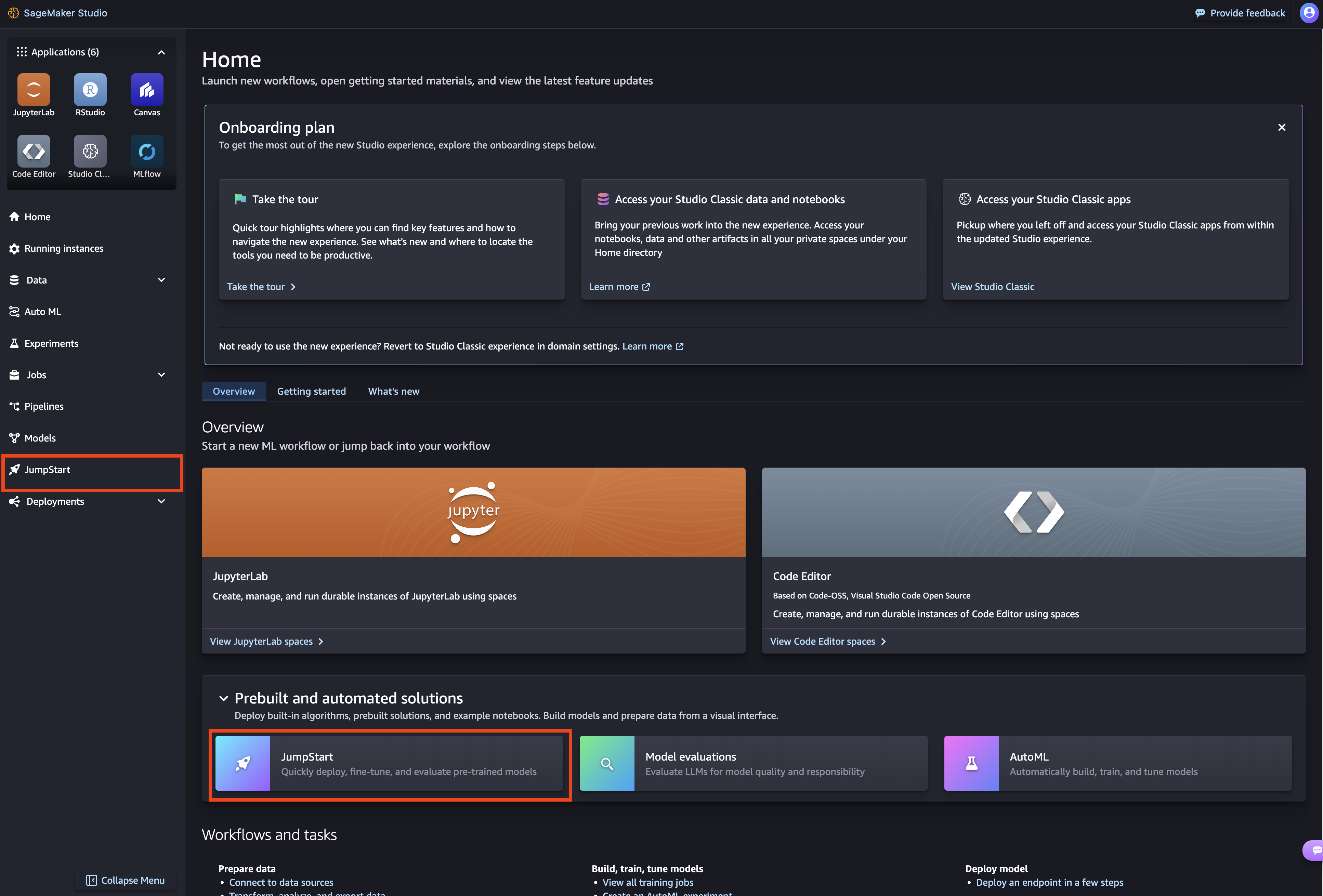

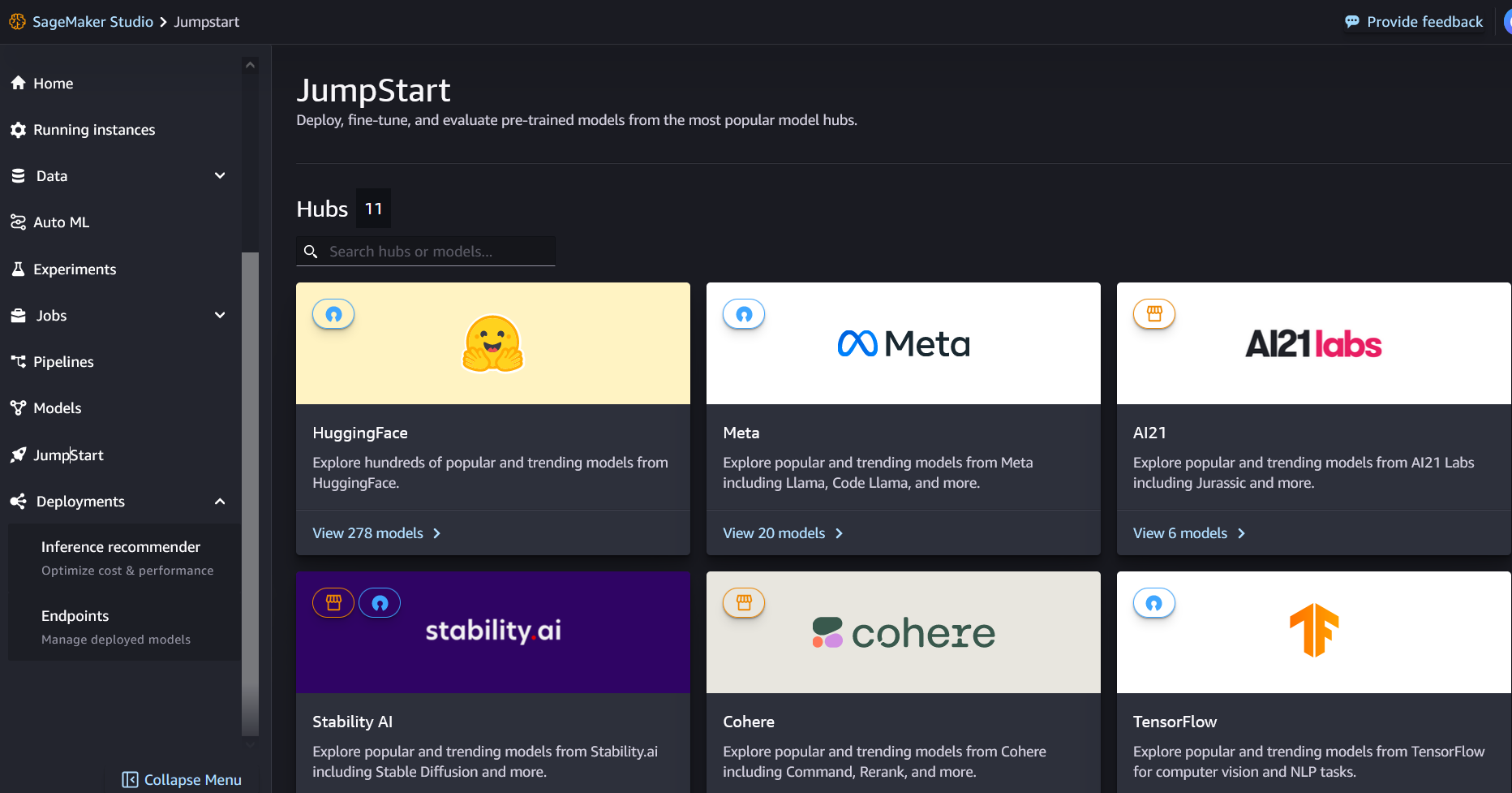

John Snow Labs' Medical LLM models on Amazon SageMaker JumpStart optimize medical language tasks, outperforming GPT-4o in summarization and question answering. These models enhance efficiency and accuracy for medical professionals, supporting optimal patient care and healthcare...

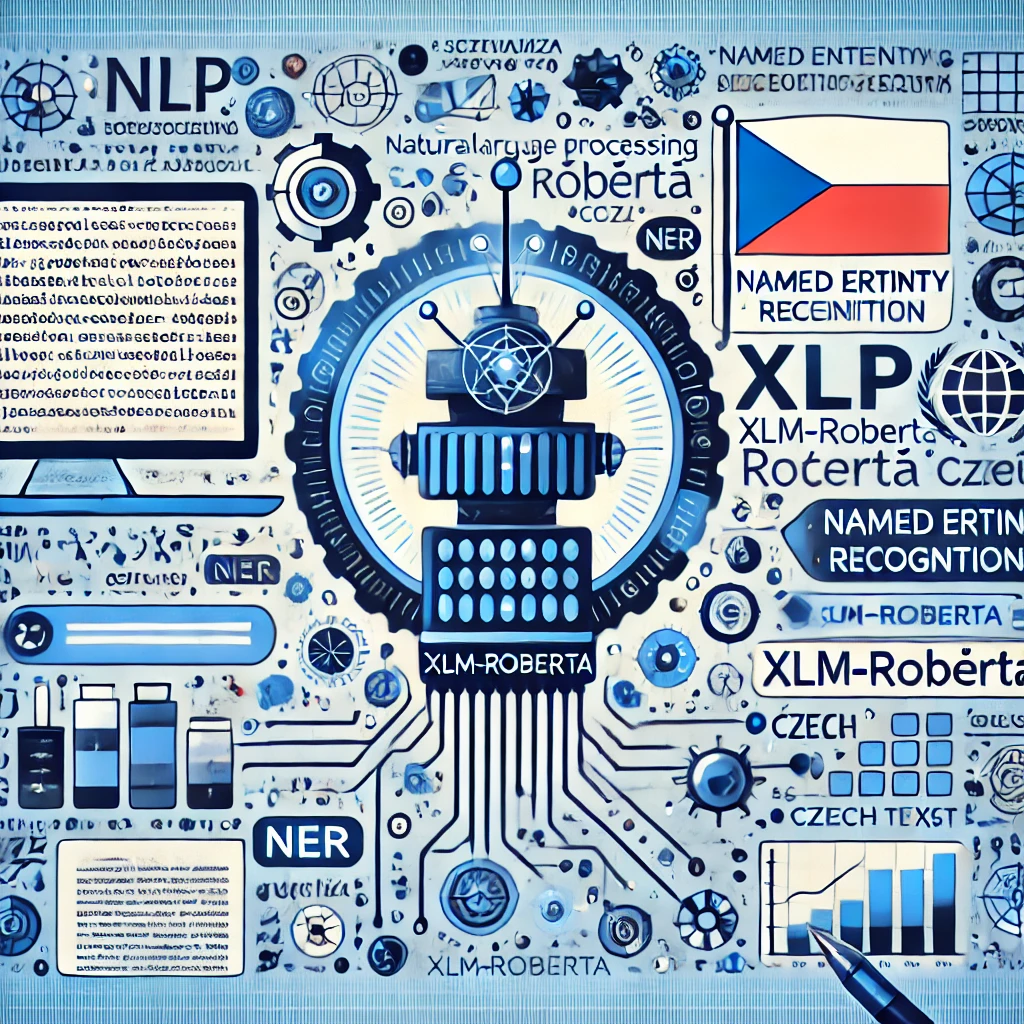

A developer shares insights from deploying an NLP model for document processing in Czech, focusing on entity identification. The model was trained on 710 manually labeled PDF documents and avoided bounding-box-based approaches for...

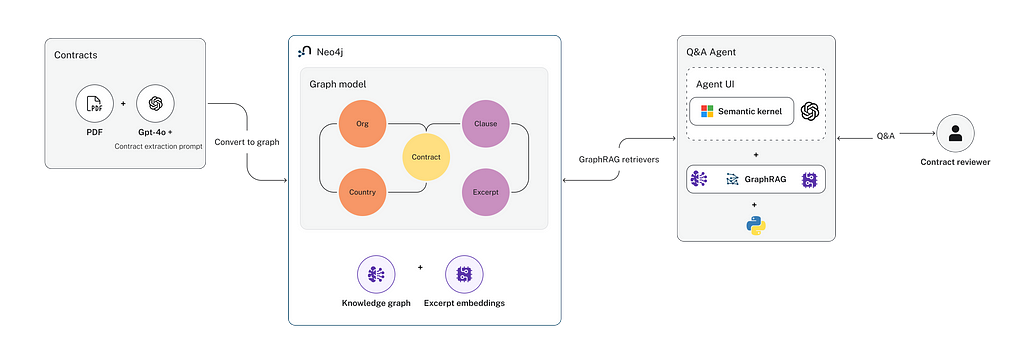

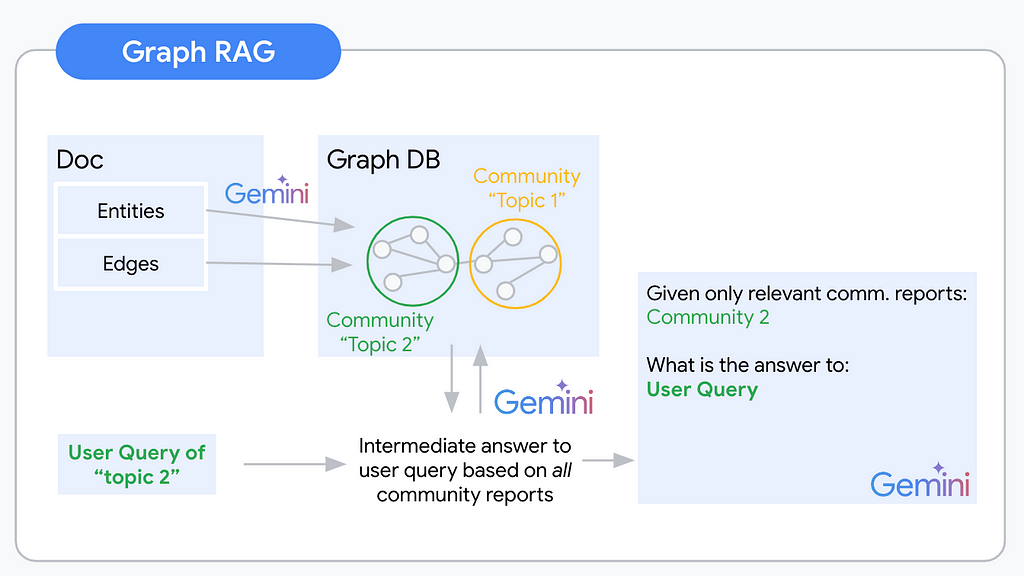

A new GraphRAG approach supports efficient extraction of commercial contract data and the building of Q&A agents. Focusing on targeted information extraction and organizing the results in a knowledge graph improves accuracy and performance, making it suitable for handling complex legal...

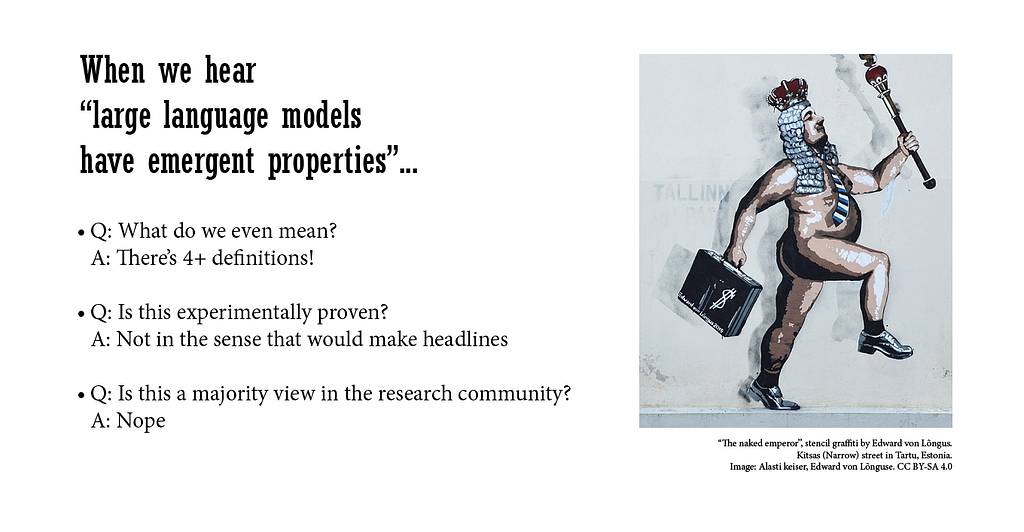

Large Language Models (LLMs) are said to have ‘emergent properties’, but definitions of the term vary. NLP researchers debate whether these properties are learned or inherent, which affects both research and public...

Google Colab, integrated with Generative AI tools, simplifies Python coding. Learn Python easily with no installation needed, thanks to Google Colab's accessible...

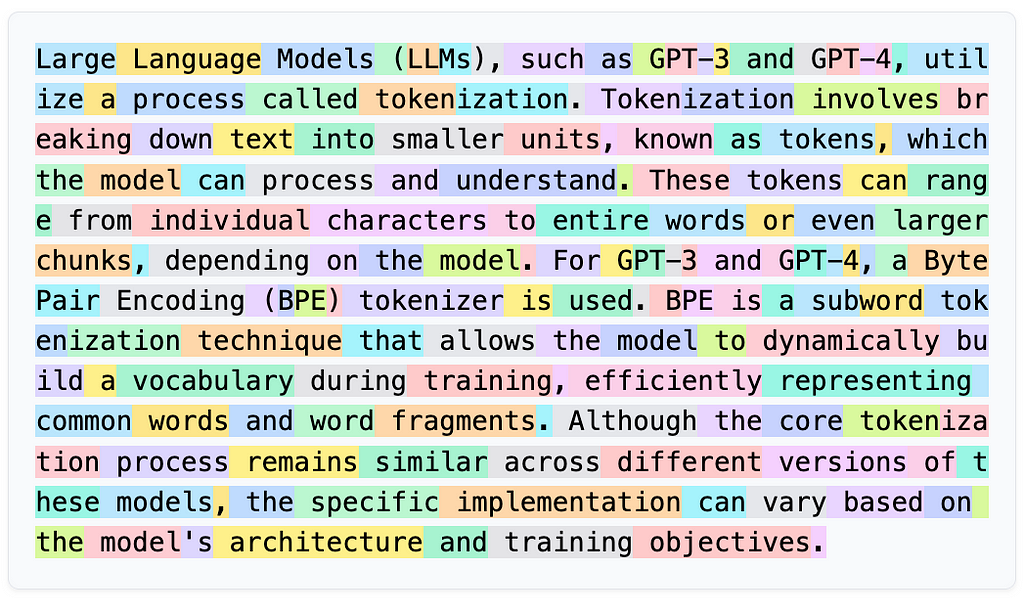

Tokenization is crucial in NLP to bridge human language and machine understanding, enabling computers to process text effectively. Large language models like ChatGPT and Claude use tokenization to convert text into numerical representations for meaningful...
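
To make the text-to-numbers step concrete, here is a simplified subword tokenizer that greedily matches the longest vocabulary entry and emits integer IDs. This is an illustration only, with a hypothetical toy vocabulary. Production LLM tokenizers use vocabularies learned from data, typically with schemes such as byte-pair encoding, and their exact rules differ from this sketch.

```python
def tokenize(text, vocab):
    """Greedy longest-match subword tokenization of whitespace-split words.

    Returns integer token IDs; characters with no matching piece map to vocab["[UNK]"].
    """
    ids = []
    for word in text.lower().split():
        start = 0
        while start < len(word):
            end = len(word)
            # Shrink the candidate piece until it is found in the vocabulary.
            while end > start and word[start:end] not in vocab:
                end -= 1
            if end == start:  # nothing matched: emit UNK for one character
                ids.append(vocab["[UNK]"])
                start += 1
            else:
                ids.append(vocab[word[start:end]])
                start = end
    return ids


# Hypothetical toy vocabulary mapping subword pieces to IDs.
vocab = {"[UNK]": 0, "token": 1, "ization": 2, "is": 3, "fun": 4}
print(tokenize("Tokenization is fun", vocab))  # [1, 2, 3, 4]
```

Splitting rare words into known pieces keeps the vocabulary small while still covering unseen words. It also explains failures like the ASCII-art example above: the model sees token IDs, not the 2-D arrangement of characters.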

Thomson Reuters Labs developed an efficient MLOps process using Amazon SageMaker, accelerating AI innovation. TR Labs aims to standardize MLOps for smarter, cost-efficient machine learning...

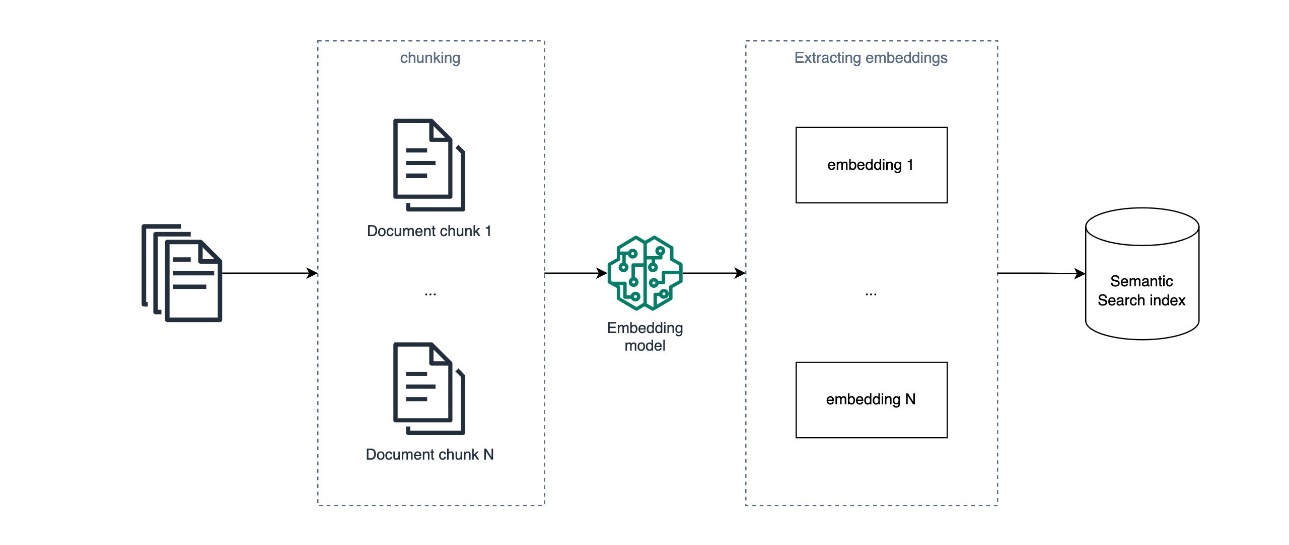

RAG enhances AI applications by combining LLMs with domain-specific data. Text embeddings have limitations in answering complex, abstract questions across...
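
The core RAG loop retrieves the documents most similar to the question and places them in the prompt sent to the LLM. This is a minimal sketch under a loudly stated simplification: bag-of-words term counts stand in for a learned text-embedding model, and a Python list stands in for a vector database. The function names and prompt wording are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Stand-in 'embedding': a bag-of-words term-count vector (not a learned model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query, docs, k=1):
    """Augment the user question with retrieved context before calling an LLM."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "the refund window is 30 days",
    "our office is in tokyo",
    "shipping takes 5 business days",
]
print(build_prompt("how many days is the refund window", docs))
```

Similarity search works well for questions answered by a single passage. Abstract questions that require aggregating across many documents often have no single close match, which is the limitation the summary points to.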

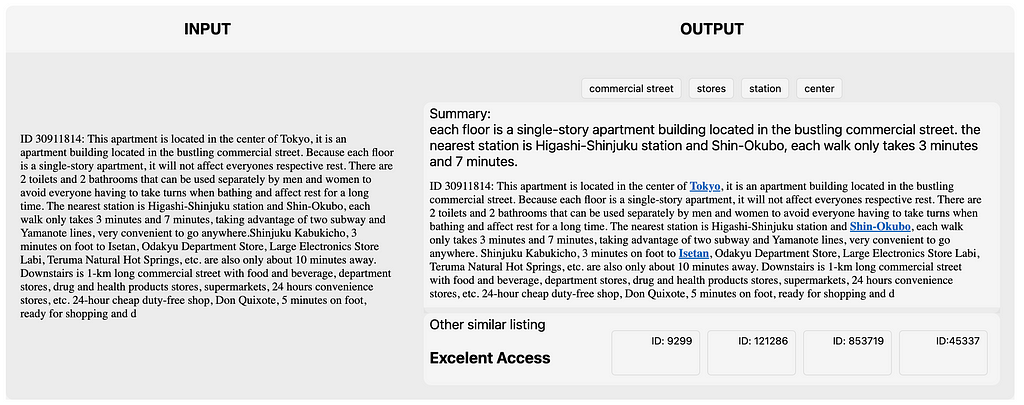

NLP techniques enhance rental listings on Airbnb in Tokyo, extracting keywords and improving user experience. Part 2 will cover topic modeling and text prediction for property...

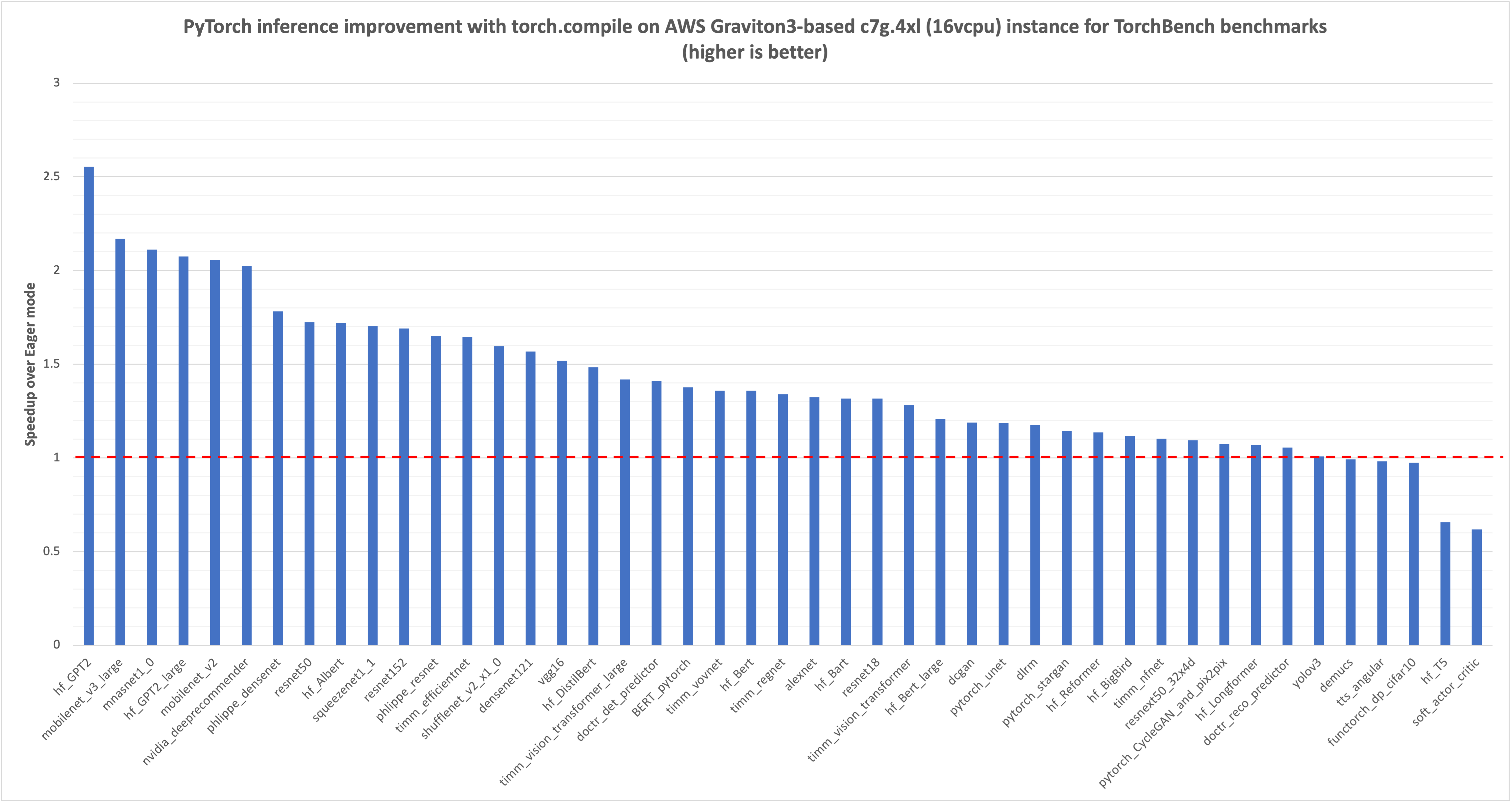

PyTorch 2.0 introduced torch.compile for faster code execution. AWS optimized torch.compile for Graviton3 processors, resulting in significant performance improvements for NLP, CV, and recommendation...

Using LLMs and GenAI can enhance de-duplication processes, increasing accuracy from 30% to almost 60%. This method is beneficial not only for customer data but also for identifying duplicate records in other...

Transformers, known for revolutionizing NLP, now excel in computer vision tasks. Explore the Vision Transformer and Masked Autoencoder Vision Transformer architectures enabling this...

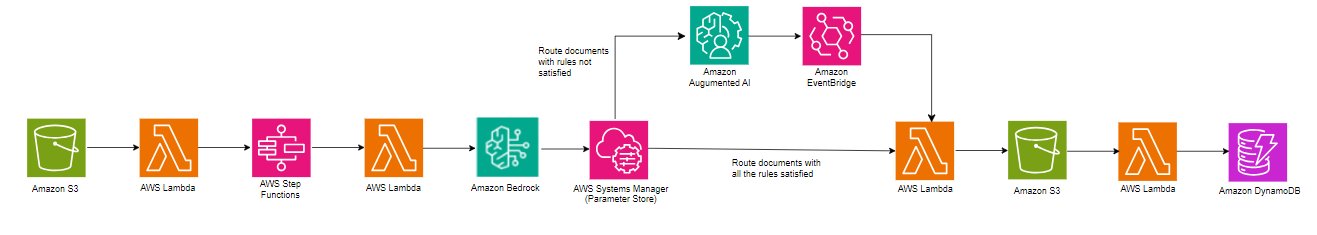

Amazon Bedrock uses Anthropic's Claude 3 Haiku model for advanced document processing, offering scalable data extraction with state-of-the-art NLP capabilities. The solution streamlines workflows by handling larger files and multipage documents, ensuring high-quality results through customizable rules and human...

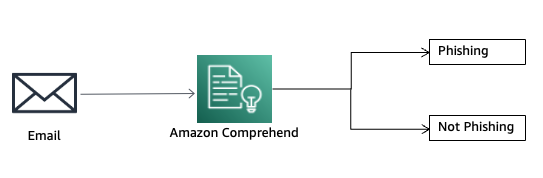

Phishing is the practice of obtaining sensitive information through deceptive emails. Amazon Comprehend Custom helps detect phishing attempts using ML...

Explore domain adaptation for LLMs in this blog series. Learn about fine-tuning to expand models' capabilities and improve...

BERT, developed by Google AI Language, is a groundbreaking Large Language Model for Natural Language Processing. Its architecture and focus on Natural Language Understanding have reshaped the NLP landscape, inspiring models like RoBERTa and...

ONNX Runtime on AWS Graviton3 boosts ML inference by up to 65% with optimized GEMM kernels. MLAS backend enhances deep learning operator acceleration for improved...

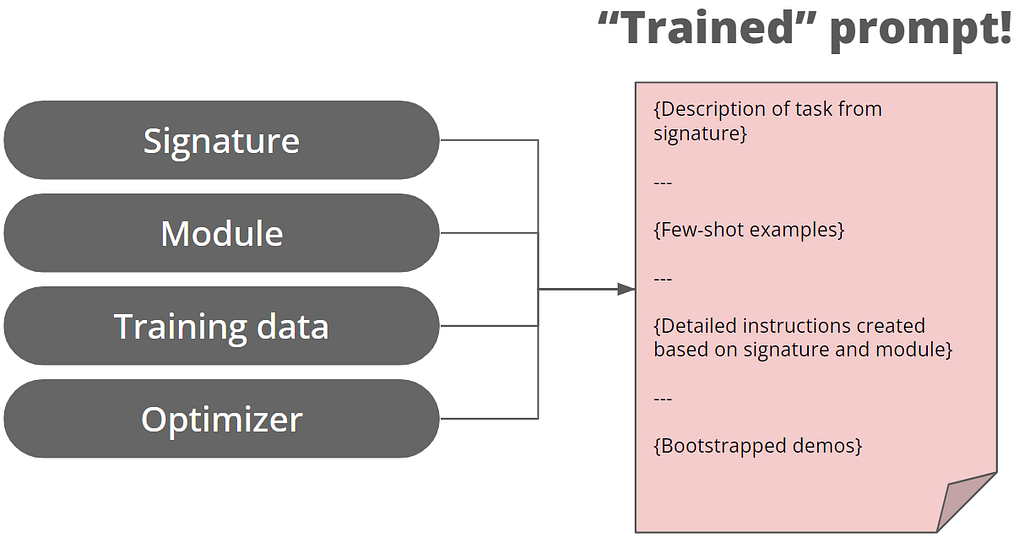

Stanford NLP introduces DSPy for prompt engineering, moving away from manual prompt writing to modularized programming. The new approach aims to optimize prompts for LLMs, enhancing reliability and...

This article highlights the rise of vector databases in AI applications, focusing on Retrieval Augmented Generation (RAG) systems. Companies store text embeddings in vector databases for efficient search, raising privacy concerns about potential data breaches and unauthorized...

Alida leveraged Anthropic's Claude Instant model on Amazon Bedrock to improve topic assertion by 4-6 times in survey responses, overcoming limitations of traditional NLP. Amazon Bedrock enabled Alida to quickly build a scalable service for market researchers, capturing nuanced qualitative data points beyond multiple-choice...

The article discusses the evolution of GPT models, specifically focusing on GPT-2's improvements over GPT-1, including its larger size and multitask learning capabilities. Understanding the concepts behind GPT-1 is crucial for recognizing the working principles of more advanced models like ChatGPT or...

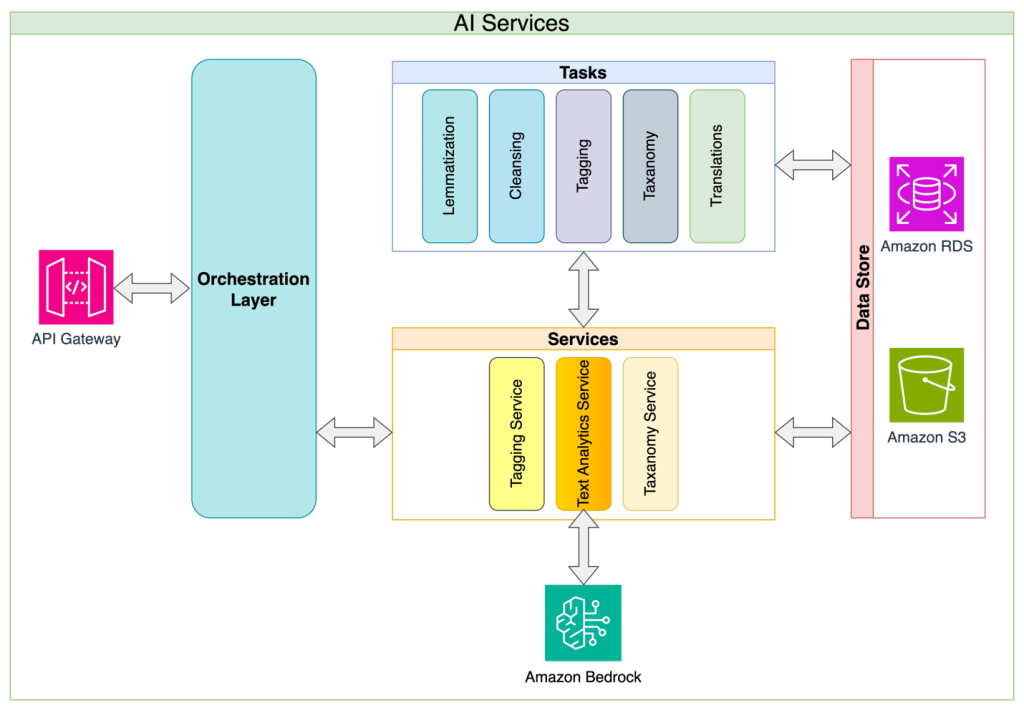

Recommender systems generate significant revenue, with Amazon and Netflix relying heavily on product recommendations. This article explores using controlled vocabularies and LLMs to improve similarity models in recommender systems. It finds that a controlled vocabulary improves outcomes, and that building a genre list with an LLM is easy but creating a detailed taxonomy is...

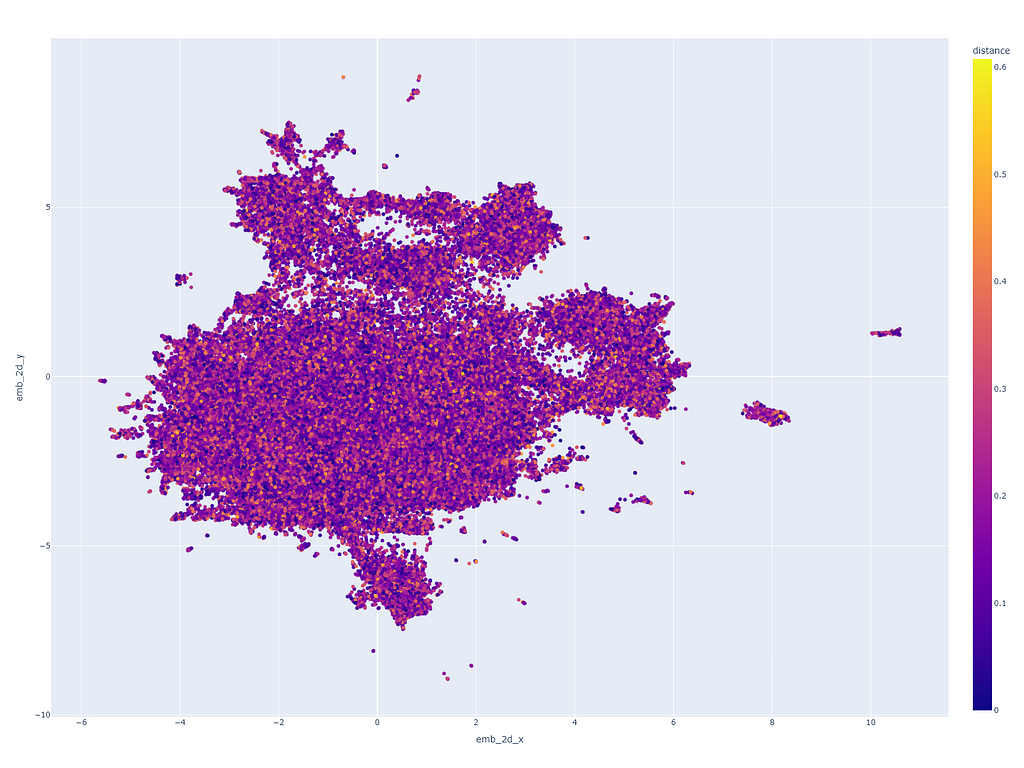

The article discusses the Retrieval Augmented Generation (RAG) pattern for generative AI workloads, focusing on the analysis and detection of embedding drift. It explores how embedding vectors are used to retrieve knowledge from external sources and augment instruction prompts, and explains the process of performing drift analysis on these vectors using Principal Component Analysis...
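
One way to do PCA-based drift analysis is to fit the principal components on a baseline set of embeddings and then check how far newer embeddings shift along them. The sketch below is a pure-Python version of that idea. It finds the first principal component by power iteration on the covariance matrix and uses a hypothetical drift metric: the absolute mean projection of the current embeddings onto that component. The metric and function names are assumptions, not the article's exact procedure. Real pipelines would use real embeddings and a numerical library.

```python
import math


def mean_vec(rows):
    """Column-wise mean of a list of equal-length vectors."""
    n = len(rows)
    return [sum(r[j] for r in rows) / n for j in range(len(rows[0]))]


def first_principal_component(rows, iters=200):
    """Return (mean, unit vector of the top principal component) via power iteration."""
    mu = mean_vec(rows)
    centered = [[x - m for x, m in zip(r, mu)] for r in rows]
    d = len(mu)
    # Sample covariance matrix (d x d).
    cov = [[sum(r[i] * r[j] for r in centered) / (len(rows) - 1) for j in range(d)]
           for i in range(d)]
    # Asymmetric start vector to reduce the chance of starting orthogonal to the top component.
    v = [1.0 / (j + 1) for j in range(d)]
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:  # all baseline vectors identical: no variance to follow
            break
        v = [x / norm for x in w]
    return mu, v


def drift_score(baseline, current):
    """Hypothetical metric: absolute mean projection of `current` onto the baseline's
    first principal component, measured from the baseline mean (which projects to 0)."""
    mu, pc = first_principal_component(baseline)
    projections = [sum((x - m) * p for x, m, p in zip(r, mu, pc)) for r in current]
    return abs(sum(projections) / len(projections))


baseline = [[0.0, 0.0], [1.0, 0.1], [2.0, -0.1], [3.0, 0.0], [4.0, 0.05]]
shifted = [[x + 5.0, y] for x, y in baseline]
print(drift_score(baseline, baseline), drift_score(baseline, shifted))
```

A score near zero means new embeddings sit where the baseline did along its main axis of variation. A growing score suggests the source documents or the embedding model have changed enough to merit re-evaluating retrieval quality.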

AI technology has the ability to transform food images into recipes, allowing for personalized food recommendations, cultural customization, and automated cooking execution. This innovative method combines computer vision and natural language processing to generate comprehensive recipes from food images, bridging the gap between visual depictions of dishes and symbolic...

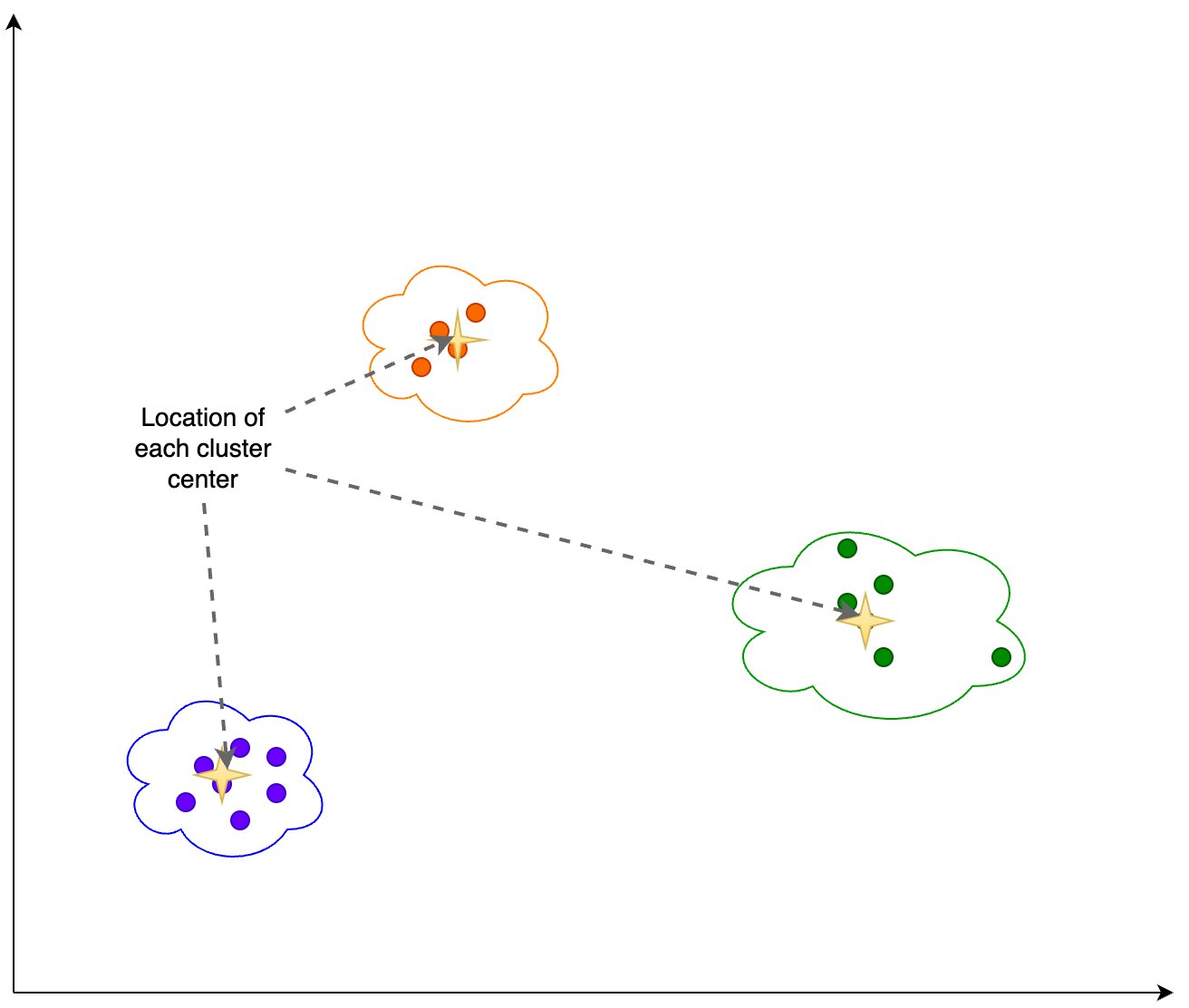

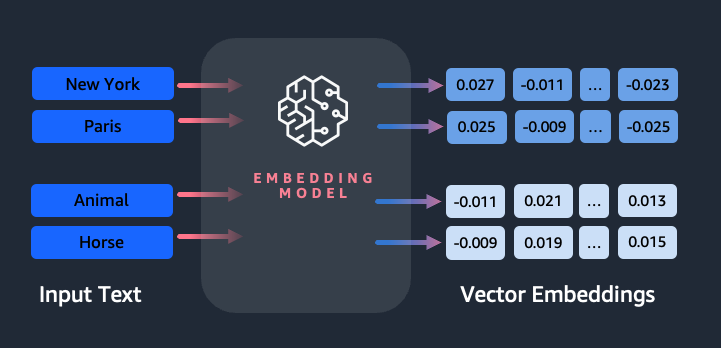

Amazon Titan Text Embeddings is a text embeddings model that converts natural language text into numerical representations for search, personalization, and clustering. It uses word embedding algorithms and large language models to capture semantic relationships and improve downstream NLP...

A data science associate used NLP techniques to analyze Reddit discussions on depression, exploring gender-related taboos around mental health. They found that zero-shot classification can easily produce similar results to traditional sentiment analysis, simplifying the process and eliminating the need for a training...

Google Brain introduced the Transformer in 2017, a flexible architecture that outperformed existing deep learning approaches and is now used in models like BERT and GPT. GPT, a decoder model, uses a language modeling task to generate new sequences and follows a two-stage framework of pre-training and...
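
The language modeling task GPT is trained on is next-token prediction. At generation time, the model repeatedly predicts a token and appends it to its input. To show just that autoregressive loop, the sketch below swaps the Transformer decoder for a much simpler bigram count model, which is not how GPT works internally. Decoding is greedy, and the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count next-token frequencies for each token (a toy next-token predictor)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, max_len=10):
    """Greedy autoregressive decoding: repeatedly append the most frequent next token."""
    token, out = "<s>", []
    for _ in range(max_len):
        if token not in counts:
            break
        token = counts[token].most_common(1)[0][0]
        if token == "</s>":
            break
        out.append(token)
    return " ".join(out)


corpus = [
    "language models predict the next token",
    "large language models predict the next token",
    "language models generate text",
]
print(generate(train_bigram(corpus)))  # language models predict the next token
```

A GPT model conditions each prediction on the entire preceding context through self-attention rather than on only the previous token. The generate-one-token-then-feed-it-back loop is the same.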

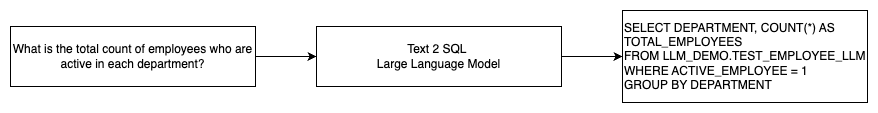

Generative AI has unlocked new capabilities such as text generation and code generation. One evolving area is using NLP to generate SQL queries, making data analysis more accessible to non-technical...

2024 could be the tipping point for Music AI, with breakthroughs in text-to-music generation, music search, and chatbots. However, the field still lags behind Speech AI, and advancements in flexible and natural source separation are needed to revolutionize music interaction through...

Mistral AI's Mixtral-8x7B large language model is now available on Amazon SageMaker JumpStart for easy deployment. With its multilingual support and superior performance, Mixtral-8x7B is an appealing choice for NLP applications, offering faster inference speeds and lower computational...

Conversational AI has evolved with generative AI and large language models, but lacks specialized knowledge for accurate answers. Retrieval Augmented Generation (RAG) connects generic models to internal knowledge bases, enabling domain-specific AI assistants. Amazon Kendra and OpenSearch Service offer mature vector search solutions for implementing RAG, but analytical reasoning questions...

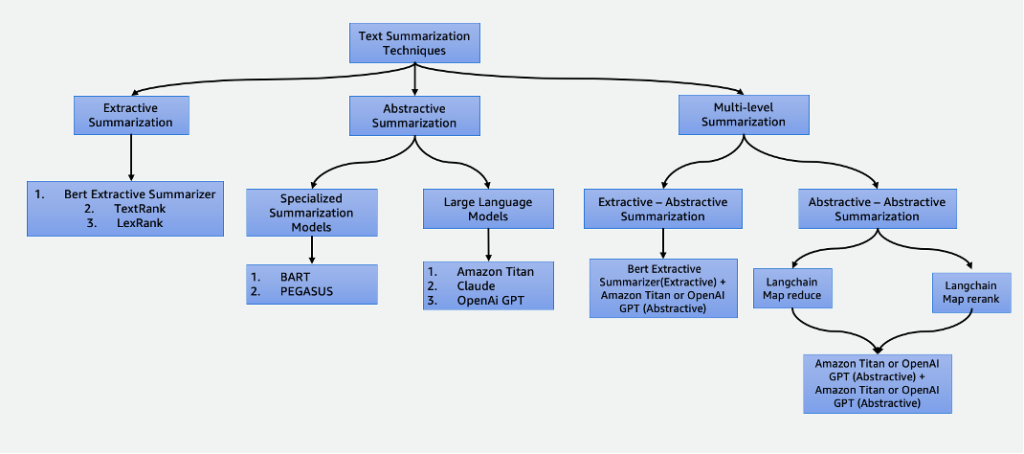

Summarization is essential in our data-driven world, saving time and improving decision-making. It has various applications, including news aggregation, legal document summarization, and financial analysis. With advancements in NLP and AI, techniques like extractive and abstractive summarization are becoming more accessible and...
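
Extractive summarization selects existing sentences instead of writing new ones. This sketch uses one classic heuristic: score each sentence by the average corpus frequency of its non-stopword words and keep the top-scoring sentences in their original order. The small stopword list and the scoring rule are assumptions chosen for illustration. Abstractive summarization, by contrast, needs a generative model and is not shown.

```python
import re
from collections import Counter

# Hypothetical minimal stopword list; real systems use larger, language-specific lists.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it", "for", "on"}


def extractive_summary(text, n_sentences=1):
    """Pick the n sentences with the highest average content-word frequency."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]
    freq = Counter(words)

    def score(sentence):
        tokens = [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:n_sentences]
    # Re-sort the chosen indices so the summary keeps the original sentence order.
    return " ".join(sentences[i] for i in sorted(top))


text = ("Summarization saves time. Extractive summarization selects sentences "
        "from the source. The weather was nice.")
print(extractive_summary(text, 1))  # Summarization saves time.
```

Averaging rather than summing the word frequencies keeps long sentences from winning purely by length.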

LLMs like Llama 2, Flan-T5, and BLOOM are essential for conversational AI use cases, but updating their knowledge requires retraining, which is time-consuming and expensive. However, with Retrieval Augmented Generation (RAG) using Amazon SageMaker JumpStart and the Pinecone vector database, LLMs can be deployed and kept up to date with relevant information to prevent AI...