MIT researchers discovered a position bias in large language models that affects information retrieval. Their framework could lead to more reliable AI systems, like chatbots and medical...

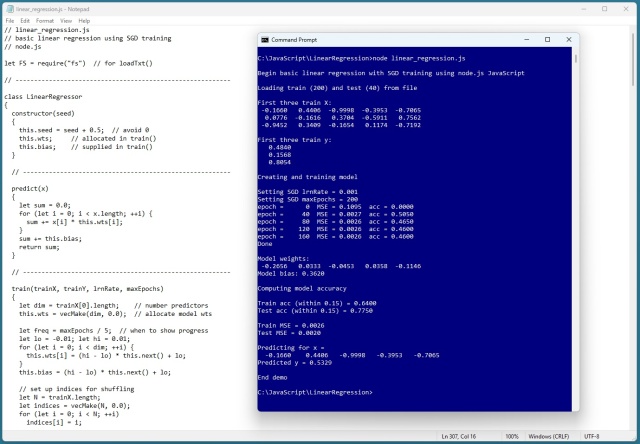

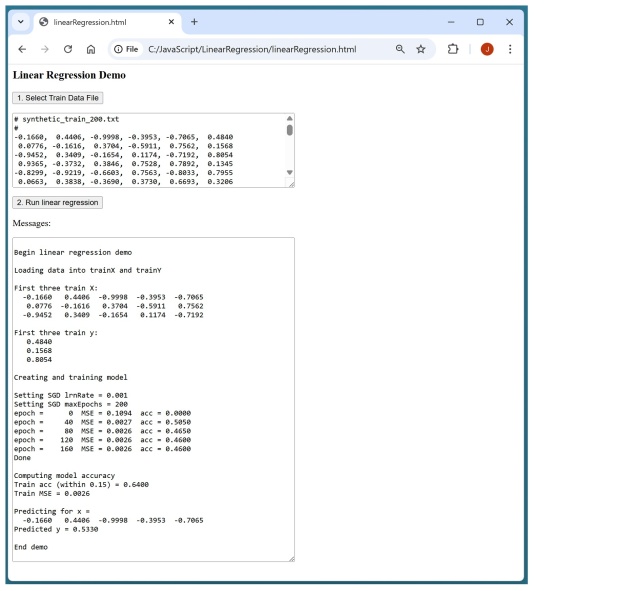

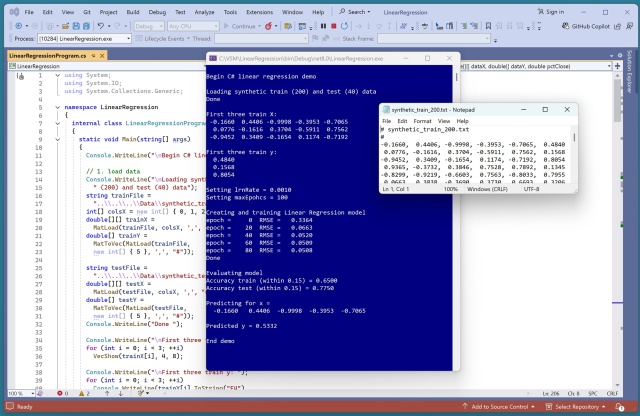

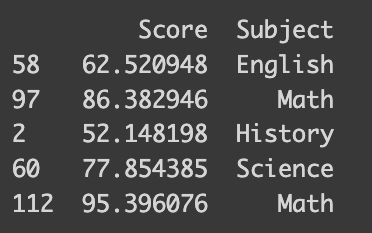

A linear regression demo in JavaScript uses SGD for training. It predicts income from age, height, and education with 64...
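The SGD training loop behind a demo like this can be sketched in Python (not the demo's JavaScript). This is a minimal sketch assuming squared-error loss, a fixed learning rate, and per-item updates in shuffled order; the function names and hyperparameters are illustrative, not taken from the demo.

```python
import random


def train_sgd(X, y, lr=0.01, epochs=1000, seed=0):
    """Fit y ~ w.x + b with per-item SGD on squared error; returns (w, b)."""
    rng = random.Random(seed)
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit the training items in a new random order each epoch
        for i in order:
            pred = sum(wj * xj for wj, xj in zip(w, X[i])) + b
            err = pred - y[i]  # derivative of 0.5 * err**2 with respect to pred
            for j in range(n_features):
                w[j] -= lr * err * X[i][j]
            b -= lr * err
    return w, b


def predict(w, b, x):
    return sum(wj * xj for wj, xj in zip(w, x)) + b
```

Predictors are assumed to be normalized to a small range such as [0, 1]; with raw values like income or height, a fixed learning rate this size can make the updates diverge.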

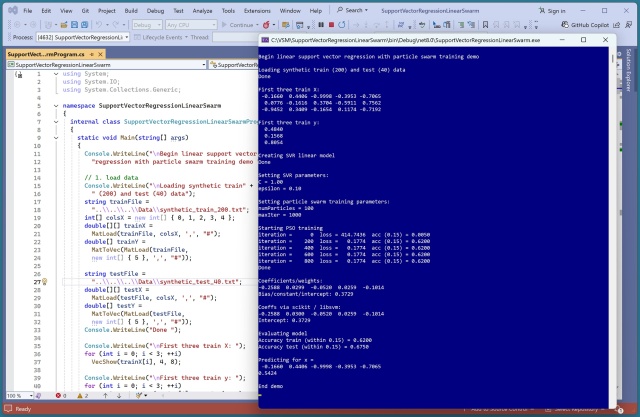

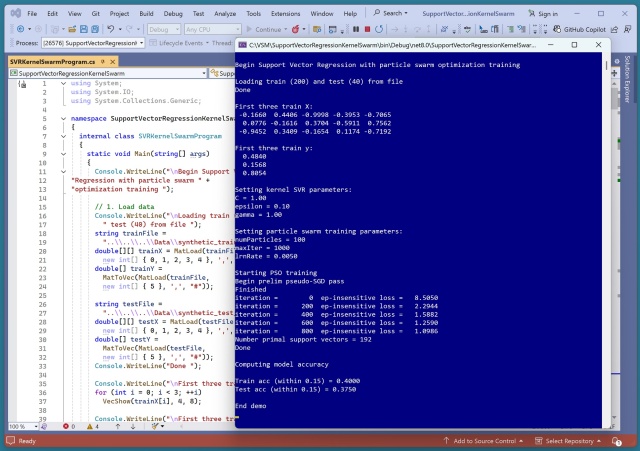

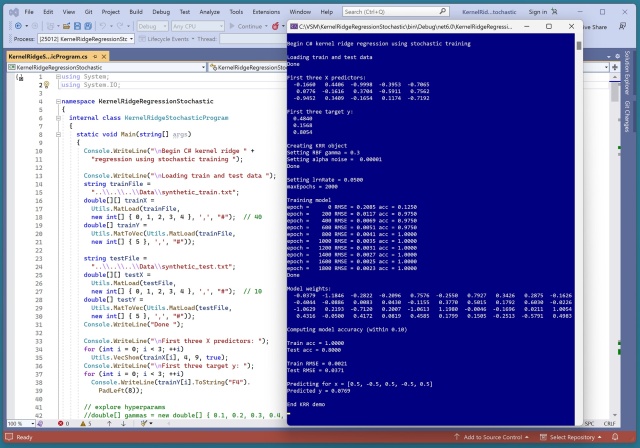

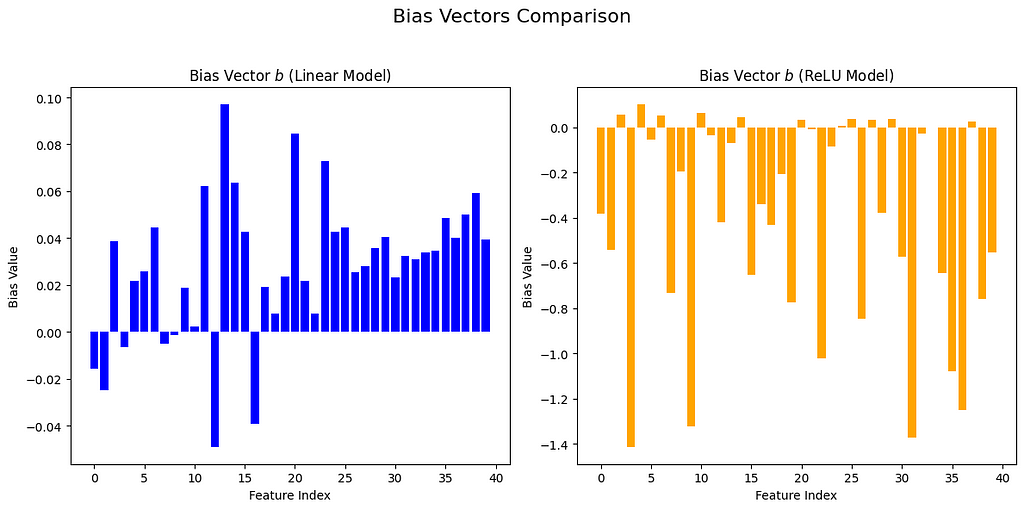

The article showcases linear support vector regression in C#, trained with particle swarm optimization, and assesses the model's prediction accuracy. The demo reveals the challenges of predicting non-linear data, highlighting the importance of specialized optimization algorithms like particle...

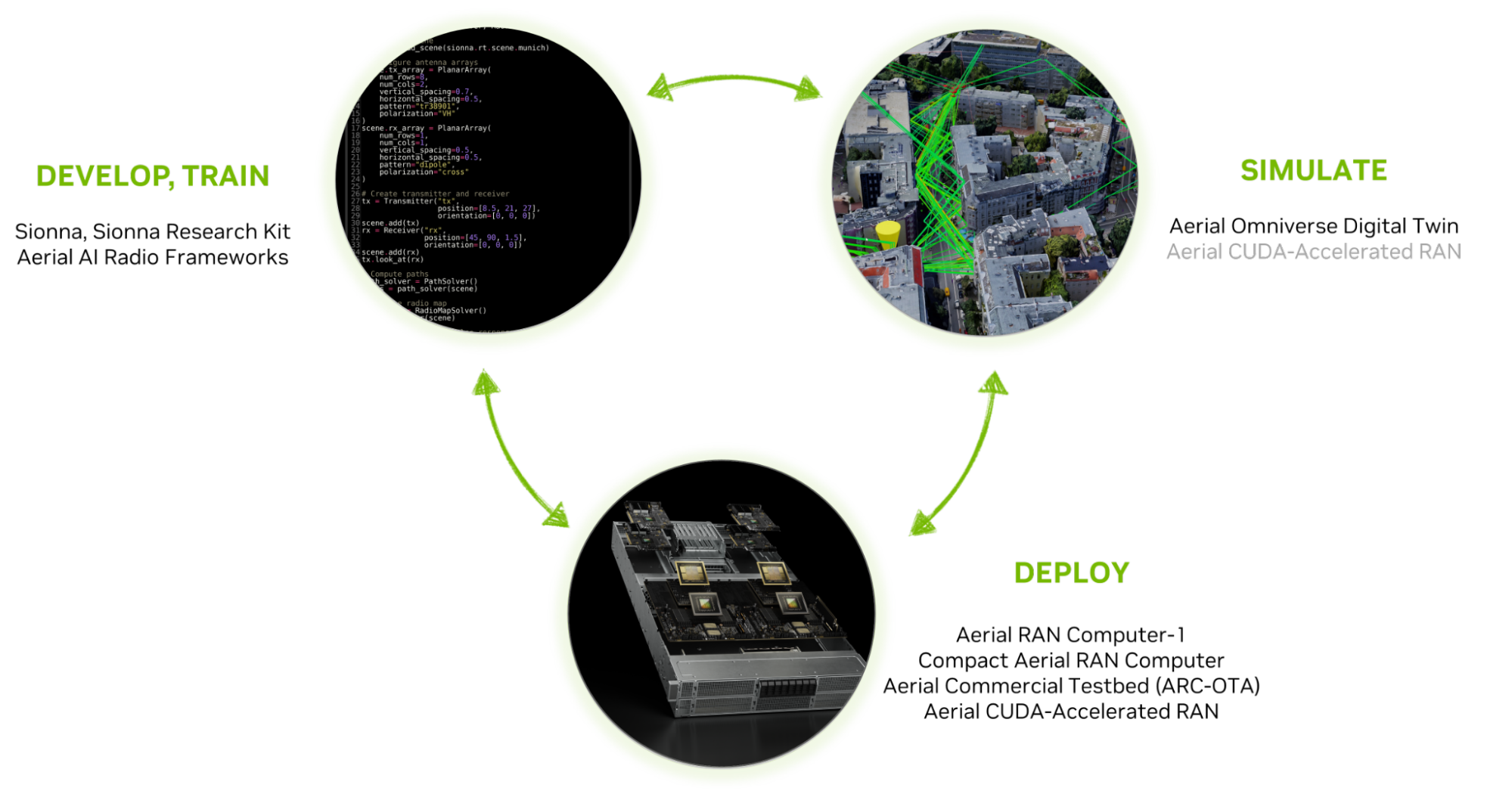

European telecoms are leveraging NVIDIA for 6G development, integrating AI for innovation and sustainability. Collaborations with the U.K. government and leading universities, Finland's real-time network digital twin, and France's OAI partnership highlight cutting-edge advancements in AI-native wireless...

MIT researchers have developed a groundbreaking AI hardware accelerator for wireless signal processing that operates at the speed of light, offering a 100x faster and more energy-efficient alternative to digital AI accelerators. This technology could revolutionize future 6G wireless applications and enable real-time AI inference for various high-performance computing tasks, from autonomous...

A linear regression prediction system was demoed client-side in JavaScript for simplicity. The trained model achieved 64.00% accuracy because the data has a non-linear structure. Renowned artist Robert McGinnis, known for iconic book covers and movie posters, recently passed...

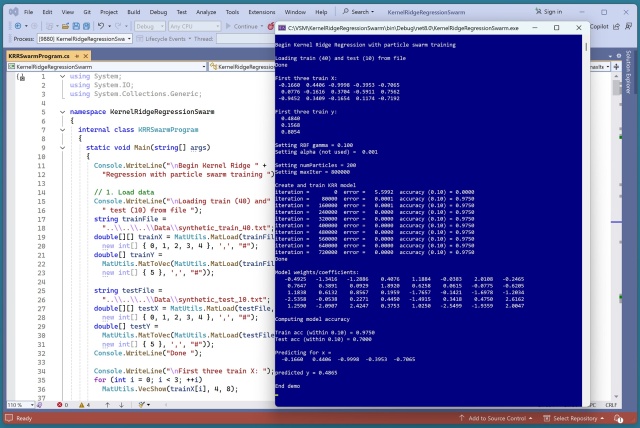

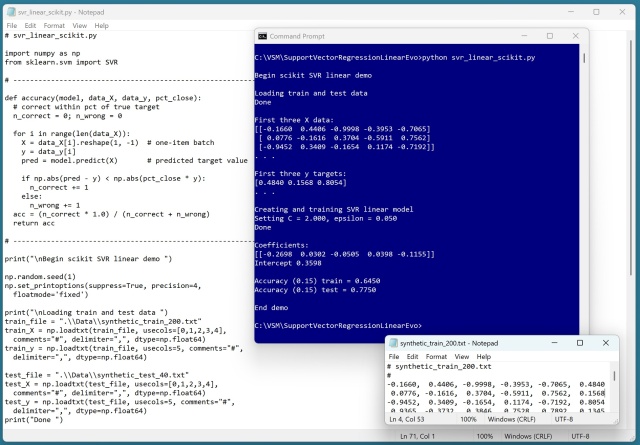

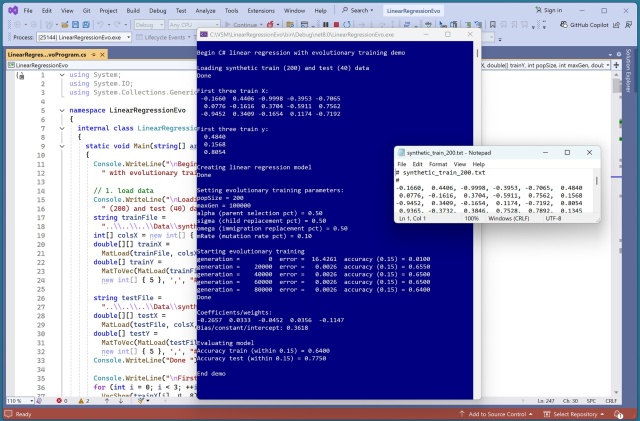

Training linear support vector regression (SVR) is challenging because its loss function is not differentiable in the calculus sense. Particle swarm optimization (PSO) proved more effective than evolutionary algorithms for training linear SVR...

An article on Pure AI simplifies AI Large Language Model Transformers using a factory analogy, making it accessible for non-engineers and business professionals. The analogy breaks down the process into steps like Loading Dock Input, Material Sorters, and Final Assemblers, offering a clear understanding of how Transformers...

Training linear SVR is challenging because its loss function is not differentiable in the calculus sense, which led to exploring PSO instead of evolutionary algorithms. Using PSO for linear SVR training yielded superior results, showcasing the importance of parameter tuning for optimizing predictive...
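A minimal Python sketch of PSO applied to linear SVR, assuming the standard primal objective 0.5 * ||w||^2 + C * sum of max(0, |pred - y| - eps) and commonly used PSO constants (inertia 0.729, cognitive and social weights 1.49445). Because PSO needs only objective values, not gradients, the non-differentiable epsilon-insensitive loss is not an obstacle. All names and settings here are hypothetical and are not the article's C# code.

```python
import random


def svr_objective(params, X, y, C=1.0, eps=0.1):
    """Linear SVR primal objective: 0.5 * ||w||^2 + C * sum of eps-insensitive errors.

    params holds the weights followed by the bias as the last entry.
    """
    *w, b = params
    reg = 0.5 * sum(wj * wj for wj in w)
    loss = 0.0
    for xi, yi in zip(X, y):
        pred = sum(wj * xj for wj, xj in zip(w, xi)) + b
        loss += max(0.0, abs(pred - yi) - eps)  # errors inside the eps tube cost nothing
    return reg + C * loss


def pso_minimize(f, dim, n_particles=20, iters=300, seed=0,
                 inertia=0.729, c1=1.49445, c2=1.49445, lo=-5.0, hi=5.0):
    """Basic global-best PSO; uses only values of f, never its gradient."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]  # each particle's best position so far
    best_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]  # swarm-wide best
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val
```

For example, `pso_minimize(lambda p: svr_objective(p, X, y), dim=len(X[0]) + 1)` would return a parameter vector whose last entry is the bias. C, eps, and the PSO constants are exactly the kind of parameters whose tuning the summary says matters.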

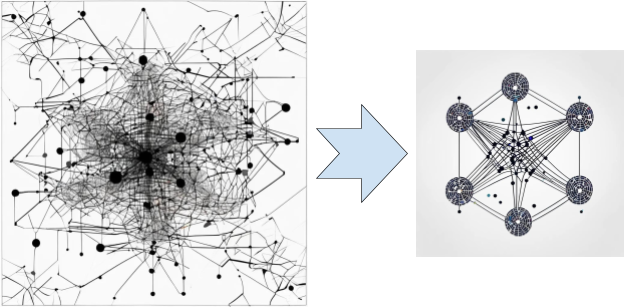

Model compression is essential in the age of large language models. Learn about pruning, quantization, low-rank factorization, and knowledge distillation techniques in machine...

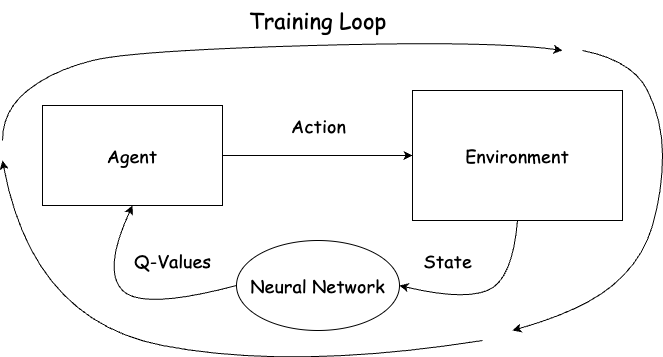

Summary: Part I of Sutton and Barto's book covers fundamental Reinforcement Learning techniques, while Part II focuses on using deep neural networks for approximate solutions. The upcoming series will benchmark algorithms in Gridworld environments to identify the most effective...

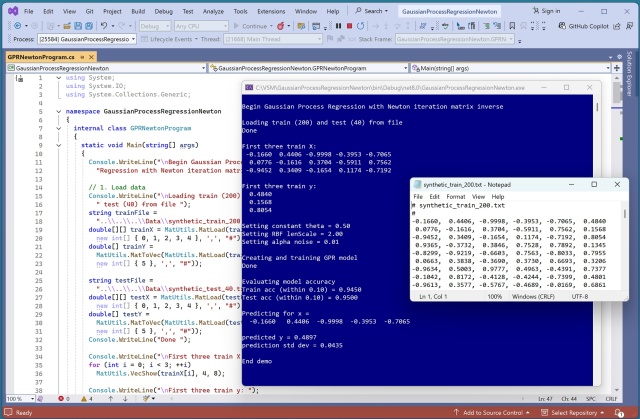

Summary: This article clarifies the misconceptions around backpropagation by explaining the total-derivative and introducing the vector chain rule to simplify complex calculations in neural networks. The implementation of vector calculus in backprop equations optimizes the computation of gradients for all weights in a layer simultaneously, enhancing efficiency in training...

MIT researchers developed LinOSS, a stable AI model inspired by neural oscillations, outperforming existing models in long sequence analysis. LinOSS offers efficient predictions for various fields, from health-care analytics to financial forecasting, bridging biological inspiration with computational...

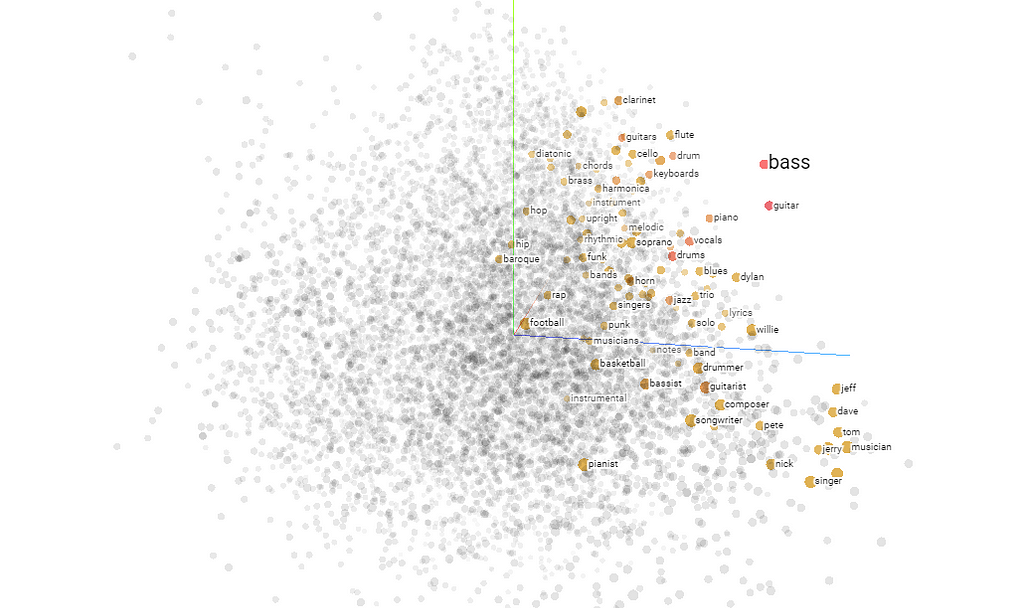

DeepType utilizes neural networks for clustering, extracting meaningful structure from data for more insightful analysis and predictions. By training on task-relevant representations, DeepType enhances clustering accuracy and reveals valuable insights, as seen in patient groupings based on genetic data for improved survival rate...

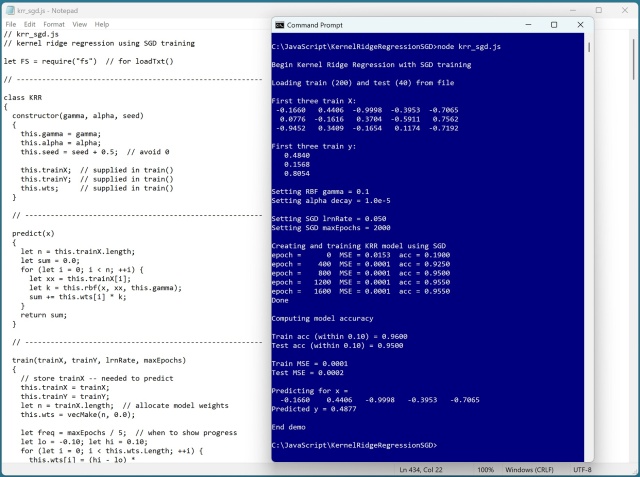

Kernel ridge regression (KRR) uses a kernel function to predict values and prevent overfitting. Implementing KRR in JavaScript is a challenging yet rewarding puzzle, offering accurate predictions and various training techniques like stochastic gradient...
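One common way to train KRR is the closed-form solution: solve (K + alpha * I) a = y, where K is the kernel matrix of the training items, then predict with a weighted sum of kernel values against the training items. The Python sketch below assumes an RBF kernel with illustrative gamma and alpha values and a hand-written Gaussian elimination solver; it is not the article's JavaScript implementation, which may use other training techniques such as SGD.

```python
import math


def rbf(x1, x2, gamma=1.0):
    """RBF kernel: exp(-gamma * ||x1 - x2||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x1, x2)))


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (A is square)."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def krr_fit(X, y, gamma=1.0, alpha=0.01):
    """Return dual weights a solving (K + alpha * I) a = y."""
    n = len(X)
    K = [[rbf(X[i], X[j], gamma) + (alpha if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    return solve(K, y)


def krr_predict(X_train, a, x, gamma=1.0):
    return sum(ai * rbf(xi, x, gamma) for ai, xi in zip(a, X_train))
```

The alpha term is the ridge penalty that limits overfitting: larger values shrink the dual weights and smooth the predictions, while alpha near zero makes the model nearly interpolate the training targets.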

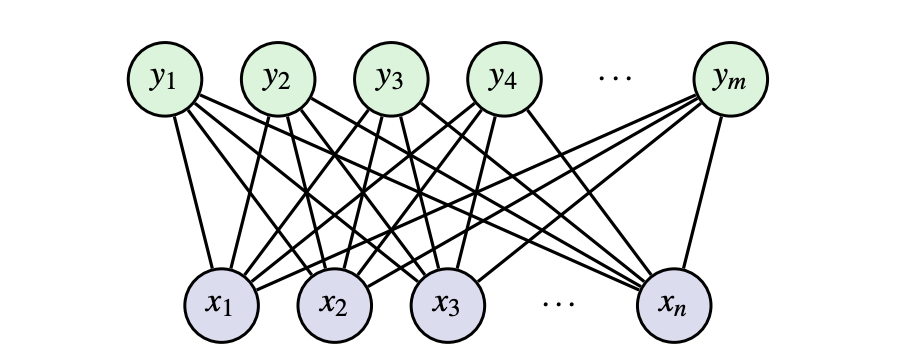

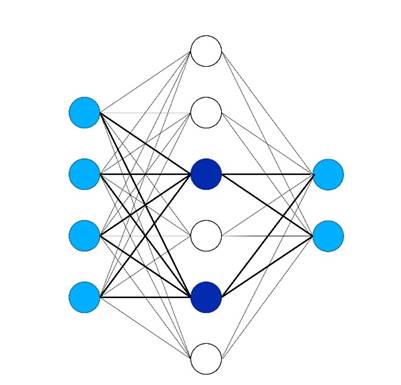

The Universal Approximation Theorem reveals the power of a single hidden layer neural network. Hugging Face showcases over one million pretrained models, highlighting the need for diverse network...

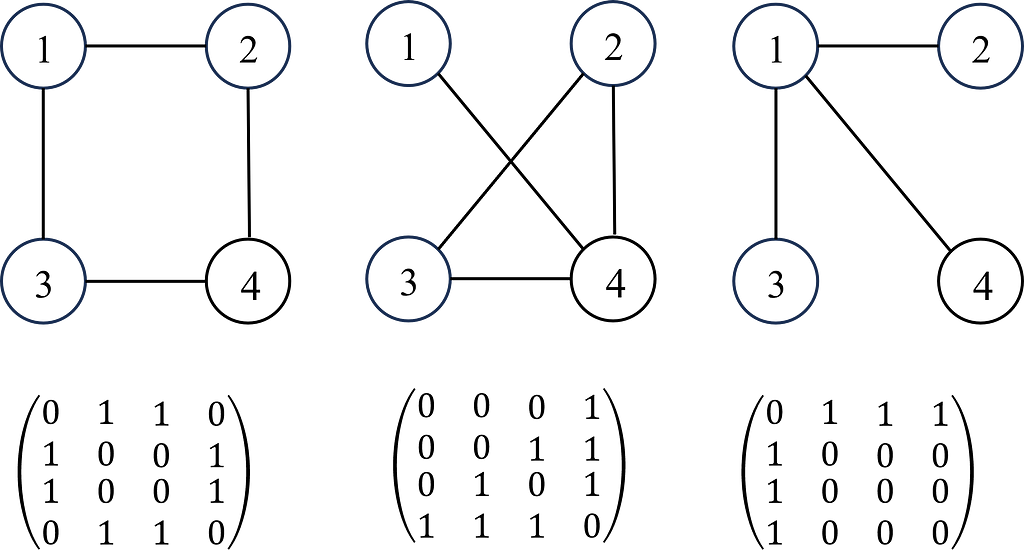

Link prediction is a popular topic in social networks, e-commerce, and biology. Methods range from simple heuristics to advanced GNN-based models like...
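Two of the simplest link-prediction heuristics score a candidate edge (u, v) by the neighbors the two nodes share. This Python sketch assumes an undirected graph stored as a dict of neighbor sets; common neighbors and Jaccard similarity are chosen here as illustrative examples, since the summary does not name specific heuristics.

```python
def common_neighbors(adj, u, v):
    """Number of nodes adjacent to both u and v."""
    return len(adj[u] & adj[v])


def jaccard(adj, u, v):
    """Shared neighbors divided by all neighbors of u or v (0.0 if both are isolated)."""
    union = adj[u] | adj[v]
    return len(adj[u] & adj[v]) / len(union) if union else 0.0
```

In the square graph a-b, a-c, b-d, c-d, the unlinked pair (a, d) shares both of its neighbors, so it scores 2 common neighbors and a Jaccard value of 1.0, making it a strong candidate link.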

The Air Mobility Command's 618th AOC is enhancing mission planning with AI-powered chat tools developed by Lincoln Laboratory. Natural language processing enables quick trend analysis and intelligent search capabilities for critical decision-making in the U.S. Air...

Kernelized SVR, trained with PSO, tackles non-linear data using an RBF kernel. The epsilon-insensitive loss and PSO make for a challenging yet promising...

Physics-Informed Neural Networks (PINNs) blend physics with AI for accurate predictions. Explore how PINNs revolutionize financial modeling using differential...

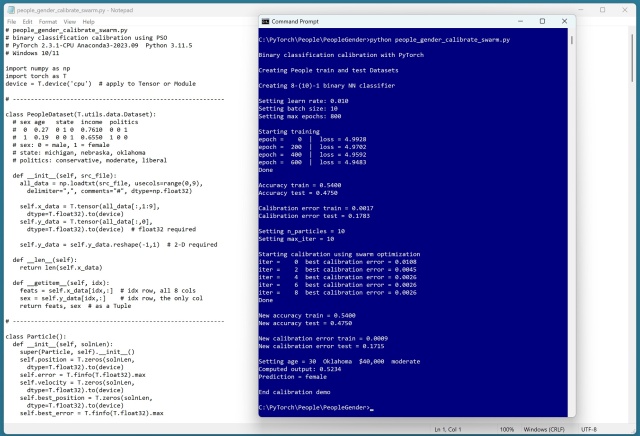

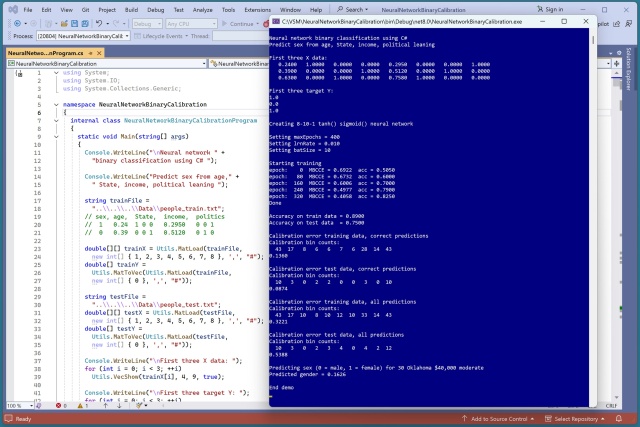

Reducing calibration error in prediction models is crucial. A demo using PyTorch and PSO shows how to improve it...

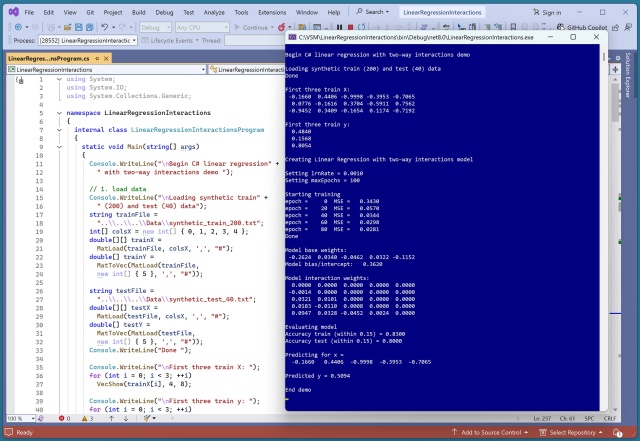

Implementing linear regression with two-way interactions improved prediction accuracy significantly. The model achieved 83% accuracy on the training data and 80% on the test data, showcasing its...
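Two-way interactions can be added as a feature-expansion step before fitting ordinary linear regression. A minimal Python sketch, assuming "interaction" means the product of each pair of distinct predictors (squared terms are not included); the function name is illustrative.

```python
from itertools import combinations


def add_two_way_interactions(x):
    """Append every pairwise product x_i * x_j (i < j) to the original features."""
    return list(x) + [a * b for a, b in combinations(x, 2)]
```

With n original predictors this adds n(n - 1) / 2 new columns, so the model stays linear in its weights while capturing some non-linear structure in the data.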

Artificial intelligence models like CNNs mimic human visual processing but struggle with causal relationships. Despite outperforming humans in some tasks, they fail to generalize in image classification, highlighting...

The April 2025 Microsoft Visual Studio Magazine article demonstrates linear support vector regression using C# with evolutionary training. Linear SVR penalizes outliers and keeps model weights small, but simpler techniques like L1 and L2 regularized regression are more...

Transformer-based LLMs have advanced in tasks, but remain black boxes. Anthropic's new paper on circuit tracing aims to reveal LLMs' internal logic for...

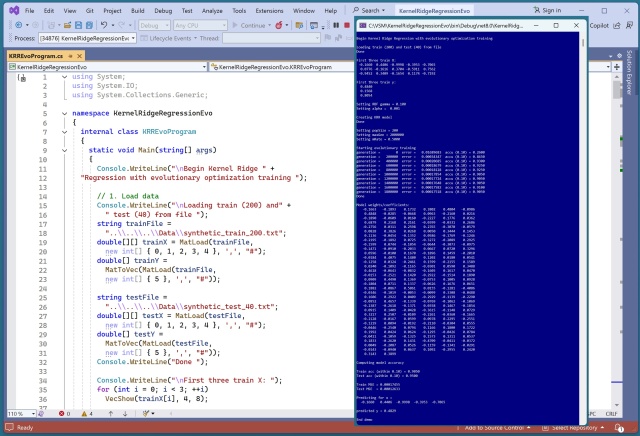

Evolutionary optimization training for Kernel Ridge Regression shows promise but caps at 90-93% accuracy due to scalability issues. Traditional matrix inverse technique outperforms in accuracy and...

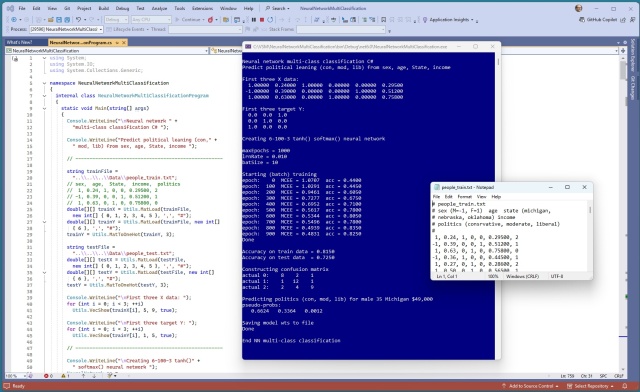

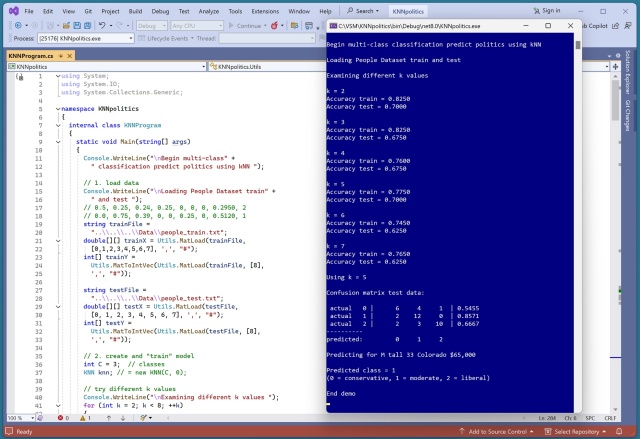

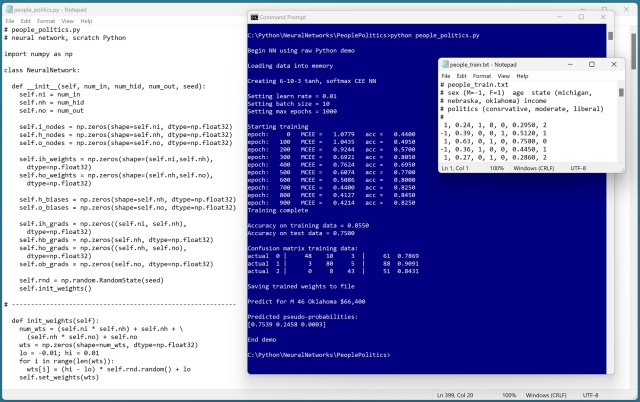

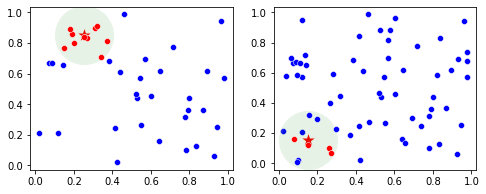

Machine learning model interpretability can be challenging. Experiment reveals age and income have the most significant impact on predicting political...

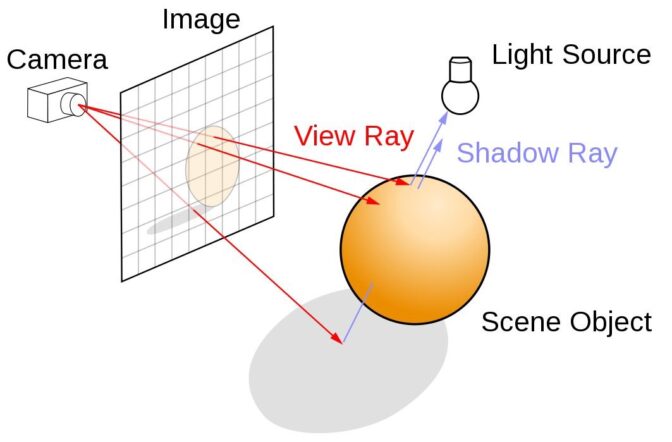

The diffusion model, pioneered by Sohl-Dickstein et al. and further developed by Ho et al., has been adapted by OpenAI and Google to create DALL-E 2 and Imagen, which can generate high-quality images. The model works by transforming noise into images through forward and backward diffusion processes, maintaining the original image's dimensionality in the latent...

EPSO, an algorithm that combines PSO with EO, performs about as well as PSO and EO alone, not significantly better. It is too slow for practical use but shows promise in training a KRR prediction...

Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs) have limitations with large graphs and changing structures. GraphSAGE offers a solution by sampling neighbors and using aggregation functions for faster, more scalable...

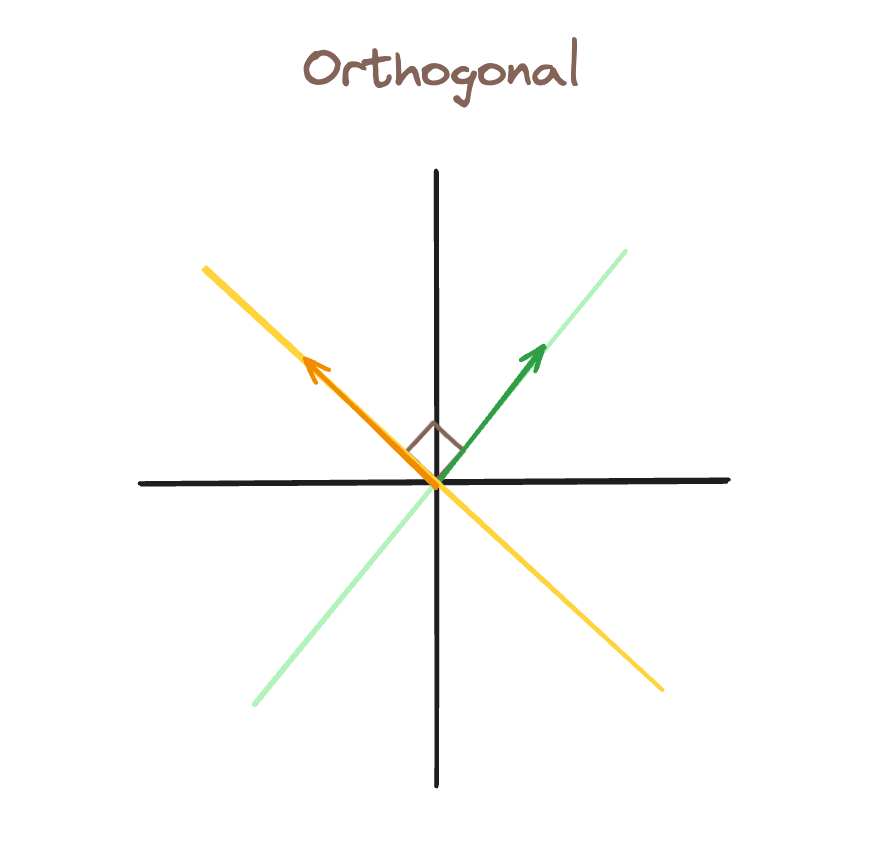

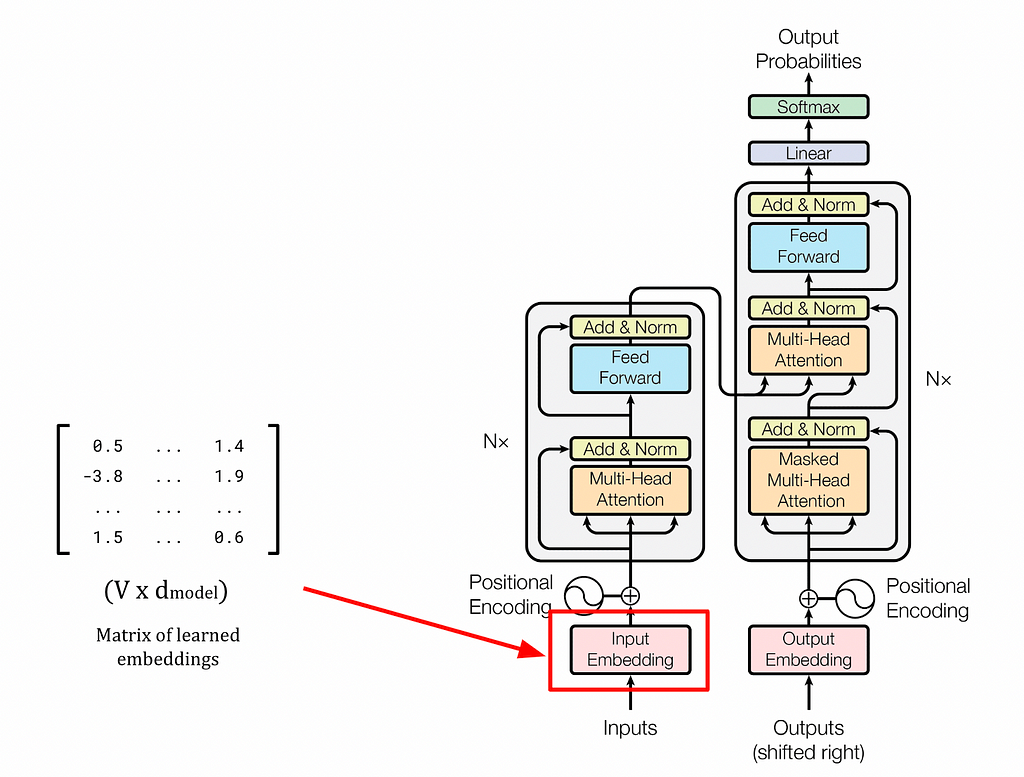

The attention mechanism, crucial in machine translation, helped RNNs overcome their limitations and led to the rise of Transformers. Self-attention in Transformers uses query, key, and value vectors to focus on the important elements within a...
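The scaled dot-product form of self-attention, softmax(Q K^T / sqrt(d_k)) V, can be sketched in plain Python. This assumes the query, key, and value vectors are already given; in a real Transformer they come from learned projections of the token embeddings, which are omitted here.

```python
import math


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]


def self_attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one row per token."""
    d_k = len(K[0])
    out = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # how strongly this token attends to each token
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out
```

Each output row is a weighted average of the value vectors, with weights set by how well that token's query matches every key. If all keys are identical, the weights are uniform and every output is simply the mean of the values.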

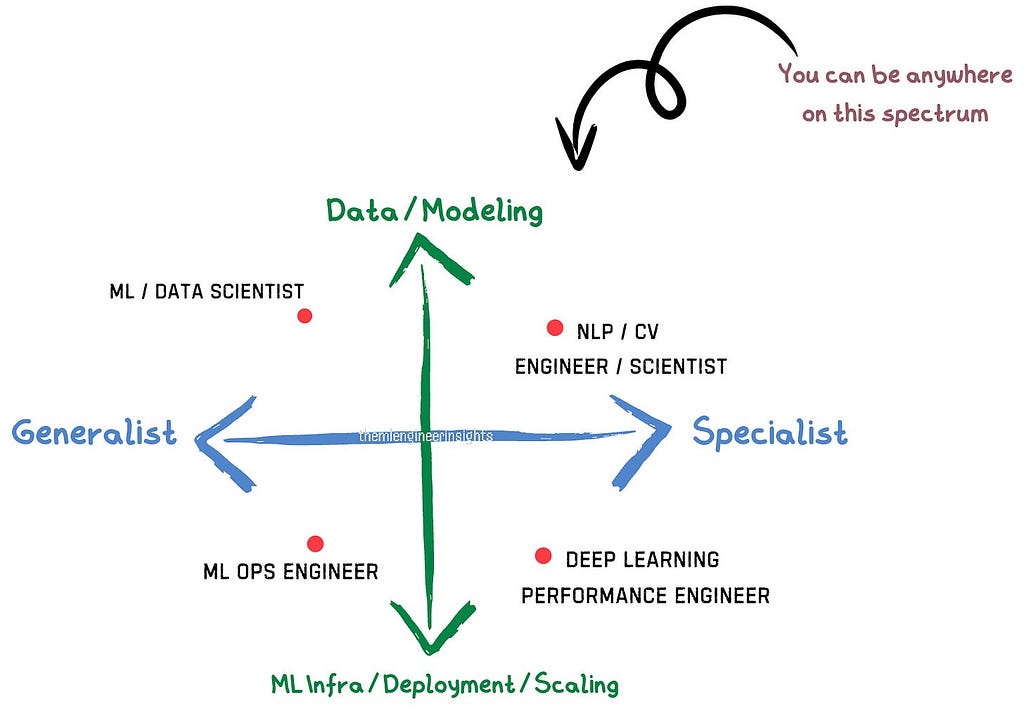

Amy reflects on her journey from unemployment to finding new identities. Transitioning from data science to machine learning engineering, she shares valuable lessons and insights on adapting to changing job market...

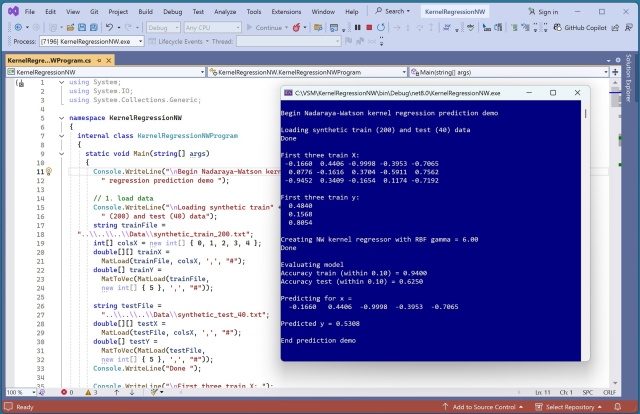

The blog post discusses Nadaraya-Watson kernel regression using a radial basis function kernel, emphasizing the importance of normalizing predictor values. The key equation for NW kernel regression involves a weighted average of target y values based on the RBF kernel function...
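The NW prediction is a kernel-weighted average of the training targets: y_hat(x) = sum_i K(x, x_i) * y_i / sum_i K(x, x_i). A minimal Python sketch with an RBF kernel, where gamma is an assumed, illustrative parameter:

```python
import math


def nw_predict(X_train, y_train, x, gamma=1.0):
    """Nadaraya-Watson estimate: RBF-weighted average of the training targets."""
    weights = [math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(xi, x)))
               for xi in X_train]
    total = sum(weights)
    return sum(w * yi for w, yi in zip(weights, y_train)) / total
```

Because the RBF weights depend on raw squared distances, a predictor measured on a much larger scale than the others would dominate every weight, which is why normalizing predictor values matters. With a very large gamma, all weights can underflow to zero and the final division fails.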

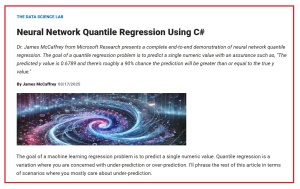

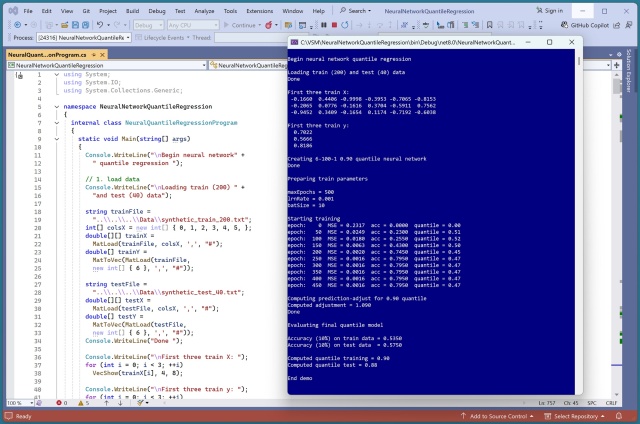

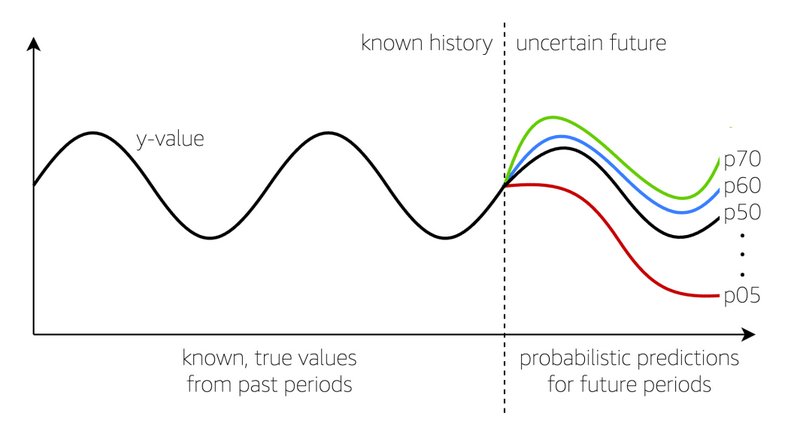

Article: "Neural Network Quantile Regression Using C#." A unique approach to machine learning regression is quantile regression, particularly useful for scenarios with significant consequences for under-prediction. By utilizing a custom loss function, neural network quantile regression aims to predict values to a specified quantile, offering a promising method for accurate...
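The custom loss usually used for quantile regression is the pinball (quantile) loss. A minimal Python sketch, assuming that loss; the article's exact loss function is not shown in this summary.

```python
def pinball_loss(y_true, y_pred, q):
    """Mean quantile (pinball) loss for a target quantile q in (0, 1)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        diff = t - p
        # Under-prediction (diff > 0) costs q per unit; over-prediction costs (1 - q).
        total += max(q * diff, (q - 1) * diff)
    return total / len(y_true)
```

With q = 0.9, each unit of under-prediction costs 0.9 while each unit of over-prediction costs only 0.1, so minimizing this loss pushes a network's outputs toward the 90th percentile of the target, which suits scenarios where under-prediction is expensive.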

AI-based PawMatchAI can identify 124 dog breeds by analyzing structured traits like body proportions and fur texture, inspired by human expert recognition methods. Unlike traditional CNNs, this model separates key characteristics for clearer interpretability, revolutionizing AI-based breed...

A calibration error function applied to the pseudo-probabilities of a neural network binary classifier that predicts sex yields promising results. Accuracy on the test data is 0.75, with calibration error below 0.20, indicating a good model...
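One common definition of calibration error for a binary classifier is the expected calibration error: bin the pseudo-probabilities, then average the gap between each bin's mean probability and its observed fraction of positives, weighted by bin size. The Python sketch below assumes equal-width bins and 0/1 labels; the calibration error function in the article may be defined differently.

```python
def calibration_error(probs, labels, n_bins=10):
    """Expected calibration error: bin-size-weighted |mean prob - observed positive rate|."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))
    n = len(probs)
    ece = 0.0
    for b in bins:
        if not b:
            continue  # empty bins contribute nothing
        avg_p = sum(p for p, _ in b) / len(b)
        frac_pos = sum(y for _, y in b) / len(b)
        ece += (len(b) / n) * abs(avg_p - frac_pos)
    return ece
```

A model that outputs 0.8 for five items, four of which are positive, is perfectly calibrated on them (error 0.0) even though it misclassifies one, which is why calibration error is reported alongside accuracy rather than instead of it.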

Review papers are essential for staying informed in the rapidly evolving field of Physics-Informed Neural Networks (PINNs). The must-read paper "Scientific Machine Learning through Physics-Informed Neural Networks" covers key themes, toolsets, and future directions, offering a comprehensive analysis of PINN fundamentals and practical...

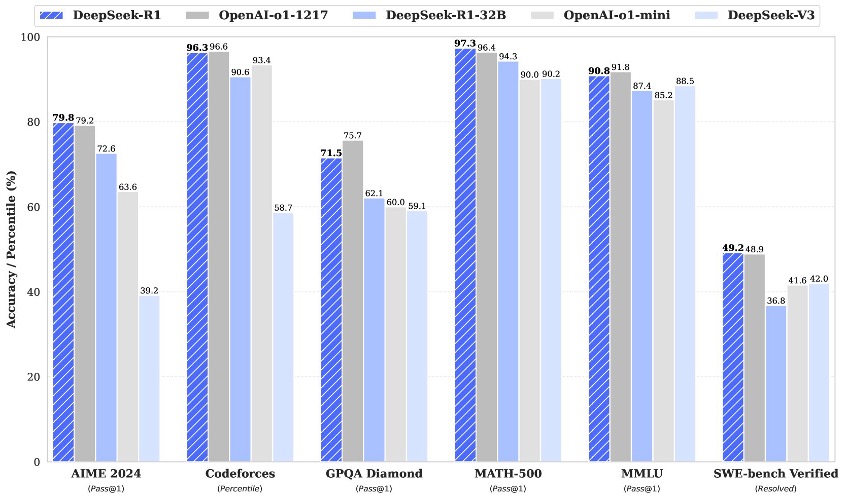

DeepSeek-R1 by DeepSeek AI integrates reinforcement learning to refine its outputs. Related models like DeepSeek-V3 use a mixture-of-experts (MoE) architecture for efficient...

Support Vector Regression (SVR) with a linear kernel penalizes outliers more than close data points, controlled by C and epsilon parameters. SVR, while complex, yields similar results to plain linear regression, making it less practical for linear...

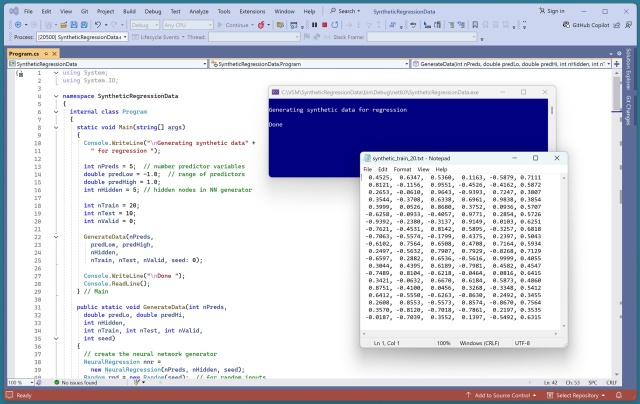

A demo showcases evolutionary training for linear regression using C#. It uses a neural network to generate synthetic data. The evolutionary algorithm outperforms traditional training methods in...

GPT-3 sparked interest in Large Language Models (LLMs) like ChatGPT. Learn how LLMs process text through tokenization and neural...

AI struggles to differentiate between similar dog breeds due to entangled features. PawMatchAI uses a unique Morphological Feature Extractor to mimic how human experts recognize breeds, focusing on structured...

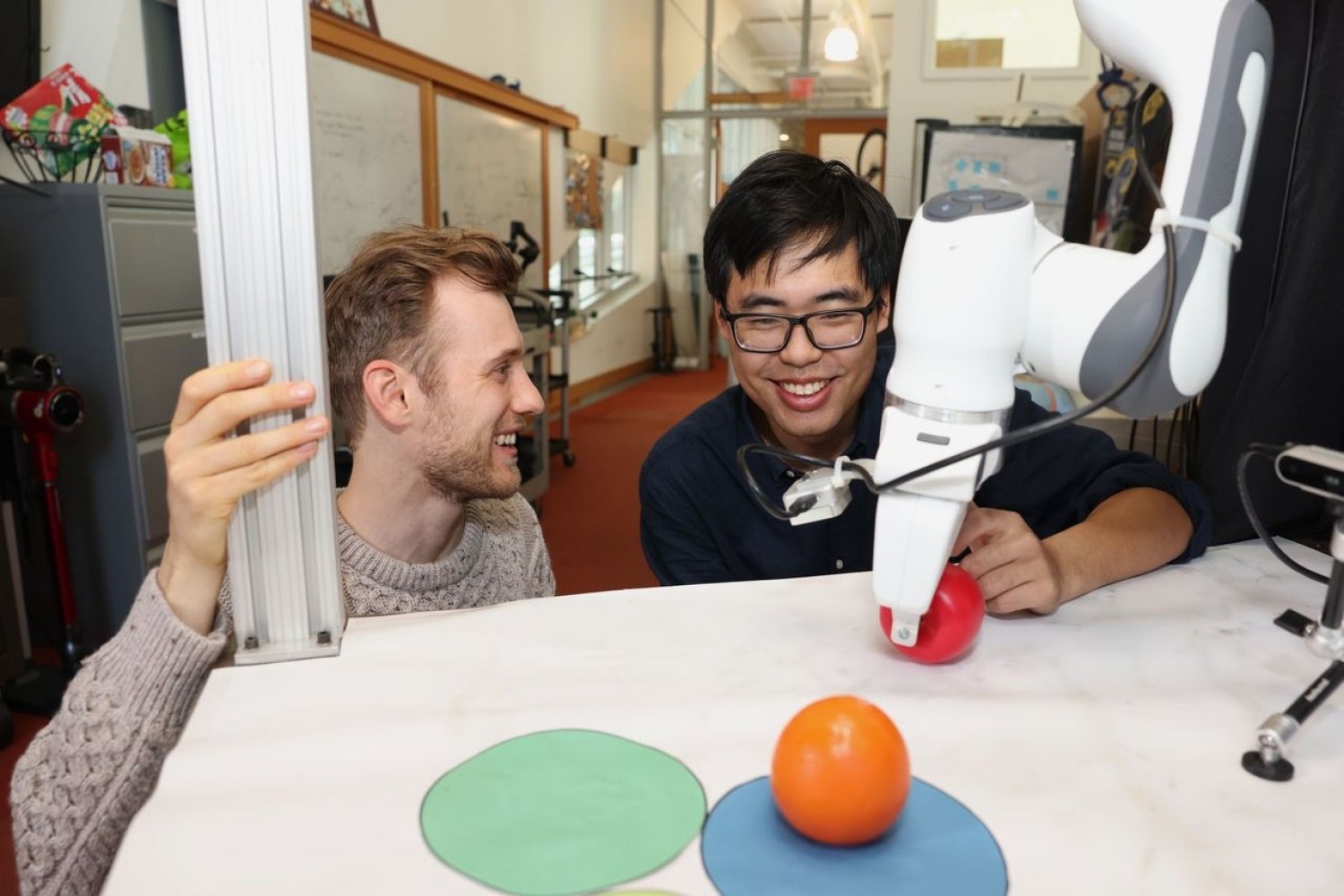

MIT and NVIDIA researchers develop a new framework allowing users to correct robot behavior in real-time without retraining. This intuitive method outperformed alternatives by 21%, potentially enabling laypeople to guide factory-trained robots in household...

The CPTR architecture combines a ViT encoder with a Transformer decoder for image captioning, improving upon earlier models. CPTR uses ViT to encode images and a Transformer to decode captions, enhancing image captioning...

Implementing a neural network quantile regression system in PyTorch was challenging. Exploring C# for the same task proved even more difficult, with calibration...

Transformers are revolutionizing NLP with efficient self-attention mechanisms. Integrating transformers in computer vision faces scalability challenges, but promising breakthroughs are on the...

Author experimented with PyTorch and C# neural networks to create a successful quantile regression system, explaining the concept and challenges. Neural network quantile regression offers a powerful alternative to classical techniques, allowing precise prediction...

Summary: Learn how Large Language Models (LLMs) are built and trained, demystifying the process. Explore pre-training, tokenization, and neural network training in...

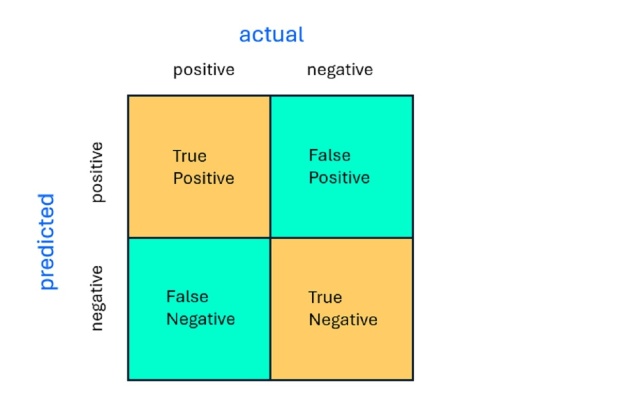

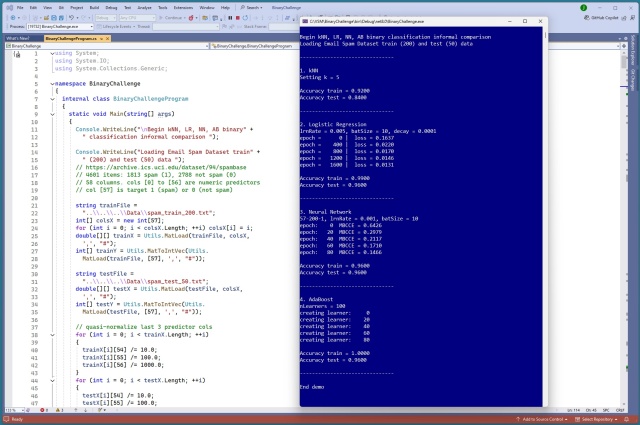

Binary classification problems can be tricky to interpret due to ambiguity in the confusion matrix, where definitions of TP, TN, FP, and FN can vary. Understanding these terms is crucial for accurate analysis. Be cautious when interpreting confusion matrices to avoid confusion in machine learning...
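Making the positive class an explicit parameter removes much of the ambiguity. A minimal Python sketch; the function name and dict layout are illustrative.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, TN, FN, treating the label `positive` as the positive class."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for t, p in zip(y_true, y_pred):
        if p == positive:
            counts["TP" if t == positive else "FP"] += 1  # predicted positive
        else:
            counts["FN" if t == positive else "TN"] += 1  # predicted negative
    return counts
```

Calling it with `positive=0` instead of `positive=1` on the same predictions swaps TP with TN and FP with FN, which is exactly how two reports on one model can disagree.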

Machine learning engineer shares journey from physics student to data scientist, landing first role after applying to 300+ jobs. Explored AI after watching DeepMind's AlphaGo documentary, highlighting the importance of hard work and...

Data science advancements like Transformer, ChatGPT, and RAG are reshaping tech. Understanding NLP evolution is key for aspiring data...

DeepSeek's R1 LLM outperforms competitors like OpenAI's o1, at a fraction of the cost. Model distillation key to R1's success, may signal a shift towards LLM...

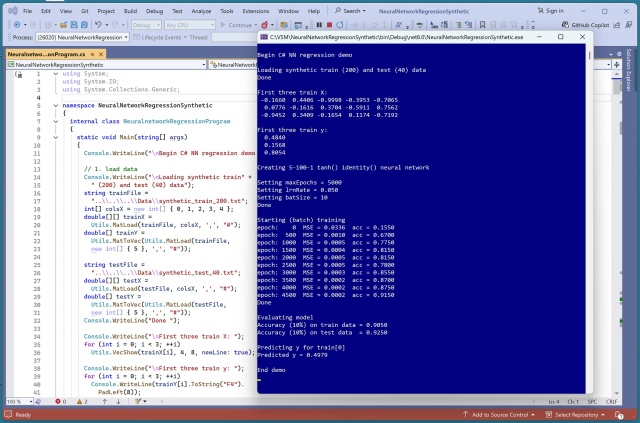

The main techniques for regression include linear, k-nearest neighbors, kernel ridge, Gaussian process, neural network, random forest, AdaBoost, and gradient boosting regression. Each technique's effectiveness varies based on dataset size and...

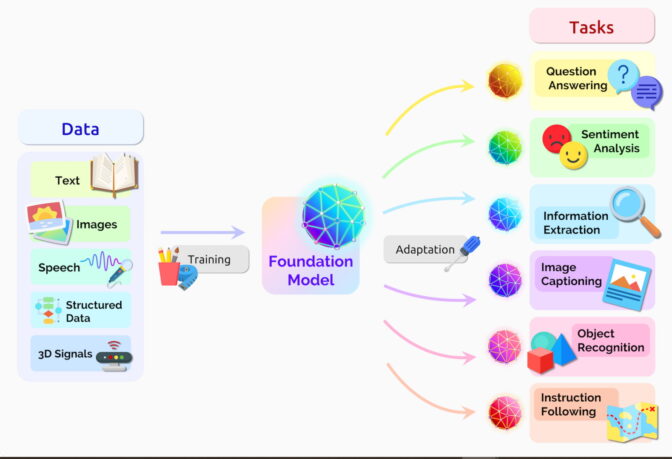

Researchers are rapidly developing AI foundation models, with 149 published in 2023, double the previous year. These neural networks, like transformers and large language models, offer vast potential for diverse tasks and economic...

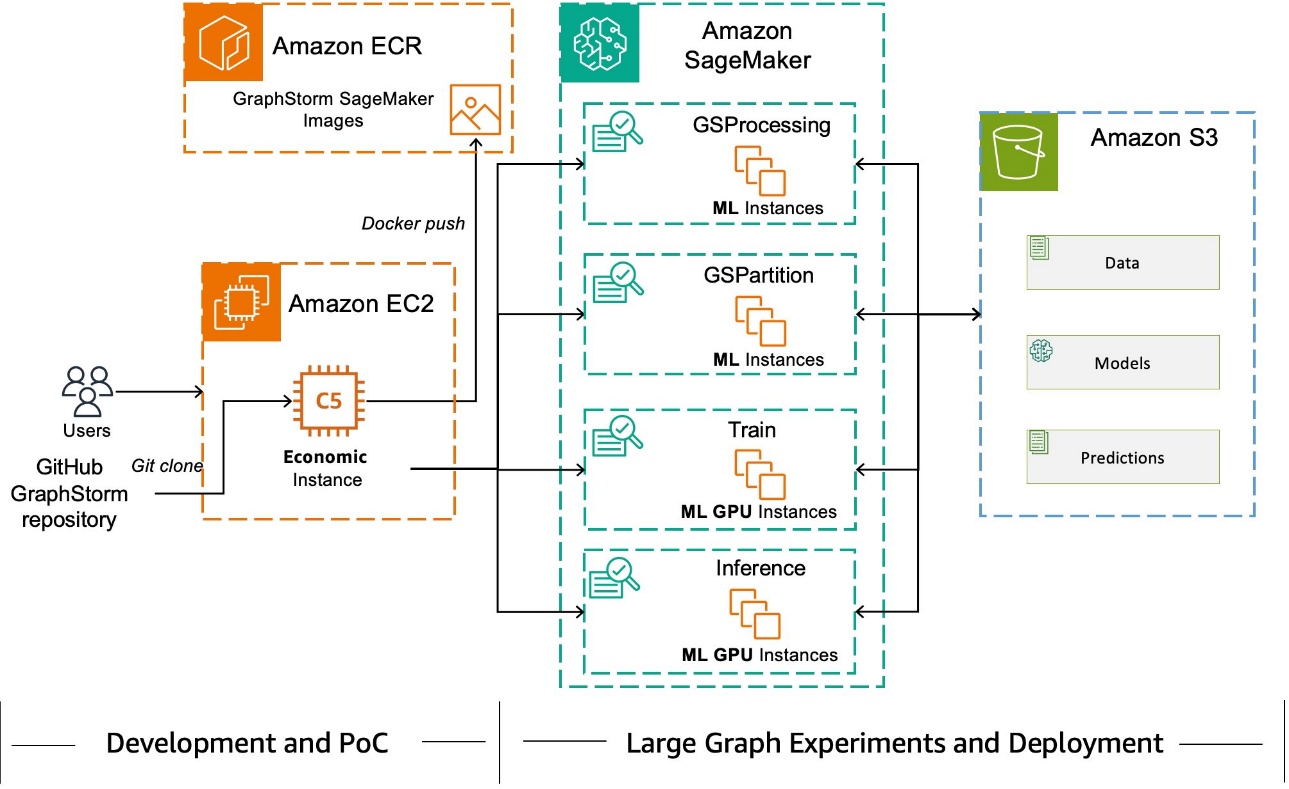

GraphStorm v0.4 by AWS AI introduces integration with DGL-GraphBolt for faster GNN training and inference on large-scale graphs. GraphBolt's fCSC graph structure reduces memory costs by up to 56%, enhancing performance in distributed...

Kaiming He at MIT sees AI breaking down walls between scientific disciplines, creating a common language for progress and collaboration. From AlphaFold to ChatGPT, AI tools are propelling advancements in diverse fields like protein structure prediction and natural language...

LLM applications require intentional temperature settings to control randomness. Higher temperature values make the model's outputs more random; lower values make them more focused. The softmax function transforms raw scores into a clean probability distribution for accurate...
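A minimal Python sketch of temperature-scaled softmax, assuming the common convention of dividing the logits by the temperature before exponentiating:

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities; temperature < 1 sharpens, > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

For logits [2.0, 1.0, 0.1], the top token's probability grows as the temperature drops below 1 and shrinks as it rises above 1, which is the focused-versus-random trade-off described above.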

MIT researchers developed an automated system to reduce energy consumption in AI models by utilizing data redundancies. The system improved computation speed by nearly 30 times and could optimize algorithms for various...

An exploration of neural networks inspired by the human brain, including back-propagation training. Understand AI at its...

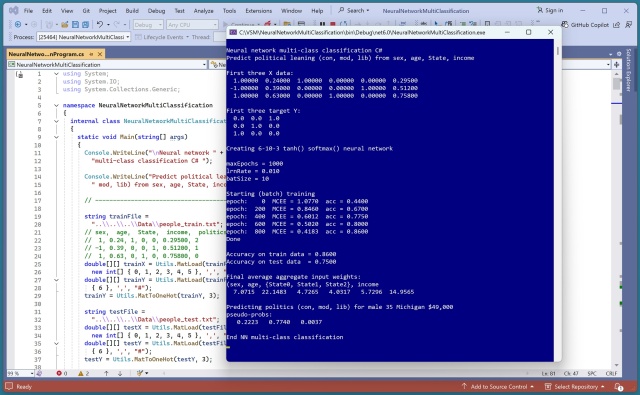

Speaker to present "Introduction to Neural Networks Using C#" at 2025 Visual Studio Live conference in Las Vegas. Demo includes multi-class classification system predicting political leaning from synthetic...

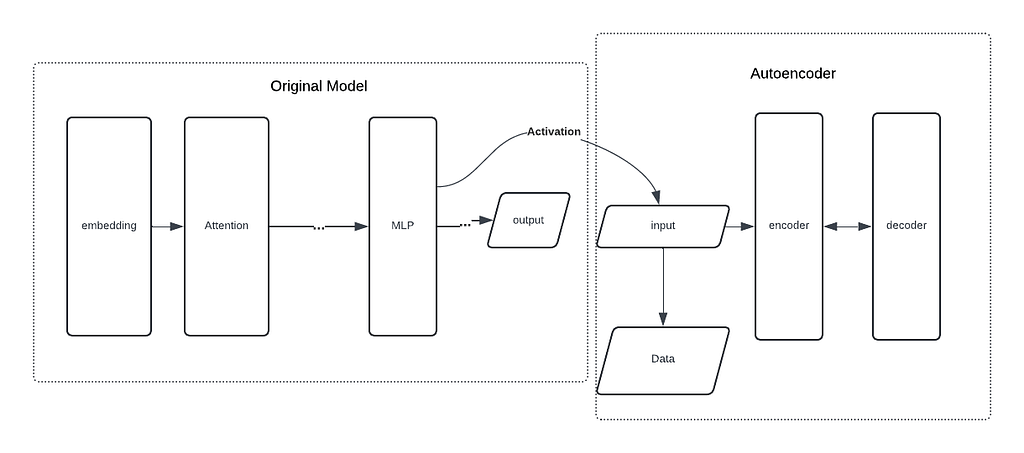

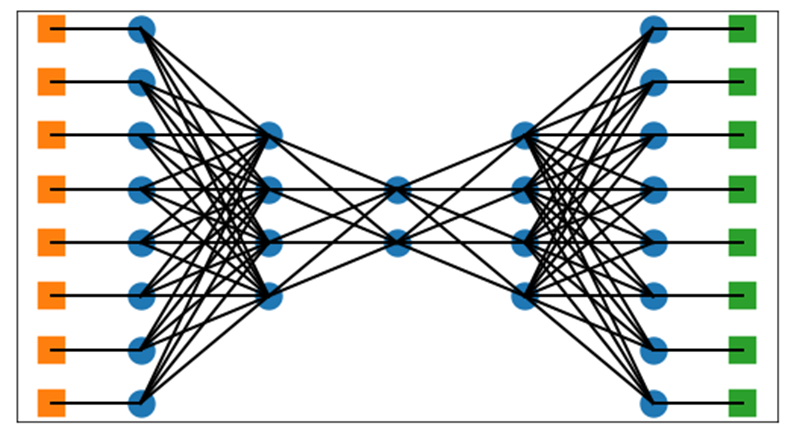

Disentangle complex neural networks with a sparse autoencoder to uncover interpretable features, overcoming superposition challenges in large language models. A sparse autoencoder introduces sparsity in its hidden layer to decompose neural networks into more understandable representations for...

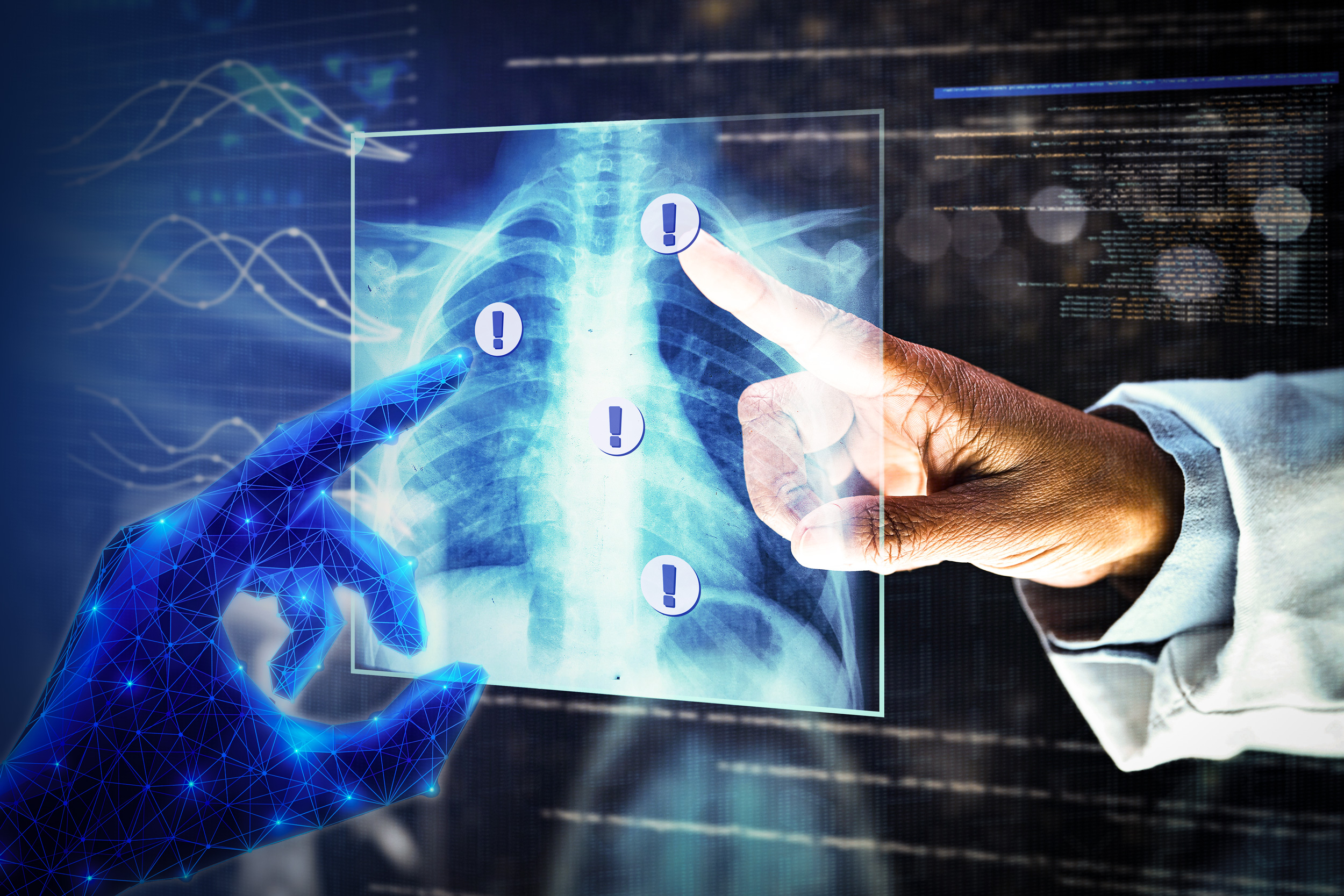

Digital pathology is transforming cancer diagnosis with AI-powered computational pathology. French startup Bioptimus released H-optimus-0, the world's largest foundation model (FM) for pathology, setting a new benchmark in medical...

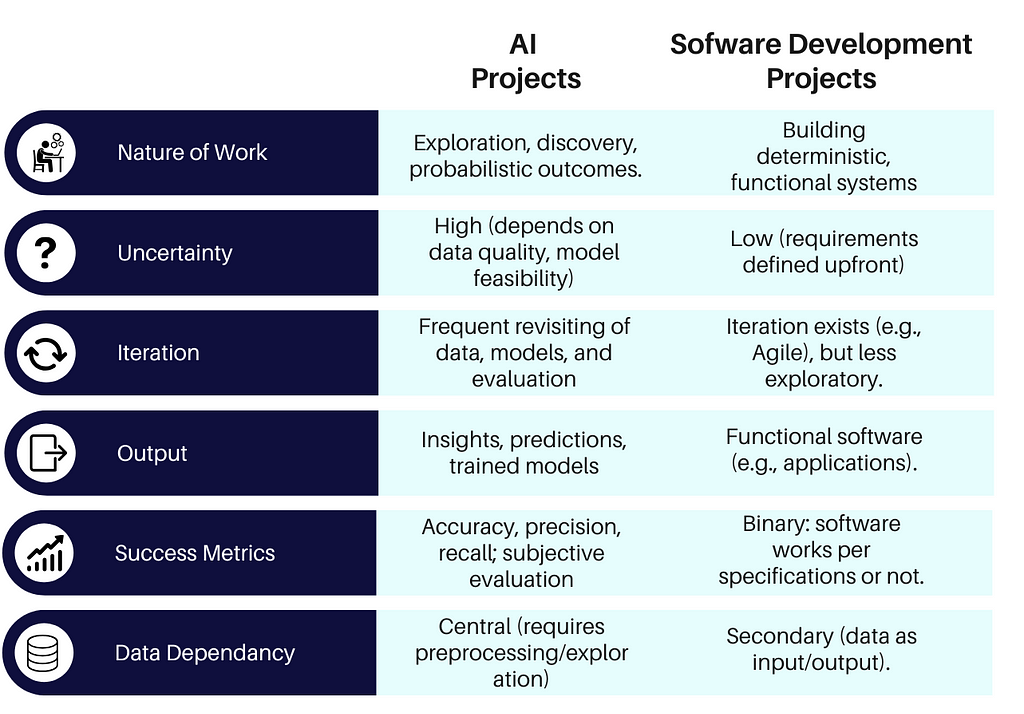

AI projects differ from traditional software development in their iterative approach, emphasizing discovery and adaptation. The AI development lifecycle includes problem definition, data preparation, model development, evaluation, deployment, and...

Retrieval-augmented generation (RAG) enhances generative AI with specific data sources, improving accuracy and trustworthiness. RAG helps models provide authoritative answers, resolve ambiguity, and avoid incorrect responses, revolutionizing user...

C# machine learning implementations aim to mimic scikit-learn's API design for consistency. Debate arises over passing all parameters to constructors versus just training data to...

Machine learning boosts mobile advertising and gaming industries with neural networks for click prediction. Top players like Applovin are investing billions in user acquisition, migrating to deep learning for enhanced...

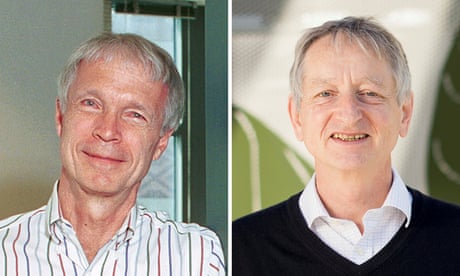

Geoffrey Hinton's Nobel Prize-winning work on Restricted Boltzmann Machines (RBMs) explained and implemented in PyTorch. RBMs are unsupervised learning models for extracting meaningful features without output labels, utilizing energy functions and probability...

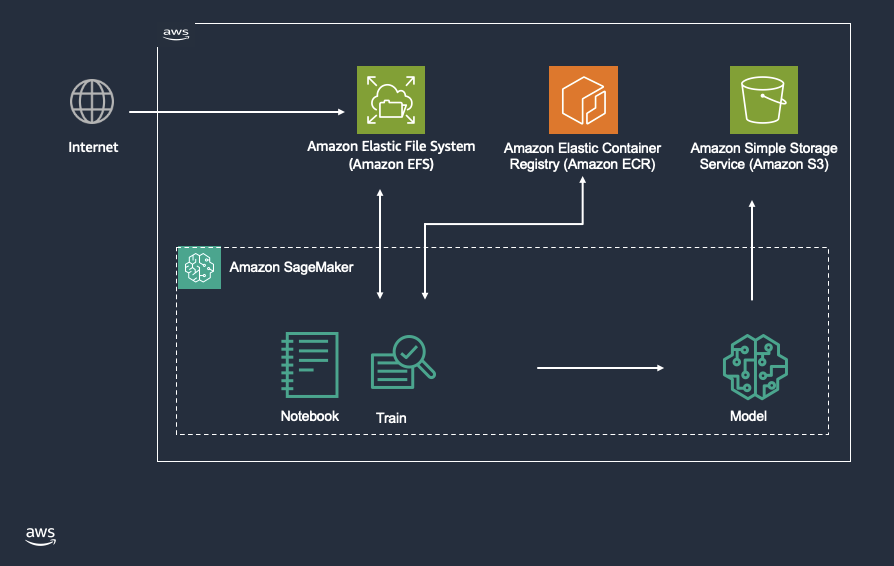

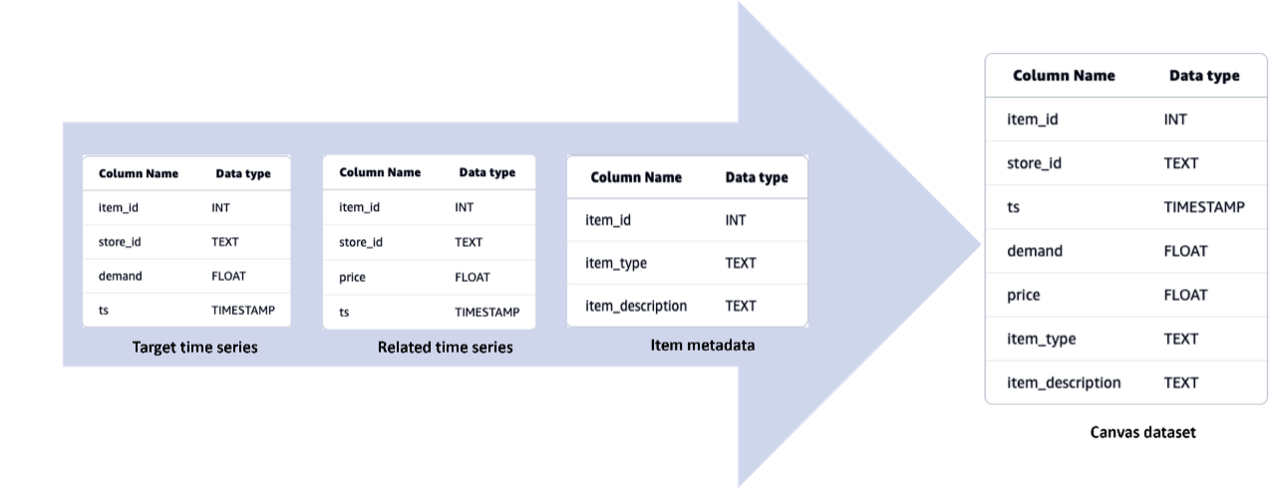

Supply chain forecasting is crucial for businesses facing volatile markets. Amazon Web Services' SageMaker Canvas offers no-code ML solutions for accurate forecasting in retail and consumer packaged goods...

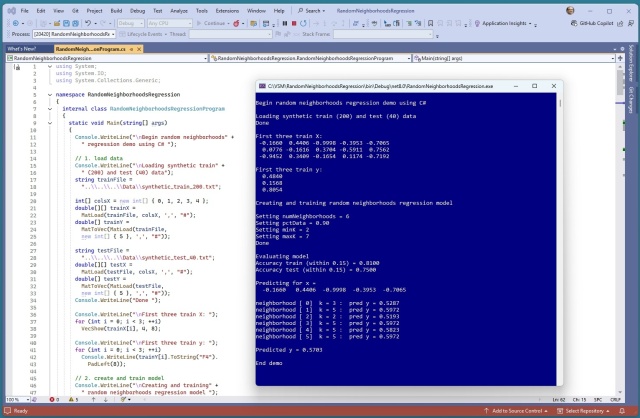

The random neighborhood regression algorithm creates an ensemble of k-nearest neighbors regressors to address the overfitting and trial-and-error issues of basic k-nearest neighbors regression. A successful C# demo showed improved prediction accuracy with virtual collections of...

MIT researchers at the McGovern Institute for Brain Research have discovered the vital role of precise timing in auditory neurons for recognizing voices and locating sounds. Using machine learning, the team's models provide insights for studying hearing impairment and developing...

Materials design has evolved from alchemy to machine learning. Research led by Ju Li introduces a new method using coupled-cluster theory to boost accuracy and speed in materials...

Linear regression with two-way interactions can enhance prediction accuracy significantly. The model was implemented successfully using C# and achieved high accuracy...

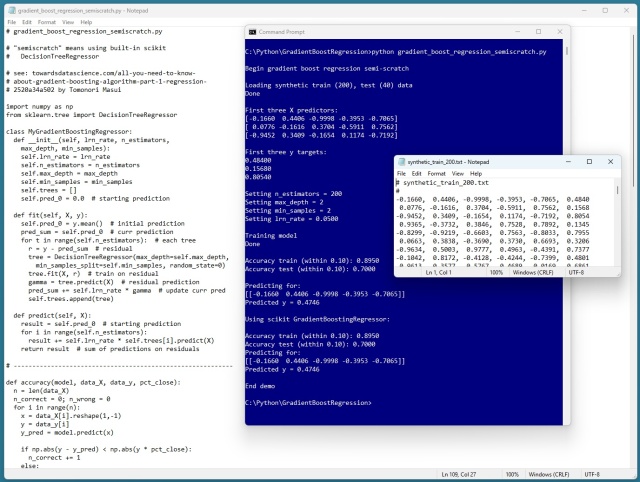

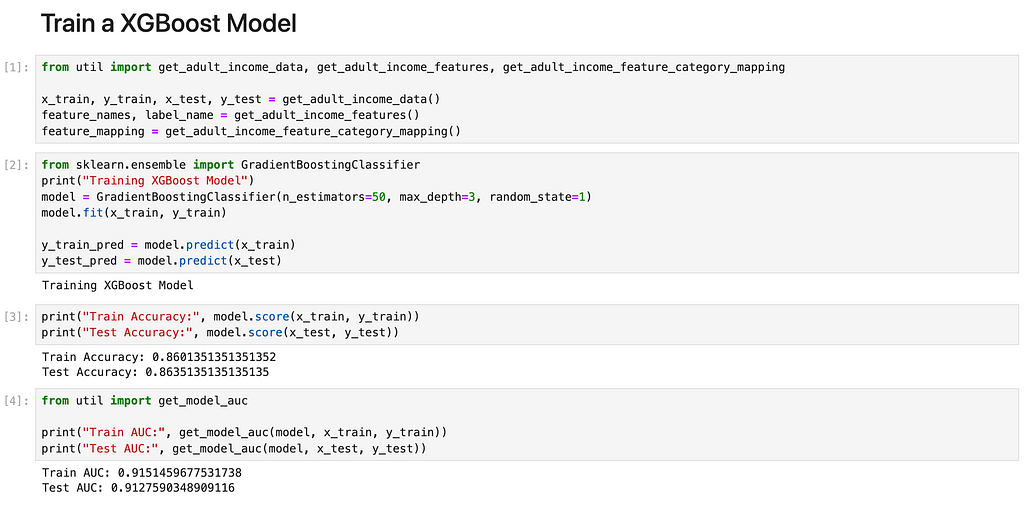

Gradient boosting regression (GBR) uses decision trees to predict values. A demo in Python showcases the accuracy of GBR in predicting synthetic data, matching results from the scikit-learn library. XGBoost and LightGBM are popular GBR libraries for machine learning...

Deep Instinct offers DSX, a cutting-edge cybersecurity solution using deep learning and generative AI to protect against malware and ransomware in real-time. Their DIANNA tool, powered by Amazon Bedrock, enhances SOC teams' capabilities by providing rapid analysis of known and unknown threats, addressing key challenges in the evolving threat...

Deep learning excels in outlier detection for image, video, and audio data, but struggles with tabular data. Traditional methods still prevail in tabular outlier detection, yet deep learning shows promise for future...

Tech company employee creates linear regression demo using synthetic data, highlighting API design insights resembling scikit-learn library. Predictions show accuracy of 77.5% on test data, showcasing practical application of stochastic gradient...

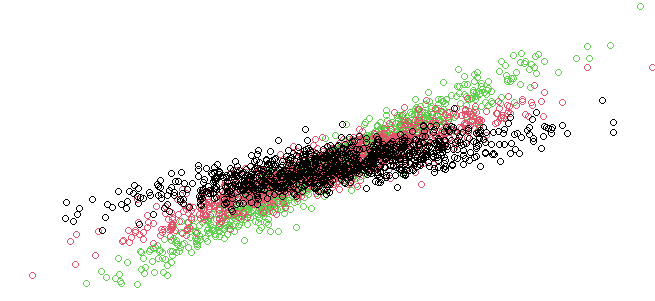

Newton iteration matrix inverse was successfully used in Gaussian process regression to improve efficiency, accuracy, and robustness. The demo showcased high accuracy levels in predicting target values for synthetic data with a complex underlying...
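One widely used Newton iteration for a matrix inverse is the Newton-Schulz update X <- X(2I - AX). The Python sketch below assumes that variant, with the starting guess A^T / (||A||_1 * ||A||_inf), which guarantees convergence for any nonsingular A; it is an illustration, not necessarily the exact scheme used in the demo.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def newton_inverse(A, iters=50):
    """Newton-Schulz iteration X <- X (2I - A X) for the inverse of a nonsingular A."""
    n = len(A)
    norm1 = max(sum(abs(A[i][j]) for i in range(n)) for j in range(n))  # max column sum
    norm_inf = max(sum(abs(v) for v in row) for row in A)  # max row sum
    # Starting guess A^T / (||A||_1 * ||A||_inf) keeps the spectral norm of I - A X below 1.
    X = [[A[j][i] / (norm1 * norm_inf) for j in range(n)] for i in range(n)]
    for _ in range(iters):
        AX = matmul(A, X)
        R = [[(2.0 if i == j else 0.0) - AX[i][j] for j in range(n)] for i in range(n)]
        X = matmul(X, R)
    return X
```

The fixed iteration count is an assumption: convergence is quadratic once the residual is small, but ill-conditioned matrices spend more iterations in the early phase, so a practical version would stop when ||I - AX|| falls below a tolerance.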

Neural networks face challenges with superposition, where one neuron represents multiple features. Non-linearity and feature sparsity play key roles in causing...

Linear regression can handle non-linear data using finite normal mixtures. This approach allows for flexibility and interpretability, making it a powerful machine learning tool. Simulating a mixture model for regression with MCMC sampling shows how to recover components using Bayesian...

Understanding loss functions is crucial for training neural networks. Cross-entropy helps quantify differences in probability distributions, aiding in model...
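For a target distribution p and a predicted distribution q, cross-entropy is H(p, q) = -sum_i p_i * log(q_i). A minimal Python sketch; the small eps clamp is an assumption added to avoid log(0).

```python
import math


def cross_entropy(p_true, q_pred, eps=1e-12):
    """H(p, q) = -sum p_i * log(q_i); eps guards against log(0)."""
    return -sum(p * math.log(max(q, eps)) for p, q in zip(p_true, q_pred))
```

With a one-hot target, only the log of the probability assigned to the true class survives, so predicting 0.7 for the correct class costs -log(0.7), about 0.357, and a perfect prediction costs 0.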

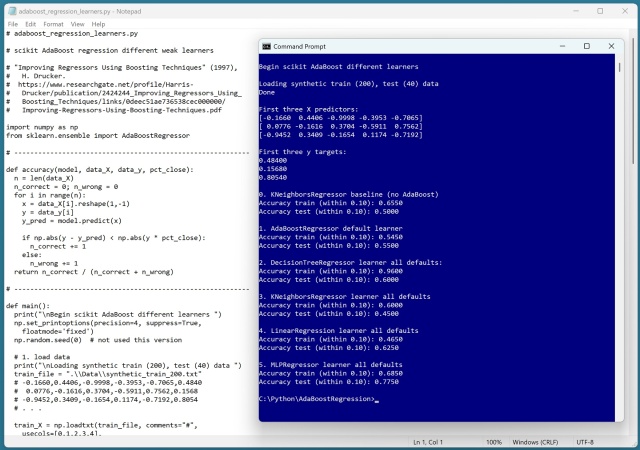

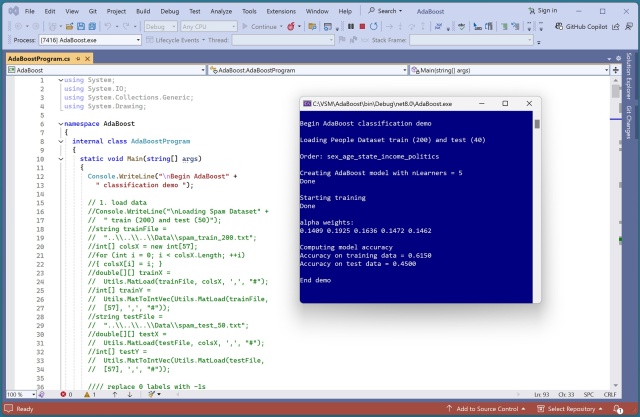

AdaBoost.R2 modifies AdaBoost for regression, creating a sequence of decision trees for better predictions. Weighted median enhances accuracy by emphasizing high-confidence tree...
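In Drucker's AdaBoost.R2, each tree gets a weight of log(1 / beta_t), and the ensemble prediction is the weighted median of the trees' predictions. A minimal Python sketch of that median step, assuming the trees' predictions and weights for one input have already been computed:

```python
def weighted_median(values, weights):
    """Smallest value whose cumulative weight reaches half the total weight."""
    pairs = sorted(zip(values, weights))
    half = 0.5 * sum(weights)
    cumulative = 0.0
    for v, w in pairs:
        cumulative += w
        if cumulative >= half:
            return v
    return pairs[-1][0]
```

Unlike a weighted mean, a single low-weight tree with a wildly wrong prediction cannot drag this result far, while trees with larger weights (lower error) dominate the outcome.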

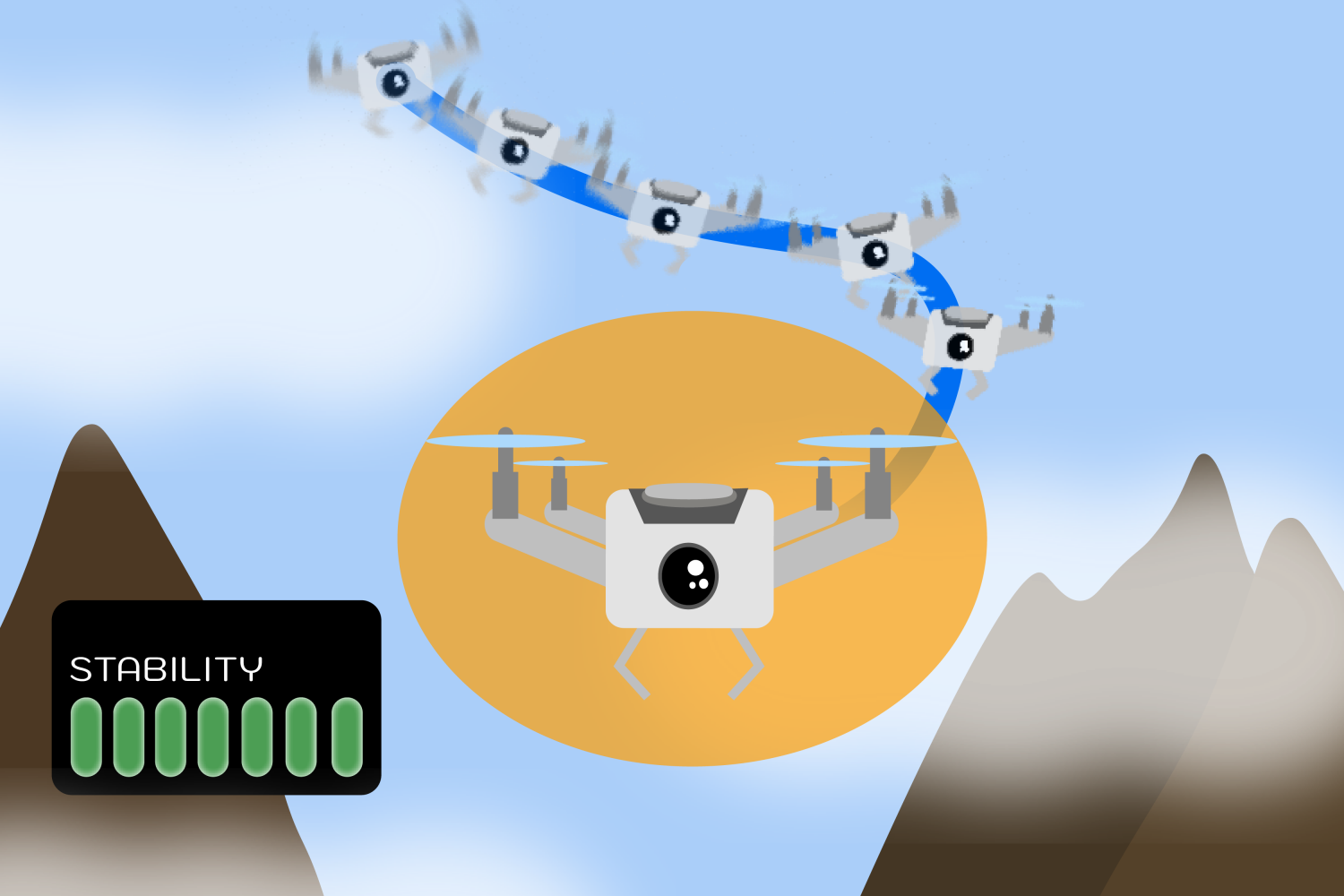

Corvus Robotics uses autonomous drones for efficient warehouse inventory management, increasing operational speed and accuracy. Co-founder Mohammed Kabir developed the drone platform to navigate warehouses without GPS, revolutionizing inventory...

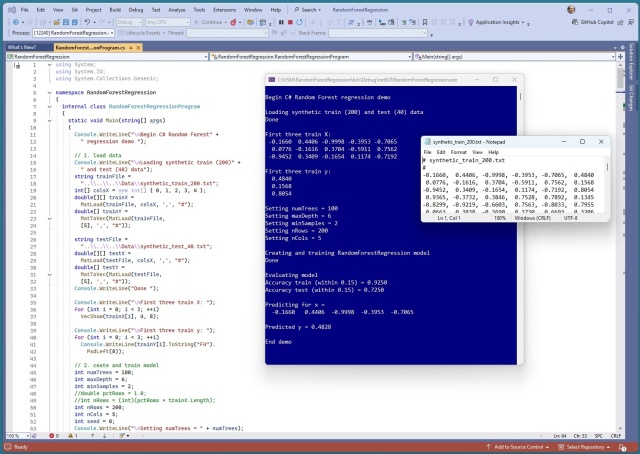

Machine learning random forest regression predicts values using an ensemble of decision trees. A C# demo on synthetic data shows prediction accuracy of 0.9250 on the training data and 0.7250 on...

The new OpenAI o1 model outperforms GPT-4o. Experimenting with o1 to generate Python code yields 90...

DDPG enhances AI-driven medical robotics by solving the challenge of continuous action control. The Actor-Critic framework in DDPG combines DPG and DQN to improve stability and performance in environments with continuous action...

MIT's Daniela Rus receives 2024 John Scott Award for groundbreaking robotics research, redefining the capabilities of robots beyond traditional norms. Rus's work focuses on developing explainable algorithms to create collaborative robots that can solve real-world challenges, emphasizing the synergy between the body and brain for intelligent...

Customer profiling is evolving with vector-based exemplar recommenders like Pinterest's PinnerSage engine, offering tailored user choices. These algorithms simplify recommendations by converting samples into vectors, improving user...

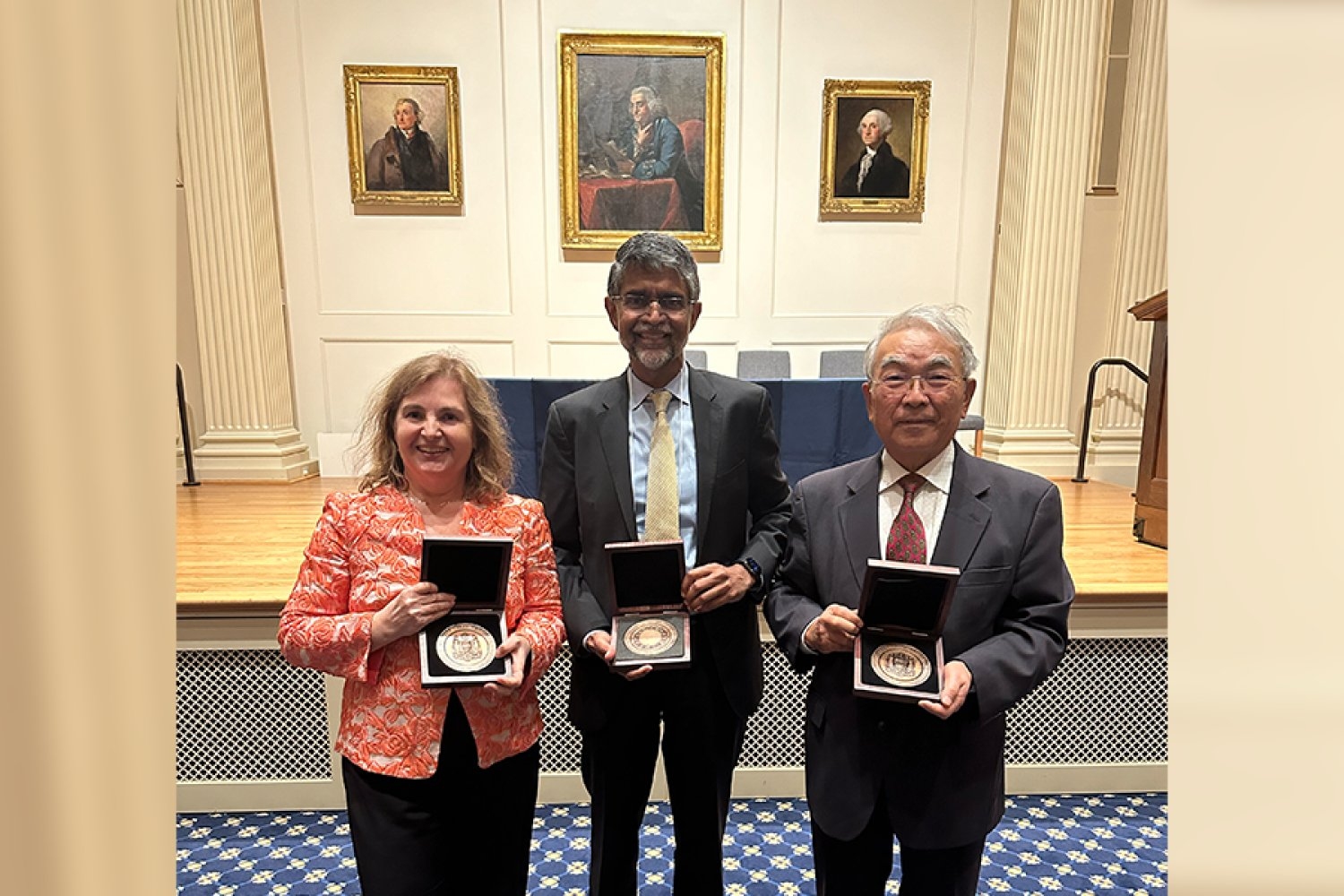

AdaBoost regression combines weak learners such as decision trees, k-NN, and linear regression. Results show a neural network as the best performer in prediction...

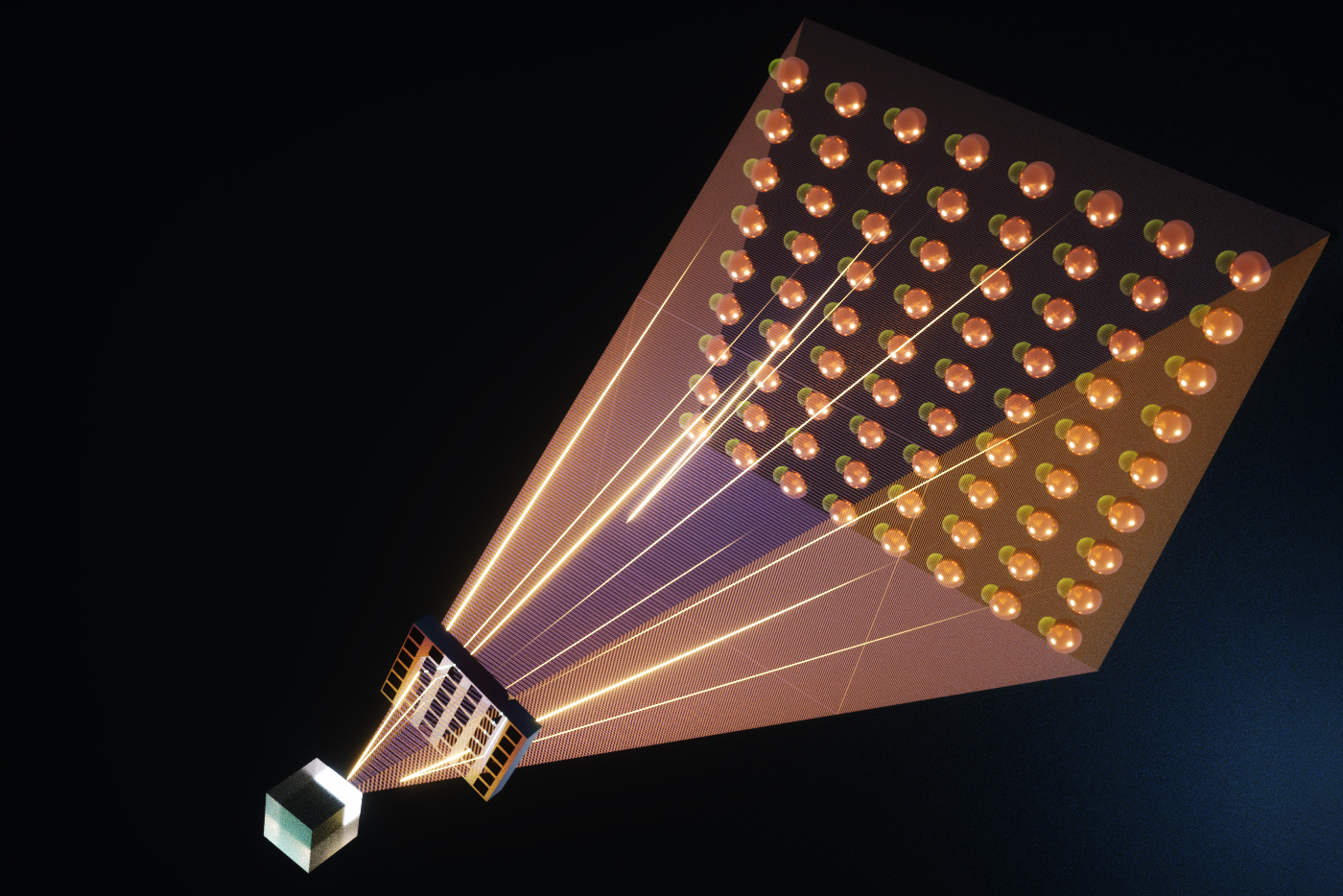

MIT scientists develop photonic chip for deep neural network computations, achieving high speed and accuracy. The chip could revolutionize deep learning for applications like lidar and high-speed...

Generate synthetic data for machine learning regression using a neural network with specified parameters. Simplify complex data generation with a customizable function in...

Developers at re:Invent 2024 face unique challenges of physical AWS DeepRacer racing. Transition from virtual to physical racing poses a significant challenge due to differences in environments and car...

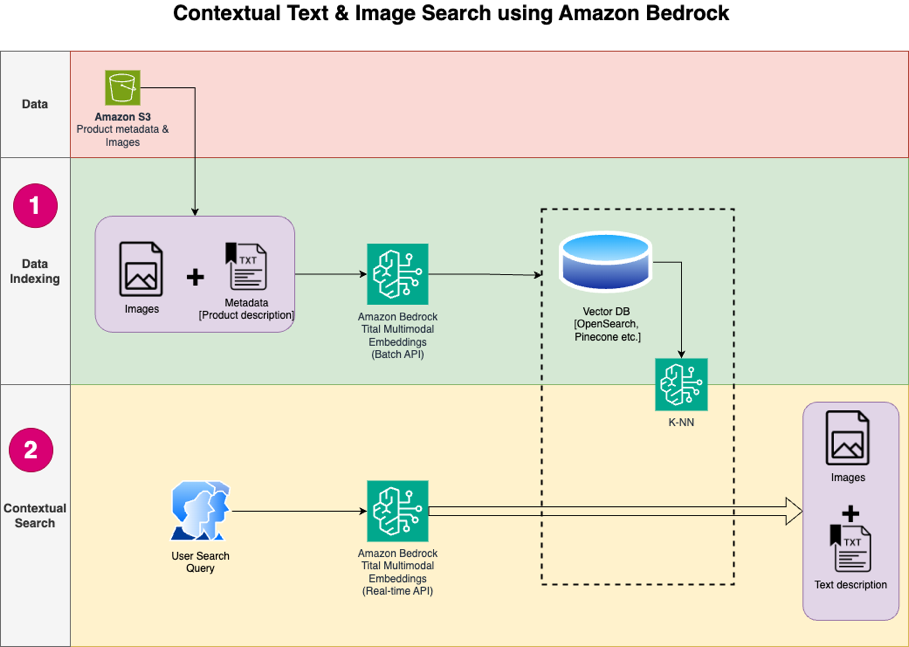

Multimodal embeddings merge text and image data into a single model, enabling cross-modal applications like image captioning and content moderation. CLIP aligns text and image representations for zero-shot image classification, showcasing the power of shared embedding...

Neuromorphic Computing reimagines AI hardware and algorithms, inspired by the brain, to reduce energy consumption and push AI to the edge. OpenAI's $51 million deal with Rain AI for neuromorphic chips signals a shift towards greener AI at data...

Summary: Bias-variance tradeoff affects predictive models, balancing complexity and accuracy. Real-world examples show how underfitting and overfitting impact model...

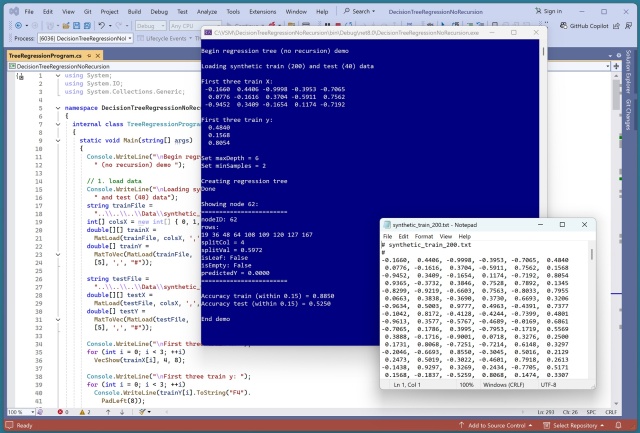

Software engineer James McCaffrey designed a decision tree regression system in C# without recursion or pointers. He removed row indices from nodes to save memory, making debugging easier and predictions more...

Marzyeh Ghassemi combines her love for video games and health in her work at MIT, focusing on using machine learning to improve healthcare equity. Ghassemi's research group at LIDS explores how biases in health data can impact machine learning models, highlighting the importance of diversity and inclusion in AI...

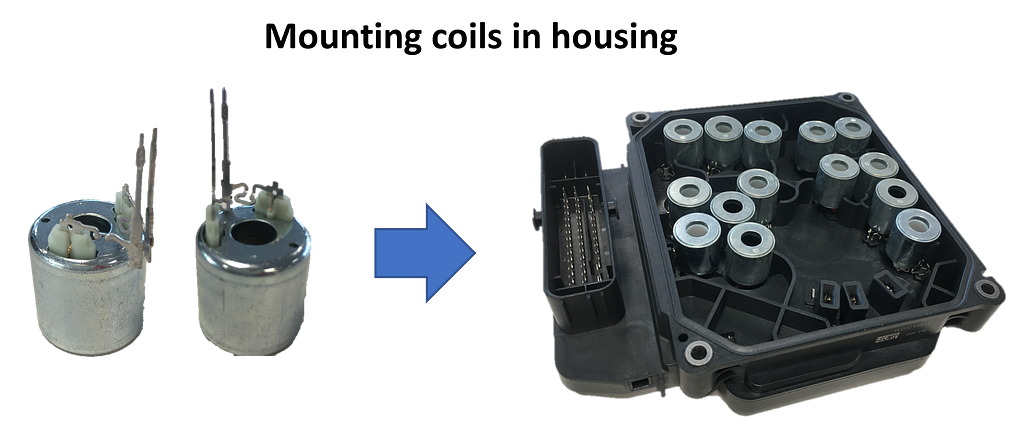

Developing a CNN for automotive electronics inspection tasks using PyTorch. Exploring convolutional layers and how CNNs make decisions in visual...

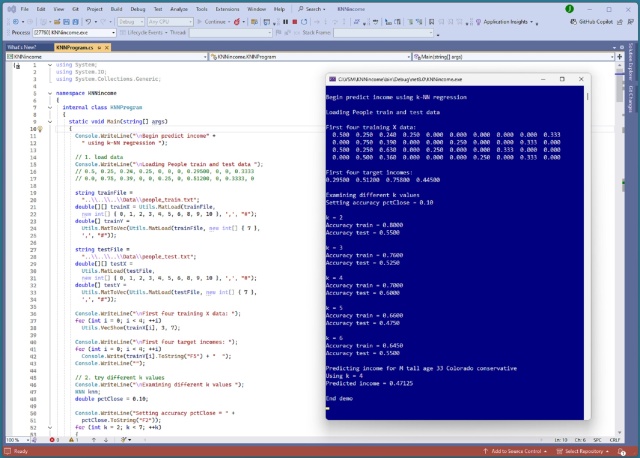

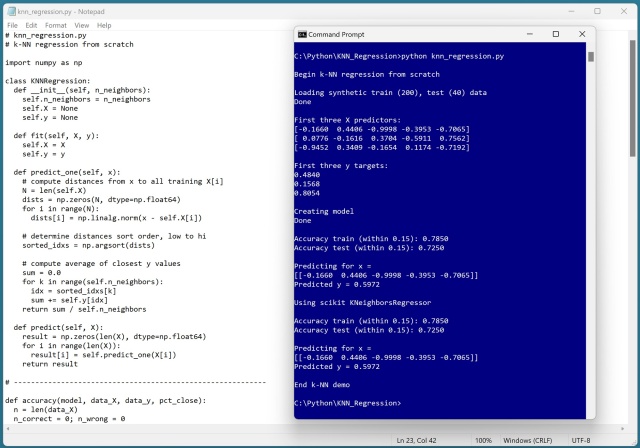

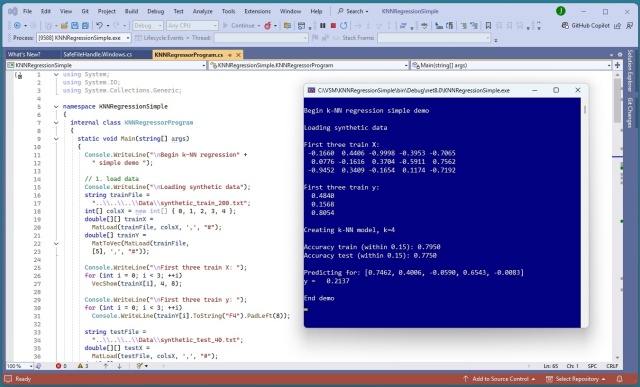

Summary: Microsoft Visual Studio Magazine's November 2024 edition features a demo of k-NN regression using C#, known for simplicity and interpretability. The technique predicts numeric values based on closest training data, with a demo showcasing accuracy and prediction...

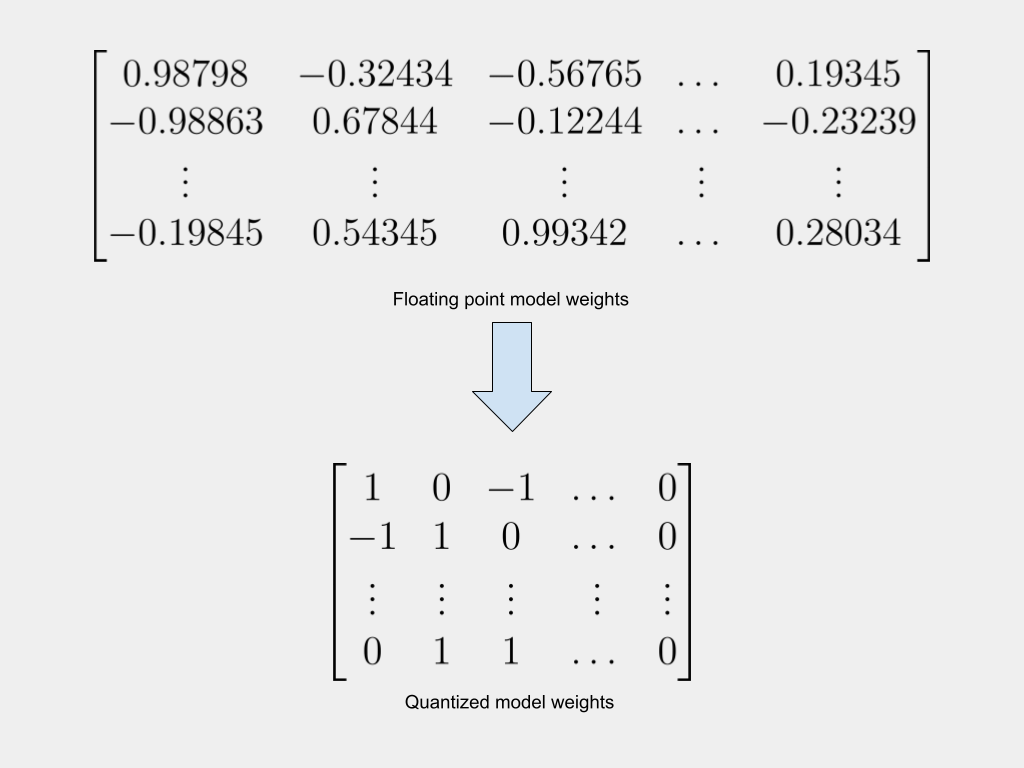

Large AI models are costly to use and train, leading to a focus on quantization to reduce model size while maintaining accuracy. Two key approaches discussed are post-training quantization (PTQ) and Quantization Aware Training (QAT), each with its own techniques for minimizing accuracy...
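Post-training quantization in its simplest form maps each float weight to a signed integer using one scale factor per tensor. A minimal Python sketch of symmetric, per-tensor PTQ; real toolkits add per-channel scales, zero points, and calibration data, none of which are shown here.

```python
def quantize_symmetric(weights, n_bits=8):
    """Map floats to signed integers in [-qmax, qmax] using a single scale factor."""
    qmax = 2 ** (n_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [qi * scale for qi in q]
```

Each dequantized weight lands within scale / 2 of the original; this rounding error is what costs accuracy in PTQ, whereas QAT simulates the rounding during training so the model can adapt to it.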

Mathematics in modern machine learning is evolving. Shift towards scale broadens scope of applicable mathematical fields, impacting design...

Implementing k-NN regression in C# for predicting income from demographic data. Encoding, normalizing, and testing accuracy with different k...

Implementing k-nearest neighbors regression from scratch using Python with synthetic data, demonstrating prediction accuracy within 0.15. Validation against scikit-learn KNeighborsRegressor module for matching results, showcasing the simplicity and effectiveness of the...

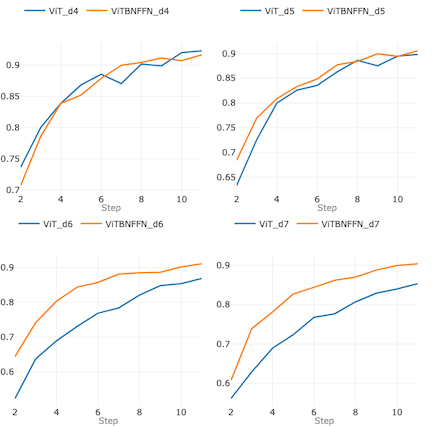

Integrating BatchNorm in Vision Transformer leads to faster convergence and stability. ViTBNFFN outperforms ViT with larger depths and higher learning...

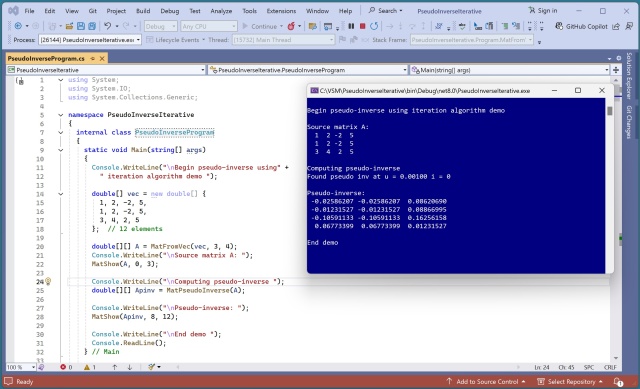

A research paper presents an elegant new iterative technique for computing the Moore-Penrose pseudo-inverse of a matrix. The method uses a calculus gradient and iterative looping to approach the true pseudo-inverse, resembling neural network training...

Generative AI by Stability AI is transforming visual content creation for media, advertising, and entertainment industries. Amazon Bedrock's new models offer improved text-to-image capabilities, enhancing creativity and efficiency in marketing and...

AI models, like LLaMA 3.1, require large GPU memory, hindering accessibility on consumer devices. Research on quantization offers a solution to reduce model size and enable local AI model...

K-nearest neighbors regression predicts values by finding nearest neighbors in training data, achieving 79.50% accuracy in the demo. Unlike other techniques, k-NN regression doesn't create a mathematical model, using training data as the model...
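The absence of a trained model is easy to see in code: prediction just searches the training data. A minimal Python sketch, assuming Euclidean distance and counting a prediction as accurate when it falls within 15% of the true value; the demo's exact closeness threshold is not stated in this summary.

```python
import math


def knn_predict(X_train, y_train, x, k=3):
    """Average the targets of the k training items closest to x (Euclidean distance)."""
    dists = sorted((math.dist(xi, x), yi) for xi, yi in zip(X_train, y_train))
    nearest = dists[:k]
    return sum(yi for _, yi in nearest) / len(nearest)


def accuracy_within(X_train, y_train, X_test, y_test, k=3, pct=0.15):
    """Fraction of test predictions within pct (relative) of the true target."""
    hits = 0
    for x, y in zip(X_test, y_test):
        if abs(knn_predict(X_train, y_train, x, k) - y) < abs(pct * y):
            hits += 1
    return hits / len(X_test)
```

Because the training data is the model, every prediction costs a full pass over the training set, and the choice of k is the main knob for trading off noise against over-smoothing.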

Article explains inner workings of Large Language Models (LLMs) from basic math to advanced AI models like GPT and Transformer architecture. Detailed breakdown covers embeddings, attention, softmax, and more, enabling recreation of modern LLMs from...

ML metamorphosis, a process chaining different models together, can significantly improve model quality beyond traditional training methods. Knowledge distillation transfers knowledge from a large model to a smaller, more efficient one, resulting in faster and lighter models with improved...

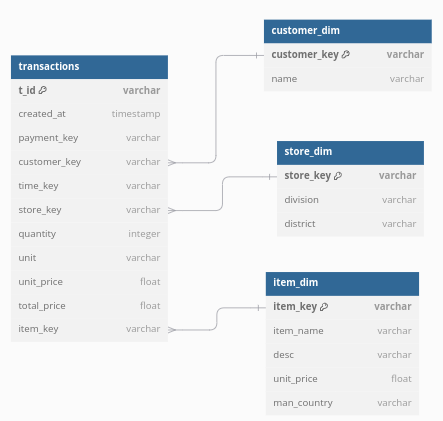

Engage in Relational Deep Learning (RDL) by directly training on your relational database, transforming tables into a graph for efficient ML tasks. RDL eliminates feature engineering steps by learning from raw relational data, enhancing model performance and...

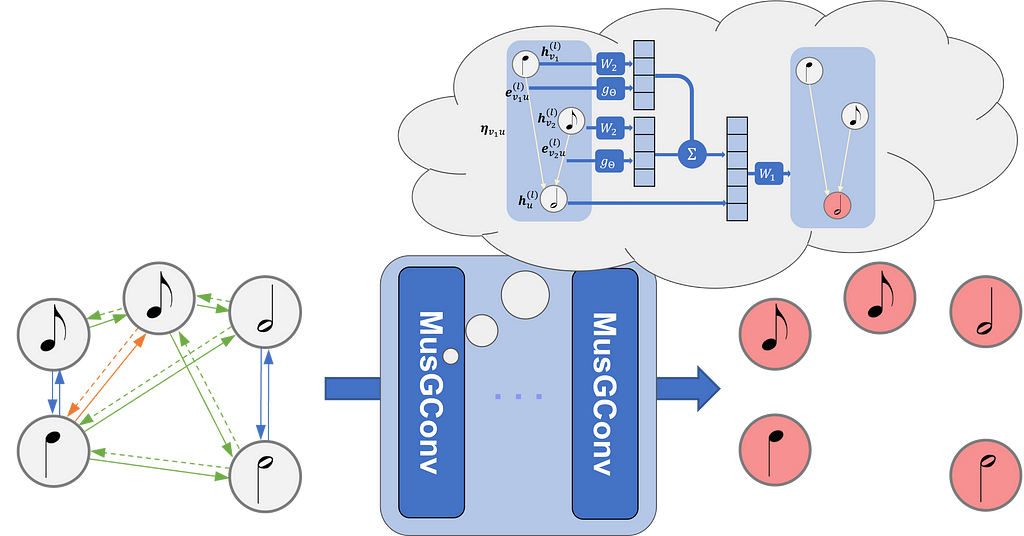

The GraphMuse Python library uses Graph Neural Networks for music analysis, connecting notes in a score to create a continuous graph. Built on PyTorch and PyTorch Geometric, GraphMuse transforms musical scores into graphs up to 300x faster than previous methods, revolutionizing music...

MIT researchers propose Diffusion Forcing, a new training technique that combines next-token and full-sequence diffusion models for flexible, reliable sequence generation. This method enhances AI decision-making, improves video quality, and aids robots in completing tasks by predicting future steps with varying noise...

Geoffrey Hinton and John Hopfield were awarded the 2024 Nobel Prize in Physics for pioneering artificial neural networks inspired by the brain. Their work revolutionized AI capabilities with memory storage and learning functions mimicking human...

AdaBoost training is deterministic, unaffected by data order. Results remain identical, a rarity in ML...

MIT CSAIL researchers have developed an AI-driven approach using graph neural networks to improve simulation accuracy by distributing data points more uniformly across space. Their method, Message-Passing Monte Carlo, enhances simulations in fields like robotics and finance, crucial for accurate...

Training computer vision models with Ultralytics' YOLOv8 is now easier using Python, CLI, or Google Colab. YOLOv8 is known for accuracy, speed, and flexibility, offering local-based or cloud-based training options, such as Google Colab for enhanced computation...

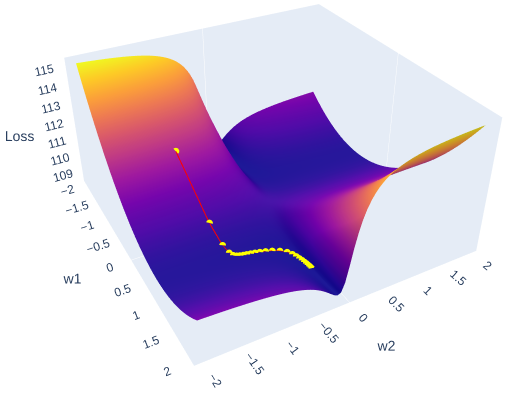

Exploring Neural Networks in Hydrometeorology: A unique approach to optimizing error surfaces in 3D using PyTorch. Learn how to visualize and interactively illustrate the steps of Stochastic Gradient Descent with plotly Python...

AI hosting platform Hugging Face hits 1 million AI model listings, offering customization for specialized tasks. CEO Delangue emphasizes the importance of tailored models for individual use-cases, highlighting the platform's...

AdaBoost is a powerful binary classification technique showcased in a demo for email spam detection. While AdaBoost doesn't require data normalization, it may be prone to model overfitting compared to newer algorithms like XGBoost and...

AI image generator Flux recreates handwriting, sparking ethical questions and emotional connections. A unique way to preserve personal memories and celebrate loved...

Implementing multi-class k-nearest neighbors classification from scratch using a synthetic dataset. Encoding and normalizing raw data for accurate predictions, with k=5 yielding the best...

Compress LLMs 10X without performance loss. Techniques like quantization, pruning, and knowledge distillation make powerful ML models more...

A comparison of kNN, LR, NN, and AB for binary classification revealed insights on predictive power, ease of training, and interpretability. Experiments with the UCI Email Spam Dataset showed LR and NN outperforming kNN and AB in...

Google and Tel Aviv University introduce GameNGen, an AI model simulating Doom using Stable Diffusion techniques. The neural network system could revolutionize real-time video game synthesis by predicting and generating graphics on the...

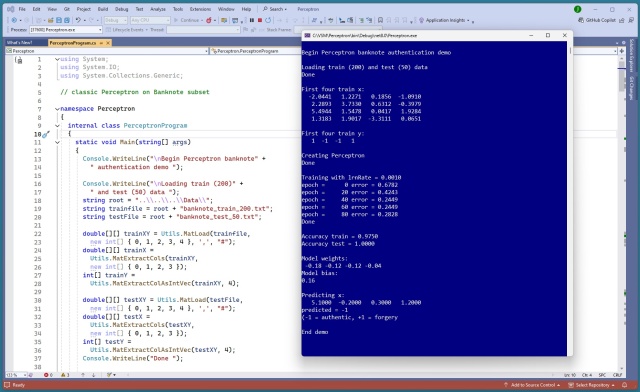

A classic Perceptron demo using the Banknote Authentication Dataset showcases simple binary classification. Training and testing data yield high accuracy in predicting authenticity, highlighting the foundational role of Perceptrons in neural...
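A minimal Python sketch of the classic perceptron learning rule, assuming labels encoded as -1 and +1; the demo's code and label encoding are not shown in this summary.

```python
def train_perceptron(X, y, lr=0.1, epochs=100):
    """Classic perceptron for labels in {-1, +1}; updates only on mistakes."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            activation = sum(wj * xj for wj, xj in zip(w, xi)) + b
            if yi * activation <= 0:  # misclassified or exactly on the boundary
                w = [wj + lr * yi * xj for wj, xj in zip(w, xi)]
                b += lr * yi
                mistakes += 1
        if mistakes == 0:  # a full clean pass: the data is separated
            break
    return w, b


def perceptron_predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else -1
```

On linearly separable data the loop stops once an entire pass makes no mistakes; on non-separable data it simply runs for the full number of epochs.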

Decoding ML job roles is key to interview success. Understanding spectrum of roles can refine strategy and boost...

Integrating Batch Normalization in a ViT architecture reduces training and inference times by over 60%, maintaining or improving accuracy. The modification involves replacing Layer Normalization with Batch Normalization in the encoder-only transformer...

AI can create images and sounds simultaneously, like corgis barking. Researchers at the University of Michigan explore this groundbreaking...

Summary: Learn how to build a 124M-parameter GPT-2 model with JAX for efficient training, compare it with PyTorch, and explore key JAX features like JIT compilation and autograd. Reproduce NanoGPT with JAX and compare multi-GPU training throughput (tokens/sec) between PyTorch and...

GraphStorm is a low-code GML framework for building ML solutions on enterprise-scale graphs in days. Version 0.3 adds multi-task learning support for node classification and link prediction...

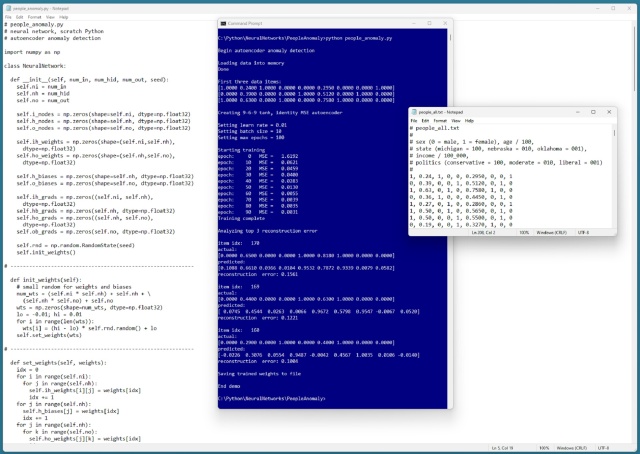

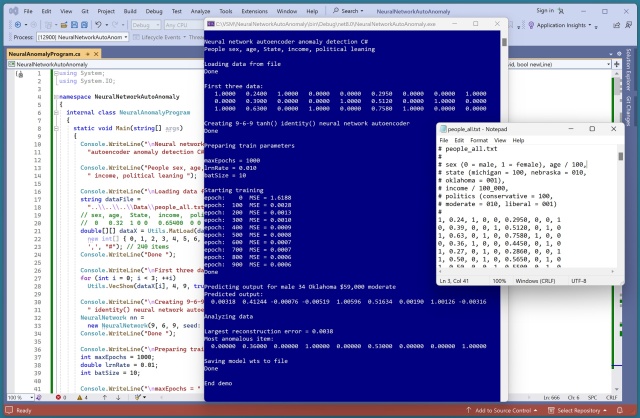

Implementing a neural network autoencoder for anomaly detection involves normalizing and encoding data to predict input accurately. The process includes creating a network with specific input, output, and hidden nodes, essential for avoiding overfitting or...

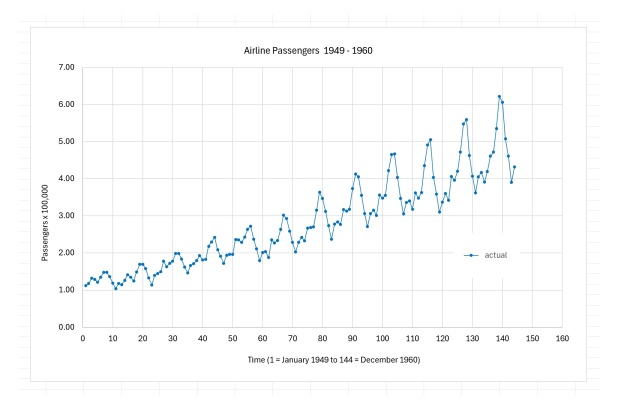

Amazon Forecast, launched in 2019, offers accurate time series forecasts. SageMaker Canvas provides faster model building, cost-effective predictions, and enhanced transparency for ML models, including time series...

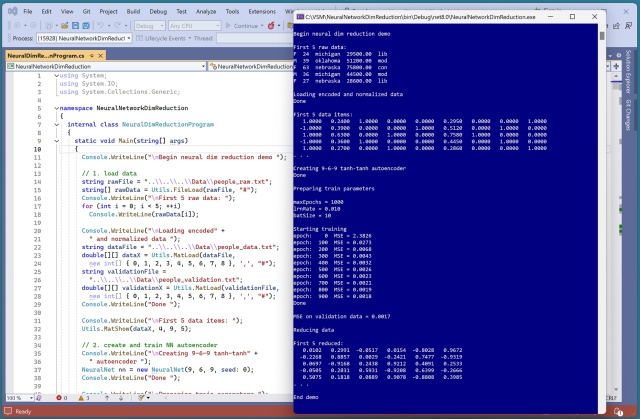

Learn about dimensionality reduction using a neural autoencoder in C#, from Microsoft Visual Studio Magazine. The reduced data can be used for visualization, machine learning, and data cleaning; the article also draws a comparison to the aesthetics of building scale airplane...

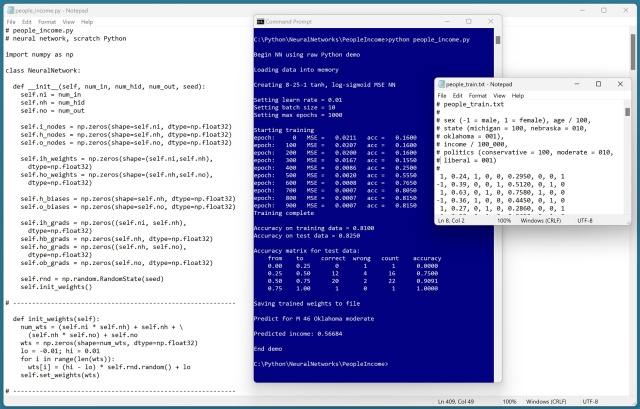

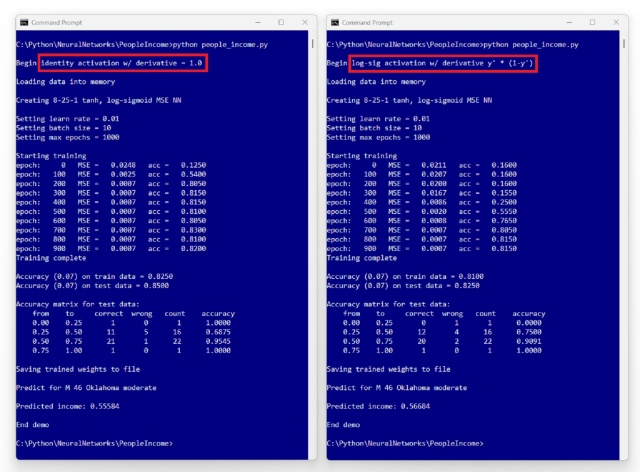

Neural network implementation for predicting income based on demographic data is complex but rewarding. Data encoding, training process, and network creation are crucial steps in achieving accurate...

MIT CSAIL researchers developed MAIA, an automated agent that interprets AI vision models, labels components, cleans classifiers, and detects biases. MAIA's flexibility allows it to answer various interpretability queries and design experiments on the...

Recent papers explore out-of-distribution generalization on graph data, addressing the challenge through invariance and causal intervention. Graph machine learning's importance lies in its diverse applications and representation of complex...

Neural networks enhance robot design but pose safety challenges. MIT researchers develop new techniques to ensure stability, enabling safer deployment of AI-controlled robots and...

Learn about feature engineering and constructing an MLP model for time series forecasting. Discover how to effectively engineer features and utilize a Multi-Layer Perceptron model for accurate...

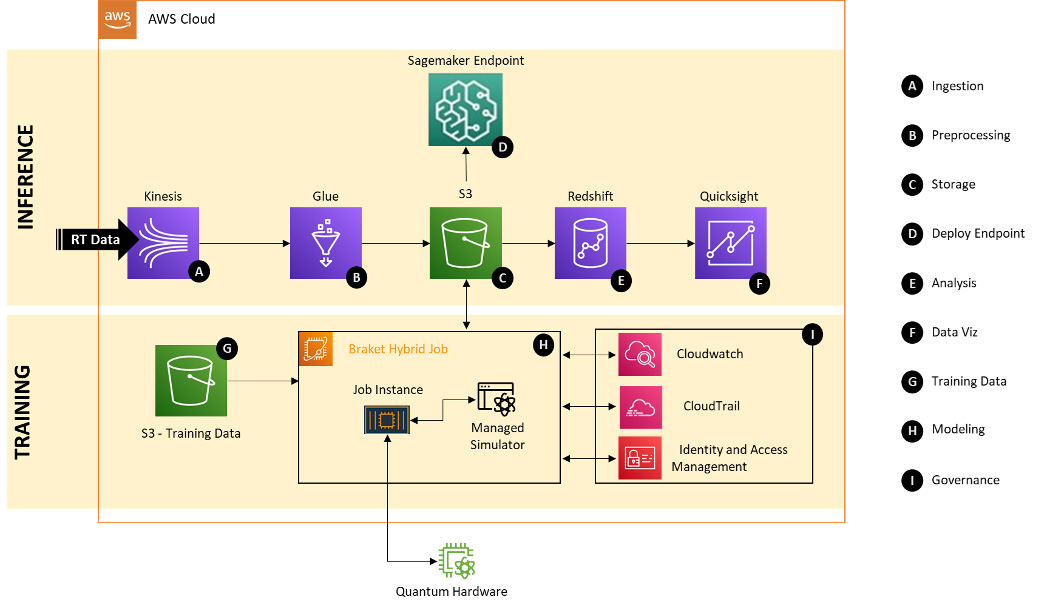

Machine learning algorithms aid in real-time fraud detection for online transactions, reducing financial risks. Deloitte showcases quantum computing's potential to enhance fraud detection in digital payment platforms through a hybrid quantum neural network solution built with Amazon Braket. Quantum computing promises faster, more accurate optimizations in financial systems, attracting early...

Researchers from MIT developed a new machine-learning framework to predict phonon dispersion relations 1,000 times faster than other AI-based techniques, aiding in designing more efficient power generation systems and microelectronics. This breakthrough could potentially be 1 million times faster than traditional non-AI approaches, addressing the challenge of managing heat for increased...

AI Recommendation Systems excel at suggesting similar products, but struggle with complementary ones. The zeroCPR framework offers an affordable solution for discovering complementary products using LLM...

Rainbow, the breakthrough DQN "Megazord," combines six extensions of DQN into a single agent for stronger performance in deep reinforcement learning. The Stoix library breaks down Rainbow's components, including the DQN algorithm and neural network...

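For neural network regression models, use a logistic-sigmoid() output activation when the target is constrained to the range (0, 1) and identity() when the output is unconstrained. The key difference is the y'(1 - y') term that the sigmoid contributes to the output-layer gradient...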
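The effect of that y'(1 - y') term on the output-node gradient can be shown directly. This sketch assumes squared error E = 0.5 * (y' - target)^2 and a single output node; `output_delta` is a hypothetical helper name.

```python
import math

def logistic_sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def output_delta(z, target, activation):
    """dE/dz at one output node for squared error E = 0.5 * (y' - target)**2.

    z is the node's pre-activation sum; y' is its activated output.
    """
    if activation == "sigmoid":
        y = logistic_sigmoid(z)
        # Chain rule picks up the sigmoid derivative y' * (1 - y')
        return (y - target) * y * (1.0 - y)
    if activation == "identity":
        y = z
        # Identity derivative is 1, so no extra term appears
        return y - target
    raise ValueError("activation must be 'sigmoid' or 'identity'")

print(output_delta(0.0, 1.0, "sigmoid"))   # -0.125
print(output_delta(2.0, 0.5, "identity"))  # 1.5
```

Because y'(1 - y') is at most 0.25, the sigmoid version takes smaller gradient steps, and its output can never leave (0, 1).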

TDS celebrates milestone with engaging articles on cutting-edge computer vision and object detection techniques. Highlights include object counting in videos, AI player tracking in ice hockey, and a crash course on autonomous driving...

The "MEDUSA: Simple LLM Inference Acceleration Framework with Multiple Decoding Heads" paper builds on the idea of speculative decoding to speed up large language models, achieving a 2x-3x speedup on existing hardware. By appending multiple decoding heads to the model, Medusa can predict several tokens in one forward pass, improving efficiency and customer experience for...

LSTMs, introduced in 1997, are making a comeback, with xLSTMs proposed as a potential rival to Transformer-based LLMs in deep learning. Their ability to remember and forget information over time intervals sets LSTMs apart from vanilla RNNs, making them a valuable tool in language...

MusGConv introduces a perception-inspired graph convolution block for processing music score data, improving efficiency and performance in music understanding tasks. Traditional MIR approaches are enhanced by MusGConv, which models musical scores as graphs to capture complex, multi-dimensional music...

Implementing a neural network from scratch to predict political leaning, using normalized data and one-hot encoding. The article explores the complexity of neural networks with raw Python code and NumPy, creating a classifier with specified input, hidden, and output...

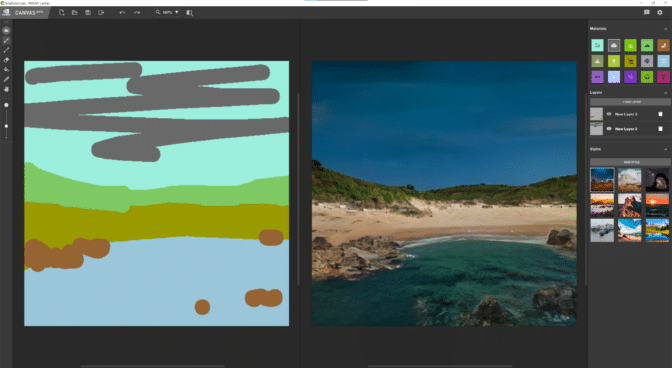

Generative AI, from NVIDIA's GauGAN to apps like ChatGPT, is transforming the field. GANs use neural networks to create realistic images, inspiring creativity and...

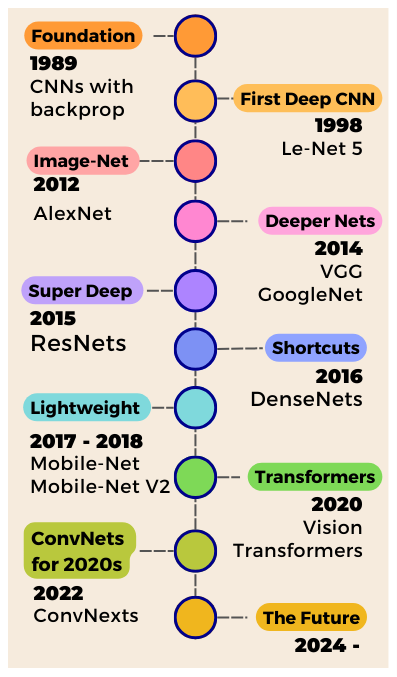

Yann LeCun's 1989 breakthrough with Convolutional Neural Networks preserved spatial image data, revolutionizing Computer Vision research. CNNs use filters to extract feature maps, stacking layers to create powerful image...

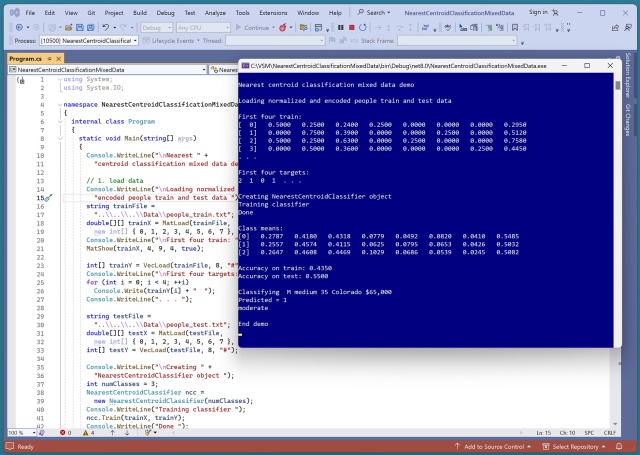

An article in Microsoft Visual Studio Magazine presents nearest centroid classification for numeric data. The technique is simple and interpretable but less powerful than other techniques; the demo achieves high accuracy in predicting penguin...
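Nearest centroid classification is simple enough to sketch in full: compute one mean vector per class, then assign each new item to the class with the closest mean. This minimal version assumes numeric features and Euclidean distance; the function names and the toy data are hypothetical, not the penguin demo.

```python
import math

def fit_centroids(xs, ys):
    """Mean feature vector for each class label."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def predict_centroid(centroids, x):
    """Label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda y: math.dist(centroids[y], x))

# Hypothetical two-class toy data
xs = [[1.0, 2.0], [2.0, 1.0], [8.0, 9.0], [9.0, 8.0]]
ys = ["A", "A", "B", "B"]
centroids = fit_centroids(xs, ys)
print(centroids)                              # {'A': [1.5, 1.5], 'B': [8.5, 8.5]}
print(predict_centroid(centroids, [2.0, 2.0]))  # A
```

The whole model is the centroid table, which is what makes the technique interpretable, and also why it struggles when a class does not form a single compact cluster.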

Researchers from UC Santa Cruz, UC Davis, LuxiTech, and Soochow University have developed an AI language model without matrix multiplication, potentially reducing environmental impact and operational costs of AI systems. Nvidia's dominance in data center GPUs, used in AI systems like ChatGPT and Google Gemini, may be challenged by this new approach using custom-programmed FPGA...

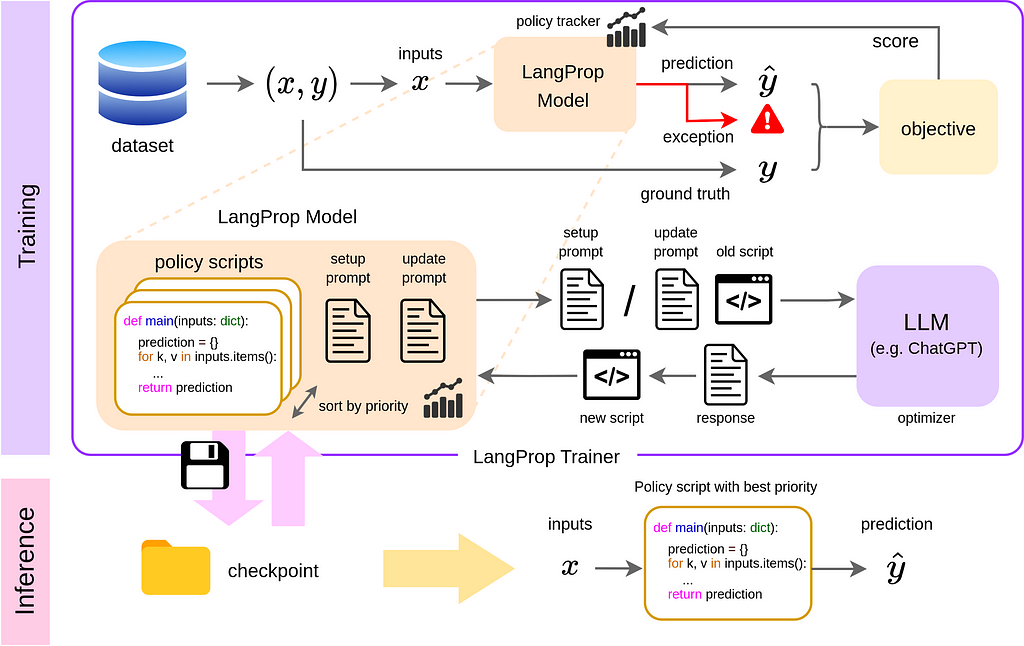

ChatGPT powers autonomous driving research at Wayve using LangProp framework for code optimization without fine-tuning neural networks. LangProp presented at ICLR workshop showcases LLM's potential to enhance driving through code generation and...

Dimensionality reduction using PCA and a neural autoencoder in C#. An autoencoder can reduce mixed numeric and categorical data, while PCA handles only numeric data. Autoencoders are useful for data visualization, ML, data cleaning, and anomaly...
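For comparison with the autoencoder approach, the PCA side can be sketched with power iteration, which finds the top principal direction of the covariance matrix. This is a minimal sketch assuming purely numeric data (matching the limitation noted above) and a starting vector that is not orthogonal to the top eigenvector; the function names are hypothetical.

```python
import math

def first_principal_component(rows, iters=100):
    """Return column means and the unit-length top principal direction."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d)
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration converges to the eigenvector with the largest eigenvalue
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return means, v

def reduce_to_1d(rows, means, v):
    """Project each centered row onto the principal direction."""
    return [sum((r[j] - means[j]) * v[j] for j in range(len(v))) for r in rows]

# Hypothetical data lying on the line y = 2x
rows = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
means, v = first_principal_component(rows)
print(v)  # approximately [0.447, 0.894], i.e. (1, 2) / sqrt(5)
print(reduce_to_1d(rows, means, v))
```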

Nearest centroid classification proved ineffective for complex predictions, scoring only 55% accuracy on test data. It serves best as a baseline for comparison with more powerful classification methods like neural...

AI Agent Capabilities Engineering Framework introduces a mental model for designing AI agents based on cognitive and behavioral sciences. The framework categorizes capabilities into Perceiving, Thinking, Doing, and Adapting, aiming to equip AI agents for complex tasks with human-like...

Named entity recognition (NER) extracts entities from text and has traditionally required fine-tuning. New large language models, such as those available through Amazon Bedrock, enable zero-shot NER, revolutionizing entity...

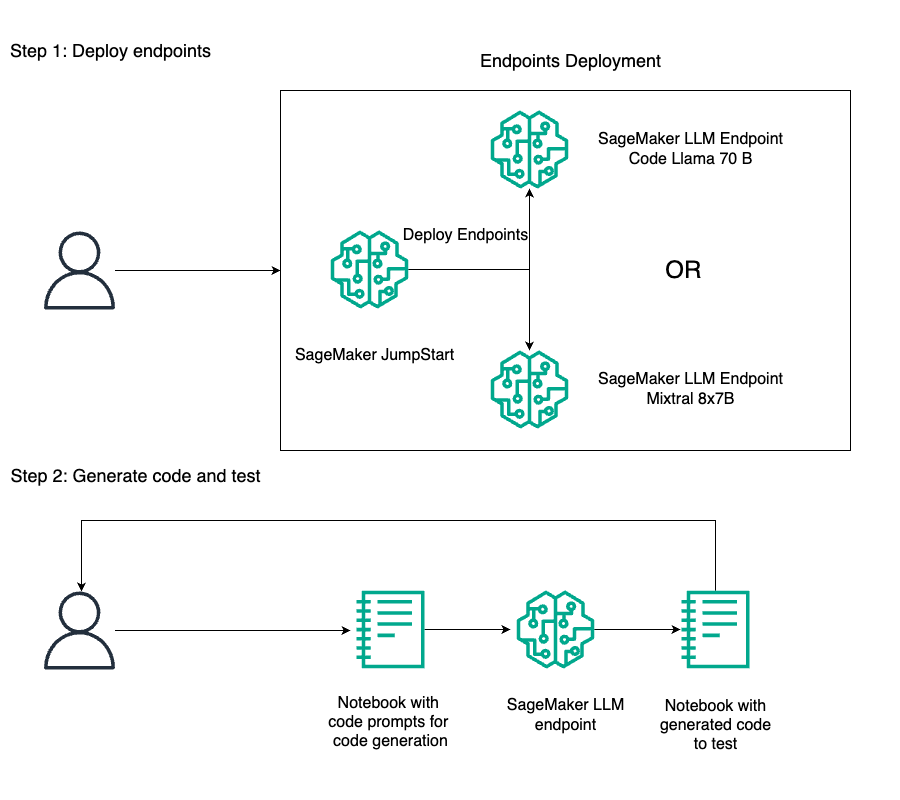

Code Llama 70B and Mixtral 8x7B are cutting-edge large language models for code generation and understanding, each with billions of parameters. Developed by Meta and Mistral AI respectively, these models offer strong performance, natural language interaction, and long context support, revolutionizing AI-assisted...

Explore domain adaptation for LLMs in this blog series. Learn about fine-tuning to expand models' capabilities and improve...

Anthropic explores extracting interpretable features using sparse autoencoders, aiming to break down 'polysemanticity' in neural networks. Prof. Tom Yeh's hand-drawn illustrations clearly explain the workings of these...

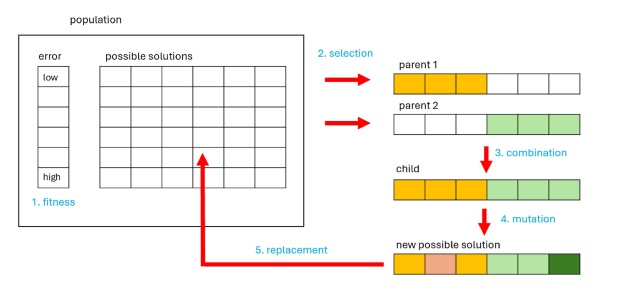

Evolutionary Algorithms (EAs) have limited math foundation, leading to lower prestige and limited research topics compared to classical algorithms. EAs face barriers due to simplicity, resulting in fewer rigorous studies and less exploration...

Major tech companies, including Google, Microsoft, and Meta, have formed the UALink group to develop a new interconnect standard for AI accelerator chips, challenging Nvidia's NVLink dominance. UALink aims to create an open standard for AI hardware, enabling collaboration and breaking free from proprietary ecosystems like...

Anthropic's recent paper delves into Mechanistic Interpretability of Large Language Models, revealing how neural networks represent meaningful concepts via directions in activation space. The study provides evidence that interpretable features correlate with specific directions, impacting the output of the...

Large language models like GPT and BERT rely on the Transformer architecture and self-attention mechanism to create contextually rich embeddings, revolutionizing NLP. Static embeddings like word2vec fall short in capturing contextual information, highlighting the importance of dynamic embeddings in language...
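The mechanism that makes these embeddings contextual can be sketched as single-head scaled dot-product attention: each token's output is a weighted average of all value vectors, with weights from query-key similarity. This sketch assumes queries, keys, and values are passed in directly; real models compute them with learned projection matrices, which are omitted here, and the function names are hypothetical.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head scaled dot-product attention over a short sequence.

    Each output row is a weighted average of the value vectors, so every
    token's new embedding depends on the other tokens in its context.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Identical keys give uniform weights, so each output is the mean value vector
print(self_attention([[1.0, 0.0], [0.0, 1.0]],
                     [[1.0, 1.0], [1.0, 1.0]],
                     [[1.0, 0.0], [3.0, 2.0]]))  # [[2.0, 1.0], [2.0, 1.0]]
```

A static embedding such as word2vec would return the same vector for a token regardless of its neighbors; here the output changes whenever the other keys and values change.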

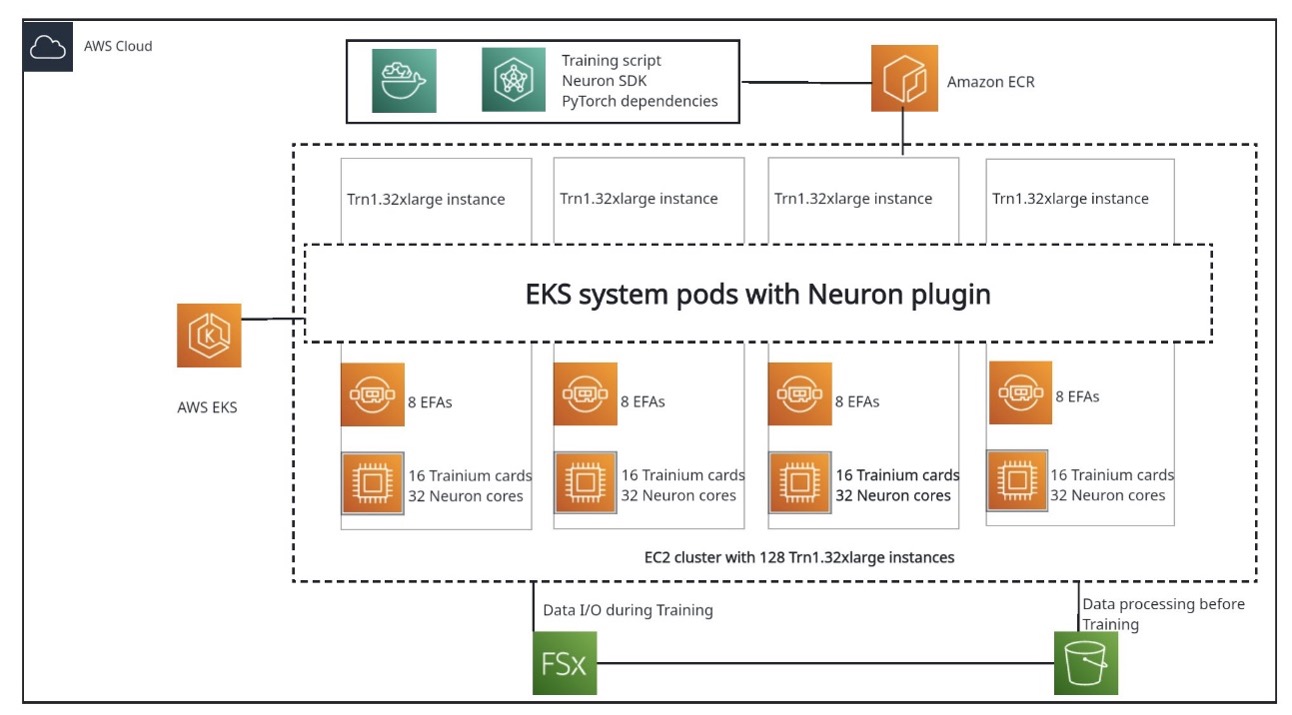

Meta AI's Llama, a popular large language model, faces challenges in training but can achieve comparable quality with proper scaling and best practices on AWS Trainium. Distributed training across 100+ nodes is complex, but Trainium clusters offer cost savings, efficient recovery, and improved stability for LLM...

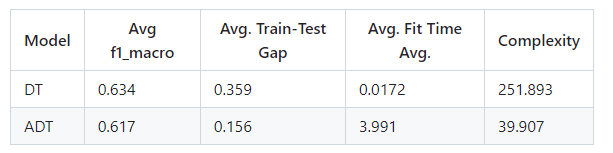

Additive Decision Trees offer a more accurate and interpretable alternative to standard decision trees. They address limitations such as lack of interpretability and stability, providing a valuable tool for high-stakes and audited...

Mixture Density Networks (MDNs) predict a full distribution of possible outcomes rather than just an average. Bishop's classic 1994 paper introduced MDNs, transforming neural networks into uncertainty...
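An MDN's output layer emits mixture weights, means, and standard deviations, and training minimizes the negative log-likelihood of the target under that mixture. This sketch shows only that loss for a scalar target, assuming the network outputs are already valid (weights sum to 1, standard deviations positive); the function name is hypothetical.

```python
import math

def mdn_negative_log_likelihood(pis, mus, sigmas, y):
    """NLL of scalar target y under a 1-D Gaussian mixture.

    pis: mixing weights (sum to 1), mus: means, sigmas: std devs (> 0).
    In an MDN these three lists are produced by the network's output layer.
    """
    log_terms = []
    for pi, mu, sigma in zip(pis, mus, sigmas):
        log_gauss = (-0.5 * ((y - mu) / sigma) ** 2
                     - math.log(sigma) - 0.5 * math.log(2 * math.pi))
        log_terms.append(math.log(pi) + log_gauss)
    # log-sum-exp for numerical stability
    m = max(log_terms)
    return -(m + math.log(sum(math.exp(t - m) for t in log_terms)))

# A bimodal prediction with modes at -2 and 2
pis, mus, sigmas = [0.5, 0.5], [-2.0, 2.0], [0.5, 0.5]
print(mdn_negative_log_likelihood(pis, mus, sigmas, 2.0))  # low: near a mode
print(mdn_negative_log_likelihood(pis, mus, sigmas, 0.0))  # high: the "average" is unlikely
```

The example shows the point of the approach: the mean of the two modes (0.0) is a poor prediction, which a network trained only on averages would miss.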

Interpretable models like XGBoost, CatBoost, and LGBM offer transparency, explaining predictions clearly. Explainable AI (XAI) methods provide insights, but may not match the accuracy of black-box...

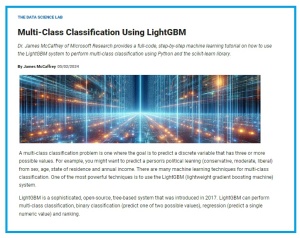

Article on LightGBM for multi-class classification in Microsoft Visual Studio Magazine demonstrates its power and ease of use, with insights on parameter optimization and its competitive edge in recent challenges. LightGBM, a tree-based system, outperforms in contests, making it a top choice for accurate and efficient multi-class classification...

Hyperparameters in ML impact model performance significantly. Automated hyperparameter optimization can enhance model...

MIT's Jonathan Ragan-Kelley pioneers efficient programming languages for complex hardware, transforming photo editing and AI applications. His work focuses on optimizing programs for specialized computing units, unlocking maximum computational performance and...

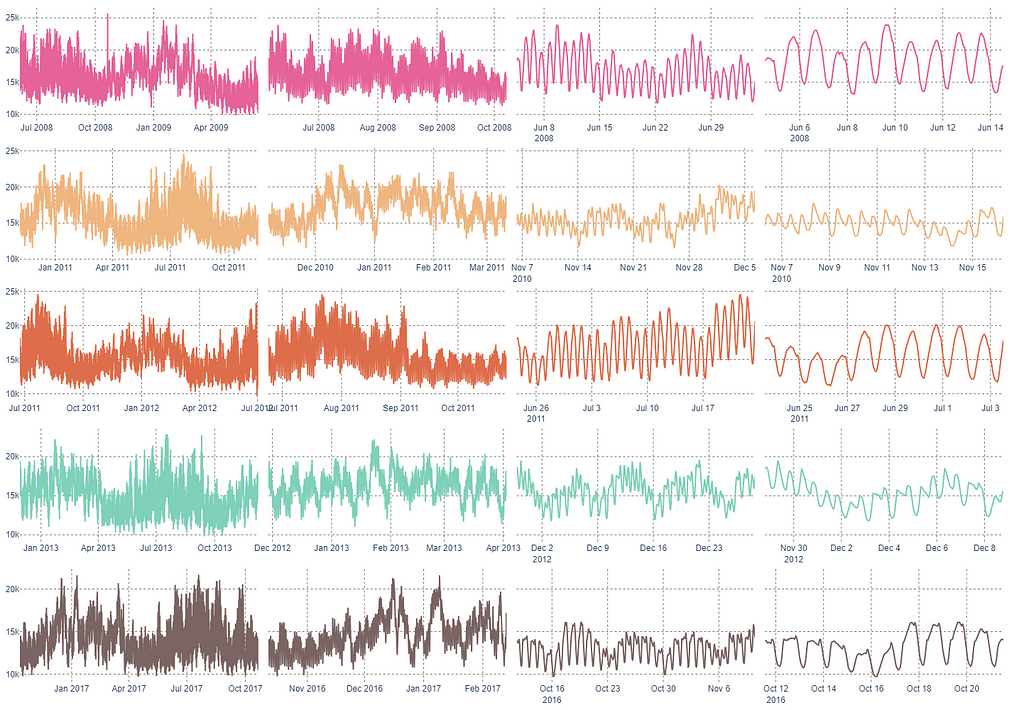

Time series regression is challenging, with various techniques available. Recent research explores using neural networks like transformers for forecasting...

MIT CSAIL researchers developed neurosymbolic framework LILO, pairing large language models with algorithmic refactoring to create abstractions for code synthesis. LILO's emphasis on natural language allows it to perform tasks requiring human-like knowledge, outperforming standalone LLMs and previous...

NVIDIA's GTC session on the transformer neural network, which revolutionized deep learning: its authors reflect on their groundbreaking research and on shaping the future of generative...

The "Outrageously Large Neural Networks" paper introduces the Sparsely-Gated Mixture-of-Experts layer for improved efficiency and quality in neural networks. A trainable gating network routes each token to a small subset of experts, reducing computational cost and enhancing...
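The routing idea can be sketched as top-k gating: compute one gate logit per expert, keep only the k largest, softmax over those, and mix the selected experts' outputs. This minimal sketch assumes a linear gating network and scalar-valued experts, and omits the paper's noise term and load-balancing loss; all names are hypothetical.

```python
import math

def top_k_gates(logits, k):
    """Softmax over only the k largest gate logits; all other experts get 0."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}

def moe_layer(x, experts, gate_weights, k=2):
    """Route input x to k experts and mix their outputs by gate value."""
    # Linear gating network: one logit per expert (noise term omitted)
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    gates = top_k_gates(logits, k)
    # Only the selected experts are evaluated, which is where compute is saved
    return sum(g * experts[i](x) for i, g in gates.items())

# Hypothetical experts and gate weights; logits for x = [1.0] are [1, 3, 2]
experts = [lambda x: 10.0, lambda x: 20.0, lambda x: 30.0]
print(moe_layer([1.0], experts, [[1.0], [3.0], [2.0]], k=2))
```

With k = 2 of 3 experts the savings are small, but the same routing lets the paper scale to thousands of experts while each token touches only a few.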

Recent advancements in AI, including GenAI and LLMs, are revolutionizing industries with enhanced productivity and capabilities. Vision Transformer (ViT) architectures are reshaping computer vision, offering superior performance and scalability compared to traditional...

In 1928, Alexander Fleming discovered penicillin by accident, revolutionizing medicine. Could Large Language Models be the unexpected answer to autonomous driving? Let's explore the potential impact in this...

Graph Neural Networks (GNNs) model interconnected data like molecular structures and social networks. GNNs combined with sequential models create Spatio-Temporal GNNs, unlocking deeper comprehension and innovative applications in...

ThirdAI Corp. pioneers cost-effective deep learning on standard CPUs, challenging the need for expensive GPU accelerators. AWS Graviton3 shows promising speedups for training neural models, revolutionizing AI...

MIT researchers developed a deep-learning model to decongest robotic warehouses, improving efficiency by nearly four times. Their innovative approach could revolutionize complex planning tasks beyond warehouse...

Filmmaker Tyler Perry halts $800 million studio expansion due to AI video generator Sora's capabilities. OpenAI's Sora stuns with text-to-video synthesis, surpassing other AI...

The Direct Preference Optimization (DPO) paper introduces a new way to fine-tune foundation models on human preference data, delivering strong performance gains with a simpler training pipeline. The method removes the need for a separate reward model, revolutionizing the way LLMs are...
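The DPO objective for one preference pair can be written directly from log-probabilities, with no reward model involved: -log sigmoid(beta * margin), where the margin compares how much the policy, relative to a frozen reference model, prefers the chosen response over the rejected one. This sketch assumes the summed token log-probabilities are already computed; the function name and the default `beta=0.1` are illustrative choices.

```python
import math

def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair, given summed token log-probabilities.

    policy_*: log pi_theta(y|x) under the model being fine-tuned.
    ref_*:    log pi_ref(y|x) under the frozen reference model.
    """
    # Implicit reward margin: how much more the policy prefers the chosen
    # response over the rejected one, relative to the reference model.
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    z = beta * margin
    # -log(sigmoid(z)) = log(1 + exp(-z)), computed without overflow
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))

print(dpo_loss(-10.0, -12.0, -10.0, -12.0))  # log(2): policy matches reference
print(dpo_loss(-5.0, -20.0, -10.0, -12.0))   # below log(2): chosen favored more
```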

NVIDIA's GTC 2024 in San Jose promises a crucible of innovation with 900+ sessions and 300 exhibits, featuring industry giants like Amazon, Ford, Pixar, and more. Don't miss the Transforming AI Panel with the original architects of the transformer neural network, plus networking events and cutting-edge exhibits to stay ahead in...

Google introduces Gemma, a new family of open-weight AI language models in 2B and 7B parameter sizes. Gemma models can run locally and are inspired by Google's more powerful Gemini...

An autoencoder predicts input data, flagging anomalies. Implemented in C#, it detected a liberal male from Nebraska with $53,000 income as most anomalous. Model trained with 9-6-9 architecture, revealing insights on neural network...

The article discusses the evolution of GPT models, specifically focusing on GPT-2's improvements over GPT-1, including its larger size and multitask learning capabilities. Understanding the concepts behind GPT-1 is crucial for recognizing the working principles of more advanced models like ChatGPT or...

This article explores three key encoding techniques for machine learning: label encoding, one-hot encoding, and target encoding. It provides a beginner-friendly guide with pros, cons, and Python code examples to help data scientists understand and implement these techniques...
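The three encodings can be contrasted on one small categorical column. This is a plain-Python sketch with hypothetical function names and toy data; libraries such as scikit-learn provide production versions.

```python
def label_encode(values):
    """Map each category to an integer (sorted order, for reproducibility)."""
    mapping = {cat: i for i, cat in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping

def one_hot_encode(values):
    """One binary column per category."""
    cats = sorted(set(values))
    return [[1 if v == c else 0 for c in cats] for v in values], cats

def target_encode(values, targets):
    """Replace each category with the mean target observed for it."""
    sums, counts = {}, {}
    for v, t in zip(values, targets):
        sums[v] = sums.get(v, 0.0) + t
        counts[v] = counts.get(v, 0) + 1
    means = {v: sums[v] / counts[v] for v in sums}
    return [means[v] for v in values], means

colors = ["red", "green", "blue", "green"]
targets = [1, 0, 1, 1]
print(label_encode(colors)[0])             # [2, 1, 0, 1]
print(one_hot_encode(colors)[0])           # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
print(target_encode(colors, targets)[0])   # [1.0, 0.5, 1.0, 0.5]
```

Label encoding imposes an arbitrary order, one-hot encoding avoids that at the cost of extra columns, and target encoding is compact but leaks the label if computed on the same rows used for training; in practice it is usually fitted on held-out folds or smoothed.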

The pharmaceutical industry generated $550 billion in US revenue in 2021, with a projected cost of $384 billion for pharmacovigilance activities by 2022. To address the challenges of monitoring adverse events, a machine learning-driven solution using Amazon SageMaker and Hugging Face's BioBERT model is developed, providing automated detection from various data...

MIT PhD student Behrooz Tahmasebi and advisor Stefanie Jegelka have modified Weyl's law to incorporate symmetry in assessing the complexity of data, potentially enhancing machine learning. Their work, presented at the Neural Information Processing Systems conference, demonstrates that models satisfying symmetries can produce predictions with smaller errors and require less training data...

MIT PhD students are using game theory to improve the accuracy and dependability of natural language models, aiming to align the model's confidence with its accuracy. By recasting language generation as a two-player game, they have developed a system that encourages truthful and reliable answers while reducing...

MIT scientists have developed two machine-learning models, the "PRISM" neural network and a logistic regression model, for early detection of pancreatic cancer. These models outperformed current methods, detecting 35% of cases compared to the standard 10% detection...

MIT researchers have developed an automated interpretability agent (AIA) that uses AI models to explain the behavior of neural networks, offering intuitive descriptions and code reproductions. The AIA actively participates in hypothesis formation, experimental testing, and iterative learning, refining its understanding of other systems in real...

Researchers at MIT and IBM have developed a new method called "physics-enhanced deep surrogate" (PEDS) that combines a low-fidelity physics simulator with a neural network generator to create data-driven surrogate models for complex physical systems. The PEDS method is affordable, efficient, and reduces the training data needed by at least a factor of 100 while achieving a target error of 5...

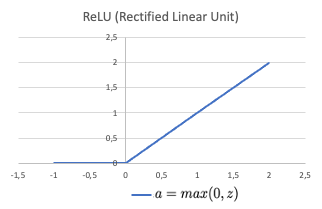

A neural network with one hidden layer of ReLU units can approximate any continuous function on a bounded interval, given enough hidden units, making it a powerful function approximator. The network can represent Continuous PieceWise Linear (CPWL) functions and approximate Continuous Curve (CC) functions by adding a new ReLU at each transition point to increase or decrease the...
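The transition-point construction can be made concrete for a 1-D CPWL function: place one hidden ReLU at each breakpoint and set its output weight to the change in slope there. This sketch assumes sorted (x, y) points with distinct x values; `cpwl_from_points` is a hypothetical helper name.

```python
def relu(z):
    return max(0.0, z)

def cpwl_from_points(points):
    """Build f(x) = y0 + sum_i c_i * relu(x - x_i) through sorted (x, y) points.

    Each hidden ReLU unit switches on at one breakpoint x_i, and its output
    weight c_i is the change in slope there. f interpolates the points on
    [x_0, x_last] and continues the last segment's slope beyond x_last.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    slopes = [(ys[i + 1] - ys[i]) / (xs[i + 1] - xs[i]) for i in range(len(xs) - 1)]
    # Output weights: first slope, then each slope change
    coeffs = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, len(slopes))]
    breakpoints = xs[:-1]
    y0 = ys[0]

    def f(x):
        return y0 + sum(c * relu(x - b) for c, b in zip(coeffs, breakpoints))
    return f

f = cpwl_from_points([(0, 0), (1, 1), (2, 0), (3, 2)])
print([f(x) for x in (0, 0.5, 1, 2, 2.5, 3)])  # [0.0, 0.5, 1.0, 0.0, 1.0, 2.0]
```

Approximating a smooth curve then amounts to sampling more points along it, which adds one hidden unit per extra breakpoint.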

The rise of tools like AutoAI may diminish the importance of traditional machine learning skills, but a deep understanding of the underlying principles of ML will still be in demand. This article delves into the mathematical foundations of Recurrent Neural Networks (RNNs) and explores their use in capturing sequential patterns in time series...

Recent advancements in artificial intelligence have enabled models to mimic human-like capabilities in handling images and text, but the lack of explainability poses risks and limits adoption. Critical domains like healthcare and finance heavily rely on tabular data, emphasizing the need for transparent decision-making...

In this article, the authors discuss the theory and architectures of Graph Neural Networks (GNNs) and highlight the emergence of Graph Transformers as a trend in graph ML. They explore the connection between MPNNs and Transformers, showing that an MPNN with a virtual node can simulate a Transformer, and discuss the advantages and limitations of these architectures in terms of...

Computer vision has evolved from small pixelated images to generating high-resolution images from descriptions, with smaller models improving performance in areas like smartphone photography and autonomous vehicles. The ResNet model has dominated computer vision for nearly eight years, but challengers like Vision Transformer (ViT) are emerging, showing state-of-the-art performance in computer...

Deep learning (DL) has advanced rapidly through Convolutional Neural Networks (CNNs) and generative AI, and Batch Normalization 2D (BN2D) has emerged as a key technique for improving training convergence and inference performance. BN2D normalizes each channel of a batch of 2D feature maps, reducing internal covariate shift and enabling faster convergence, so the network can focus on learning complex...
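The per-channel normalization can be sketched for a batch shaped [N][C][H][W]. This minimal version covers training mode only: it uses the batch's own mean and biased variance per channel and omits the running averages a framework keeps for inference. The function name is hypothetical.

```python
import math

def batch_norm_2d(x, gamma, beta, eps=1e-5):
    """Training-mode BN over a batch shaped [N][C][H][W].

    Each channel c is normalized with the mean and (biased) variance of all
    N * H * W values in that channel, then scaled by gamma[c] and shifted by beta[c].
    """
    n, c_count = len(x), len(x[0])
    # Copy the input's nested shape; values are overwritten below
    out = [[[row[:] for row in x[i][c]] for c in range(c_count)] for i in range(n)]
    for c in range(c_count):
        vals = [v for i in range(n) for row in x[i][c] for v in row]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            for h, row in enumerate(x[i][c]):
                for w, v in enumerate(row):
                    out[i][c][h][w] = gamma[c] * (v - mean) * inv_std + beta[c]
    return out

# Hypothetical batch: N=2 images, C=2 channels, 2x2 pixels each
x = [[[[1, 2], [3, 4]], [[10, 10], [10, 20]]],
     [[[5, 6], [7, 8]], [[0, 0], [0, 10]]]]
y = batch_norm_2d(x, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

After normalization each channel has mean close to 0 and variance close to 1 across the batch; the learnable `gamma` and `beta` let the network undo that scaling where it helps.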

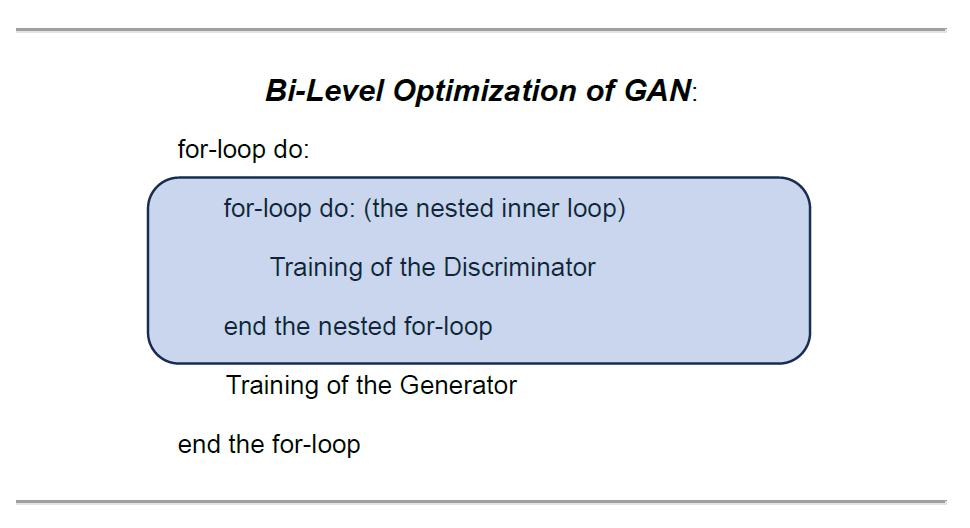

Generative Adversarial Networks (GANs) have gained attention for their ability to generate realistic synthetic data, but also for their misuse in creating deepfakes. A GAN pairs a generator network with a discriminator (adversarial) network and trains them toward opposing objectives through a two-player minimax optimization...
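The two opposing objectives can be written as losses over the discriminator's output probabilities. This sketch assumes the discriminator outputs are already computed and strictly between 0 and 1; for the generator it uses the commonly used non-saturating form rather than directly minimizing log(1 - D(G(z))). Function names are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0.

    d_real, d_fake: discriminator probabilities for real / generated samples.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: push D(G(z)) toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# When the discriminator cannot tell real from fake, it outputs 0.5 everywhere
print(discriminator_loss([0.5], [0.5]))  # 2 * log(2)
print(generator_loss([0.5]))             # log(2)
```

Each network lowers its own loss only by raising the other's, which is the contrasting-objectives structure described above.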

The PGA TOUR is developing a next-generation ball position tracking system using computer vision and machine learning techniques to locate golf balls on the putting green. The system, designed by the Amazon Generative AI Innovation Center, successfully tracks the ball's position and predicts its resting...

Disruptive testing of neural networks and ML architectures increases robustness. Ablation testing identifies critical components, reduces complexity, and improves fault tolerance. There are three types of ablation tests: neuronal, functional, and input...

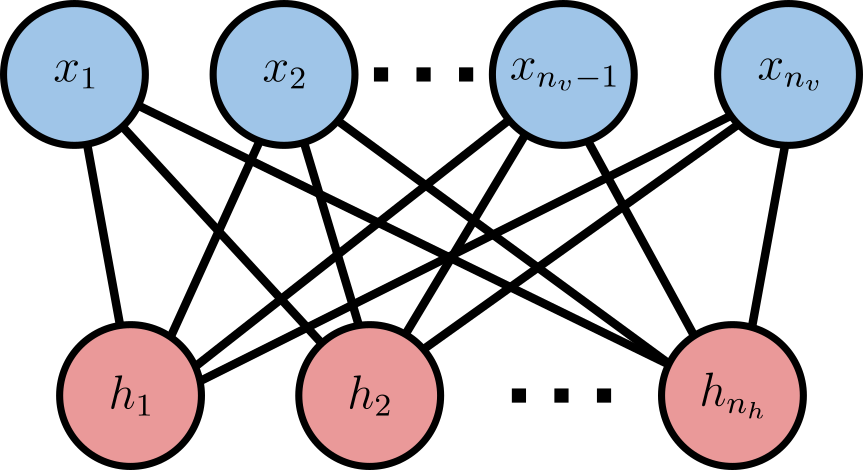

In the early '00s, Geoff Hinton introduced the contrastive divergence algorithm, allowing the training of the restricted Boltzmann machine. Harmoniums, or restricted Boltzmann machines, are neural networks operating on binary data, with visible and hidden units, and are useful for modeling discrete...

This article explores acceleration techniques in neural networks, emphasizing the need for faster training as deep learning models grow more complex. It introduces gradient descent and highlights the limitations of its slow convergence rate, then presents Momentum, an optimization algorithm that uses an exponentially weighted moving average of past gradients to achieve faster...
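The Momentum update can be sketched in its exponential-moving-average form: keep a running average of gradients and step along it instead of the raw gradient. Some formulations drop the (1 - beta) factor and fold it into the learning rate; this sketch follows the EMA form, and the function name, hyperparameters, and test function are hypothetical choices.

```python
def momentum_descent(grad, x0, lr=0.1, beta=0.9, steps=500):
    """Gradient descent that steps along an exponentially weighted moving
    average of past gradients instead of the raw gradient."""
    x = list(x0)
    v = [0.0] * len(x)
    for _ in range(steps):
        g = grad(x)
        # EMA of gradients: recent gradients weigh most, older ones decay by beta
        v = [beta * vi + (1.0 - beta) * gi for vi, gi in zip(v, g)]
        x = [xi - lr * vi for xi, vi in zip(x, v)]
    return x

# Example: minimize f(x, y) = x**2 + 10 * y**2, whose gradient is (2x, 20y)
result = momentum_descent(lambda p: [2 * p[0], 20 * p[1]], [5.0, 1.0])
print(result)  # both coordinates very close to 0
```

On elongated loss surfaces like this one, averaging damps the back-and-forth along the steep axis while accumulating speed along the shallow one.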

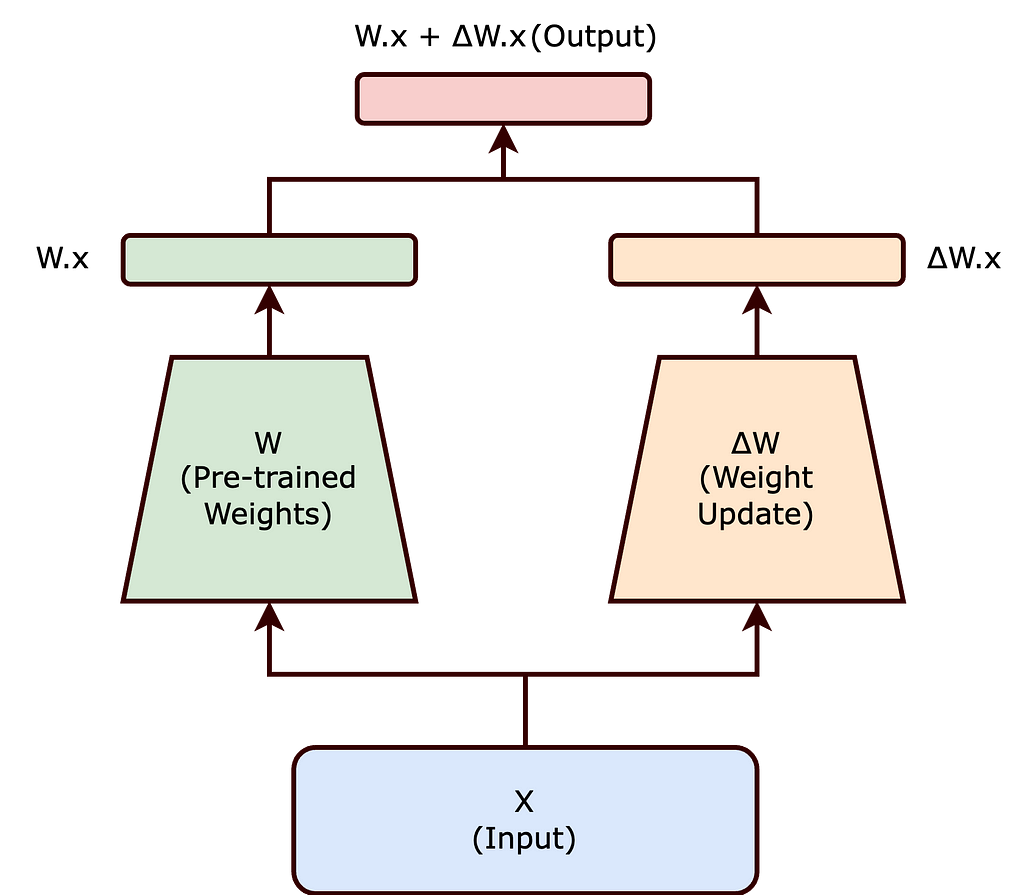

LoRA (Low-Rank Adaptation) is a parameter-efficient method for fine-tuning large models that reduces computational resources and time. By decomposing the weight-update matrix into the product of two low-rank matrices, LoRA offers a reduced memory footprint, faster training, feasibility on smaller hardware, and scalability to larger...
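The decomposition can be sketched as a forward pass that adds a scaled low-rank path to a frozen weight matrix. This sketch uses a row-vector convention, with A of shape d_in x r and B of shape r x d_out, and follows the LoRA paper's practice of starting B at zero so the adapted layer initially matches the base layer; the helper names are hypothetical.

```python
def matmul(a, b):
    """Plain-Python matrix product of a (m x n) and b (n x p)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_forward(x, w, a, b, alpha, r):
    """y = x W + (alpha / r) * x A B, with W frozen and only A, B trained.

    Shapes: x (batch x d_in), W (d_in x d_out), A (d_in x r), B (r x d_out).
    """
    base = matmul(x, w)
    update = matmul(matmul(x, a), b)  # low-rank path through rank r
    scale = alpha / r
    return [[bv + scale * uv for bv, uv in zip(brow, urow)]
            for brow, urow in zip(base, update)]

def trainable_params(d_in, d_out, r):
    """(full fine-tuning count, LoRA count) for one weight matrix."""
    return d_in * d_out, r * (d_in + d_out)

x = [[1.0, 2.0]]
w = [[1.0, 0.0], [0.0, 1.0]]   # frozen base weights
a = [[0.5], [0.25]]            # rank r = 1
b_init = [[0.0, 0.0]]          # B starts at zero
print(lora_forward(x, w, a, b_init, alpha=2.0, r=1))  # [[1.0, 2.0]], same as base
print(trainable_params(4096, 4096, 8))                # (16777216, 65536)
```

For a 4096 x 4096 matrix at rank 8, the trainable count drops by a factor of 256, which is where the memory and training-time savings come from.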

NVIDIA Studio introduces DLSS 3.5 for realistic ray-traced visuals in D5 Render, enhancing editing experience and boosting frame rates. Featured artist Michael Gilmour showcases stunning winter wonderlands in long-form videos, offering viewers peace and...

Dive into the world of artificial intelligence: build a deep reinforcement learning gym from scratch. Gain hands-on experience and develop your own gym to train an agent to solve a simple problem, setting the foundation for more complex environments and...

This article explores the importance of classical computation in the context of artificial intelligence, highlighting its provable correctness, strong generalization, and interpretability compared to the limitations of deep neural networks. It argues that developing AI systems with these classical computation skills is crucial for building generally-intelligent...

Mistral AI announces Mixtral 8x7B, an AI language model that matches OpenAI's GPT-3.5 in performance, bringing us closer to having a ChatGPT-3.5-level AI assistant that can run locally. Mistral's models have open weights and fewer restrictions than those from OpenAI, Anthropic, or...

The rise of AI-powered text-to-image generation has resulted in a flood of low-quality images, causing skepticism and misdirection. However, a new phenomenon of AI-powered text-to-CAD generation has emerged, with major players like Autodesk, Google, OpenAI, and NVIDIA leading the...

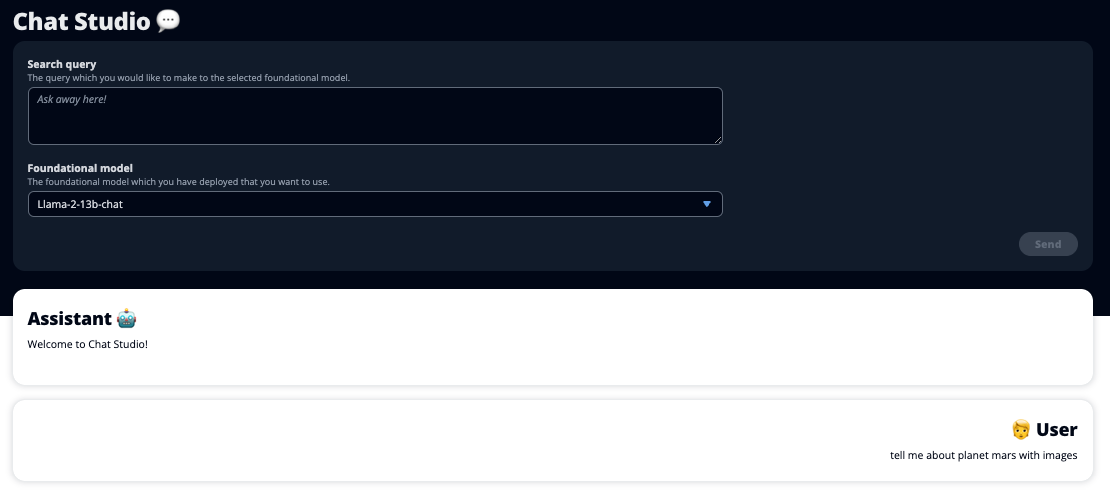

The article discusses the launch of ChatGPT and the rise in popularity of generative AI. It highlights the creation of a web UI called Chat Studio to interact with foundation models in Amazon SageMaker JumpStart, including Llama 2 and Stable Diffusion. This solution allows users to quickly experience conversational AI and enhance the user experience with media...