The Bank of England warns that autonomous AI programs could manipulate markets for profit, a risk it raises in a report on autonomous systems. According to the Bank's Financial Policy Committee, AI's ability to exploit profit opportunities raises concerns for banks and traders.

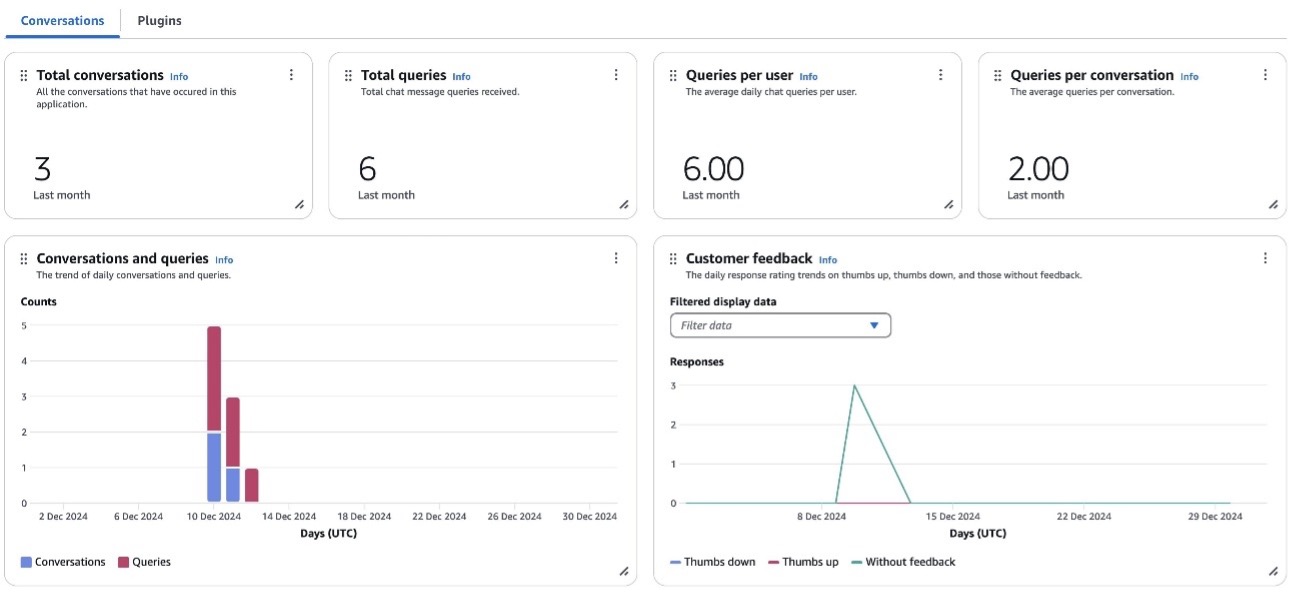

Amazon Q Business offers AI-powered assistance to boost workforce efficiency by reducing time spent on tasks. With robust security features and detailed insights, organizations can measure productivity gains and optimize usage for maximum impact.

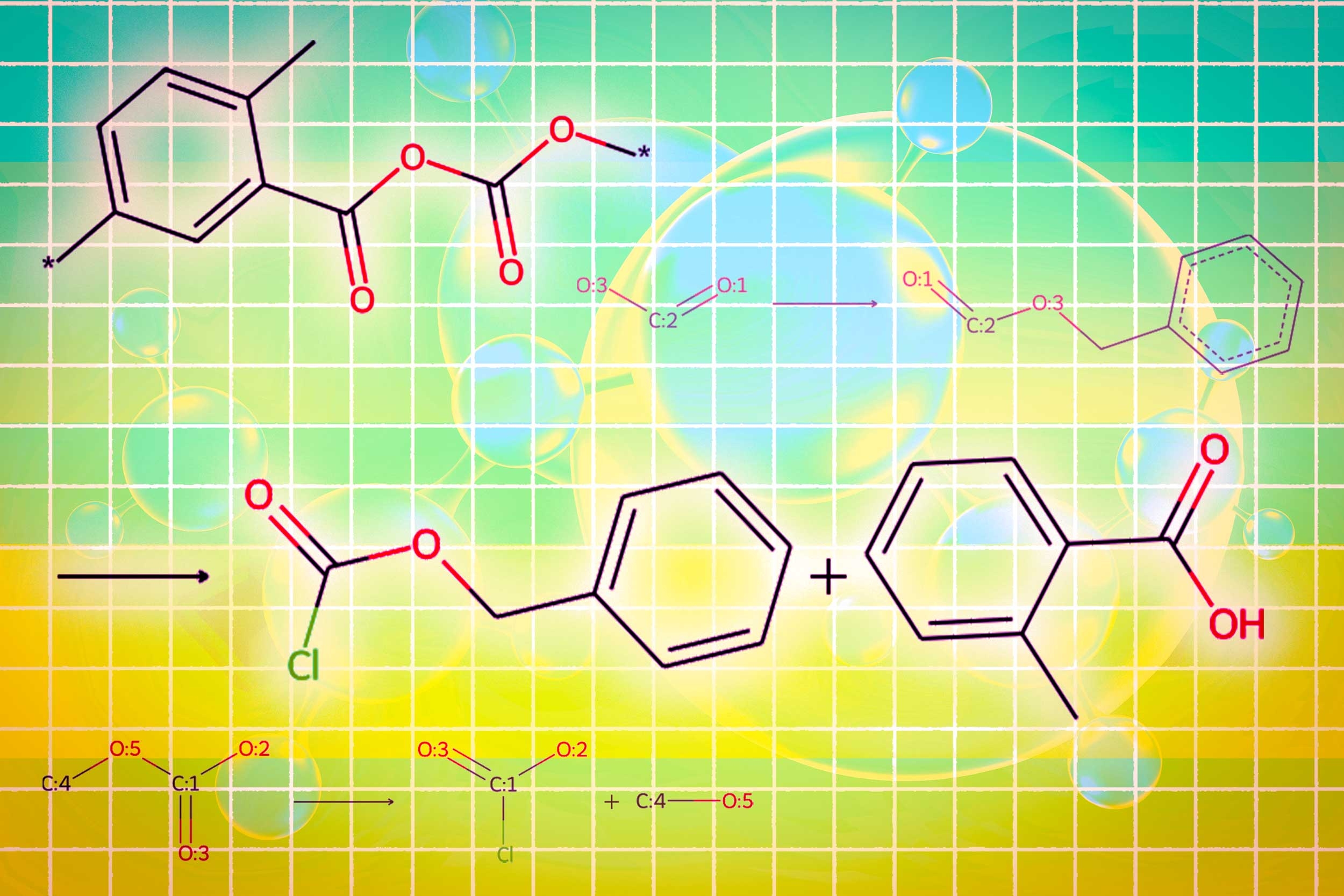

MIT and MIT-IBM Watson AI Lab researchers develop a multimodal approach that combines large language models with graph-based models to streamline molecule design, increasing the success rate from 5% to 35%. The technique could automate the entire process of molecule design and synthesis, potentially revolutionizing drug discovery.

Dr. Mehmet Oz, head of $1.5tn Medicare and Medicaid agency, suggests AI models may surpass human doctors. Oz emphasizes cost efficiency and patient preference for AI avatars in healthcare.

ML models need to run in a production environment, which may differ from the developer's local machine. Docker containers help ensure models can run anywhere, improving reproducibility and collaboration for data scientists.

Transformer-based LLMs have advanced across many tasks but remain black boxes. Anthropic's new paper on circuit tracing aims to reveal LLMs' internal logic and make them more interpretable.

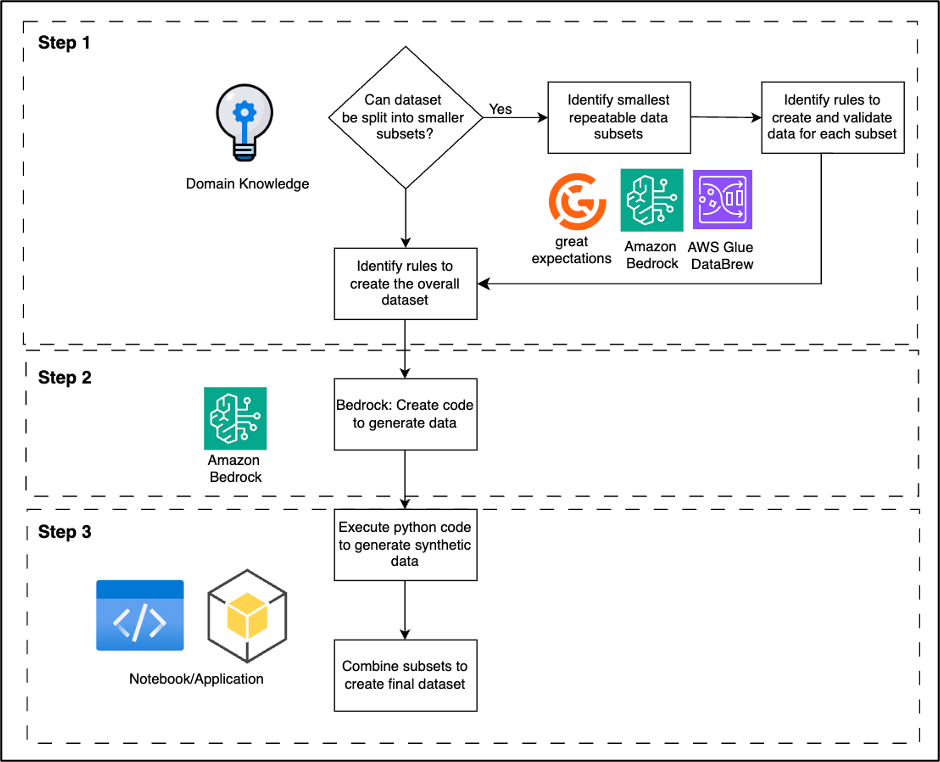

Organizations turn to synthetic data to navigate privacy regulations and data scarcity in AI development. Amazon Bedrock offers secure, compliant, and high-quality synthetic data generation for various industries, addressing challenges and unlocking the potential of data-driven processes.

Australian team revives US composer Alvin Lucier, sparking an AI authorship debate. An eerie symphony plays without musicians; only a fragment of a performer remains.

Donald Trump signs executive orders to boost the coal industry, sparking backlash over the impact on climate change. Environmentalists criticize the move as regressive, claiming it will increase costs for consumers and hinder progress towards cleaner energy sources.

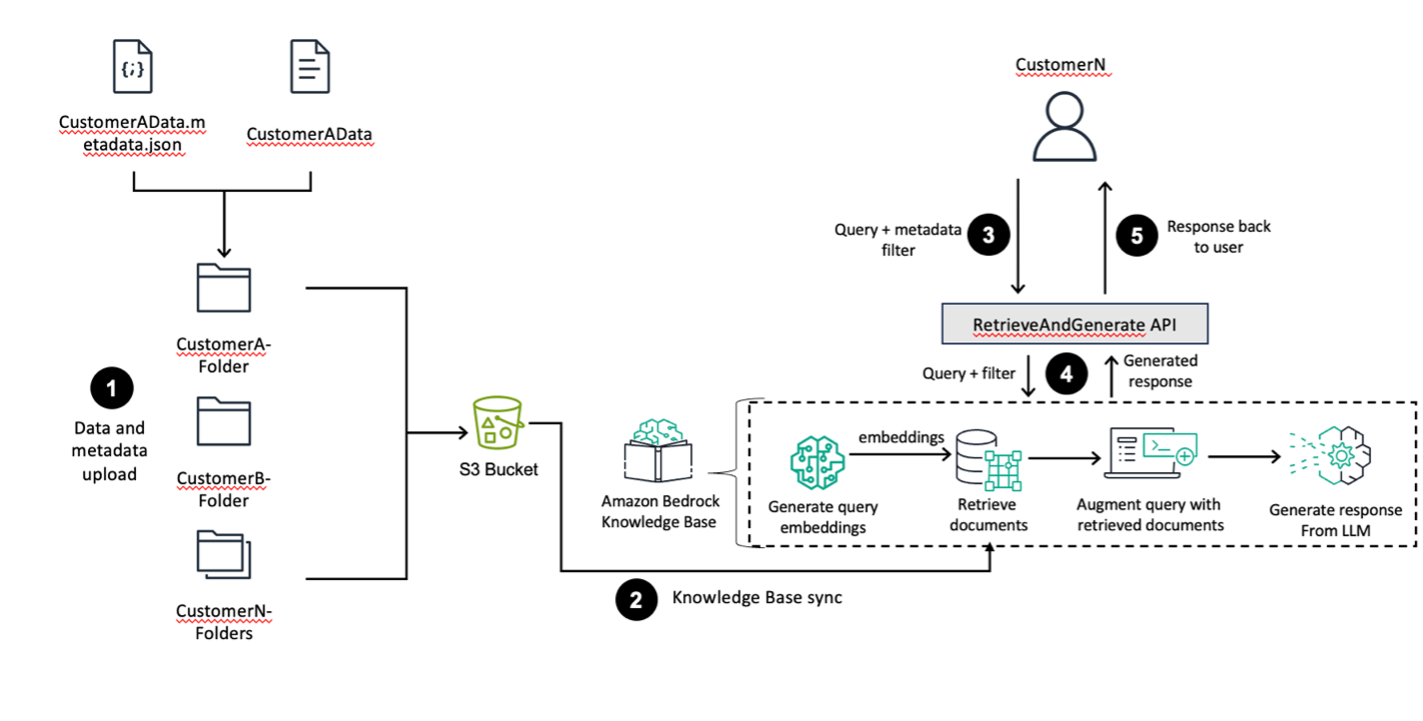

Amazon Bedrock offers high-performing foundation models and end-to-end RAG workflows for creating accurate generative AI applications. Use S3 folder structures and metadata filtering to segment data efficiently within a single knowledge base while enforcing proper access controls across different business units.
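
As a rough illustration of metadata filtering, the sketch below builds a retrieval configuration that restricts results to one business unit. The `business_unit` metadata key and the helper name are hypothetical, and the dictionary shape reflects our understanding of the Bedrock knowledge base retrieval API, so check it against the current AWS documentation before relying on it.

```python
def build_retrieval_config(business_unit, top_k=5):
    """Build a retrieval configuration limited to one business unit.

    Assumption: each document in the knowledge base carries a
    'business_unit' metadata attribute (a hypothetical key chosen here).
    """
    return {
        "vectorSearchConfiguration": {
            "numberOfResults": top_k,
            # Only return chunks whose metadata matches the requested unit.
            "filter": {"equals": {"key": "business_unit", "value": business_unit}},
        }
    }


config = build_retrieval_config("finance")
# With boto3, this dictionary would be passed as the retrievalConfiguration
# argument of the bedrock-agent-runtime client's retrieve call.
```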

NVIDIA highlights physical AI advancements during National Robotics Week, showcasing technologies shaping intelligent machines across industries. IEEE honors NVIDIA researchers for groundbreaking work in scalable robot learning, real-world reinforcement learning, and embodied AI.

Automated Valuation Models (AVMs) use AI to predict home values, but uncertainty can lead to costly mistakes. AVMU quantifies prediction reliability, aiding smarter decisions in real estate purchases.

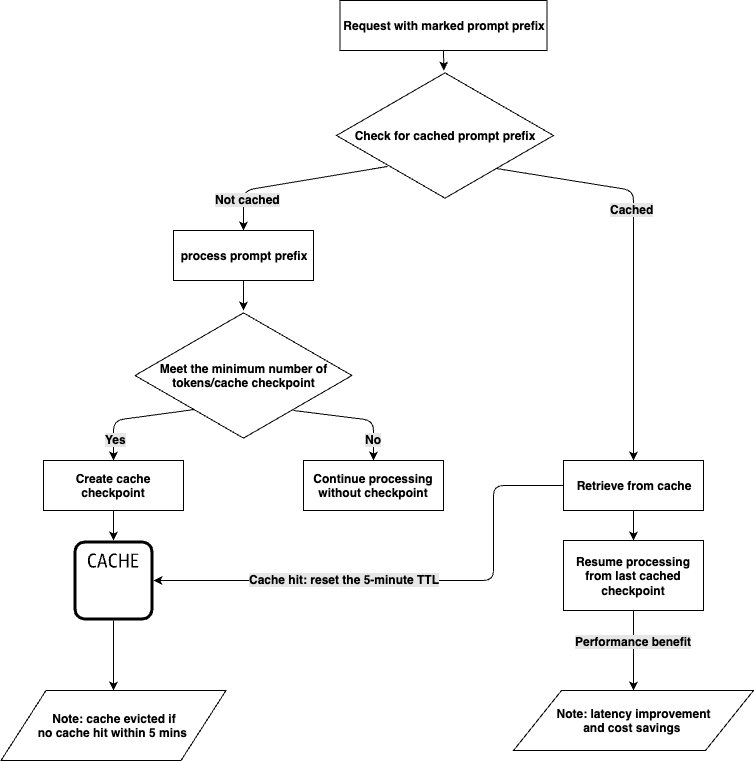

Amazon Bedrock now offers prompt caching with Anthropic’s Claude 3.5 Haiku and Claude 3.7 Sonnet models, reducing latency by up to 85% and costs by up to 90%. Mark specific portions of prompts to be cached, optimizing input token processing and maximizing cost savings.
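
A minimal sketch of what marking a cached portion might look like: the helper below (a hypothetical name) builds the arguments for a Converse-style request and places a cache checkpoint after a long, reusable system prompt. The `cachePoint` block shape is our understanding of the Bedrock Converse API and the model ID is a placeholder, so treat both as assumptions to verify.

```python
def build_cached_request(model_id, shared_context, question):
    """Build request arguments with a cache checkpoint after the shared context.

    Assumption: everything before the cachePoint block is eligible for
    caching, so the long shared context goes first and the per-call
    question goes in the user message.
    """
    return {
        "modelId": model_id,
        "system": [
            {"text": shared_context},
            {"cachePoint": {"type": "default"}},  # marks the end of the cached prefix
        ],
        "messages": [
            {"role": "user", "content": [{"text": question}]},
        ],
    }


request = build_cached_request(
    "MODEL_ID_PLACEHOLDER",  # placeholder, not a real model identifier
    "Long reference document reused across many calls ...",
    "Summarize section 2.",
)
# With boto3: boto3.client("bedrock-runtime").converse(**request)
```

Keeping the reusable text ahead of the checkpoint and the changing question after it is what lets repeated calls share the cached prefix.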

John Hinkley compares AI to a piano in creative industries. Reform UK leaflet grumbles about bin collection, prompting nerdish suggestions.

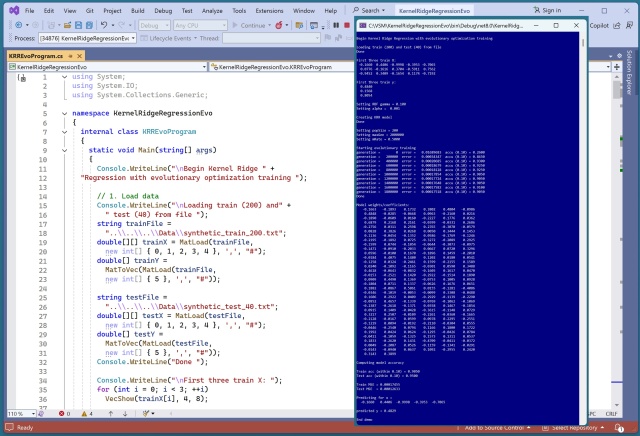

Evolutionary optimization training for Kernel Ridge Regression shows promise but caps at 90-93% accuracy due to scalability issues. The traditional matrix inverse technique outperforms it in both accuracy and speed.
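
For context, the closed-form training that the matrix inverse technique refers to can be sketched as follows. This is a minimal illustration on synthetic data, not the article's code: the RBF kernel, the `gamma` and `lam` values, and the function names are all assumptions, and it solves the linear system with `np.linalg.solve` instead of forming the inverse explicitly.

```python
import numpy as np


def rbf_kernel(A, B, gamma=1.0):
    """RBF kernel matrix between the rows of A and the rows of B."""
    sq = np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq, 0.0))  # clip tiny negative round-off


def krr_fit(X, y, gamma=1.0, lam=1e-3):
    """Solve (K + lam * I) alpha = y for the dual weights alpha."""
    K = rbf_kernel(X, X, gamma)
    return np.linalg.solve(K + lam * np.eye(len(X)), y)


def krr_predict(X_train, alpha, X_new, gamma=1.0):
    """Predict as a kernel-weighted sum over the training points."""
    return rbf_kernel(X_new, X_train, gamma) @ alpha


# Synthetic example: learn y = sin(x) from 40 random points.
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(40, 1))
y = np.sin(X[:, 0])
alpha = krr_fit(X, y)
pred = krr_predict(X, alpha, X)
```

The solve step costs roughly cubic time in the number of training points, which is fast for small datasets like this one.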