Diffusion models explained with illustrations, focusing on how they learn and generate data. The worked example uses glyffuser to generate Chinese glyphs from English definitions.

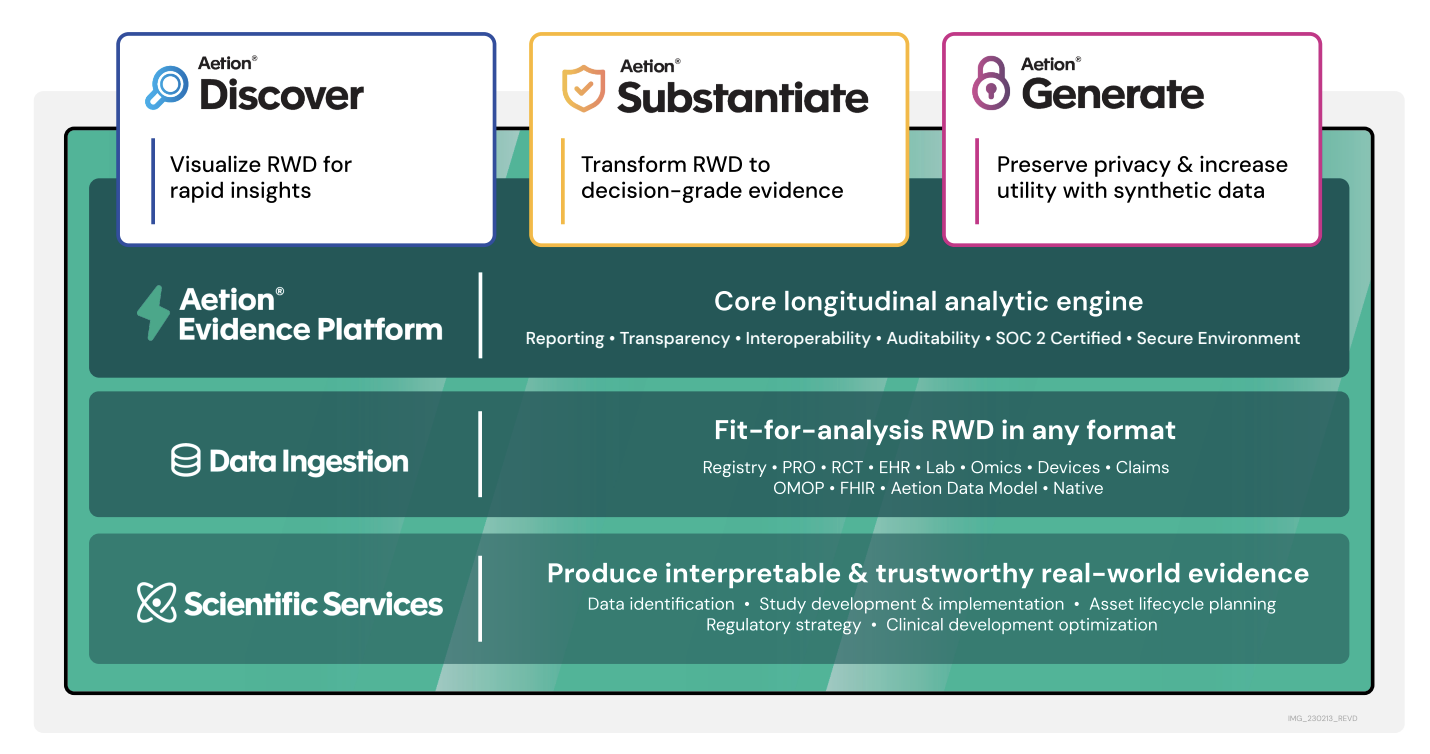

Aetion transforms real-world data into evidence for healthcare decision-makers using natural language queries and Amazon Bedrock technology. Aetion's Evidence Platform allows users to build cohorts and analyze outcomes, optimizing clinical trials and safety studies for medications and treatments.

Stability AI introduces the Stable Diffusion XL model, advancing text-to-image AI technology. Learn how to efficiently fine-tune and host the model on AWS Inf2 instances.

Sara Beery applies computer vision and machine learning to monitor salmon migration in the Pacific Northwest, where salmon are critical to ecosystem health and hold cultural significance. Accurate salmon counting is essential for managing fisheries amid threats from human activity, habitat loss, and climate change.

Researchers at Los Alamos repurposed Meta’s Wav2Vec-2.0 AI model to analyze seismic signals from Hawaii’s Kīlauea volcano. The AI can track fault movements in real time, a crucial step towards understanding earthquake behavior.

Alphabet removes ban on using AI for weapons and surveillance in updated guidelines, shifts focus from avoiding harm. The Google owner updates ethical guidelines, allowing for broader use of artificial intelligence technology.

Keir Starmer skips AI summit in Paris, missing chance to meet with Macron, Modi, Vance, and Musk. PM's absence from international conference, started by Rishi Sunak, raises eyebrows.

DeepSeek's launch sends shockwaves through the industry as fears of an AI arms race rise. Also covered: the Paris AI summit agenda, and young people sharing their hopes and fears about AI.

Learn how to enhance your RAG application by mimicking human reasoning under a multi-agent framework. Discover how to improve data retrieval and reasoning processes for more accurate results.

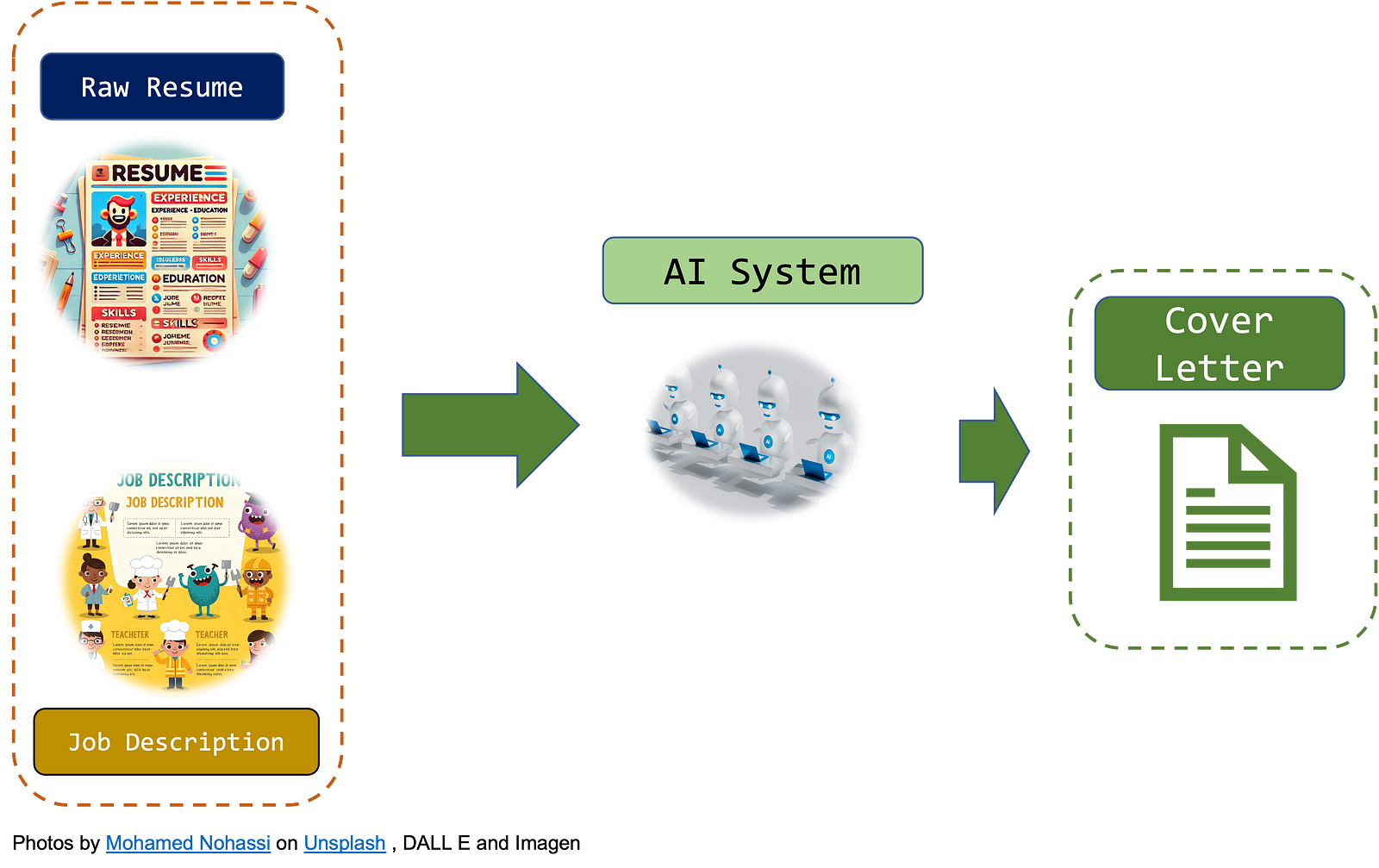

Build your own Cover Letter AI Generator App using Python with code from a public GitHub folder. AI tools like ChatGPT can help tailor resumes for specific companies, changing the job market game.

Chinese-developed chatbot DeepSeek challenges US tech supremacy with cheaper, energy-efficient AI tool. Despite limitations, its rapid rise highlights economic implications over technical advancements.

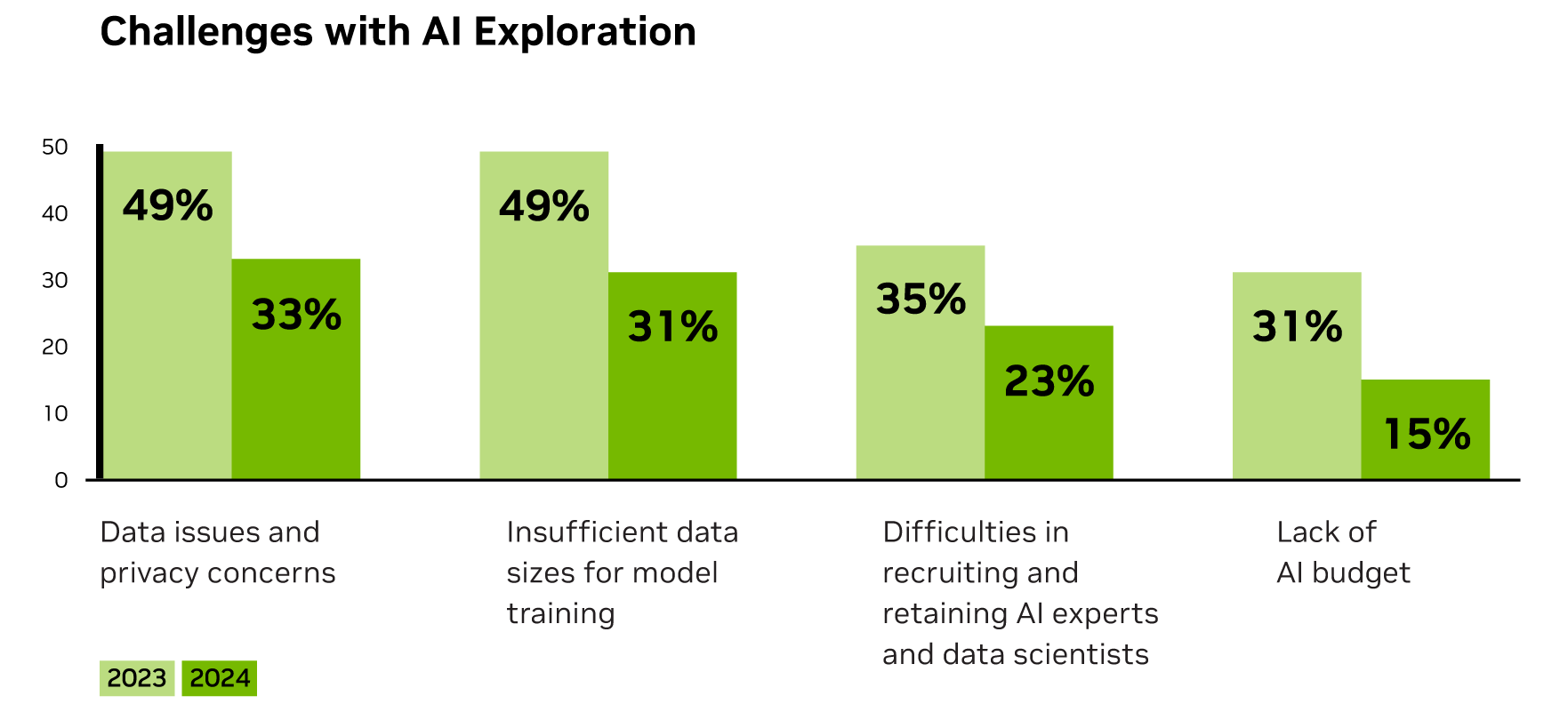

Financial institutions are leveraging AI for revenue growth and cost savings, with NVIDIA's report showing a significant increase in AI adoption and proficiency. Generative AI is driving ROI in trading, customer engagement, and more, as companies overcome barriers to successful AI deployment.

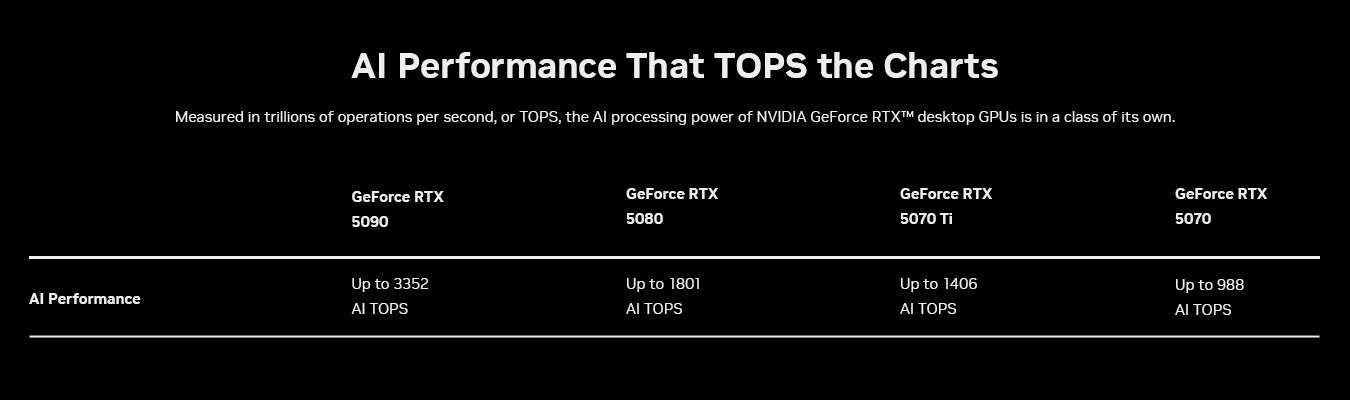

NVIDIA's GeForce RTX 5090 and 5080 GPUs, based on the Blackwell architecture, offer up to 8x faster frame rates with DLSS 4 technology. NVIDIA NIM microservices and AI Blueprints for RTX enable easy access to generative AI models on PCs, accelerating AI development across platforms.

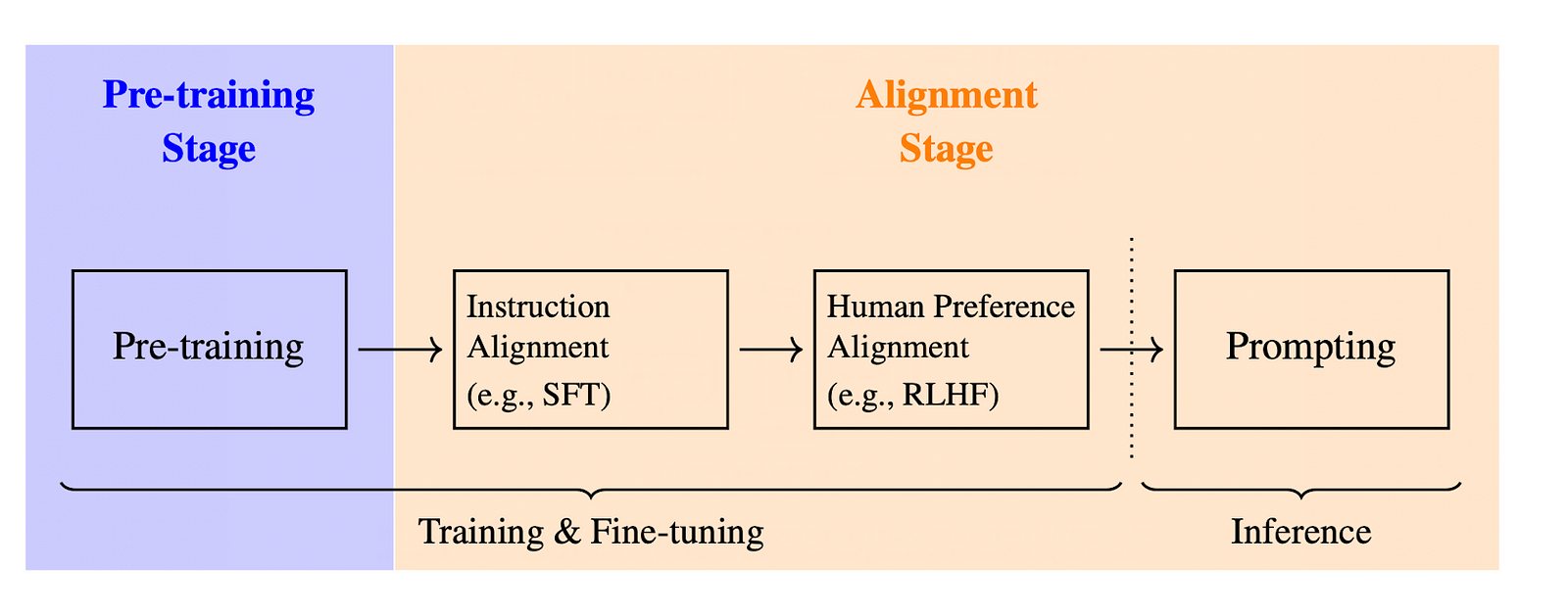

DeepSeek's cost-effective AI performance is turning heads. Learn about Reinforcement Learning in Large Language Model training, focusing on TRPO, PPO, and GRPO. Explore RL basics using a maze analogy, and how it applies to LLMs for refining responses based on human feedback.

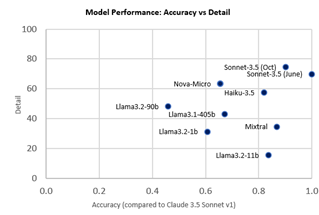

Trellix Wise, powered by AI, automates threat investigation for security teams, saving time and expanding coverage. Trellix's adoption of Amazon Nova Micro delivers faster inference at a significantly lower cost, optimizing investigations.