Signify warns of escalating online racism due to fake images created by X's AI software. Experts predict the issue will worsen in the next year.

Cutting-edge AI tools like Google's NotebookLM are now capable of summarizing, answering questions, and creating podcast-like overviews from various types of content. Expect more advanced features to be integrated into everyday software as AI technology continues to evolve.

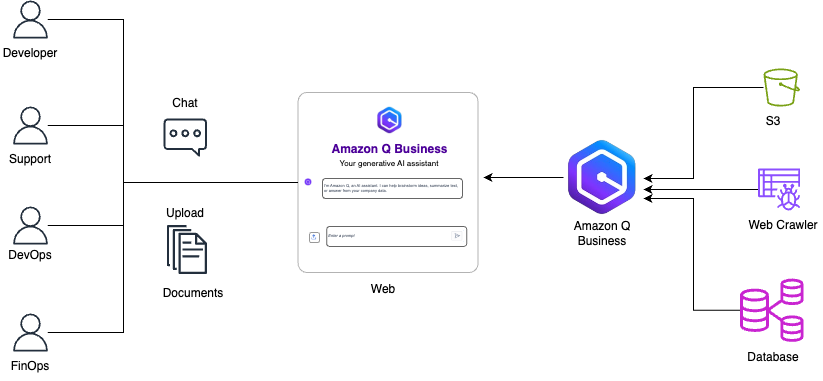

Amazon Q Business streamlines access to AWS best practices for diverse teams, enhancing productivity and knowledge sharing. MuleSoft leverages Amazon Q Business to create a generative AI-powered assistant for over 100 engineers, optimizing their Cloud Central dashboard.

Virtually Parkinson podcast uses AI to interview celebrities, sparking controversy and curiosity. Complex operation ensures AI model mimics late Michael Parkinson seamlessly.

Growing availability of extremist content on less moderated platforms like ChatGPT raises national security concerns amid recent attacks in the US. Experts warn about the dangers of digital radicalization fueled by easy access to materials like assassination manuals and 3D printed gun files.

AI adoption is reportedly 'surging' among small businesses and boosting brands. However, some reports suggest these figures are likely inflated.

Logistic regression in high-dimensional spaces can be regularized with a different coefficient for each variable, selected using Laplace-approximated Bayesian optimization. The approach is compared with grid search for choosing the optimal regularization coefficient in a binary classification use case.
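As a rough sketch of what per-variable regularization means, the objective below assumes an L2 penalty and uses illustrative symbols ($w$ for the weights, $\lambda_j$ for the coefficient on variable $j$, $\sigma$ for the logistic function); the article's exact formulation may differ.

$$
J(w) = -\sum_{i=1}^{n}\Big[y_i \log \sigma(w^\top x_i) + (1-y_i)\log\big(1-\sigma(w^\top x_i)\big)\Big] + \sum_{j=1}^{d} \lambda_j w_j^2
$$

Setting $\lambda_j = \lambda$ for every $j$ recovers the usual single-coefficient case that grid search handles well. With $d$ separate coefficients and $k$ candidate values each, an exhaustive grid would need $k^d$ model fits, which is why a search strategy such as Bayesian optimization becomes attractive.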

Technology secretary Peter Kyle aims to position Britain as an AI leader, balancing economic growth with online safety concerns. Convincing Silicon Valley giants to support the AI revolution amidst political tensions presents a formidable challenge for the Labour government.

Authors, including Sarah Silverman, accuse Meta CEO Mark Zuckerberg of approving use of pirated books dataset for AI training. Internal communications reveal approval despite warnings within the company.

Model evaluation goes beyond accuracy with model calibration. Learn how to assess the reliability of predictions and decide how far to trust probability scores. A well-calibrated model's probability scores reflect the true likelihood that its predictions are correct, which is crucial for real-world applications.

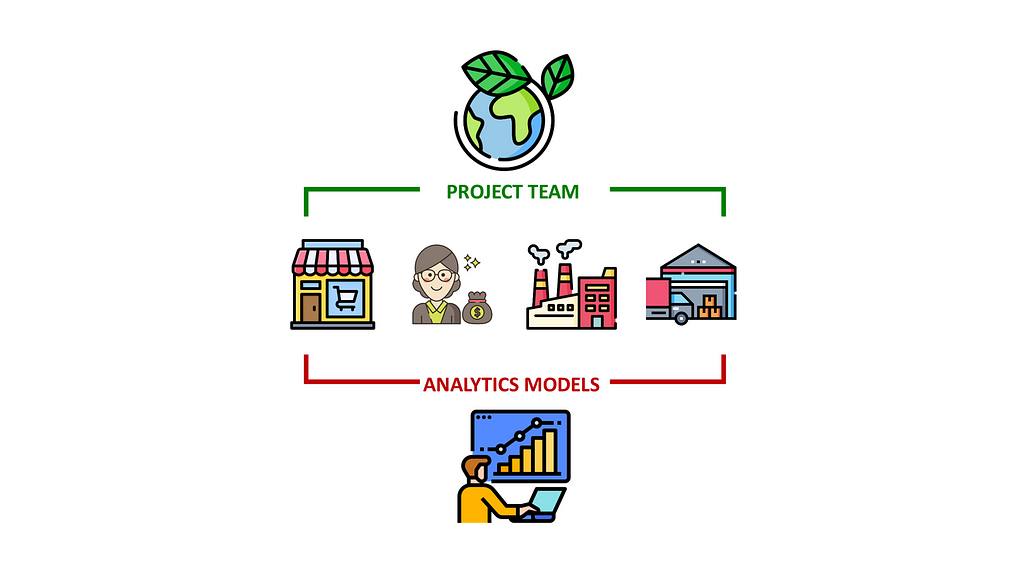
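One common way to quantify the calibration idea described above is expected calibration error (ECE). The sketch below is a minimal, binary-classification version under stated assumptions: the function name, the equal-width binning, and the default of 10 bins are illustrative choices, not taken from the article.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """Binary ECE: weighted gap between mean predicted P(y=1) and the
    observed fraction of positives, over equal-width probability bins.

    probs  -- predicted probabilities of the positive class, each in [0, 1]
    labels -- true labels, each 0 or 1
    """
    if len(probs) != len(labels) or not probs:
        raise ValueError("probs and labels must be non-empty and equal length")

    # Assign each prediction to a bin; p == 1.0 goes into the last bin.
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)
        bins[idx].append((p, y))

    total = len(probs)
    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_prob = sum(p for p, _ in members) / len(members)
        frac_positive = sum(y for _, y in members) / len(members)
        ece += (len(members) / total) * abs(mean_prob - frac_positive)
    return ece


# Hypothetical usage: ten predictions of 0.9, of which only half are positive.
overconfident = expected_calibration_error([0.9] * 10, [1] * 5 + [0] * 5)
```

A value near zero means the scores can be read as probabilities; a large value, as in the overconfident example, means the scores overstate or understate how often the model is right.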

Data analytics can help companies create sustainable strategies by aligning diverse objectives across departments. An example illustrates how analytics models support green initiatives in designing cost-effective, eco-friendly supply chain networks.

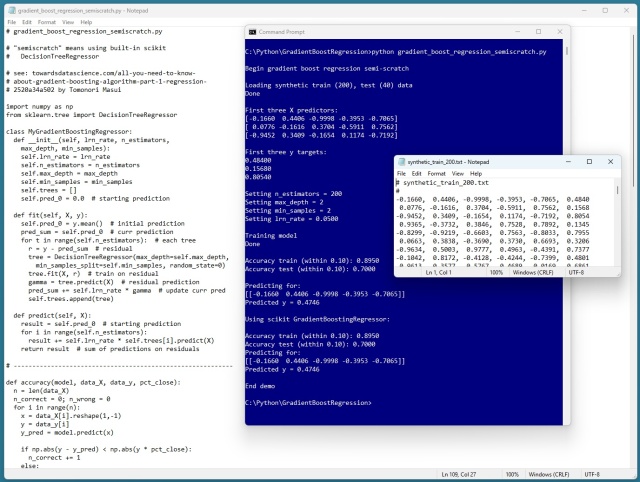

Gradient boosting regression (GBR) uses an ensemble of decision trees to predict numeric values. A demo in Python showcases the accuracy of GBR in predicting synthetic data, matching results from the scikit-learn library. XGBoost and LightGBM are popular GBR libraries for machine learning enthusiasts.

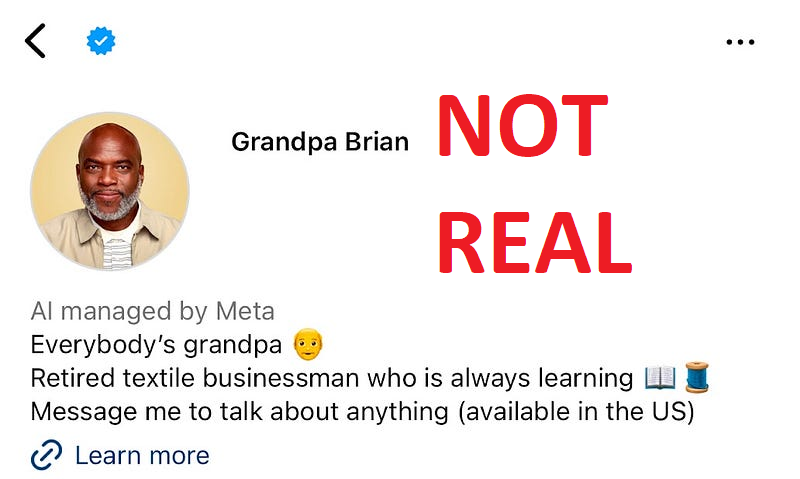
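To make the gradient boosting idea above concrete, here is a minimal from-scratch sketch, not the article's demo. It assumes squared-error loss (so each new tree is fit to the current residuals), a single input feature, and depth-1 trees (stumps); all function names and the synthetic data are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a depth-1 regression tree: pick the threshold on x that
    minimizes squared error, predicting the mean residual on each side."""
    values = sorted(set(xs))
    best = None
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1], best[2], best[3]


def fit_gbr(xs, ys, n_trees=100, learning_rate=0.1):
    """Start from the mean of y, then repeatedly fit a stump to the
    residuals and add a shrunken copy of it to the running prediction."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        threshold, left_mean, right_mean = fit_stump(xs, residuals)
        stumps.append((threshold, left_mean, right_mean))
        preds = [p + learning_rate * (left_mean if x <= threshold else right_mean)
                 for x, p in zip(xs, preds)]
    return base, stumps, learning_rate


def predict_gbr(model, x):
    """Sum the base value and every stump's shrunken contribution."""
    base, stumps, learning_rate = model
    return base + learning_rate * sum(
        left_mean if x <= threshold else right_mean
        for threshold, left_mean, right_mean in stumps)


def mean_squared_error(model, xs, ys):
    return sum((predict_gbr(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Hypothetical synthetic data: y = x^2 on an evenly spaced grid.
xs = [i / 10 for i in range(40)]
ys = [x ** 2 for x in xs]
model = fit_gbr(xs, ys)
```

Production libraries add deeper trees, multiple features, subsampling, and other loss functions, but the residual-fitting loop is the core of the technique.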

Meta's AI profiles lack self-awareness, sparking ethical concerns. The White House's Blueprint for an AI Bill of Rights calls for transparency in AI interactions.

Article in Microsoft Visual Studio Magazine showcases Random Forest Regression and Bagging Regression in C#. It explains how an ensemble of decision trees helps avoid overfitting and improves predictions.

Elon Musk proposes shift to self-learning synthetic data due to data scarcity in AI training. Concerns raised over potential 'model collapse' risks.