AI video synthesis models such as Kling, from Kuaishou Technology, and video-01, from Minimax, are pushing boundaries and producing viral AI-generated videos that are reshaping meme culture. Kling, which is claimed to surpass Sora, can generate high-quality videos from text prompts, still images, or existing videos, sparking both controversy and fascination.

Amazon Lookout for Metrics, an ML anomaly detection service from Amazon, will reach end of support on October 10, 2025. Customers can transition to alternative AWS services for anomaly detection, such as Amazon OpenSearch, CloudWatch, and Redshift ML.

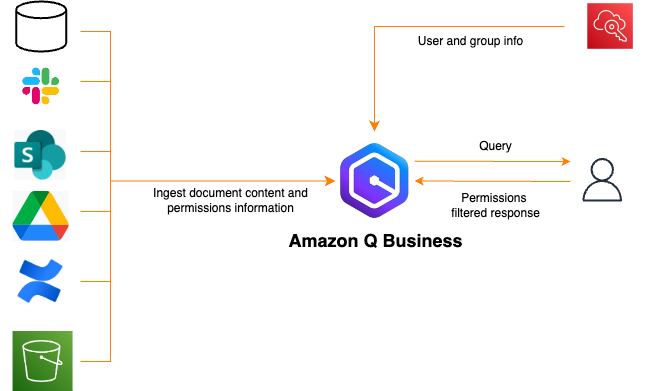

Amazon Q Business is an AI assistant with over 40 connectors, including Slack, to enhance productivity and knowledge sharing. The integration with Slack allows for quick and secure access to valuable organizational knowledge through generative AI capabilities.

Black Forest Labs introduces FLUX.1 AI for image generation, optimized for GeForce RTX and NVIDIA RTX GPUs. FLUX.1 excels in prompt adherence and in rendering accurate human anatomy and legible text within images, and it comes in three variants for different user needs.

ChatGPT's impact on universities has been questioned, but AI is proving beneficial for higher education. Universities are embracing AI to enhance student and staff experiences.

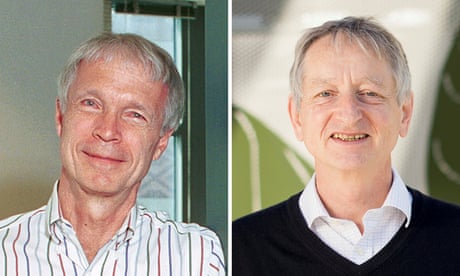

Demis Hassabis and John Jumper of DeepMind, along with David Baker, win the 2024 Nobel Prize in Chemistry for protein structure advancements. Hassabis and Jumper's work on the AlphaFold AI model revolutionized protein structure prediction.

Researchers from MIT, CMU, and Lehigh collaborate on the DARPA-funded METALS program to optimize multi-material structures for aerospace applications, including rocket engines. The project merges classical mechanics with AI design technology for compositionally graded alloys, enabling leap-ahead performance in structural components.

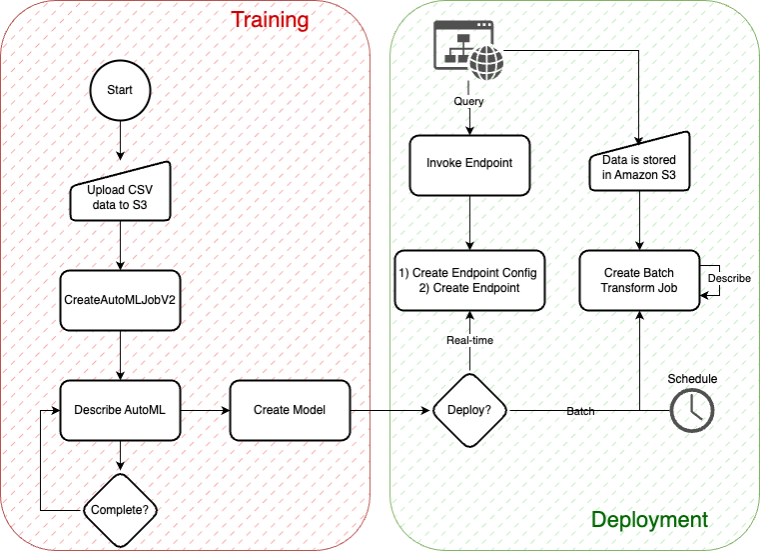

Time series forecasting is crucial for predicting future values, but faces challenges like seasonality and manual tuning. Amazon SageMaker AutoMLV2 simplifies the process with automation, from data preparation to model deployment.

Blake Montgomery takes over as the new writer of TechScape, discussing a middle school's tech ban and opting out of AI training. Stay updated with the latest tech news by signing up for the newsletter.

Geoffrey Hinton and John Hopfield were awarded the 2024 Nobel Prize in Physics for pioneering artificial neural networks inspired by the brain. Their work revolutionized AI capabilities, with memory storage and learning functions that mimic human cognition.

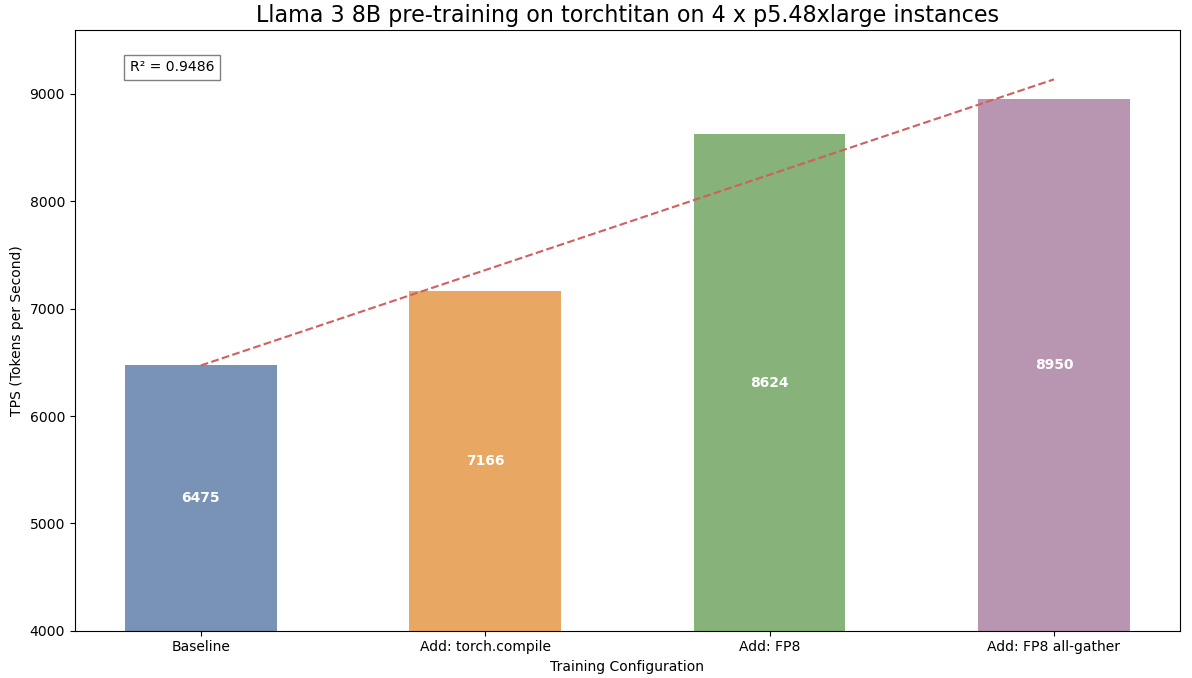

Pre-training large language models (LLMs) with the torchtitan library accelerates training of Meta Llama 3-like models and showcases FSDP2 and FP8 support. Amazon SageMaker Model Training reduces time and cost by offering high-performing ML compute infrastructure.

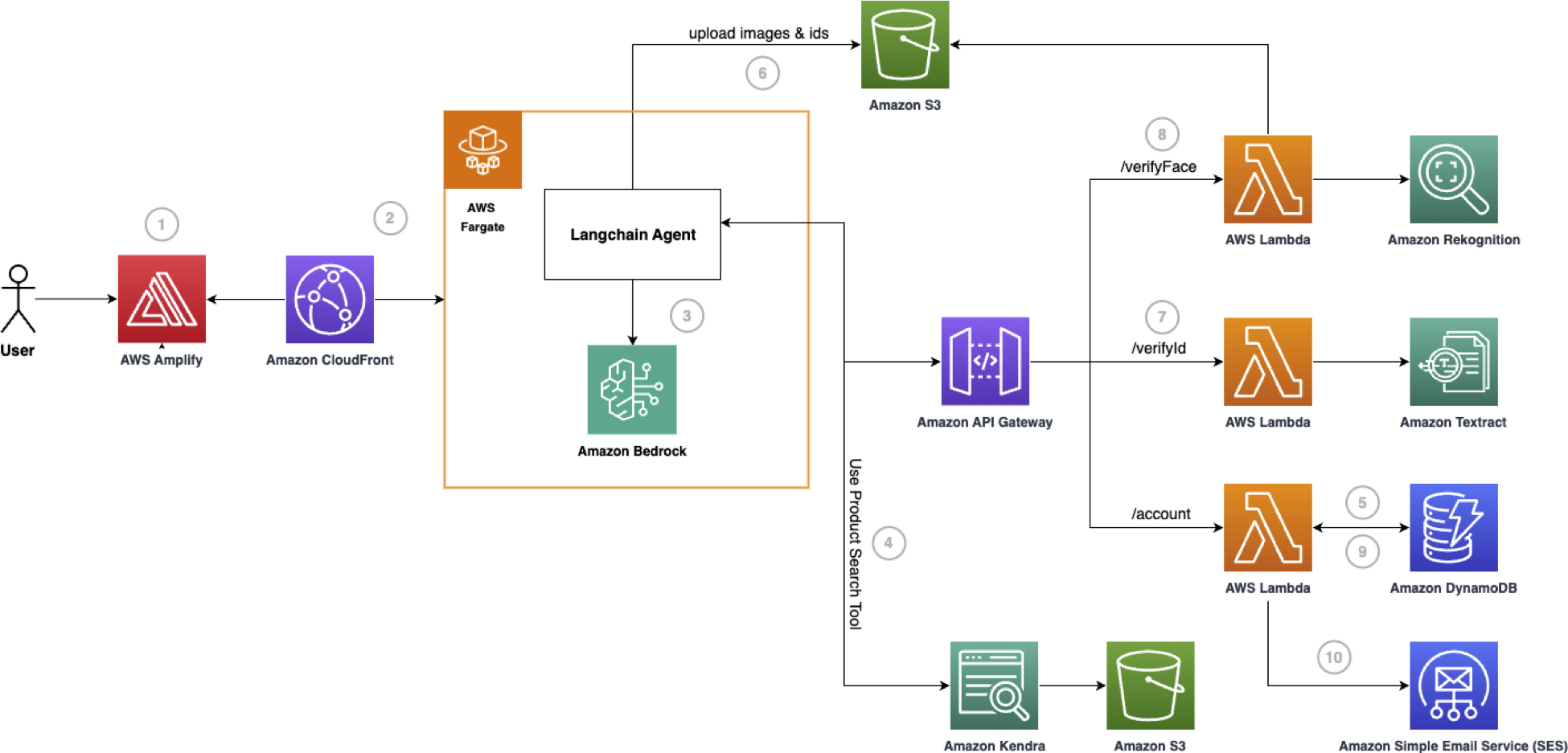

A generative AI digital assistant on AWS streamlines banking customer onboarding by automating paperwork and identity verification and by providing instant customer engagement. The solution addresses challenges such as manual processes, security risks, and limited accessibility, improving customer experience and efficiency.

Learn how to run Rust code in the browser using WebAssembly, providing dynamic web pages with privacy benefits. Follow nine rules for porting code to WASM in the browser, ensuring successful implementation and integration.

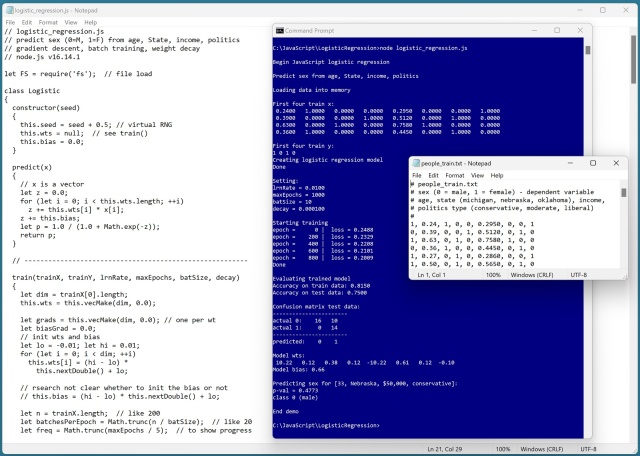

Logistic regression is implemented in JavaScript to predict sex from age, state, income, and political leaning. Training with batch gradient descent yields a model with 75% accuracy on test data.
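For readers unfamiliar with the technique, here is a minimal sketch of logistic regression trained with batch gradient descent. It is written in Python rather than JavaScript and is not the article's code. The two-feature toy dataset, the learning rate, the epoch count, and every function name are assumptions made for illustration, so it will not reproduce the 75% figure above.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(weights, bias, x):
    # Probability of class 1 for a single feature vector x.
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_batch_gd(X, y, lr=1.0, epochs=5000):
    # Batch gradient descent: each update uses the gradient of the
    # mean log loss over the entire training set.
    n, d = len(X), len(X[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [0.0] * d
        grad_b = 0.0
        for x, target in zip(X, y):
            err = predict_proba(weights, bias, x) - target
            for j in range(d):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def accuracy(weights, bias, X, y):
    # Fraction of items whose thresholded prediction matches the label.
    hits = sum(
        (predict_proba(weights, bias, x) >= 0.5) == (t == 1)
        for x, t in zip(X, y)
    )
    return hits / len(X)


# Hypothetical toy data: two features already scaled to [0, 1].
X = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.3], [0.2, 0.4],
     [0.7, 0.8], [0.8, 0.6], [0.9, 0.9], [0.6, 0.9]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

weights, bias = train_batch_gd(X, y)
print("weights:", weights, "bias:", bias)
print("training accuracy:", accuracy(weights, bias, X, y))
```

In practice, categorical inputs such as state or political leaning would need to be encoded numerically, and numeric inputs such as age and income scaled, before a training loop like this is applied. Accuracy should be measured on held-out test data, not on the training set as in this toy run.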

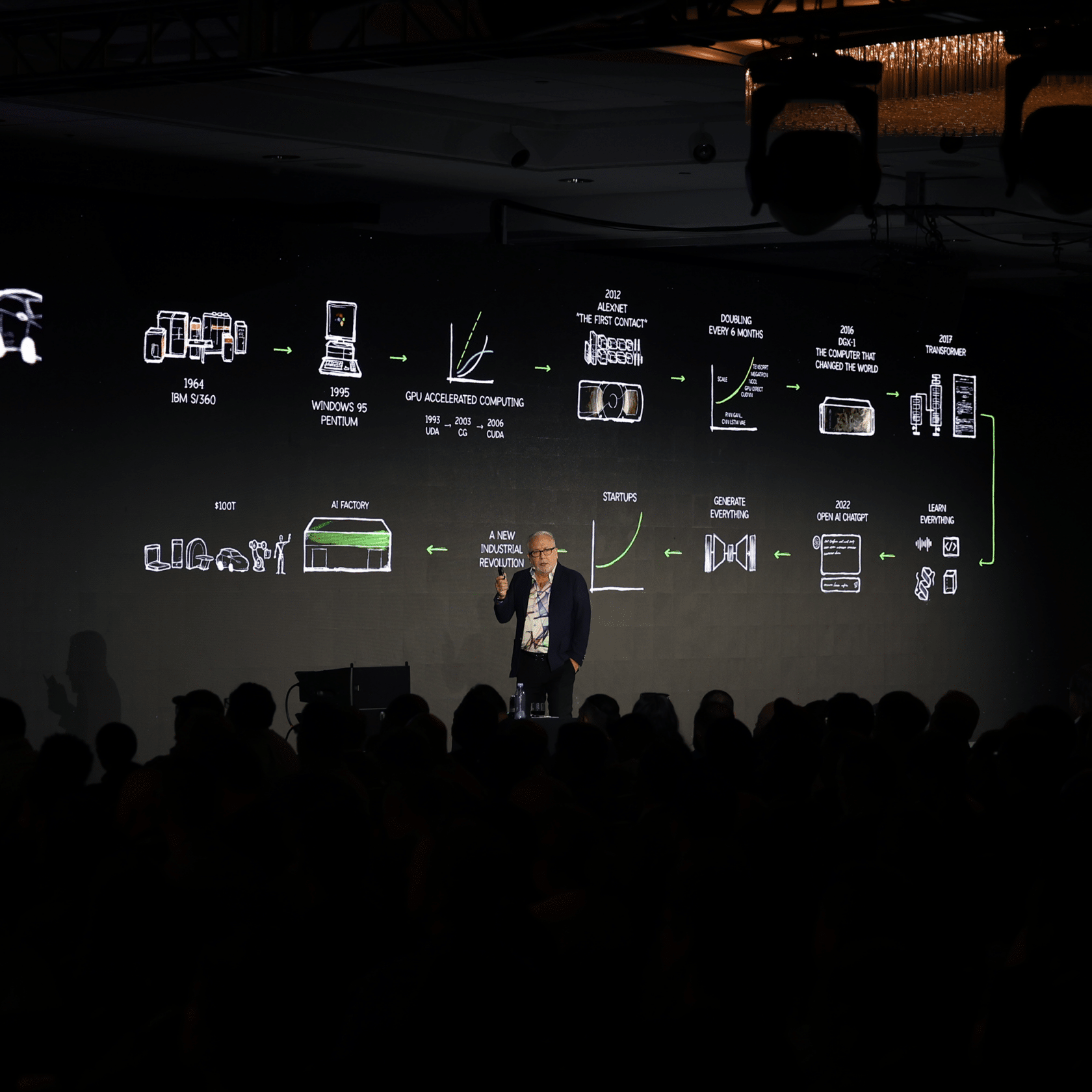

NVIDIA's accelerated computing is driving energy-efficient AI innovations, reducing energy consumption significantly while powering over 4,000 applications. Agentic AI is transforming industries by automating complex tasks and accelerating innovation, with NVIDIA collaborating on groundbreaking projects like real-time AI searches for fast radio bursts.