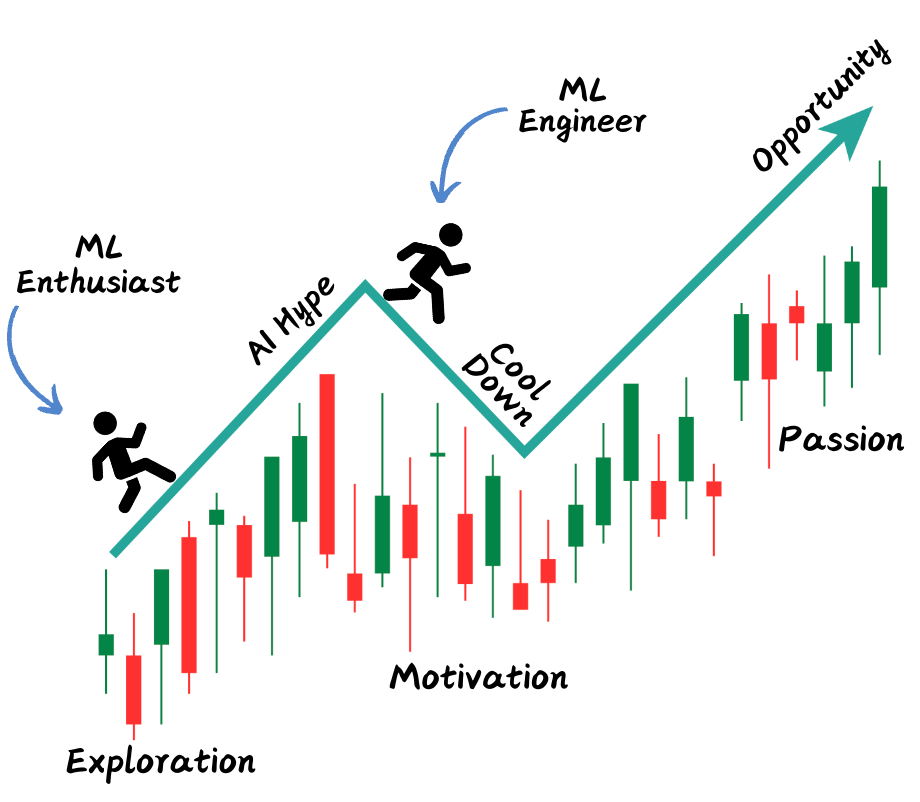

Transitioning from software engineer to machine learning engineer at FAANG companies involves 7 key steps, including finding motivation, exploring ML basics, networking, and finding your niche within the ML landscape. Understanding your interests and leveraging your current skills strategically are essential for a successful transition.

Prompt caching can substantially reduce computational overhead and latency in attention-based models such as GPT by reusing the work already done on a repeated prompt prefix. Google, Anthropic, and OpenAI have each introduced caching for long prompts, improving efficiency and significantly reducing costs.
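The core idea can be illustrated with a toy sketch, assuming a cache keyed on token prefixes. `PrefixCache` and `_encode_token` are hypothetical names, and `_encode_token` merely stands in for the per-token key/value computation a real model performs; this is not how any provider's API is implemented.

```python
from typing import Dict, List, Tuple


class PrefixCache:
    """Toy prefix cache: reuses per-token states for a previously seen prompt prefix."""

    def __init__(self) -> None:
        # Maps each cached token prefix to the states computed for it.
        self._store: Dict[Tuple[int, ...], List[int]] = {}
        self.computed_tokens = 0  # how many tokens actually had to be processed

    def _encode_token(self, prev_state: int, token: int) -> int:
        # Hypothetical stand-in for the expensive key/value computation of one token.
        self.computed_tokens += 1
        return (prev_state * 31 + token) % 1_000_003

    def process(self, tokens: List[int]) -> List[int]:
        # Find the longest prefix of this prompt that is already cached.
        hit = 0
        for n in range(len(tokens), 0, -1):
            if tuple(tokens[:n]) in self._store:
                hit = n
                break
        states = list(self._store[tuple(tokens[:hit])]) if hit else []
        prev = states[-1] if states else 0
        # Only the uncached suffix is computed; every new prefix is stored for reuse.
        for i in range(hit, len(tokens)):
            prev = self._encode_token(prev, tokens[i])
            states.append(prev)
            self._store[tuple(tokens[: i + 1])] = list(states)
        return states


cache = PrefixCache()
system_prompt = [101, 7, 7, 42, 9]      # a long shared prefix, as token ids
cache.process(system_prompt + [1, 2])    # first request: all 7 tokens computed
cache.process(system_prompt + [3])       # second request: only 1 new token computed
print(cache.computed_tokens)             # 8
```

Storing every prefix keeps the sketch short but costs quadratic memory; a production system would need a more compact scheme.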

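A nearest neighbor regressor predicts a continuous value from the targets of the training points closest to a query, and KD trees and ball trees make finding those neighbors efficient. This visual guide with code examples is aimed at beginners and focuses on how the trees are constructed and how predictions are computed.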
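A minimal from-scratch sketch of k-nearest-neighbor regression backed by a KD tree (ball trees are not shown). The tree splits on the median along alternating axes, and the search skips a subtree whenever the splitting plane is farther away than the current k-th best distance. The names `build`, `knn`, and `predict` are illustrative, not taken from the guide.

```python
import heapq
from typing import List, Optional, Tuple

Point = Tuple[float, ...]


class Node:
    def __init__(self, point: Point, target: float, axis: int,
                 left: Optional["Node"], right: Optional["Node"]) -> None:
        self.point, self.target, self.axis = point, target, axis
        self.left, self.right = left, right


def build(items: List[Tuple[Point, float]], depth: int = 0) -> Optional[Node]:
    """Recursively split the (point, target) pairs on the median of one axis."""
    if not items:
        return None
    axis = depth % len(items[0][0])  # cycle through the dimensions
    items = sorted(items, key=lambda it: it[0][axis])
    mid = len(items) // 2
    return Node(items[mid][0], items[mid][1], axis,
                build(items[:mid], depth + 1), build(items[mid + 1:], depth + 1))


def sq_dist(a: Point, b: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn(root: Optional[Node], query: Point, k: int) -> List[Tuple[float, float]]:
    """Return up to k (squared distance, target) pairs, nearest first."""
    heap: List[Tuple[float, float]] = []  # max-heap on distance via negation

    def visit(node: Optional[Node]) -> None:
        if node is None:
            return
        d = sq_dist(query, node.point)
        if len(heap) < k:
            heapq.heappush(heap, (-d, node.target))
        elif d < -heap[0][0]:
            heapq.heapreplace(heap, (-d, node.target))
        diff = query[node.axis] - node.point[node.axis]
        near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
        visit(near)
        # Cross the splitting plane only if it could hide a closer point.
        if len(heap) < k or diff * diff < -heap[0][0]:
            visit(far)

    visit(root)
    return sorted((-neg_d, t) for neg_d, t in heap)


def predict(root: Optional[Node], query: Point, k: int) -> float:
    """Regression estimate: the mean target of the k nearest neighbours."""
    neighbours = knn(root, query, k)
    return sum(t for _, t in neighbours) / len(neighbours)


data = [((0.0,), 0.0), ((1.0,), 10.0), ((2.0,), 20.0), ((10.0,), 100.0)]
tree = build(data)
print(predict(tree, (1.1,), k=2))  # 15.0, the mean of the targets at x=1.0 and x=2.0
```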

AI chatbots can steer people away from conspiracy beliefs, according to a study by Thomas Costello. The research explores the potential of artificial intelligence to promote truth.

The LinkedIn Queens game can be solved with both backtracking and linear equations, finding solutions in under 0.1 seconds. Linear equations provide a faster alternative to backtracking for handling the game's constraints.
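A minimal backtracking sketch, assuming the usual Queens rules (one queen per row, per column, and per colored region, with no two queens touching, even diagonally) and a hypothetical grid format in which each cell holds a region id. The linear-equations approach is not shown, and `solve_queens` is an illustrative name.

```python
from typing import List, Optional, Set


def solve_queens(regions: List[List[int]]) -> Optional[List[int]]:
    """Return cols, where cols[r] is the queen's column in row r, or None if unsolvable."""
    n = len(regions)
    cols: List[int] = []
    used_cols: Set[int] = set()
    used_regions: Set[int] = set()

    def place(row: int) -> bool:
        if row == n:
            return True
        for c in range(n):
            region = regions[row][c]
            if c in used_cols or region in used_regions:
                continue
            # With one queen per row and column, only the previous row can touch diagonally.
            if row > 0 and abs(cols[row - 1] - c) == 1:
                continue
            cols.append(c)
            used_cols.add(c)
            used_regions.add(region)
            if place(row + 1):
                return True
            # Undo the choice and try the next column (the backtracking step).
            cols.pop()
            used_cols.discard(c)
            used_regions.discard(region)
        return False

    return cols if place(0) else None


regions = [
    [0, 0, 0, 1],
    [2, 2, 1, 1],
    [2, 3, 3, 1],
    [2, 3, 3, 3],
]
print(solve_queens(regions))  # [1, 3, 0, 2]
```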

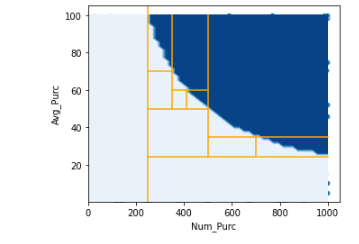

FormulaFeatures is a tool for building interpretable models by automatically engineering concise, highly predictive features. It aims to improve both the accuracy and the interpretability of models such as decision trees, giving clearer insight into their predictions.
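The general idea of engineering a concise formula feature can be sketched as a brute-force search over pairwise arithmetic combinations, scored by absolute Pearson correlation with the target. This is a hypothetical illustration of the concept, not FormulaFeatures' actual algorithm or API; `best_formula_features` and the scoring choice are assumptions.

```python
import math
from itertools import combinations
from typing import Callable, Dict, List, Tuple


def pearson(x: List[float], y: List[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


# Candidate binary operators; division by zero maps to 0.0 to keep the sketch simple.
OPS: Dict[str, Callable[[float, float], float]] = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b if b != 0 else 0.0,
}


def best_formula_features(columns: Dict[str, List[float]], target: List[float],
                          top: int = 3) -> List[Tuple[float, str]]:
    """Score every 'a OP b' feature by |correlation| with the target and keep the best."""
    scored = []
    # Only one operand order per pair is tried, so 'b - a' and 'b / a' are skipped.
    for (name_a, a), (name_b, b) in combinations(columns.items(), 2):
        for sym, fn in OPS.items():
            feature = [fn(x, y) for x, y in zip(a, b)]
            scored.append((abs(pearson(feature, target)), f"{name_a} {sym} {name_b}"))
    scored.sort(reverse=True)
    return scored[:top]


cols = {"a": [1, 2, 3, 4, 5], "b": [5, 3, 4, 1, 2], "c": [2, 2, 1, 1, 3]}
y = [a * b for a, b in zip(cols["a"], cols["b"])]  # target is exactly a * b
print(best_formula_features(cols, y, top=1))
```

A single readable formula such as `a * b` can then be handed to a shallow decision tree, which is where the interpretability gain would come from.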

Chunking source data can improve a RAG workflow with GPT-4 models: short, focused inputs tend to yield better responses while balancing performance and efficiency.
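A minimal sketch of fixed-size chunking with overlap, assuming chunks are measured in whitespace-separated words rather than model tokens. `chunk_words` and its default sizes are hypothetical; real pipelines may instead split on tokens, sentences, or document structure.

```python
from typing import List


def chunk_words(text: str, max_words: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of at most max_words words; neighbours share `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):  # this window reached the end of the text
            break
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(doc, max_words=4, overlap=1):
    print(chunk)
```

This prints three chunks (`w0 w1 w2 w3`, `w3 w4 w5 w6`, `w6 w7 w8 w9`); the overlap keeps a sentence that straddles a boundary partly visible in both neighbouring chunks.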

Dozens of neo-Nazis are moving from Telegram to SimpleX Chat, a messaging app backed by Twitter co-founder Jack Dorsey. Wary of Telegram's privacy policies, the extremist groups are seeking anonymity on the more secretive app.

New AI agents solve problems by reasoning and making tool-driven decisions, showing abilities that go well beyond conversation. Reasoning, expressed through evaluation and planning, and tool use are key components of capable AI systems, and some models surpass human accuracy on various benchmarks.
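The plan-act-observe pattern behind such agents can be sketched as a loop: a planner chooses a tool, the tool's output is fed back as an observation, and the loop stops when the planner decides to finish. Here `planner` is a hypothetical rule-based stand-in for a language model and `calculator` is a toy tool; neither reflects any specific agent framework.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str, str]  # (tool name, tool input, observation)


def calculator(expression: str) -> str:
    """Toy tool: evaluates a single 'x OP y' integer expression."""
    x, op, y = expression.split()
    a, b = int(x), int(y)
    return str({"+": a + b, "-": a - b, "*": a * b}[op])


def planner(goal: str, history: List[Step]) -> Tuple[str, str]:
    """Hypothetical LLM stand-in: plans one operation at a time, left to right
    (operator precedence is ignored), and finishes once every operator is used."""
    tokens = goal.split()
    step = len(history)
    current = history[-1][2] if history else tokens[0]
    if 2 * step + 1 >= len(tokens):
        return "finish", current
    return "calculator", f"{current} {tokens[2 * step + 1]} {tokens[2 * step + 2]}"


def run_agent(goal: str, tools: Dict[str, Callable[[str], str]],
              max_steps: int = 10) -> Tuple[str, List[Step]]:
    history: List[Step] = []
    for _ in range(max_steps):
        action, argument = planner(goal, history)  # reason / plan
        if action == "finish":
            return argument, history
        observation = tools[action](argument)      # act, then observe
        history.append((action, argument, observation))
    raise RuntimeError("agent did not finish within max_steps")


answer, trace = run_agent("12 * 7 + 16", {"calculator": calculator})
for step in trace:
    print(step)
print(answer)  # 100
```

In a real agent the planner would be a model prompted with the goal and the trace so far; the loop structure and the step limit are the parts this sketch is meant to show.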

Computer scientist Julian Shun designs graph algorithms that efficiently uncover hidden connections in data, with applications such as online recommendation systems. His user-friendly frameworks use parallel computing to analyze massive networks quickly, improving search engines and fraud detection.
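Frontier-based traversal is a common pattern in parallel graph processing. The sketch below is a generic level-synchronous breadth-first search, not code from Shun's frameworks: the neighbour lists of the whole frontier are gathered concurrently, then merged sequentially so no two workers race to claim the same vertex. Because of CPython's global interpreter lock, the thread pool illustrates the structure rather than delivering a real speedup; `parallel_bfs` is a hypothetical name.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def parallel_bfs(adj: Dict[int, List[int]], source: int, workers: int = 4) -> Dict[int, int]:
    """Level-synchronous BFS: returns the hop distance of every vertex reachable from source."""
    dist = {source: 0}
    frontier = [source]
    level = 0
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while frontier:
            level += 1
            # Phase 1 (concurrent, read-only): fetch neighbour lists for the whole frontier.
            neighbour_lists = list(pool.map(lambda v: adj.get(v, []), frontier))
            # Phase 2 (sequential): claim unvisited vertices, forming the next frontier.
            next_frontier: List[int] = []
            for neighbours in neighbour_lists:
                for u in neighbours:
                    if u not in dist:
                        dist[u] = level
                        next_frontier.append(u)
            frontier = next_frontier
    return dist


graph = {0: [1, 2], 1: [3], 2: [3], 3: [4], 5: [0]}
print(parallel_bfs(graph, 0))  # vertex 5 is unreachable from 0, so it is absent
```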

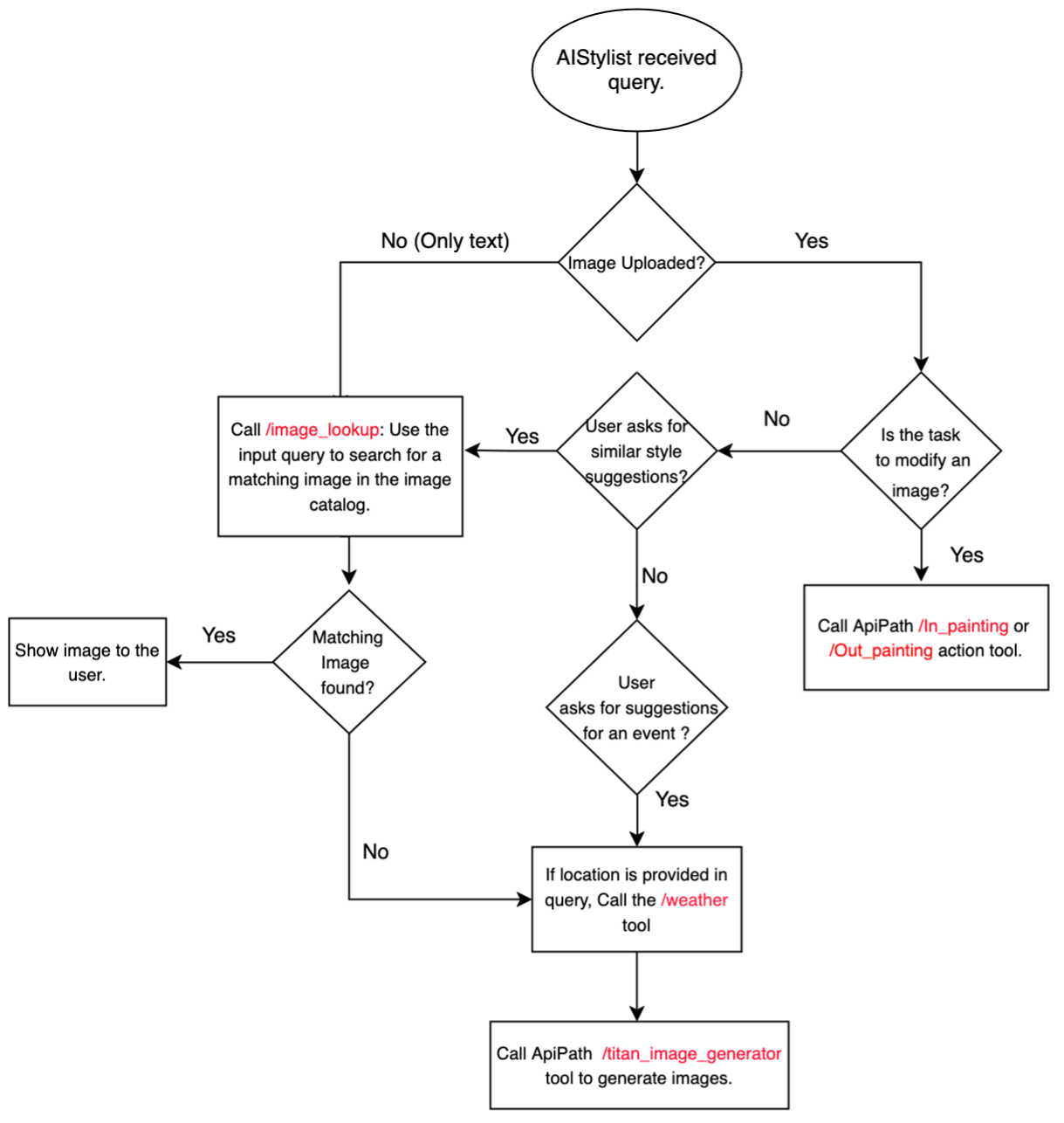

AI agents that simulate human actions, paired with Amazon Titan models, can create personalized, multimodal fashion experiences. Customers can interact in natural language, receive outfit recommendations, and generate images for visual inspiration.

Meta, the owner of Facebook, has unveiled Movie Gen, an AI model that creates realistic video and audio clips, to rival competing tools. Samples show animals swimming, as well as clips that use people's real photos to depict them painting.

Retrieval-augmented generation (RAG) combines information retrieval with language generation to produce more accurate responses. RAG techniques are diverse and tailored to specific challenges and use cases, having evolved beyond a one-size-fits-all approach.
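The retrieve-then-generate pipeline can be sketched with a toy retriever that ranks documents by bag-of-words cosine similarity and places the top matches into the prompt. The language-model call is deliberately left out, and `retrieve` and `build_prompt` are hypothetical helpers; production systems often use dense embeddings and a vector index instead.

```python
import math
import re
from collections import Counter
from typing import List


def _bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())  # missing terms count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Rank documents by similarity to the query and keep the top k."""
    q = _bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(q, _bag_of_words(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Augment the question with retrieved context; the result would be sent to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


corpus = [
    "Paris is the capital of France.",
    "The mitochondria is the powerhouse of the cell.",
    "France uses the euro.",
]
print(build_prompt("What is the capital of France?", corpus, k=1))
```

Swapping the retriever, the chunking, or the prompt template is exactly where the many RAG variants differ.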

Universities are embracing generative AI for academic use while guarding against plagiarism. One student used ChatGPT to accurately anticipate interview questions.

Police departments in California are using AI tools to help draft reports, and experts have raised concerns about the potential implications.