The attention mechanism, crucial in machine translation, helped RNNs cope with long input sequences and paved the way for Transformers. Self-attention in Transformers uses query, key, and value vectors to focus on important elements within a...
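As a rough illustration of the query, key, and value idea mentioned above, here is a minimal sketch of scaled dot-product attention in plain Python. It assumes the queries, keys, and values are the token vectors themselves (real Transformers first apply learned projections), and the names `softmax`, `attention`, and `tokens` are hypothetical, chosen only for this example.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Assumption: identity projections, so Q = K = V = the token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how strongly one token attends to every other token; the first token, for instance, puts more weight on itself and the third token than on the second, because their dot products with it are larger.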

Transformers are revolutionizing NLP with efficient self-attention mechanisms. Integrating Transformers into computer vision faces scalability challenges, but promising breakthroughs are on the...

Advances in AI have brought NLP and computer vision together, leading to image captioning models like the one in "Show and Tell." This model combines a CNN for image processing with an RNN for text generation, using GoogLeNet and...

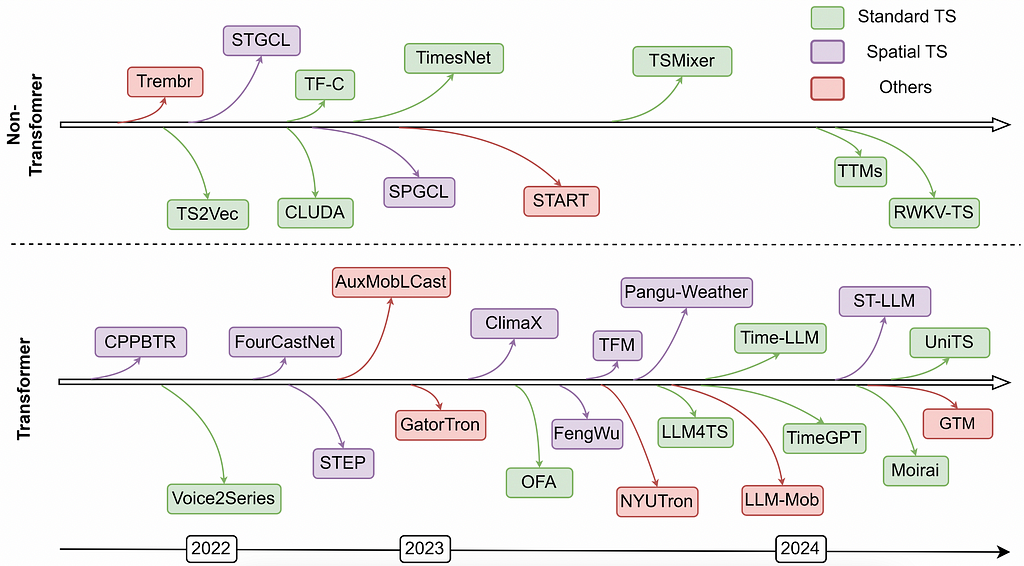

Foundation models such as Large Language Models (LLMs) are being adapted for time series modeling in the form of Large Time Series Foundation Models (LTSM). By leveraging the sequential structure that time series share with text, LTSM aims to learn from diverse time series data for tasks like outlier detection and classification, building on the success of LLMs in computational linguistic...

LSTMs, introduced in 1997, are making a comeback with xLSTMs as a potential rival to LLMs in deep learning. The ability to remember and forget information over time intervals sets LSTMs apart from standard RNNs, making them a valuable tool in language...
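The remember-and-forget behaviour described above comes from gates that scale the cell state. The following is a minimal sketch of a single scalar LSTM step, assuming the standard gate equations; the parameter names (`wf`, `uf`, `bf`, and so on) and the helper `make_params` are hypothetical and exist only for this illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell with parameters in dict p."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g   # keep part of the old memory, add part of the new
    h = o * math.tanh(c)     # expose a gated view of the memory
    return h, c

def make_params(bf, bi):
    # Hypothetical helper: all weights fixed, only the two gate biases vary.
    return dict(wf=0.0, uf=0.0, bf=bf, wi=0.0, ui=0.0, bi=bi,
                wo=0.0, uo=0.0, bo=0.0, wg=1.0, ug=0.0, bg=0.0)

# Forget gate pushed open, input gate pushed shut: the old memory is kept.
_, c_remember = lstm_step(x=5.0, h_prev=0.0, c_prev=0.7,
                          p=make_params(bf=20.0, bi=-20.0))

# Both gates pushed shut: the old memory is erased and nothing new is written.
_, c_forget = lstm_step(x=5.0, h_prev=0.0, c_prev=0.7,
                        p=make_params(bf=-20.0, bi=-20.0))
```

In a trained network the gate values are learned functions of the input and the previous hidden state rather than fixed biases; the extreme biases here only make the two regimes easy to see.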

The rise of tools like AutoAI may diminish the importance of traditional machine learning skills, but a deep understanding of the underlying principles of ML will still be in demand. This article delves into the mathematical foundations of Recurrent Neural Networks (RNNs) and explores their use in capturing sequential patterns in time series...