Congresswoman Valerie Foushee faces progressive challenger Nida Allam in Durham's datacenter-driven congressional primary. Datacenter politics are shaping US elections, with the outcome of this rematch drawing national attention.

Hundreds of schools have adopted an AI monitoring tool, finding that students prefer confiding in a chatbot over humans. A middle school counselor in Putnam County, Florida, receives alerts from an AI therapy platform that flags students at risk based on their chat messages.

OpenAI's CEO vows to prevent the US DoW from using ChatGPT for mass surveillance, addressing concerns about opportunism and privacy risks. Sam Altman gives assurances that the technology will not be used by defense intelligence agencies like the NSA.

AI, often used for mundane tasks, is now aiding US military aggression. Chris Stokel-Walker warns of risks in AI militarization, citing recent White House actions in Iran.

Retailers are tackling high return rates with virtual try-on tech like Amazon Nova Canvas, preserving details and reducing carbon emissions. Virtual try-on can be seamlessly integrated into ecommerce sites, mobile apps, and in-store kiosks, offering customers a personalized shopping experience.

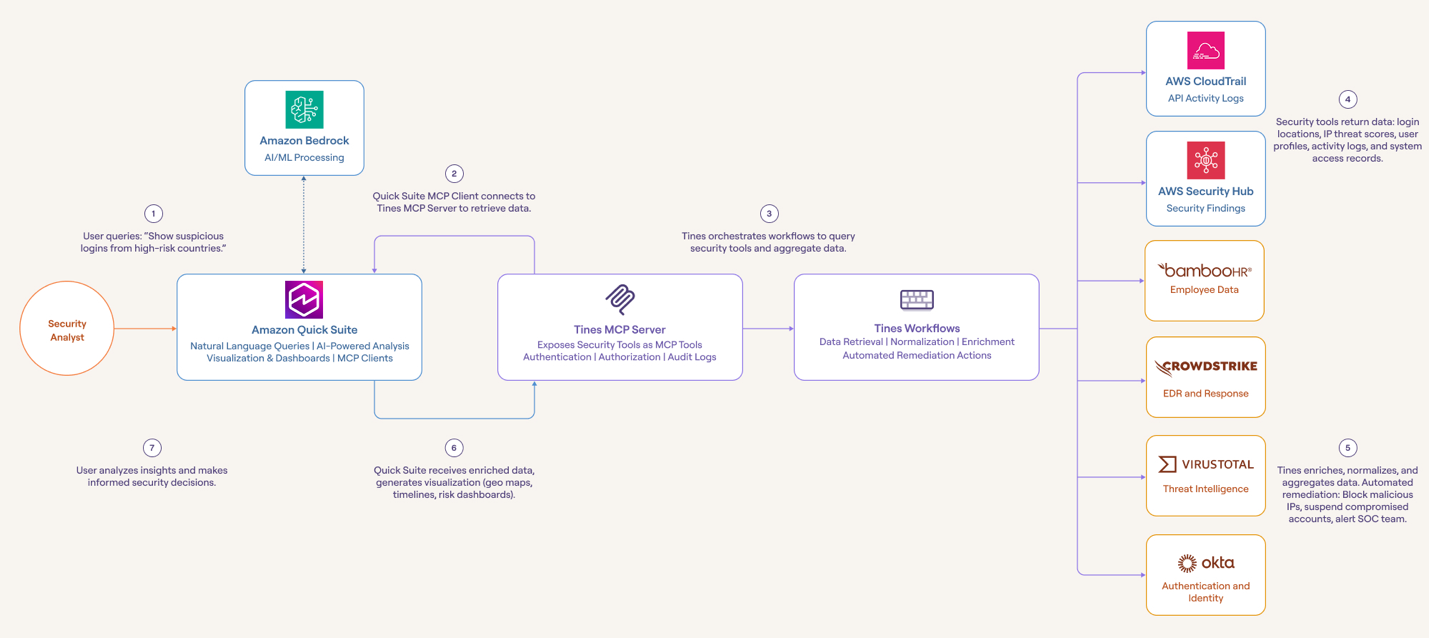

Automate security event response with Amazon Quick Suite and Tines. Integrate multiple tools for faster decision-making and AI-driven analysis.

News Corp CEO Robert Thomson discusses the company's AI partnership with Meta and the value of news organizations as AI 'input'. The deal is worth up to US$50m a year.

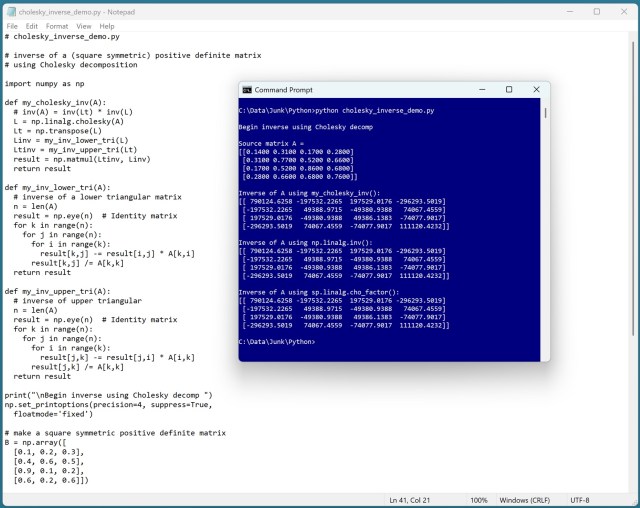

Machine learning code can use Cholesky decomposition to accurately compute the inverse of a symmetric positive definite matrix. The precision of the np.linalg.inv() function on such matrices may be surprisingly low, which makes alternative methods worth considering.
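
As a rough illustration of the idea, the sketch below inverts a symmetric positive definite matrix through its Cholesky factor using only NumPy. The function name `spd_inverse_cholesky` and the test matrix are assumptions made for this example, not taken from the summarized article, and the sketch makes no claim about how much precision is gained over np.linalg.inv() in any given case.

```python
import numpy as np


def spd_inverse_cholesky(a):
    """Invert a symmetric positive definite matrix via its Cholesky factor.

    Hypothetical helper for illustration only.
    """
    a = np.asarray(a, dtype=float)
    # Factor A = L @ L.T with L lower triangular.
    # np.linalg.cholesky raises LinAlgError if A is not positive definite.
    lower = np.linalg.cholesky(a)
    identity = np.eye(a.shape[0])
    # Solve L @ Y = I, so Y = inv(L). np.linalg.solve is a general solver;
    # a triangular solver (e.g. from SciPy) would exploit L's structure.
    lower_inv = np.linalg.solve(lower, identity)
    # inv(A) = inv(L).T @ inv(L)
    return lower_inv.T @ lower_inv


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    m = rng.standard_normal((4, 4))
    # m @ m.T is positive semidefinite; adding a multiple of I makes it SPD.
    a = m @ m.T + 4.0 * np.eye(4)
    a_inv = spd_inverse_cholesky(a)
    # Largest deviation of A @ inv(A) from the identity matrix.
    print(np.max(np.abs(a @ a_inv - np.eye(4))))
```

Comparing the printed residual against the same quantity computed with np.linalg.inv() on one's own matrices is a simple way to check whether the Cholesky route actually helps for a given problem.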

US military's rapid AI war planning in Iran raises concerns about human decision-making being bypassed. Anthropic's AI model, Claude, speeds up the kill chain in military strikes, signaling a new era of bombing.

Tech giants like Google, Microsoft, and Amazon are accused of using AI to control crop choices, impacting global food systems. Critics warn of companies undermining farmers' autonomy in food production, collaborating with industrial agriculture firms like IBM and Alibaba.

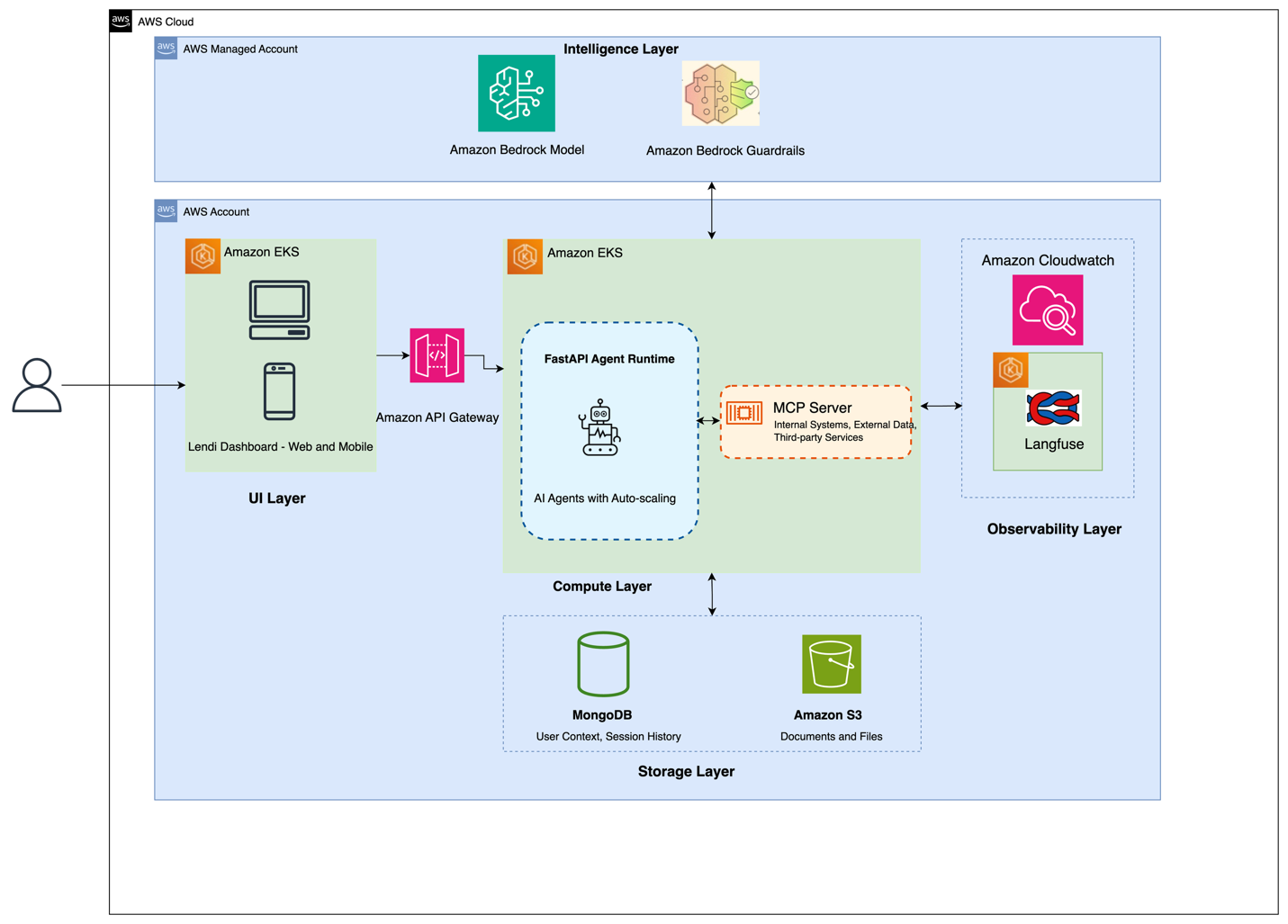

Lendi Group's AI-powered Guardian app monitors home loans, offers personalized insights, and simplifies refinancing. By using Amazon Bedrock's generative AI capabilities, Lendi Group aims to transform customer experiences while maintaining a human touch.

Claude rises to the top of the US and UK app store charts after the Pentagon blacklist, surging in popularity and surpassing OpenAI's ChatGPT.

Struggling with balancing generative AI safety? Amazon Bedrock Guardrails offer powerful tools for content filtering and protection. Best practices include selecting the right guardrail policies for efficient performance and application security.

A writer turned teacher grapples with AI's impact on English instruction, questioning the role of free online chatbots in student learning. The uncertainty of integrating AI into education adds a new layer of complexity to timeless pedagogical challenges.

Learn practical ways to work with AI while maintaining judgment, privacy, and humanity in the free course 'AI for the People.' Sign up for the six-week newsletter course today.