ChatGPT expands reach with questionable companions, causing concern.

PixArt-Sigma is a high-resolution diffusion transformer model with architectural improvements. AWS Trainium and AWS Inferentia chips enhance performance for running PixArt-Sigma.

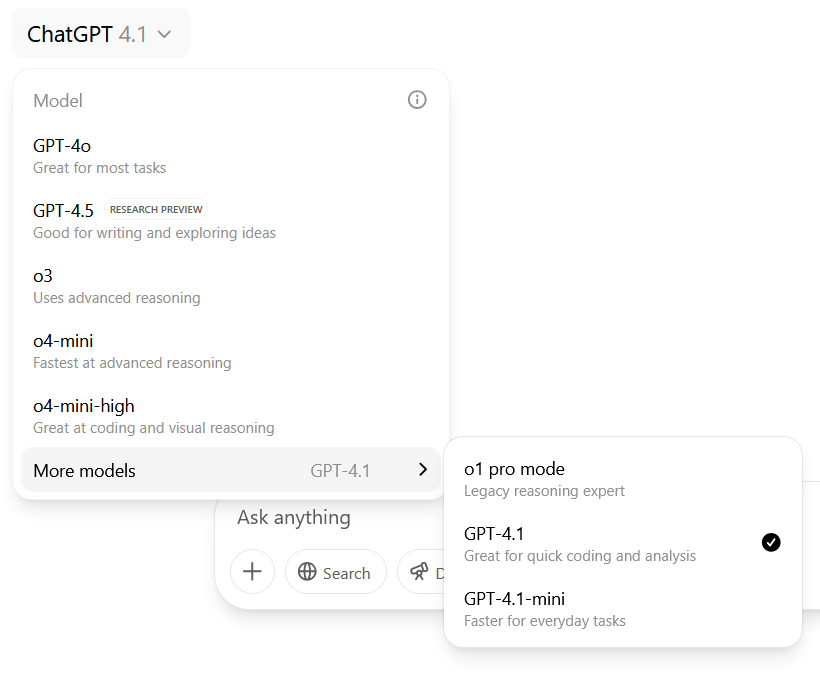

OpenAI introduces GPT-4.1 to ChatGPT, enhancing coding capabilities for subscribers. Confusion arises as users navigate the array of available AI models, sparking debate among novices and experts alike.

DeepSeek AI's DeepSeek-R1 model with 671 billion parameters showcases strong few-shot learning capabilities, prompting customization for various business applications. SageMaker HyperPod recipes streamline the fine-tuning process, offering optimized solutions for organizations seeking to enhance model performance and adaptability.

Elon Musk showcases Tesla Optimus robots at Saudi summit, announces Starlink deal for maritime and aviation in Saudi Arabia. Saudi minister praises Musk as a 'lifetime partner and friend' to the Kingdom.

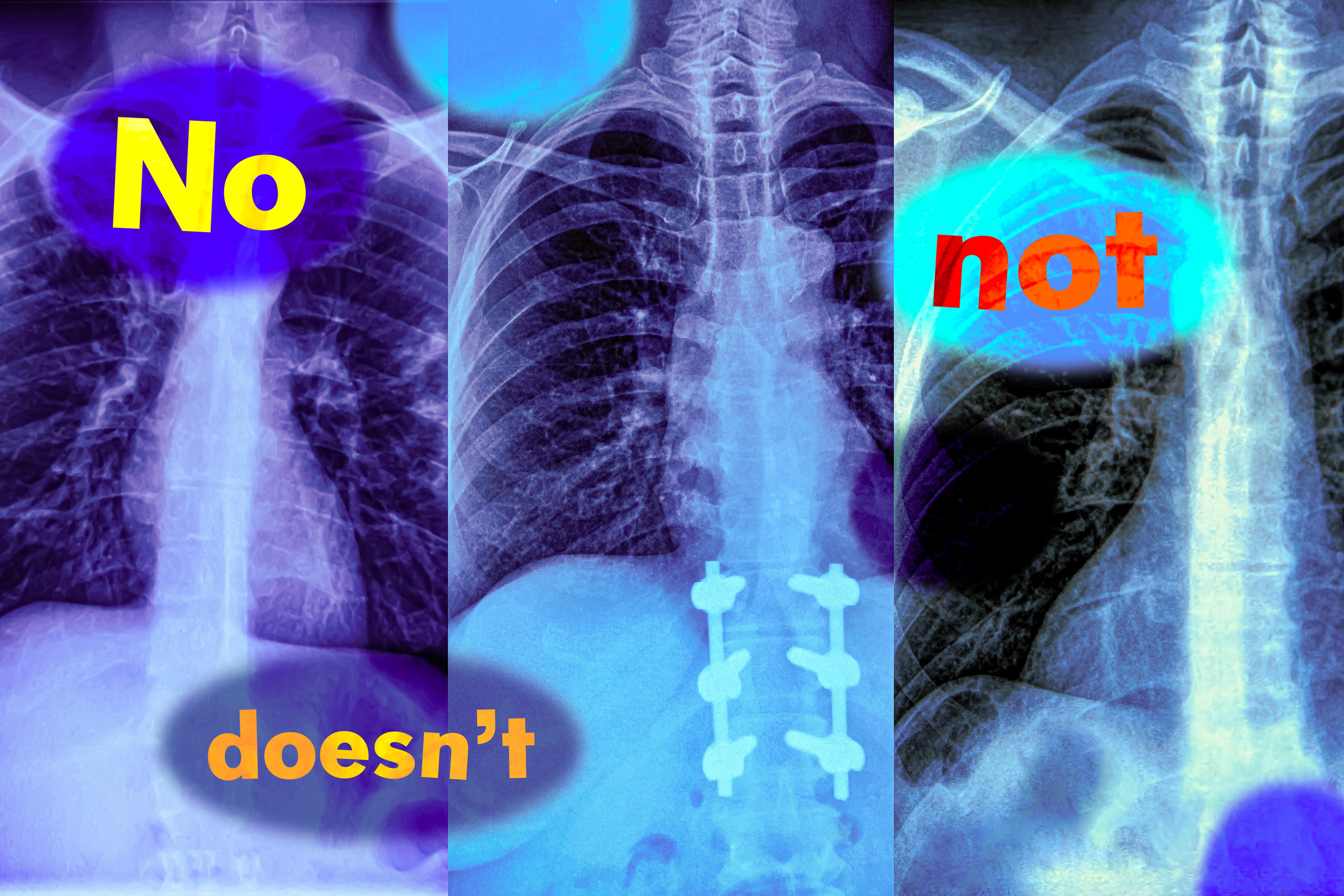

Vision-language models struggle with negation, impacting accuracy. MIT researchers urge caution in using these models blindly.

Apache Parquet is an open-source columnar storage format that offers efficient data compression, support across many programming languages, and complex data types. Unlike traditional row-based storage, Parquet's column-based layout lets a query read only the columns it needs, which speeds up reads and optimizes analytics workloads.
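
The difference between row-based and column-based layouts can be illustrated with a toy sketch in plain Python. This is not Parquet's on-disk format or API; the sample records, field names, and helper functions below are hypothetical and only show why a columnar layout helps analytics reads and compression.

```python
from itertools import groupby

# Hypothetical sample records; the field names are made up for illustration.
rows = [
    {"city": "Oslo", "year": 2024, "sales": 120},
    {"city": "Oslo", "year": 2024, "sales": 95},
    {"city": "Oslo", "year": 2025, "sales": 130},
    {"city": "Lima", "year": 2025, "sales": 80},
]


def to_columns(records):
    """Pivot a list of row dicts into one list of values per column."""
    return {name: [record[name] for record in records] for name in records[0]}


def run_length_encode(values):
    """Collapse runs of repeated values into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


columns = to_columns(rows)

# An analytics query such as "total sales" touches a single column
# instead of scanning every field of every row.
total_sales = sum(columns["sales"])

# Values in one column share a type and often repeat, so they compress well.
encoded_city = run_length_encode(columns["city"])

print(total_sales)
print(encoded_city)
```

Real columnar formats combine several encodings and add metadata on top of this idea; the sketch only captures the basic layout choice.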

US Republicans seek to block state laws regulating AI for 10 years through a budget bill, aiming to prevent guardrails on automated decision-making systems. The proposed provision in the House bill would restrict any state or local regulation of AI models or systems unless it is meant to facilitate their deployment.

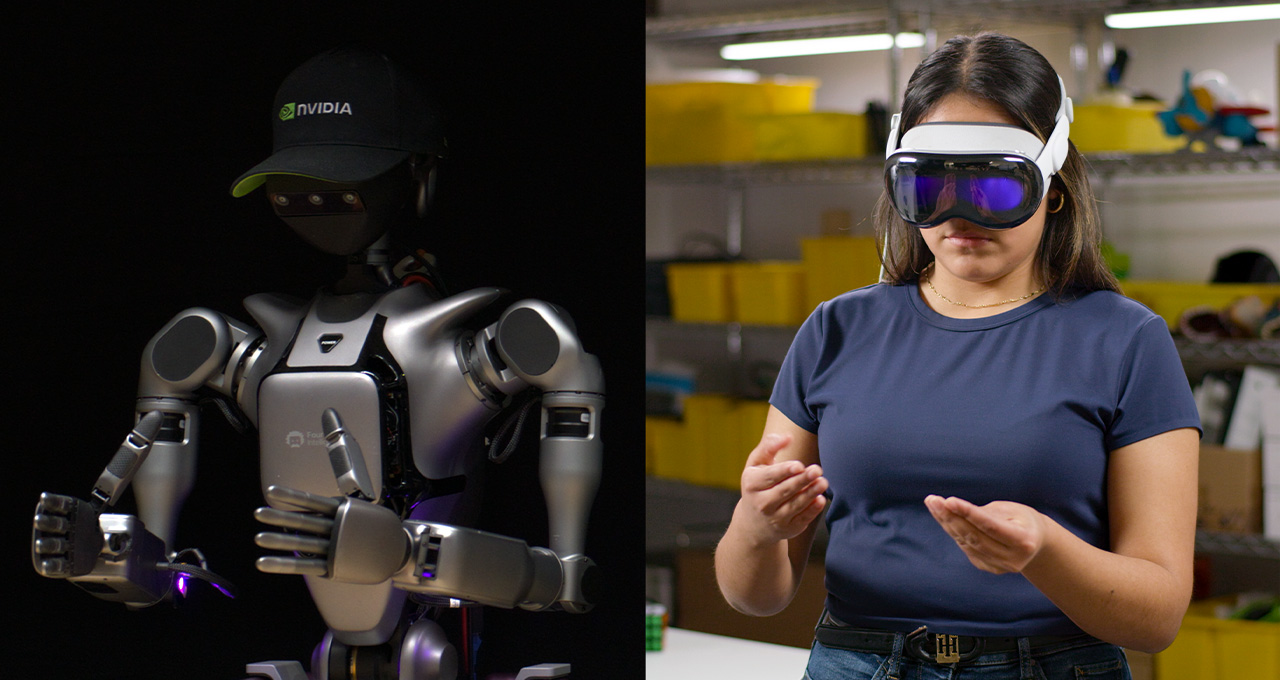

AI-powered robots showcased at Automate by KUKA, Standard Bots, UR, and Vention use NVIDIA technologies for industrial automation. NVIDIA's synthetic data blueprint accelerates the robot training process, changing how robots are developed for various tasks.

Nvidia to sell hundreds of thousands of AI chips in Saudi Arabia, while Cisco partners with UAE's G42 for AI sector development. Trump touts $600bn in Saudi commitments to US tech firms during Gulf states tour.

AI tool Consult will analyze responses 1,000x faster than humans, saving time and money for the Scottish government. The system promises to streamline public consultations by efficiently processing feedback, starting with one on non-surgical cosmetic procedures.

MIT's Shaping the Future of Work Initiative evolves into the James M. and Cathleen D. Stone Center on Inequality, focusing on wealth distribution and technology's impact on the workforce. Led by prominent scholars, the center aims to advance research, inform policymakers, and engage the public on critical economic issues.

Hardware choices and training time impact energy, water, and carbon footprints during AI model training. Longer training time can decrease energy efficiency by 0.03% per hour, highlighting environmental costs of AI adoption.

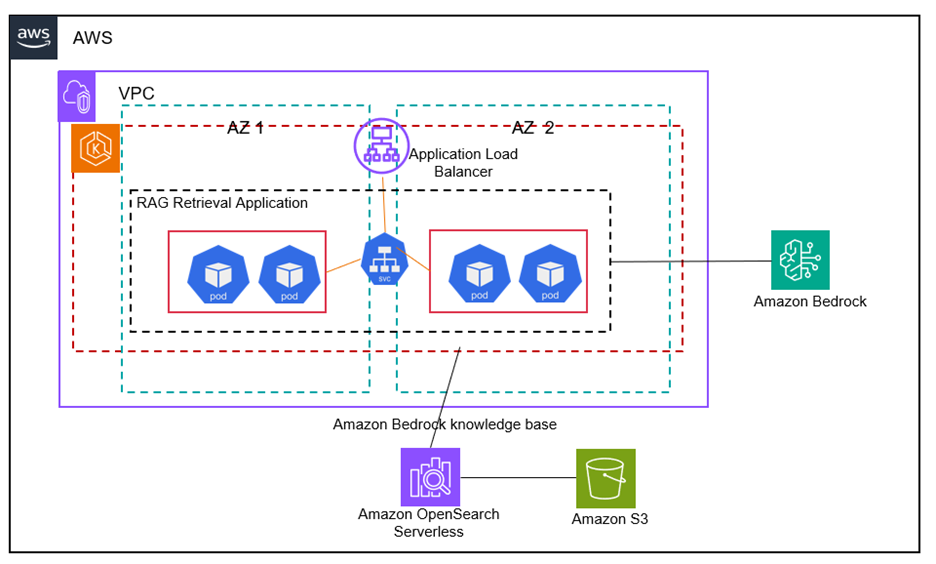

Amazon EKS and Bedrock create scalable, secure RAG solutions for generative AI apps on AWS, leveraging additional data for accurate responses. Using EKS managed node groups, the solution automates resource provisioning and scales efficiently based on demand, enhancing performance and security.
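
The retrieval step behind a RAG application can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the AWS solution itself: the sample documents, the word-count similarity, and the `retrieve` and `build_prompt` helpers are hypothetical, and the sketch stops before any model is called.

```python
import math
from collections import Counter

# Hypothetical knowledge-base snippets standing in for an organization's data.
documents = [
    "Managed node groups automate provisioning of worker nodes.",
    "Columnar file formats speed up analytics queries.",
    "Audiobooks can be narrated by synthetic voices.",
]


def tokenize(text):
    """Lowercase the text and strip basic punctuation from each word."""
    return [word.strip(".,?!").lower() for word in text.split()]


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, docs, k=1):
    """Return the k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(
        docs,
        key=lambda doc: cosine(query, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, docs):
    """Prepend the retrieved context to the question before generation."""
    context = "\n".join(retrieve(question, docs))
    return f"Context:\n{context}\n\nQuestion: {question}"


question = "How are worker nodes provisioned?"
prompt = build_prompt(question, documents)
print(prompt)
```

In a production system the word-count similarity would typically be replaced by vector embeddings and a dedicated index, and the assembled prompt would be sent to a hosted model.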

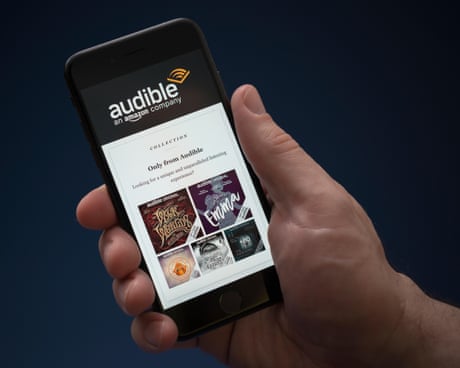

Audible, an Amazon brand, will introduce over 100 AI-generated voices for audiobooks in multiple languages. AI narration will be offered to select publishers, with translation capabilities to come.