MIT researchers have developed a new diagram-based language for optimizing deep-learning algorithms, reducing complex optimization tasks to drawings that fit on a napkin. The method targets efficient resource usage, particularly for large AI models such as ChatGPT, by using diagrams to represent parallelized operations on GPUs from companies like NVIDIA.

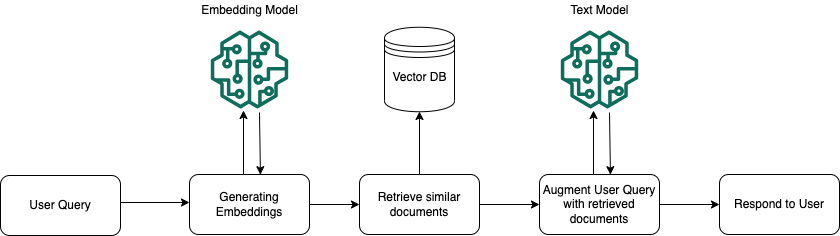

Retrieval-augmented generation (RAG) applications improve AI outputs by supplying contextually relevant information, but they require careful security measures to protect sensitive data. AWS outlines generative AI security strategies, including for applications built on Amazon Bedrock Knowledge Bases, to safeguard privacy and build appropriate threat models.

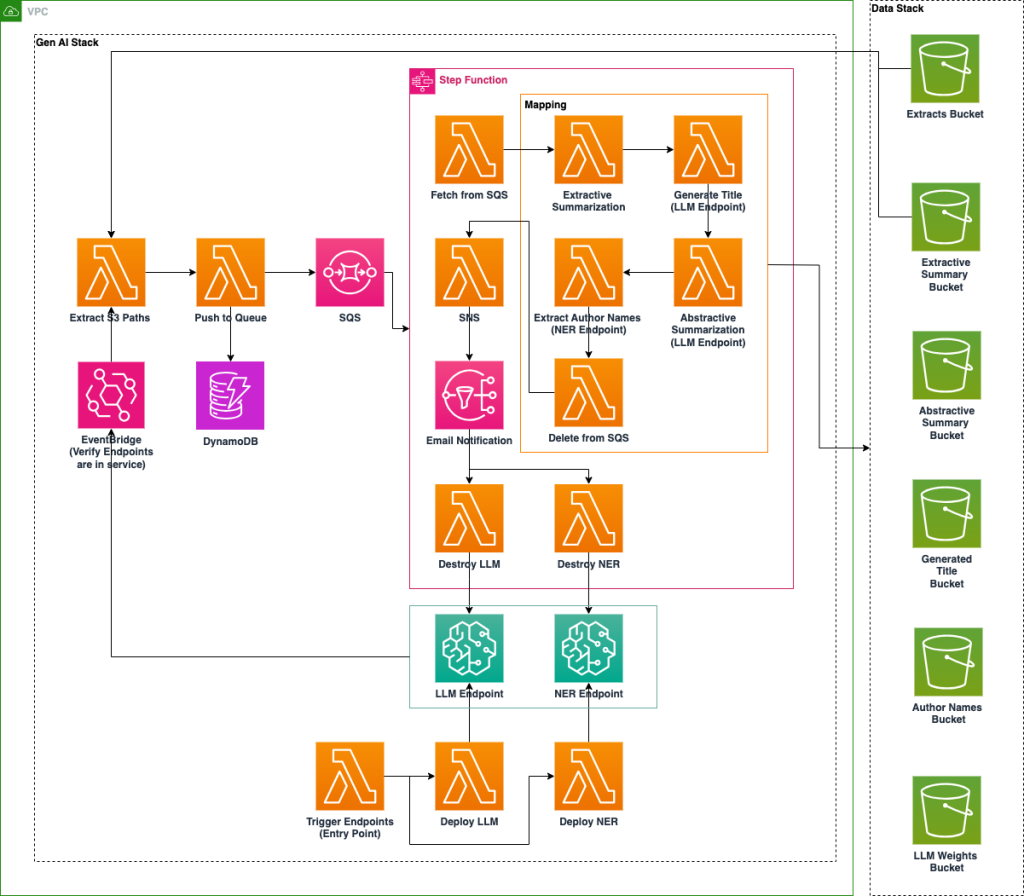
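
One common safeguard in a RAG pipeline is to filter candidate documents by the caller's permissions before any text reaches the model. The Python sketch below is illustrative only: the `Document` type, its `allowed_groups` field, and the word-overlap score (a stand-in for real vector similarity) are hypothetical and are not the Amazon Bedrock Knowledge Bases API.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """A hypothetical indexed chunk carrying access-control metadata."""
    doc_id: str
    text: str
    allowed_groups: set = field(default_factory=set)


def overlap_score(query: str, text: str) -> int:
    """Toy relevance score: number of shared lowercase words."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query: str, docs: list, user_groups: set, k: int = 2) -> list:
    """Return the top-k documents the user is allowed to see.

    Access control is applied BEFORE ranking, so restricted text can never
    be passed to the language model as context.
    """
    permitted = [d for d in docs if d.allowed_groups & user_groups]
    ranked = sorted(permitted, key=lambda d: overlap_score(query, d.text), reverse=True)
    return ranked[:k]


docs = [
    Document("hr-1", "salary bands for engineering staff", {"hr"}),
    Document("pub-1", "engineering onboarding guide for new staff", {"all"}),
    Document("pub-2", "office holiday schedule", {"all"}),
]

results = retrieve("engineering staff salary", docs, user_groups={"all"})
print([d.doc_id for d in results])  # ['pub-1', 'pub-2']
```

Filtering before ranking, rather than after generation, means a prompt-injection attempt cannot talk the model into revealing text it was never given. In production this filter would typically be pushed into the retrieval service itself (for example, as a metadata filter) rather than enforced in application code.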

A U.S. national laboratory has implemented an AI platform on Amazon SageMaker that makes its archival data more accessible using named entity recognition (NER) and large language models (LLMs). The cost-optimized system automates metadata enrichment, document classification, and summarization, improving how documents are organized and retrieved.

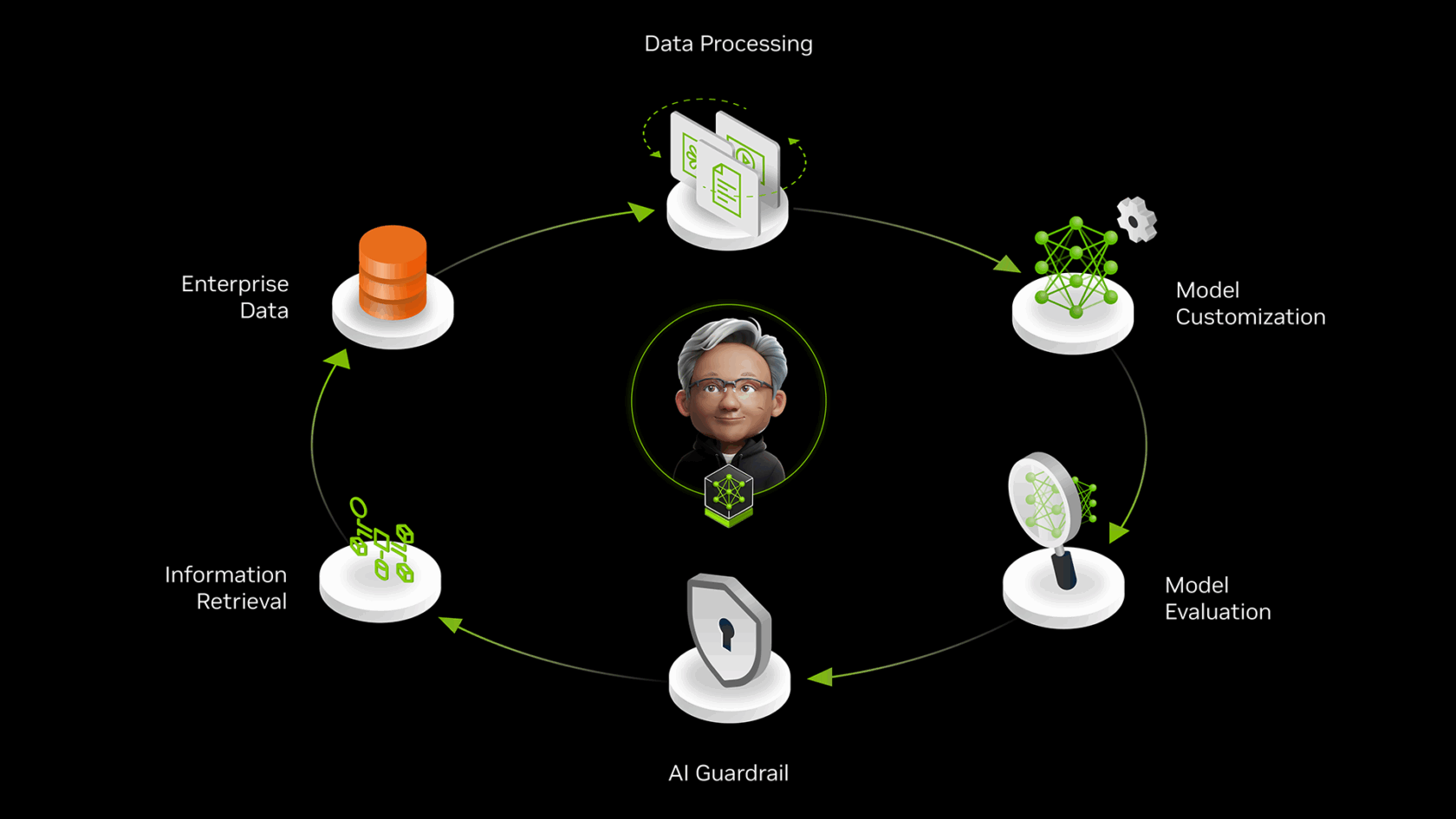

NVIDIA NeMo microservices let enterprise IT teams build AI teammates that boost productivity by tapping into data flywheels, feedback loops in which usage data continuously improves the models. Tools such as NeMo Customizer and NeMo Evaluator help optimize models for accuracy and efficiency while strengthening compliance and security.

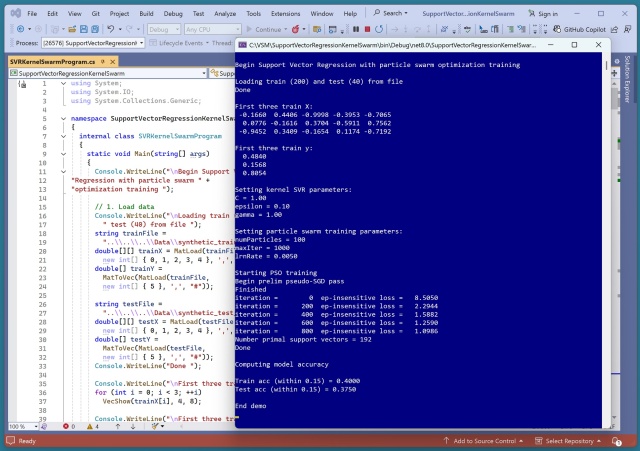

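Kernelized support vector regression (SVR), trained with particle swarm optimization (PSO), handles non-linear data by using a radial basis function (RBF) kernel. Combining the epsilon-insensitive loss with PSO makes for a challenging but promising system.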
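
A minimal NumPy sketch of the idea follows. Rather than solving the usual SVR dual quadratic program, it represents the model as a kernel expansion f(x) = Σⱼ βⱼ·K(xⱼ, x) + b and uses global-best PSO to minimize the mean epsilon-insensitive loss plus an RKHS-norm penalty βᵀKβ. This formulation, the hyperparameters (`gamma`, `eps`, `lam`, swarm size, inertia `w`, coefficients `c1`/`c2`), and the sine-wave data are assumptions for illustration, not necessarily what the original article uses.

```python
import numpy as np


def rbf_kernel(A, B, gamma=1.0):
    """RBF kernel matrix: K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq_dists = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * sq_dists)


def eps_insensitive_loss(y_true, y_pred, eps=0.1):
    """Errors within eps cost nothing; larger errors cost |error| - eps."""
    return np.maximum(0.0, np.abs(y_true - y_pred) - eps)


def objective(theta, K, y, eps, lam):
    """Mean epsilon-insensitive loss plus the RKHS-norm penalty beta^T K beta."""
    beta, b = theta[:-1], theta[-1]
    pred = K @ beta + b
    return eps_insensitive_loss(y, pred, eps).mean() + lam * beta @ K @ beta


def pso_minimize(f, dim, n_particles=30, iters=300, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Global-best particle swarm optimization of f over R^dim."""
    rng = np.random.default_rng(seed)
    pos = rng.uniform(-1.0, 1.0, (n_particles, dim))
    vel = np.zeros_like(pos)
    pbest = pos.copy()
    pbest_val = np.array([f(p) for p in pos])
    gbest = pbest[pbest_val.argmin()].copy()
    for _ in range(iters):
        r1, r2 = rng.random((2, n_particles, dim))
        # Inertia, plus a pull toward each particle's best and the swarm's best
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = pos + vel
        vals = np.array([f(p) for p in pos])
        improved = vals < pbest_val
        pbest[improved] = pos[improved]
        pbest_val[improved] = vals[improved]
        gbest = pbest[pbest_val.argmin()].copy()
    return gbest, pbest_val.min()


def fit_kernel_svr_pso(X, y, gamma=1.0, eps=0.1, lam=1e-3, **pso_kwargs):
    """Fit the kernel expansion with PSO; return a predict function and the final objective."""
    K = rbf_kernel(X, X, gamma)
    theta, best = pso_minimize(lambda t: objective(t, K, y, eps, lam), X.shape[0] + 1, **pso_kwargs)
    beta, b = theta[:-1], theta[-1]

    def predict(X_new):
        return rbf_kernel(X_new, X, gamma) @ beta + b

    return predict, best


# Non-linear toy data: y = sin(x) sampled on [0, 2*pi]
X = np.linspace(0, 2 * np.pi, 20).reshape(-1, 1)
y = np.sin(X).ravel()
predict, final_obj = fit_kernel_svr_pso(X, y)
```

PSO is derivative-free, which sidesteps the kink in the epsilon-insensitive loss at |error| = eps, but it usually needs far more objective evaluations than a dedicated QP solver, and its cost grows with the number of training points because each one adds a coefficient to optimize.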

Cybersecurity leaders face tough questions about how likely a breach is and what it would cost. Yet only 15% use quantitative risk modeling, which limits how precisely they can answer those questions.
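
Quantitative risk modeling often starts with a Monte Carlo simulation of annual loss. The sketch below is a deliberately simple model, not one taken from the article: in each simulated year a breach occurs with an assumed probability, and its cost is drawn from an assumed lognormal distribution. All parameter values are hypothetical placeholders.

```python
import math
import random


def simulate_annual_losses(p_breach, median_cost, sigma, n_years=100_000, seed=42):
    """Simulate one loss figure per year: 0 if no breach, else a lognormal cost."""
    rng = random.Random(seed)
    mu = math.log(median_cost)  # the median of a lognormal distribution is exp(mu)
    losses = []
    for _ in range(n_years):
        if rng.random() < p_breach:
            losses.append(rng.lognormvariate(mu, sigma))
        else:
            losses.append(0.0)
    return losses


def summarize(losses, percentile=0.95):
    """Return the expected annual loss and a high-percentile annual loss."""
    ordered = sorted(losses)
    idx = min(len(ordered) - 1, int(percentile * len(ordered)))
    return sum(losses) / len(losses), ordered[idx]


# Hypothetical inputs: 10% yearly breach probability, $2M median breach cost
losses = simulate_annual_losses(p_breach=0.10, median_cost=2_000_000, sigma=1.0)
expected_loss, p95_loss = summarize(losses)
analytic = 0.10 * 2_000_000 * math.exp(1.0 ** 2 / 2)  # p * E[lognormal cost]
print(f"expected annual loss ~ ${expected_loss:,.0f} (analytic ${analytic:,.0f})")
print(f"95th-percentile annual loss ~ ${p95_loss:,.0f}")
```

The expected loss answers "what does this risk cost us on average?", while the 95th-percentile figure answers "how bad could a bad year be?". With a 10% breach probability, that percentile falls inside the breach-cost distribution (at roughly the median breach cost) rather than at zero.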

Publishers and writers are backing a new collective licence from UK licensing bodies that will allow authors to be compensated when their work is used to train AI models. The Copyright Licensing Agency, led by the Publishers’ Licensing Services and the Authors’ Licensing and Collecting Society, will introduce the licence in the summer.

MIT researchers have developed a machine-learning model that predicts the transition states of chemical reactions in less than a second, which could aid the design of sustainable processes for making useful compounds. The model could streamline the design of pharmaceuticals and fuels, making it easier for chemists to use abundant natural resources efficiently.

DeepSeek-R1, a newly launched model that rivals offerings from Meta and OpenAI, provides advanced reasoning capabilities at a fraction of the cost. Explore how to evaluate DeepSeek-R1's distilled models on recognized benchmarks such as GPQA-Diamond.

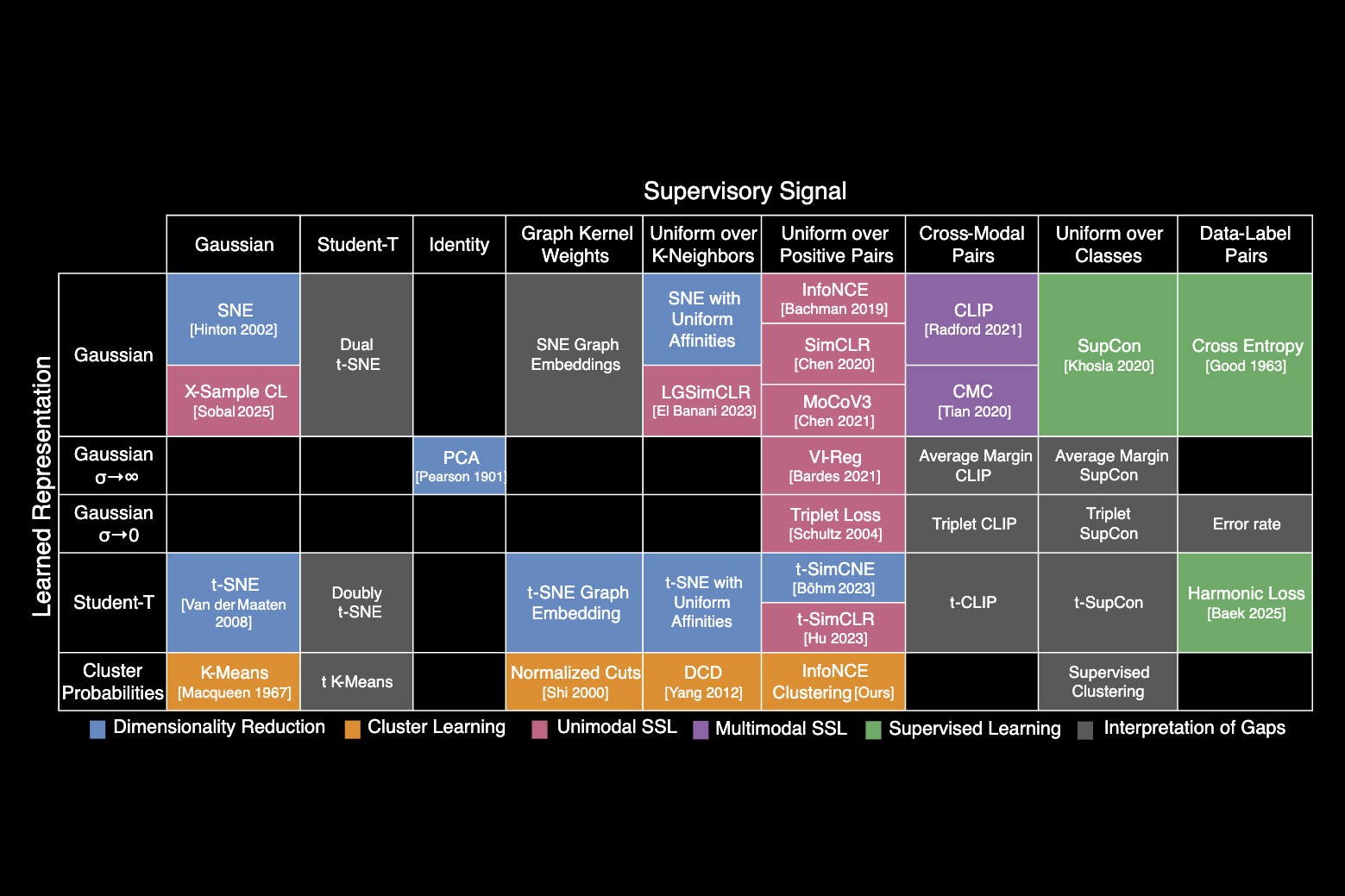
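
GPQA-Diamond is a four-option multiple-choice benchmark, so scoring usually reduces to extracting a letter answer from each model response and computing accuracy. The sketch below assumes the model is prompted to end with a line like `Answer: C`; that convention, the `extract_choice` helper, and the sample responses are hypothetical and are not taken from the article or from any specific evaluation harness.

```python
import re

# Hypothetical convention: the model is prompted to finish with "Answer: <letter>"
ANSWER_PATTERN = re.compile(r"Answer:\s*\(?([ABCD])\)?", re.IGNORECASE)


def extract_choice(response):
    """Return the last A-D letter that follows 'Answer:', or None if there is none."""
    matches = ANSWER_PATTERN.findall(response)
    return matches[-1].upper() if matches else None


def accuracy(responses, gold):
    """Fraction of responses whose extracted letter matches the gold letter.

    Unparseable responses count as wrong rather than being skipped, so
    formatting failures lower the score instead of inflating it.
    """
    correct = sum(extract_choice(r) == g for r, g in zip(responses, gold))
    return correct / len(gold)


responses = [
    "Working through the energy levels step by step... Answer: B",
    "Comparing the spectra, option C fits best. Answer: (c)",
    "I am not sure.",
    "Answer: A",
]
gold = ["B", "C", "D", "D"]
print(accuracy(responses, gold))  # 0.5
```

Taking the last match matters for reasoning models, which may mention several candidate answers while thinking before committing to one.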

MIT researchers have developed a periodic table of machine-learning algorithms that reveals connections between methods and a single equation unifying them. The table makes it possible to create new AI models by combining elements from different methods.

Testing is crucial for catching problems, whether in car blinkers or in software code. Unit, integration, and end-to-end tests each play a key role in ensuring functionality and reliability.

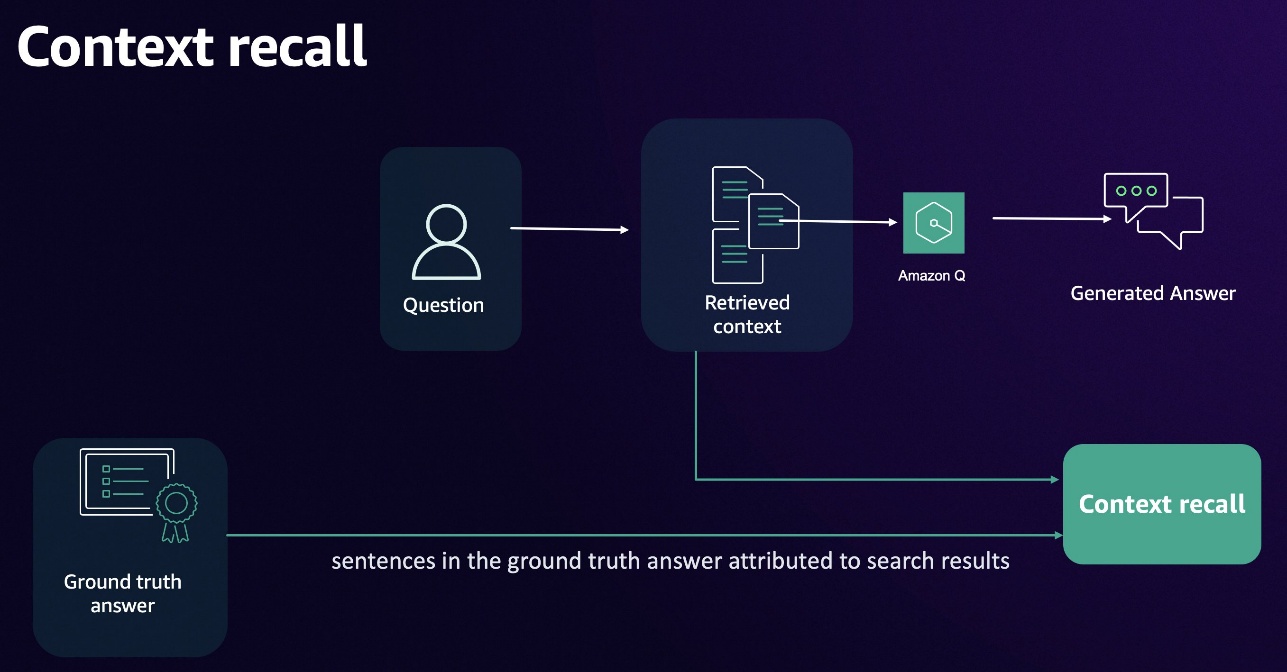
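
To make the blinker analogy concrete, here is a minimal unit test written with Python's built-in `unittest` framework. The `Blinker` class is hypothetical: a turn signal whose lamp toggles on each timer tick while active and goes dark when cancelled. A unit test like this checks one component in isolation; integration and end-to-end tests would exercise it together with the rest of the system.

```python
import unittest


class Blinker:
    """Hypothetical turn signal: the lamp toggles on every tick while active."""

    def __init__(self):
        self.active = False
        self.lamp_on = False

    def switch(self, active):
        self.active = active
        if not active:
            self.lamp_on = False  # the lamp must go dark when the signal is cancelled

    def tick(self):
        if self.active:
            self.lamp_on = not self.lamp_on


class BlinkerTest(unittest.TestCase):
    def test_lamp_alternates_while_active(self):
        b = Blinker()
        b.switch(True)
        states = []
        for _ in range(4):
            b.tick()
            states.append(b.lamp_on)
        self.assertEqual(states, [True, False, True, False])

    def test_lamp_stays_off_when_inactive(self):
        b = Blinker()
        for _ in range(3):
            b.tick()
        self.assertFalse(b.lamp_on)

    def test_cancelling_turns_lamp_off(self):
        b = Blinker()
        b.switch(True)
        b.tick()  # the lamp is now on
        b.switch(False)
        self.assertFalse(b.lamp_on)
```

Saved as, say, `test_blinker.py`, the tests run with `python -m unittest test_blinker`. The third test targets an edge case (cancelling mid-blink) that is easy to miss by eye, which is exactly where automated tests earn their keep.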

Amazon Q Business offers companies a fully managed RAG solution, and this article focuses on implementing an evaluation framework for it. It discusses the challenges of assessing retrieval accuracy and answer quality and highlights key metrics for evaluating a generative AI solution.

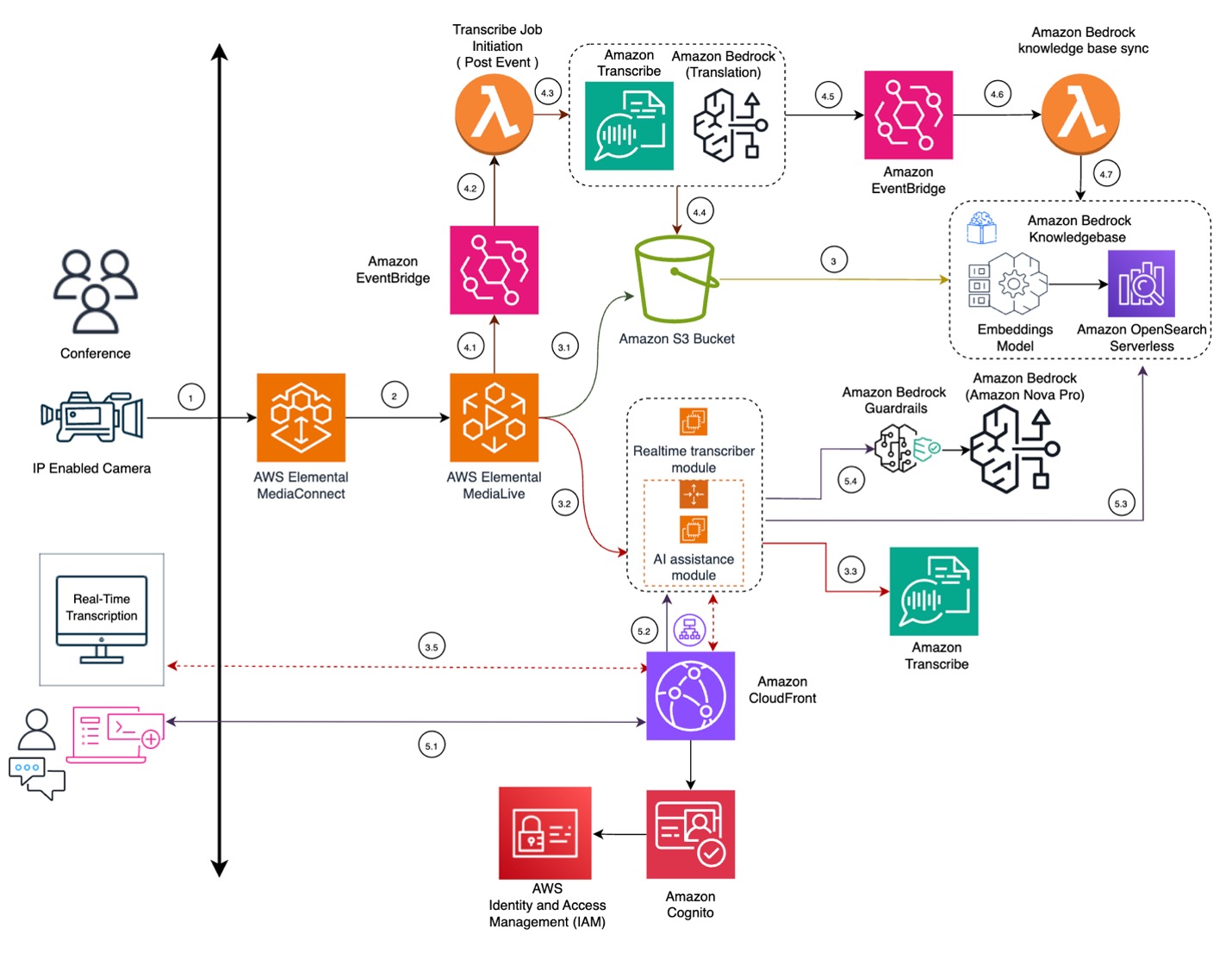
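
Two widely used retrieval metrics are recall@k (did the relevant passages appear in the top k results?) and mean reciprocal rank, or MRR (how high did the first relevant passage rank?). The sketch below computes both from ranked document IDs; the choice of metrics and the sample data are illustrative assumptions and may not match the metrics the article uses for Amazon Q Business.

```python
def recall_at_k(retrieved, relevant, k):
    """Fraction of the relevant documents that appear in the top-k retrieved list."""
    if not relevant:
        return 0.0
    hits = set(retrieved[:k]) & set(relevant)
    return len(hits) / len(relevant)


def reciprocal_rank(retrieved, relevant):
    """1 / rank of the first relevant document (1-based), or 0 if none was retrieved."""
    for rank, doc_id in enumerate(retrieved, start=1):
        if doc_id in relevant:
            return 1.0 / rank
    return 0.0


def evaluate(queries, k=3):
    """Average recall@k and MRR over (retrieved, relevant) pairs."""
    n = len(queries)
    mean_recall = sum(recall_at_k(r, rel, k) for r, rel in queries) / n
    mrr = sum(reciprocal_rank(r, rel) for r, rel in queries) / n
    return mean_recall, mrr


# Hypothetical evaluation set: (ranked IDs returned by the retriever, gold relevant IDs)
queries = [
    (["d3", "d1", "d7"], {"d1"}),
    (["d2", "d5", "d9"], {"d4", "d5"}),
    (["d8", "d6", "d4"], {"d0"}),
]
print(evaluate(queries))
```

These metrics only cover the retrieval half. Answer quality (for example, faithfulness to the retrieved passages) cannot be read off document IDs and is usually judged separately, by human reviewers or an LLM-as-judge setup.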

Infosys Consulting, in partnership with Amazon Web Services (AWS), developed Infosys Event AI to improve knowledge sharing at events. Event AI offers real-time language translation, transcription, and knowledge retrieval so that valuable insights are accessible to all attendees, turning event content into a searchable knowledge asset. By using AWS services such as AWS Elemental MediaLive and Amazon Nova Pro, ...

AI data centers are moving to liquid-cooled systems such as the NVIDIA GB200 NVL72 and GB300 NVL72 to manage heat and energy costs efficiently and achieve significant cost savings. Liquid cooling enables higher compute density, greater revenue potential, and up to 300x better water efficiency than traditional air-cooled architectures, changing how data centers operate.

Feature selection is crucial for maximizing model performance. Regularization helps prevent overfitting by penalizing model complexity.
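
L1 regularization (the lasso) ties the two ideas together: its penalty drives some coefficients exactly to zero, so it selects features while it fits. The NumPy sketch below implements the lasso by cyclic coordinate descent with soft-thresholding, minimizing (1/2n)‖y − Xw‖² + α‖w‖₁. The synthetic data (only the first two of five features matter), the penalty strength `alpha`, and the iteration count are illustrative assumptions.

```python
import numpy as np


def soft_threshold(z, t):
    """Shrink z toward zero by t; values with |z| <= t become exactly zero."""
    return np.sign(z) * max(abs(z) - t, 0.0)


def lasso_coordinate_descent(X, y, alpha, n_iters=200):
    """Minimize (1/2n)||y - Xw||^2 + alpha * ||w||_1 by cyclic coordinate descent.

    Assumes X and y are centered, so no intercept is fitted.
    """
    n, d = X.shape
    w = np.zeros(d)
    col_sq = (X ** 2).sum(axis=0) / n  # (1/n) * ||X_j||^2 for each column
    for _ in range(n_iters):
        for j in range(d):
            # Partial residual: prediction error with feature j's contribution removed
            residual = y - X @ w + X[:, j] * w[j]
            rho = X[:, j] @ residual / n
            w[j] = soft_threshold(rho, alpha) / col_sq[j]
    return w


rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))
true_w = np.array([3.0, -2.0, 0.0, 0.0, 0.0])  # only features 0 and 1 matter
y = X @ true_w + 0.1 * rng.standard_normal(200)
X -= X.mean(axis=0)
y -= y.mean()

w = lasso_coordinate_descent(X, y, alpha=0.1)
print(np.round(w, 2))
```

Raising `alpha` zeroes out more coefficients (and shrinks the surviving ones more). L2 (ridge) regularization, by contrast, shrinks coefficients but rarely makes them exactly zero, so it curbs overfitting without performing feature selection.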