Nvidia faces a $5.5bn hit after a US ban on sales of its H20 AI chip in China, and its shares plummeted in after-hours trading. The company now requires a special licence to sell the chip in the Chinese market, which will affect future sales.

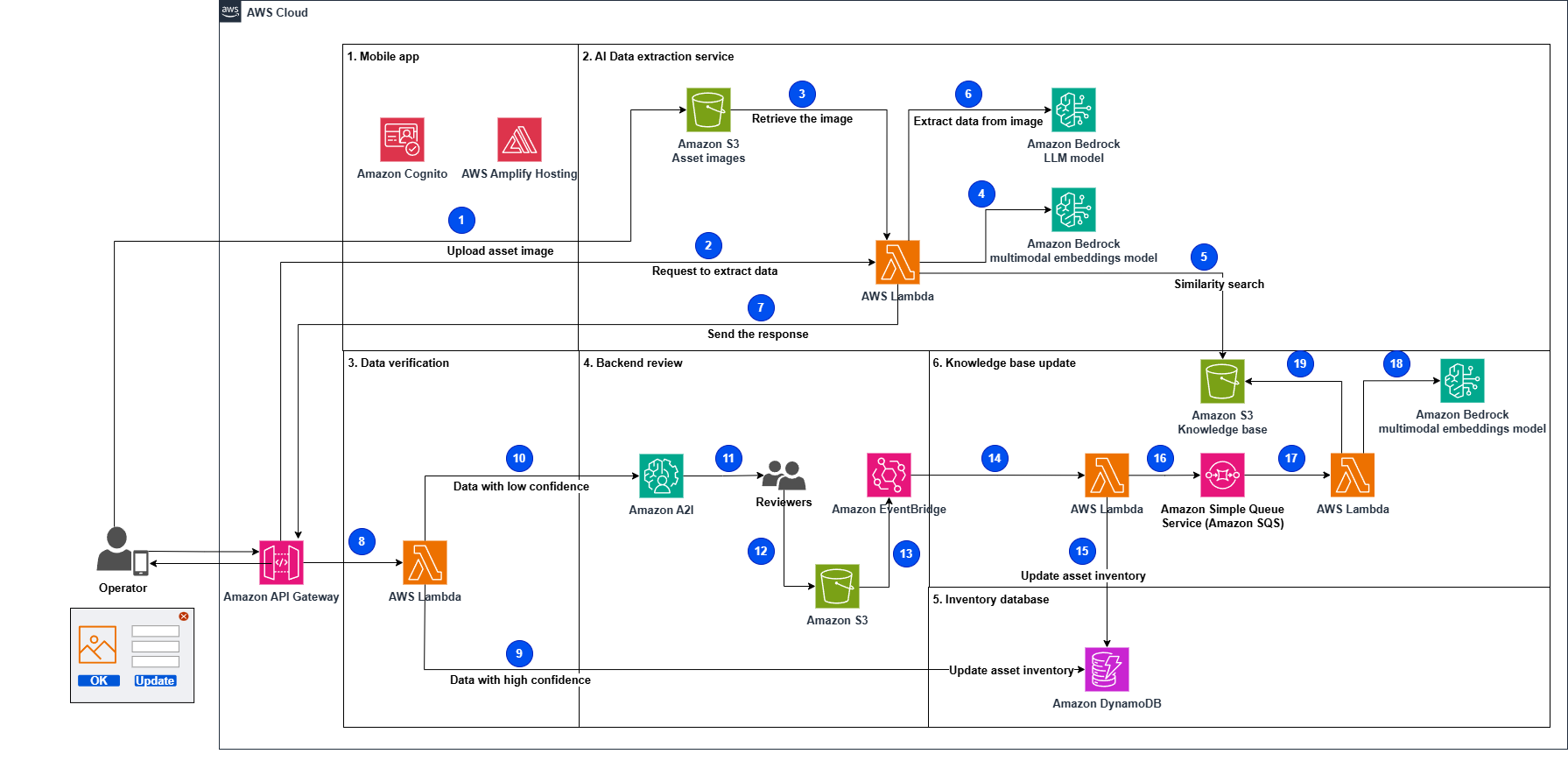

Using generative AI and large language models, electricity providers can streamline asset inventory management by using computer vision to extract data from asset labels automatically. The solution uses Amazon Bedrock with Anthropic's Claude 3 to simplify the process, letting field technicians update inventory databases with accurate information.
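As a rough illustration of the label-extraction step, here is a minimal sketch, not the solution's actual code. It assumes the Anthropic Messages request format that Amazon Bedrock's `InvokeModel` API accepts for Claude 3 models; the model ID, the prompt, and the extracted field names (manufacturer, model number, serial number) are hypothetical examples. The client is passed in (a boto3 `bedrock-runtime` client in practice), so the request and response handling can be tested without AWS credentials.

```python
import base64
import json

# Example Claude 3 model ID on Amazon Bedrock (assumption; availability varies by Region).
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"

# Hypothetical prompt; adapt the field names to the labels you actually photograph.
PROMPT = (
    "Extract the manufacturer, model number and serial number from this "
    "equipment label. Reply with a JSON object only."
)


def build_label_request(image_bytes: bytes, media_type: str = "image/jpeg") -> str:
    """Build an Anthropic Messages request body (JSON string) for InvokeModel."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 512,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        },
                    },
                    {"type": "text", "text": PROMPT},
                ],
            }
        ],
    }
    return json.dumps(body)


def parse_label_response(response_body) -> dict:
    """Join the model's text blocks and parse them as JSON (assumes the model obeyed the prompt)."""
    payload = json.loads(response_body)
    text = "".join(block["text"] for block in payload["content"] if block["type"] == "text")
    return json.loads(text)


def extract_label_fields(bedrock_runtime, image_bytes: bytes) -> dict:
    """Send one label photo to Claude 3 via a boto3 'bedrock-runtime' client."""
    response = bedrock_runtime.invoke_model(
        modelId=MODEL_ID,
        body=build_label_request(image_bytes),
        contentType="application/json",
        accept="application/json",
    )
    return parse_label_response(response["body"].read())
```

In practice you would call `extract_label_fields(boto3.client("bedrock-runtime"), photo_bytes)` and write the returned fields to the inventory database. A production version would also need to handle replies that are not valid JSON.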

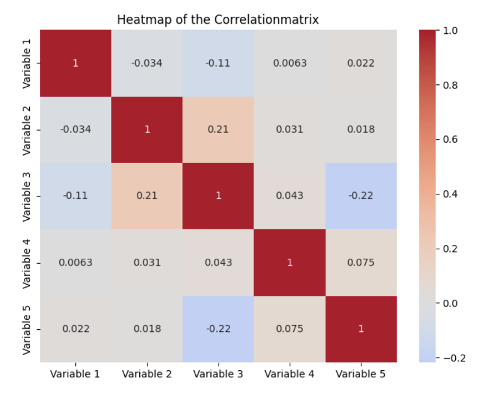

Multicollinearity in regression models can make coefficient estimates unstable. The Variance Inflation Factor (VIF) helps detect and measure this issue.
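The VIF for predictor $j$ is $1 / (1 - R_j^2)$, where $R_j^2$ comes from regressing that predictor on all the others. The sketch below computes it with plain NumPy least squares. It assumes the predictors are the columns of a 2-D array and adds an intercept to each auxiliary regression. A common rule of thumb treats values above roughly 5 or 10 as a sign of problematic multicollinearity. The synthetic data is only an example.

```python
import numpy as np


def variance_inflation_factors(X) -> np.ndarray:
    """Return VIF_j = 1 / (1 - R_j^2) for each column j of X (n_samples x n_features)."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        y = X[:, j]
        # Regress column j on the remaining columns plus an intercept.
        others = np.delete(X, j, axis=1)
        A = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        resid = y - A @ coef
        ss_res = resid @ resid
        ss_tot = ((y - y.mean()) ** 2).sum()
        r2 = 1.0 - ss_res / ss_tot
        # A perfectly explained column has an infinite VIF.
        vifs[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return vifs


rng = np.random.default_rng(0)
x1 = rng.normal(size=500)
x2 = x1 + 0.1 * rng.normal(size=500)  # nearly a copy of x1
x3 = rng.normal(size=500)             # independent of x1 and x2
vifs = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print(vifs)
```

Here `x1` and `x2` should get large VIFs while `x3` stays close to 1. statsmodels also ships `variance_inflation_factor` in `statsmodels.stats.outliers_influence`. It regresses on the exog columns exactly as given, so include a constant column yourself.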

MIT researchers have developed a machine learning system that cuts solve time for complex logistical problems by 50%. The approach reduces redundant computations on the way to optimal solutions.

*The Legend of Ochi* showcases 80s-style practical effects in a visually stunning family adventure, blending old-school techniques with modern technology to create an immersive experience.

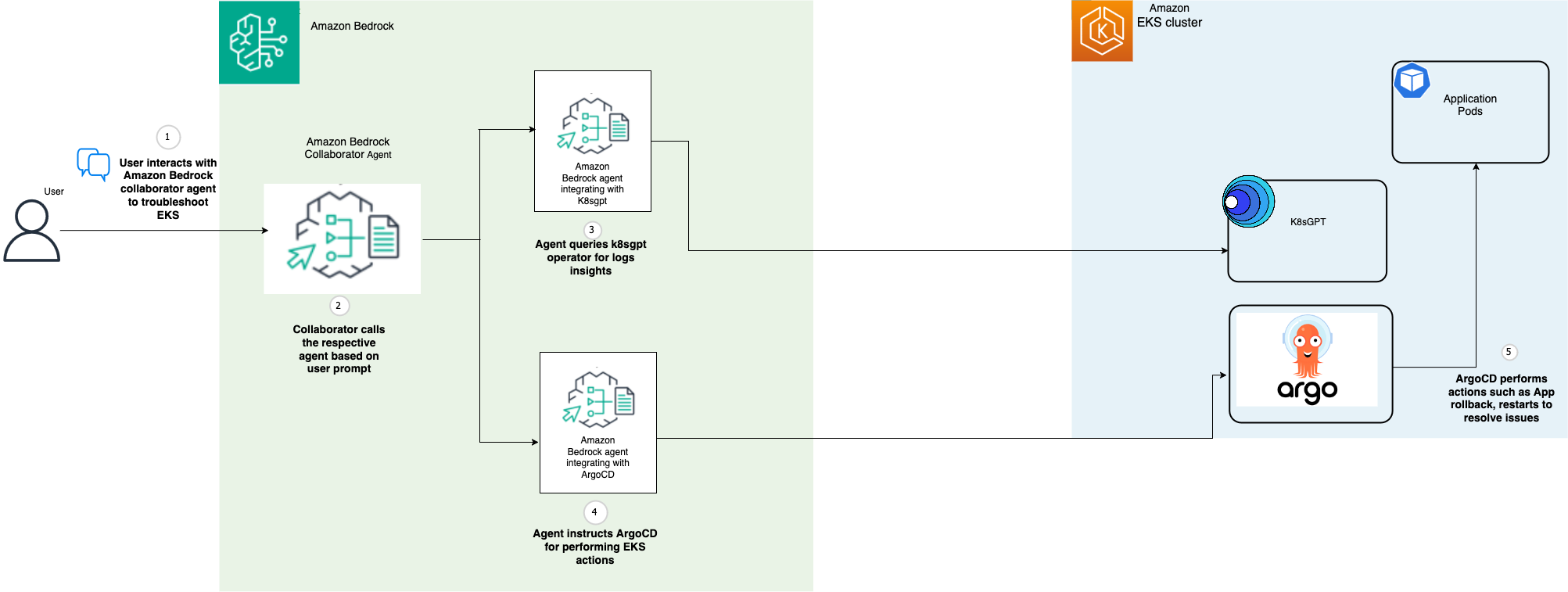

Amazon introduced multi-agent collaboration for Amazon Bedrock at AWS re:Invent 2024. A solution built on it streamlines Amazon EKS cluster management through intelligent cluster monitoring and automated remediation, significantly reducing mean time to identify (MTTI) and mean time to resolve (MTTR) issues.

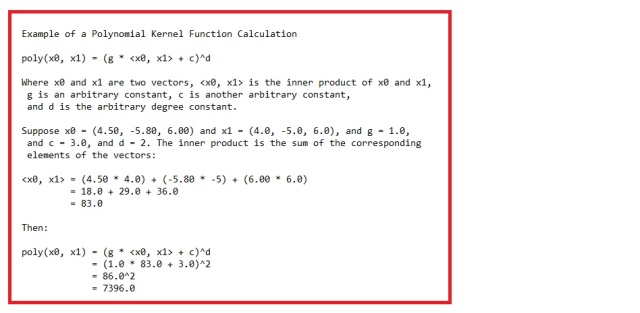

Kernel functions, such as the polynomial and radial basis function (RBF) kernels, are key in machine learning for measuring the similarity between vectors. The polynomial kernel is more complex to work with because it has more parameters to tune, while the RBF kernel is simpler and more commonly used.
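Both kernels are short formulas. The polynomial kernel is $K(x, y) = (\gamma\, x \cdot y + c)^d$ and the RBF kernel is $K(x, y) = \exp(-\gamma \lVert x - y \rVert^2)$. The NumPy sketch below implements both. The parameter names `gamma`, `coef0`, and `degree` are chosen to mirror scikit-learn's pairwise kernels, and the default values here are just illustrative assumptions.

```python
import numpy as np


def polynomial_kernel(x, y, gamma=1.0, coef0=1.0, degree=3):
    """K(x, y) = (gamma * <x, y> + coef0) ** degree."""
    return (gamma * np.dot(x, y) + coef0) ** degree


def rbf_kernel(x, y, gamma=1.0):
    """K(x, y) = exp(-gamma * ||x - y||^2); equals 1 when x == y and decays with distance."""
    diff = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return np.exp(-gamma * np.dot(diff, diff))


x = np.array([1.0, 2.0])
y = np.array([3.0, 4.0])
print(polynomial_kernel(x, y, degree=2))  # (1 * 11 + 1) ** 2 = 144.0
print(rbf_kernel(x, y, gamma=0.1))        # exp(-0.1 * 8), about 0.4493
```

The RBF kernel has a single knob, `gamma`. The polynomial kernel needs `gamma`, `coef0`, and `degree` chosen together, and large degrees can produce very large or very small values.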

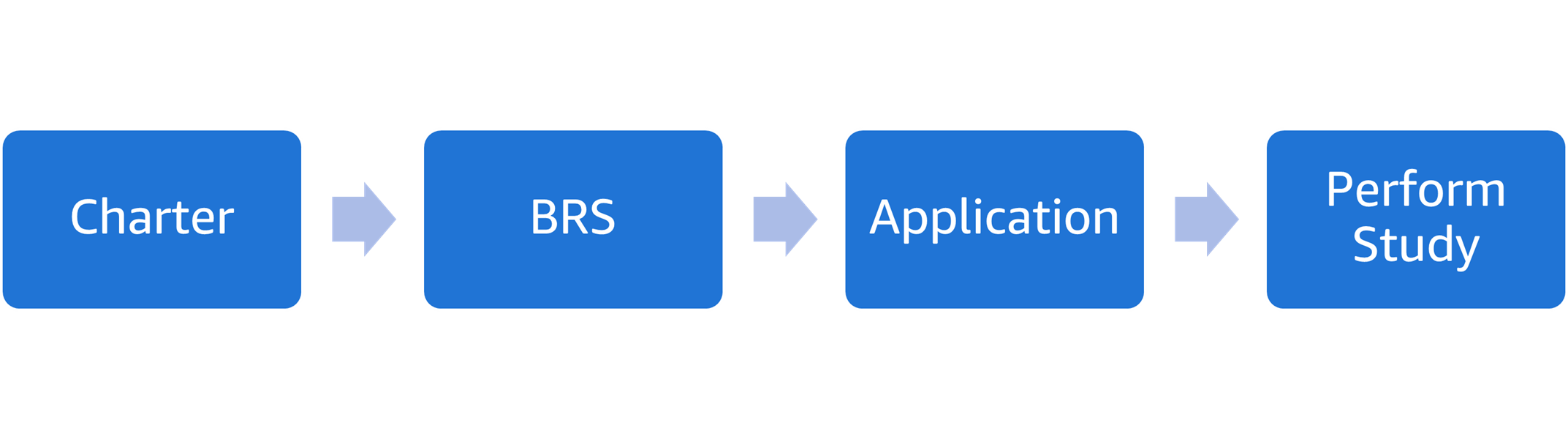

Clario, a leader in endpoint data solutions for clinical trials, modernized document generation with AWS AI services to streamline workflows. The solution automates the generation of Business Requirement Specification (BRS) documents, reducing time-consuming manual work and minimizing errors in clinical trial documentation.

Snowflake's Document AI combines optical character recognition (OCR) with large language models (LLMs) to extract information from documents efficiently. It bridges the paper and digital worlds, making document data far easier to process.

David Salle applies AI to his paintings, with wild results. Can AI truly offer new insights into his work?

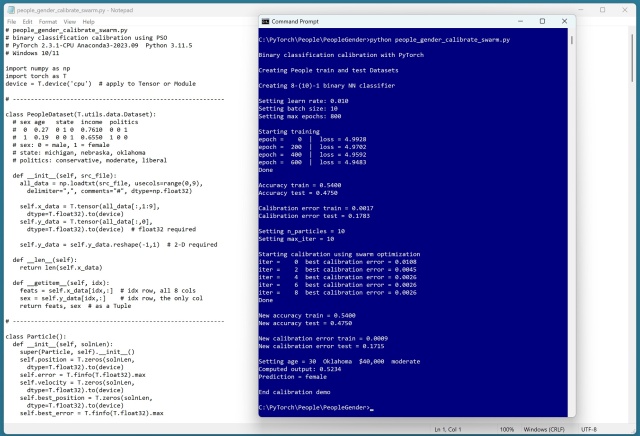

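Calibration error, the gap between a prediction model's predicted probabilities and the outcome frequencies actually observed, is a crucial measure of model quality. A demo using PyTorch and particle swarm optimization (PSO) shows how to reduce it effectively.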
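The following is a minimal sketch of the two ideas, not the demo's code. It uses NumPy instead of PyTorch and assumes a binary classifier. Calibration error is measured as the bin-weighted gap between mean predicted probability and observed positive rate in equal-width bins. A small PSO loop tunes a single, hypothetical temperature parameter `T` that rescales the logits. PSO suits this objective because the binned error is not smooth in `T`, since bin assignments jump. The PSO weights and the synthetic, deliberately overconfident data are assumptions.

```python
import numpy as np


def calibration_error(probs, labels, n_bins=10):
    """Binned calibration error: sum over bins of (bin share) * |mean prob - positive rate|."""
    probs = np.asarray(probs, dtype=float)
    labels = np.asarray(labels, dtype=float)
    # Equal-width bins 0..n_bins-1; a probability of exactly 1.0 goes in the last bin.
    bins = np.minimum((probs * n_bins).astype(int), n_bins - 1)
    total = 0.0
    for b in range(n_bins):
        mask = bins == b
        if mask.any():
            total += mask.mean() * abs(probs[mask].mean() - labels[mask].mean())
    return total


def pso_minimize_1d(f, lo, hi, n_particles=20, n_iters=50, seed=0, init=None):
    """Minimal particle swarm optimization of a scalar function on [lo, hi]."""
    rng = np.random.default_rng(seed)
    pos = rng.uniform(lo, hi, n_particles)
    if init is not None:
        pos[0] = init  # seed one particle with a known starting point
    vel = np.zeros(n_particles)
    pbest = pos.copy()
    pbest_val = np.array([f(x) for x in pos])
    g, g_val = pbest[np.argmin(pbest_val)], pbest_val.min()
    w, c1, c2 = 0.7, 1.5, 1.5  # inertia, personal and social weights (assumed)
    for _ in range(n_iters):
        r1, r2 = rng.random(n_particles), rng.random(n_particles)
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (g - pos)
        pos = np.clip(pos + vel, lo, hi)
        vals = np.array([f(x) for x in pos])
        improved = vals < pbest_val
        pbest[improved], pbest_val[improved] = pos[improved], vals[improved]
        if pbest_val.min() < g_val:
            g, g_val = pbest[np.argmin(pbest_val)], pbest_val.min()
    return g, g_val


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Synthetic, overconfident model: its logits are the true log-odds scaled up by 3.
rng = np.random.default_rng(1)
true_p = rng.uniform(0.05, 0.95, 2000)
labels = (rng.random(2000) < true_p).astype(float)
logits = 3.0 * np.log(true_p / (1 - true_p))


def error_at_temperature(t):
    return calibration_error(sigmoid(logits / t), labels)


best_t, best_err = pso_minimize_1d(error_at_temperature, 0.5, 5.0, init=1.0)
print(f"T=1.00 error: {error_at_temperature(1.0):.4f}; T={best_t:.2f} error: {best_err:.4f}")
```

Seeding one particle at `T = 1` guarantees the result is never worse than the uncalibrated model. On this synthetic data the search should settle near `T = 3`, the factor used to distort the logits.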

The Republican-controlled Texas state house may delay Trump's AI infrastructure plans with legislation that imposes hurdles on data centers. The Stargate joint venture plans to build 20 data centers to supply AI computing power, compete with China, and attract investors.

AWS offers optimized options for deploying large language models such as Mixtral 8x7B, using its AWS Inferentia and AWS Trainium chips for high-performance inference. Learn how to deploy the Mixtral model on AWS Inferentia2 instances for cost-effective text generation.

Nvidia plans to invest $500bn in US AI infrastructure amid Trump's threats of import tariffs. Its CEO, Jensen Huang, dined at Mar-a-Lago.

Over 100m people use personified chatbots for purposes ranging from virtual 'wives' to mental health support. AI chatbots are transforming human connection by simulating human-like interactions through adaptive learning and personalized responses.