A biotech company advances ML and AI algorithms for secure brain lesion segmentation in hospitals using federated learning. Protection measures, including Confidential Containers (CoCo) technology, safeguard algorithm code and data in a heterogeneous federated environment.

MIT and NVIDIA researchers developed HART, a hybrid image-generation tool that combines autoregressive and diffusion models to create high-quality images about nine times faster than state-of-the-art diffusion models. HART's approach could revolutionize training self-driving cars and designing video game scenes.

Arve Hjalmar Holmen files a complaint after ChatGPT falsely claims he murdered his children. The chatbot's defamatory response shocks the Norwegian man, who has no criminal record.

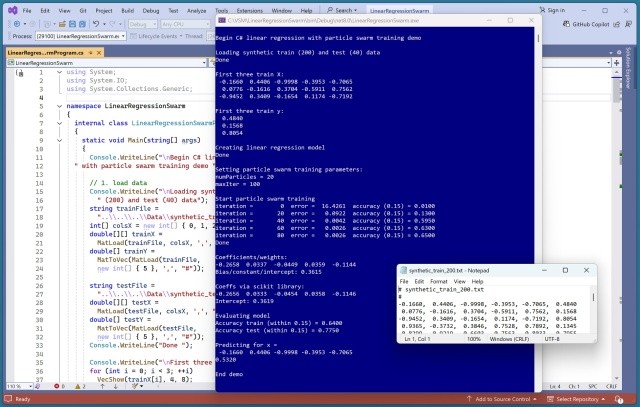

Particle swarm optimization (PSO) mimics the motion of swarms to solve optimization problems. PSO updates each particle's position toward better solutions using a velocity that weights its previous motion against the best positions found so far.
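As a rough illustration of the velocity-weighted update described above, here is a minimal sketch of a textbook global-best PSO in plain Python. It is not taken from the linked article: the function name `pso`, the `sphere` test objective, and the parameter values (`w=0.7`, `c1=1.5`, `c2=1.5`) are assumptions chosen for illustration only.

```python
import random


def pso(objective, dim, bounds, n_particles=30, n_iters=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` with a basic global-best PSO (illustrative sketch).

    w  : inertia weight applied to the previous velocity (assumed value)
    c1 : pull toward the particle's own best position (assumed value)
    c2 : pull toward the swarm's best position (assumed value)
    """
    rng = random.Random(seed)
    lo, hi = bounds

    # Random starting positions, zero starting velocities.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]

    # Personal bests and the global best seen so far.
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Weighted velocity: inertia + cognitive pull + social pull.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move the particle and clamp it to the search bounds.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))

            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val

    return gbest, gbest_val


def sphere(x):
    """Simple convex test objective with its minimum at the origin."""
    return sum(v * v for v in x)


if __name__ == "__main__":
    best, value = pso(sphere, dim=2, bounds=(-5.0, 5.0))
    print("best position:", best)
    print("best value:", value)
```

The inertia weight `w` controls how much of the previous velocity carries over, while `c1` and `c2` scale the random pulls toward the personal and global bests. Other PSO variants (for example, ones with velocity clamping or a decaying inertia weight) would change this update rule.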

Large Language Models (LLMs) solve most classification problems at 70-90% precision/F1. R.E.D. addresses the challenges of text classification beyond a few dozen classes.

Google's Data Science Agent in Colab simplifies data analysis with Gemini, automating tasks and providing customizable plans. It offers end-to-end execution and autocorrection, potentially reshaping data science workflows.

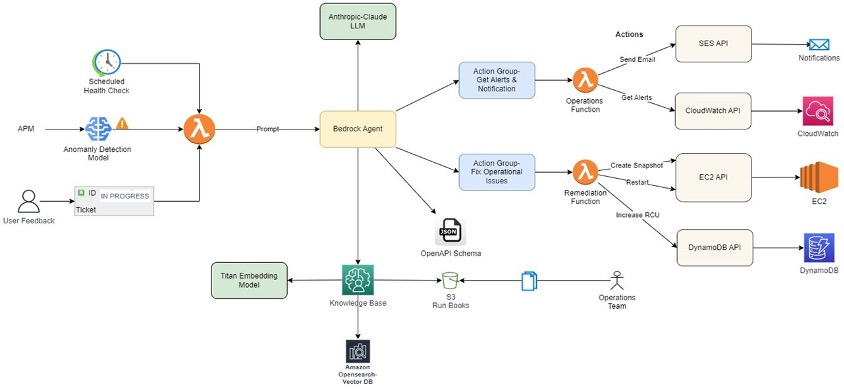

Generative AI streamlines IT incident management, automating detection, diagnosis, and resolution for improved efficiency. AWS combines services like Amazon Bedrock and CloudWatch to create AI assistants for effective incident management.

Director Greg Kohs delves into the world of Artificial General Intelligence, focusing on Google DeepMind's innovative approach. The documentary offers a character-driven study of DeepMind's CEO Demis Hassabis, revealing his journey from child chess prodigy to the forefront of AGI research.

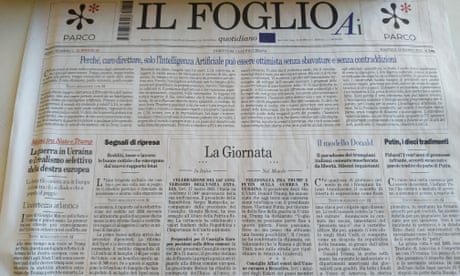

Italian newspaper Il Foglio claims to be the first in the world to publish an edition entirely written by artificial intelligence, including headlines and quotes. The experiment aims to showcase AI's impact on journalism, with editor Claudio Cerasa leading the initiative.

GeForce NOW introduces Assassin’s Creed Shadows and Fable Anniversary to its cloud gaming platform. Explore feudal Japan and shape destinies with enhanced graphics and smooth gameplay at up to 4K resolution and 120 frames per second.

UK performing arts leaders, including the heads of the National Theatre and the Royal Albert Hall, express concern over AI companies using artists' work without permission, highlighting the importance of copyright for freelancers' livelihoods. They urge the government to protect the moral and economic rights of the creative community in music, dance, drama, and opera.

Quantum supercomputers will integrate with AI to solve complex problems. The NVIDIA Accelerated Quantum Research Center (NVAQC) will house a supercomputer for quantum research, partnering with key innovators and academic institutions.

Organizations are moving towards production-ready generative AI systems for real business value, like Hippocratic AI's AI-powered clinical assistants. Adobe's integration of generative AI in flagship products showcases the power of NVIDIA GPU-accelerated infrastructure for content creation.

Aardvark Weather AI predicts weather faster and with less power than current systems, improving forecasting efficiency. A single researcher with a desktop computer can deliver accurate forecasts in a fraction of the time.

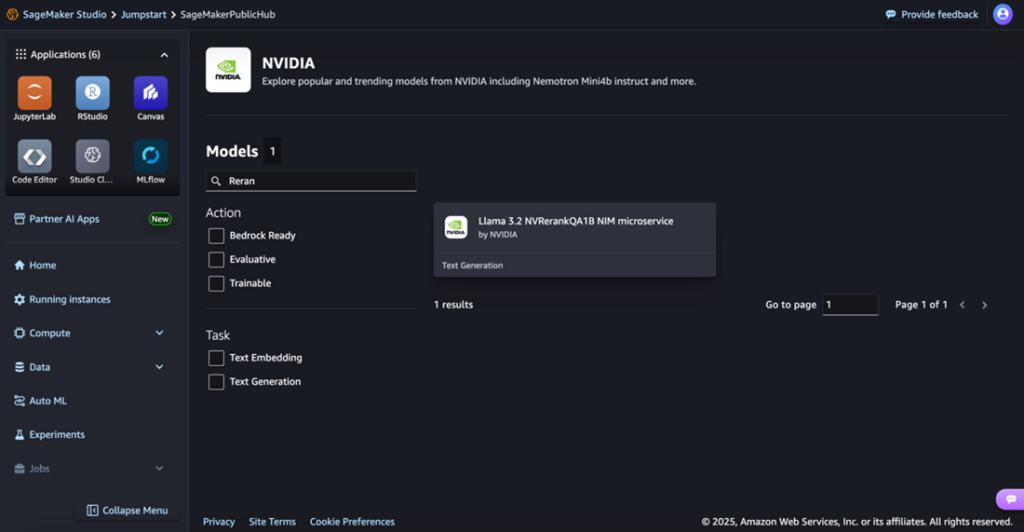

NVIDIA NIM microservices are now available in Amazon SageMaker JumpStart for deploying optimized reranking and embedding models for generative AI on AWS. NeMo Retriever Llama3.2 offers multilingual text question-answering retrieval with dynamic embedding sizing, reducing the data storage footprint 35-fold.