Renewable energy investment hits record high, but future uncertain. MIT Energy Conference discusses need for level playing field and government support for clean energy breakthroughs.

Legal contracts are crucial for businesses, but understanding them and extracting insights can be complex. Implementing GraphRAG in Neo4j can streamline the process by structuring contracts into a knowledge graph, enabling more precise and context-aware retrieval.

Authors protest Meta's use of LibGen for AI training at King's Cross office. Kate Mosse, Tracy Chevalier among demonstrators.

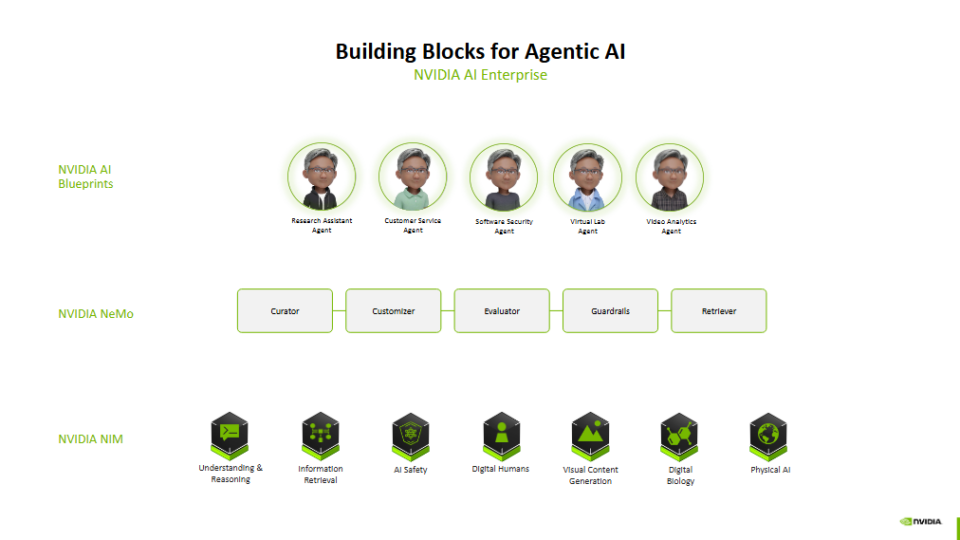

AI agents in retail provide personalized experiences, enrich product knowledge, and offer omnichannel support, redefining customer shopping experiences with seamless integration and virtual try-on capabilities. Retailers leveraging AI prioritize hyper-personalized recommendations to boost online sales and customer satisfaction, according to NVIDIA's latest report.

The Flash Attention algorithm revolutionizes transformers by optimizing memory access, making computations faster and more efficient. Flash Attention v3 introduces improvements for NVIDIA Hopper and Blackwell GPUs, enhancing performance even further.
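
To make the memory-access idea concrete, here is a minimal pure-Python sketch of attention for a single query, computed one block of keys and values at a time with a running ("online") softmax. It illustrates the tiling principle only and is not the GPU kernel; the function names and the `block_size` parameter are assumptions made for this example.

```python
import math


def naive_attention_row(q, keys, values):
    """Reference: softmax(q . K^T / sqrt(d)) . V with every score held at once."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim_v = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(dim_v)]


def tiled_attention_row(q, keys, values, block_size=2):
    """Same result, visiting keys/values block by block.

    Only a running max, a running normalizer, and an unnormalized output
    accumulator are kept, so the full score vector is never stored.
    """
    scale = 1.0 / math.sqrt(len(q))
    dim_v = len(values[0])
    running_max = float("-inf")
    running_sum = 0.0
    acc = [0.0] * dim_v
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in k_blk]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated under the previous max.
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_blk):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]
```

Both functions should agree up to floating-point error for any block size; the tiled version simply never needs more than one block of scores in memory at a time.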

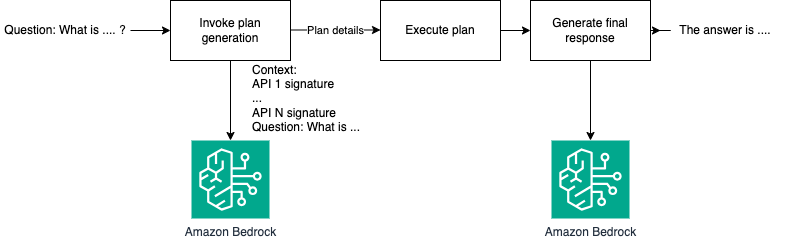

Large language models (LLMs) simplify complex tasks like dynamic reasoning and execution, revolutionizing data analysis. By calling external tools, LLMs can go beyond text-based responses and provide accurate, context-aware answers to queries.

MIT researchers developed a framework guiding ChatGPT to efficiently solve complex planning problems with an 85% success rate, outperforming baselines. This versatile approach could optimize tasks like scheduling airline crews or managing machine time in factories, revolutionizing planning assistance.

The diffusion model, pioneered by Sohl-Dickstein et al. and further developed by Ho et al., has been adapted by OpenAI and Google to create DALL-E 2 and Imagen, capable of generating high-quality images. The model works by transforming noise into images through forward and backward diffusion processes, maintaining the original image's dimensionality in the latent space.
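
As a rough illustration of the forward (noising) half of that process, the sketch below applies the closed-form step from Ho et al., x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, to a flat list of pixel values. The linear noise schedule, its endpoints, and all function names are assumptions chosen for the example, and the learned backward (denoising) network is left out entirely.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances that grow linearly from beta_start to beta_end (assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def forward_diffuse(x0, t, alpha_bars, rng):
    """Sample x_t from x_0 in one shot; the output has the same length as the input."""
    a = alpha_bars[t]
    signal, noise = math.sqrt(a), math.sqrt(1.0 - a)
    return [signal * x + noise * rng.gauss(0.0, 1.0) for x in x0]


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    image = [0.1, 0.5, 0.9, 0.3]  # toy "image" of four pixel values
    noisy = forward_diffuse(image, 999, alpha_bars, rng)
    print(len(noisy) == len(image))
```

At the last step almost none of the original signal remains, which is why generation can start from pure noise, and the noisy sample always keeps the dimensionality of the input.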

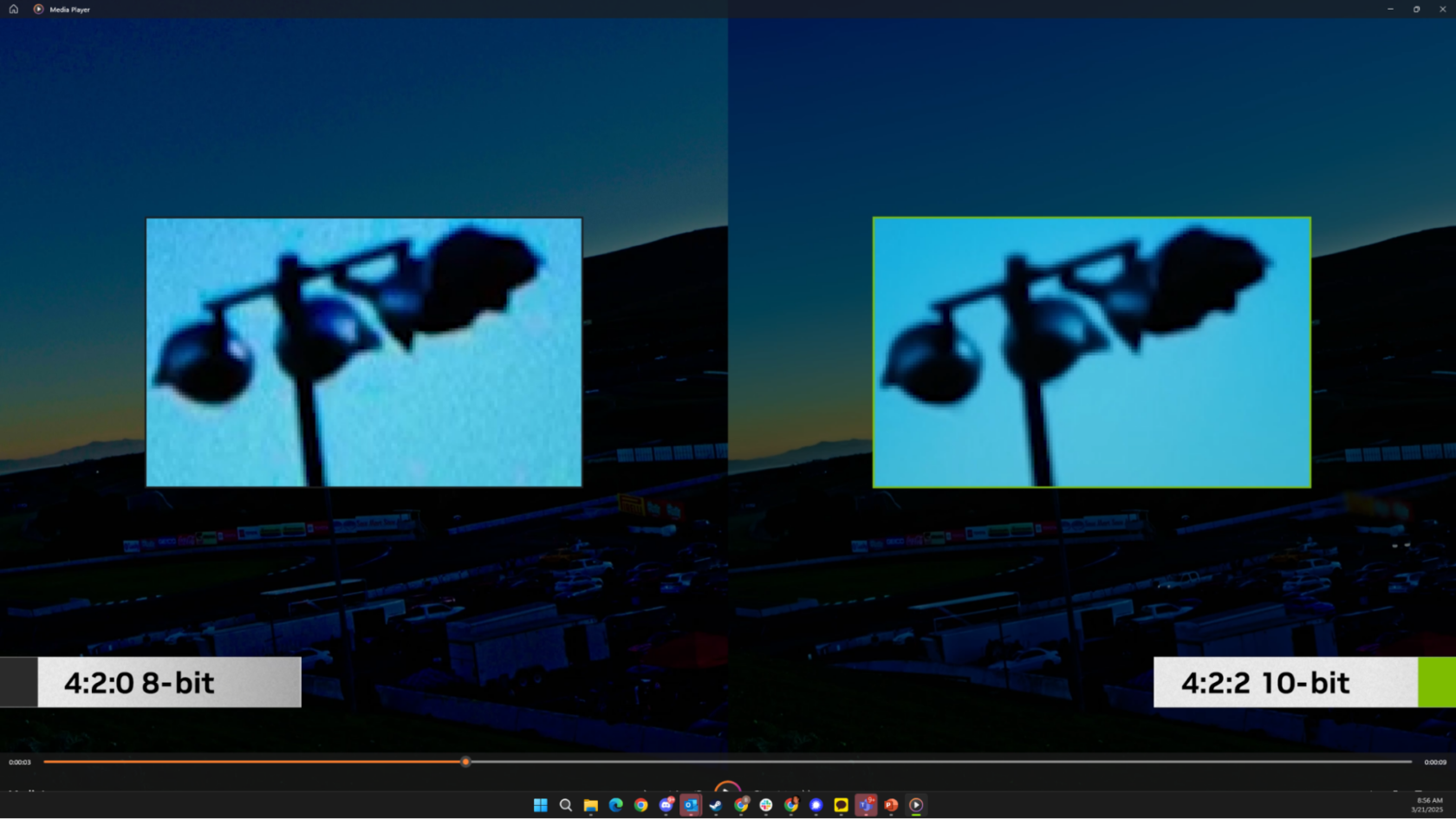

Adobe Premiere Pro (beta) and Adobe Media Encoder now support 4:2:2 color format, enhancing color accuracy and keying. NVIDIA GeForce RTX 50 Series laptops with Blackwell architecture accelerate advanced AI-powered features for video editing workflows.

UK gov't pledges economic impact assessment to address concerns from MPs, peers, and creatives like Paul McCartney. McCartney, Tom Stoppard, and Kate Bush have criticized the proposal to let AI companies use copyright-protected work without permission.

Graph Convolutional Networks (GCNs) and Graph Attention Networks (GATs) have limitations with large graphs and changing structures. GraphSAGE offers a solution by sampling neighbors and using aggregation functions for faster and scalable training.
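
The sample-and-aggregate step can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions: it uses a mean aggregator, concatenates each node's own features with the aggregated neighbor features, and omits the learned weight matrices and nonlinearity of a real GraphSAGE layer. The graph layout (a dict of neighbor lists) and all function names are hypothetical.

```python
import random


def sample_neighbors(graph, node, num_samples, rng):
    """Pick at most num_samples neighbors so cost per node stays bounded."""
    neighbors = graph[node]
    if len(neighbors) <= num_samples:
        return list(neighbors)
    return rng.sample(neighbors, num_samples)


def mean_aggregate(features, nodes, dim):
    """Element-wise mean of the sampled neighbors' feature vectors."""
    if not nodes:
        return [0.0] * dim
    return [sum(features[n][j] for n in nodes) / len(nodes) for j in range(dim)]


def sage_layer(graph, features, num_samples, seed=0):
    """One untrained layer: concat(self features, mean of sampled neighbors)."""
    rng = random.Random(seed)
    dim = len(next(iter(features.values())))
    out = {}
    for node in graph:
        sampled = sample_neighbors(graph, node, num_samples, rng)
        out[node] = features[node] + mean_aggregate(features, sampled, dim)
    return out


if __name__ == "__main__":
    graph = {"a": ["b", "c", "d"], "b": ["a"], "c": ["a"], "d": ["a"]}
    features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [2.0, 2.0], "d": [4.0, 0.0]}
    for node, vec in sage_layer(graph, features, num_samples=2).items():
        print(node, vec)
```

Because each node looks at a fixed-size sample rather than its full neighborhood, the work per node does not grow with node degree, and a node added later can be embedded the same way without recomputing the rest of the graph.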

AI models are replacing traditional algorithms in algorithmic pipelines despite their higher resource requirements. Centralized inference servers may improve efficiency in processing large-scale inputs through deep learning models, as shown in a toy experiment using a ResNet-152 image classifier on 1,000 images.

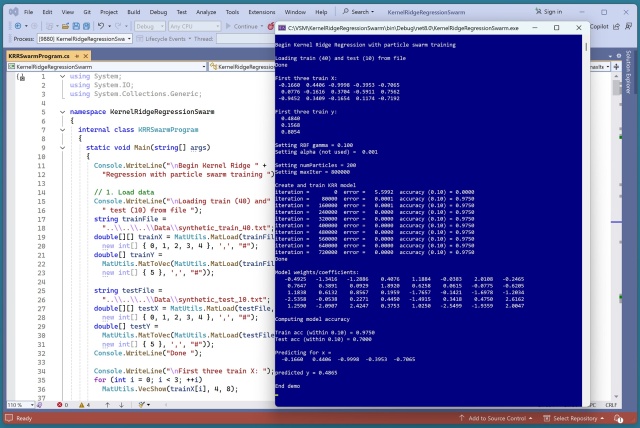

EPSO, an algorithm combining PSO with EO, performs similarly to PSO and EO on their own, not significantly better. It is slow for practical use but shows promise in training a KRR prediction system.

GitHub Actions, a CI/CD tool, is not just for software - it automates data workflows, from setting up environments to deploying ML models. Free and easy to use, it offers pre-built actions and community support for automating tasks within repositories.

AI can enhance job search success, but requires a delicate balance with human interaction. Don't miss out on opportunities by underestimating AI's potential impact.