Safe Superintelligence (SSI), a new AI startup cofounded by Ilya Sutskever, raised $1 billion to develop "safe" AI systems surpassing human capabilities. Backed by top venture capital firms, SSI plans to expand its team and research locations in Palo Alto and Tel Aviv.

Marks & Spencer uses AI to personalize online shopping by advising on outfit choices based on body shape and style preferences, aiming to boost online sales. The 130-year-old retailer leverages technology to enhance the shopping experience and recommend items for consumers.

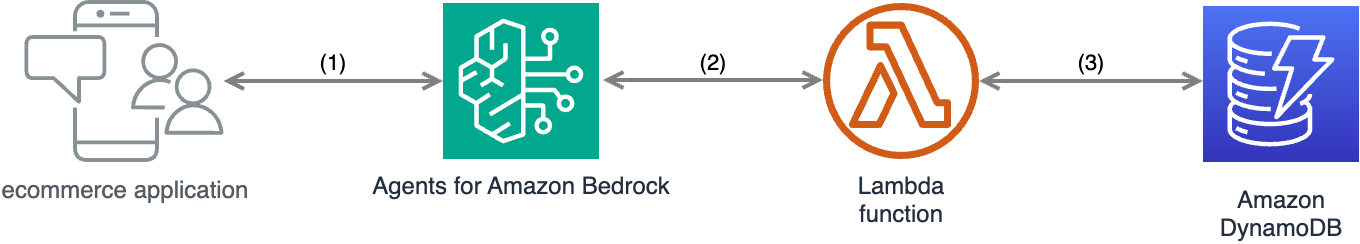

Amazon Bedrock offers high-performing AI models for building ecommerce chatbots. Amazon Bedrock Agents simplify the process of creating engaging and personalized conversational experiences for users.

New RTX AI PCs with GPUs and NPUs were announced at IFA Berlin, accelerating more than 600 AI-enabled games and applications. NVIDIA's RTX GPUs power AI workloads in gaming, content creation, software development, and STEM subjects.

The Australian government is considering an EU-style AI act to set minimum standards for high-risk AI. The industry minister has proposed 10 mandatory guardrails, including human oversight and the ability to challenge AI decisions.

Volvo Cars' new electric EX90, powered by NVIDIA DRIVE Orin, offers advanced safety features and autonomous driving capabilities. The move to NVIDIA DRIVE Thor will quadruple processing power, improve energy efficiency, and enhance in-vehicle experiences.

Thomson Reuters Labs developed an efficient MLOps process using Amazon SageMaker, accelerating AI innovation. TR Labs aims to standardize MLOps for smarter, more cost-efficient machine learning tools.

An analysis of performance patterns in the heptathlon and decathlon offers insights into event importance and scoring systems. The data shows significant differences in points received, highlighting the impact of varying event performances at elite levels.

ABC announced "AI and the Future of Us: An Oprah Winfrey Special," featuring tech industry figures such as OpenAI CEO Sam Altman. Critics question the guest list and the framing of the show, calling it an extended sales pitch for the generative AI industry.

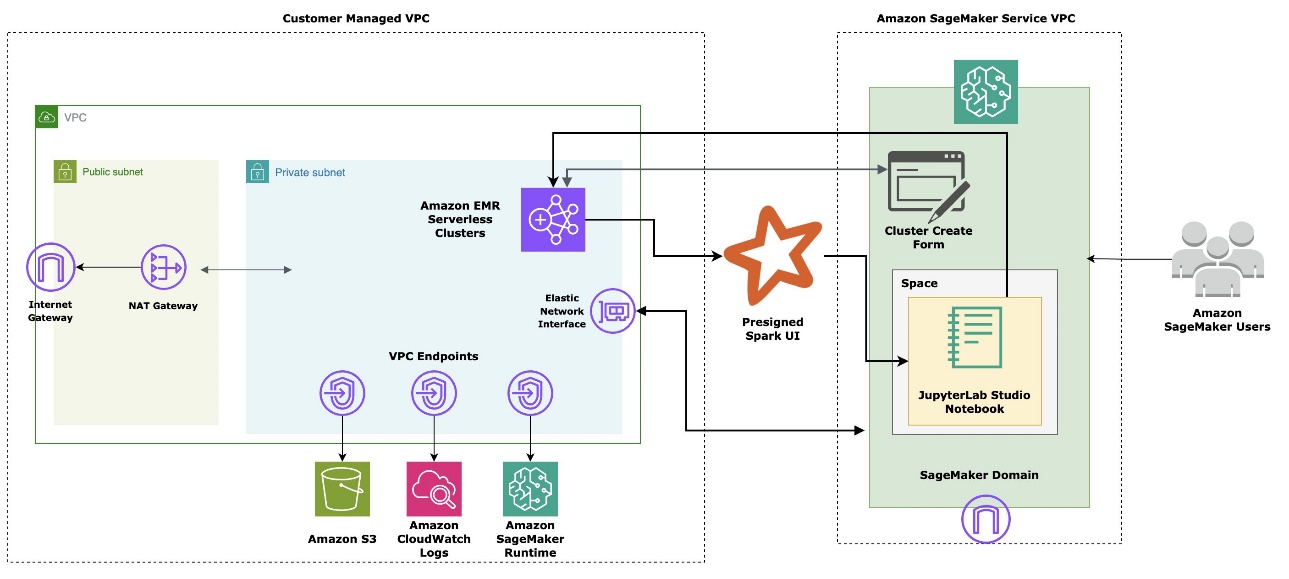

Amazon introduces EMR Serverless integration in SageMaker Studio, simplifying big data processing and ML workflows. Benefits include simplified infrastructure management, seamless integration, cost optimization, scalability, and performance improvements.

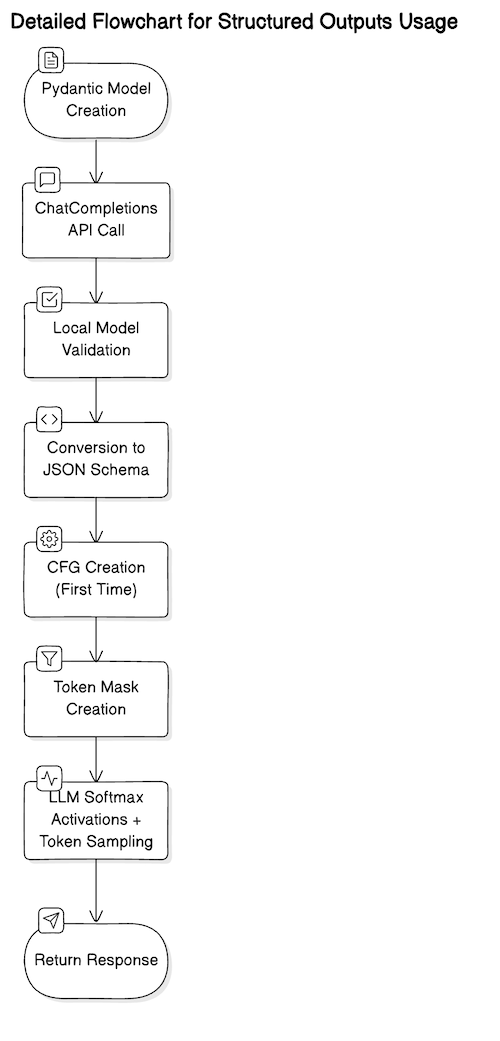

Structured outputs from LLMs are being used in a growing range of scenarios following OpenAI's Chat Completions API release. OpenAI recommends Pydantic for defining the JSON schema, which improves code readability and maintainability.
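As a rough illustration of the Pydantic approach, the sketch below defines a schema as a Python class, exports it as JSON Schema, and validates a JSON reply against it. It assumes Pydantic v2 is installed; the `ProductReview` model and the `raw_reply` string are hypothetical stand-ins, and no OpenAI API call is made.

```python
import json

from pydantic import BaseModel, ValidationError


class ProductReview(BaseModel):
    """Hypothetical schema for a reply we would like an LLM to return."""
    product: str
    rating: int
    pros: list[str]


# JSON Schema derived from the class (Pydantic v2 API).
schema = ProductReview.model_json_schema()

# Stand-in for a model response; a real one would come from an API call.
raw_reply = '{"product": "headphones", "rating": 4, "pros": ["light", "long battery life"]}'

# Parse and validate in one step; malformed replies raise ValidationError.
review = ProductReview.model_validate_json(raw_reply)

try:
    ProductReview.model_validate_json('{"product": "headphones"}')
    missing_fields_rejected = False
except ValidationError:
    missing_fields_rejected = True

print(json.dumps(schema["properties"], indent=2))
print(review.rating, review.pros)
```

Keeping the schema in a single class means the type hints, the exported JSON Schema, and the validation logic cannot drift apart.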

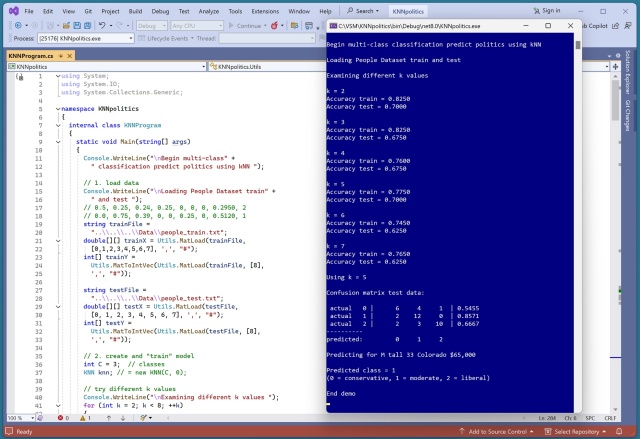

A walkthrough of implementing multi-class k-nearest neighbors classification from scratch using a synthetic dataset. Raw data is encoded and normalized before prediction, with k=5 yielding the best results.
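A minimal sketch of the technique, not the article's own code: min-max normalization followed by a majority vote among the k nearest neighbors under Euclidean distance. The tiny dataset, the class labels, and the helper names (`fit_min_max`, `scale`, `knn_predict`) are all made up for illustration.

```python
import math
from collections import Counter


def fit_min_max(rows):
    """Return per-column minimums and maximums of the training rows."""
    cols = list(zip(*rows))
    return [min(c) for c in cols], [max(c) for c in cols]


def scale(row, lows, highs):
    """Scale one row to [0, 1] per column; constant columns map to 0.0."""
    return [(v - lo) / (hi - lo) if hi > lo else 0.0
            for v, lo, hi in zip(row, lows, highs)]


def knn_predict(train_x, train_y, query, k=5):
    """Return the majority label among the k nearest training points."""
    nearest = sorted(
        (math.dist(x, query), label) for x, label in zip(train_x, train_y)
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


# Hypothetical synthetic data: three well-separated classes, two features.
raw_x = [
    [1.0, 200.0], [1.5, 220.0], [1.2, 210.0], [0.8, 190.0], [1.1, 205.0],
    [5.0, 500.0], [5.5, 520.0], [5.2, 510.0], [4.8, 490.0], [5.1, 505.0],
    [9.0, 800.0], [9.5, 820.0], [9.2, 810.0], [8.8, 790.0], [9.1, 805.0],
]
labels = ["low"] * 5 + ["mid"] * 5 + ["high"] * 5

# Fit the scaler on training data only, then reuse it for every query.
lows, highs = fit_min_max(raw_x)
train_x = [scale(row, lows, highs) for row in raw_x]

prediction = knn_predict(train_x, labels, scale([5.3, 515.0], lows, highs), k=5)
print(prediction)
```

Normalizing matters here because the second feature is roughly a hundred times larger than the first and would otherwise dominate the distance.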

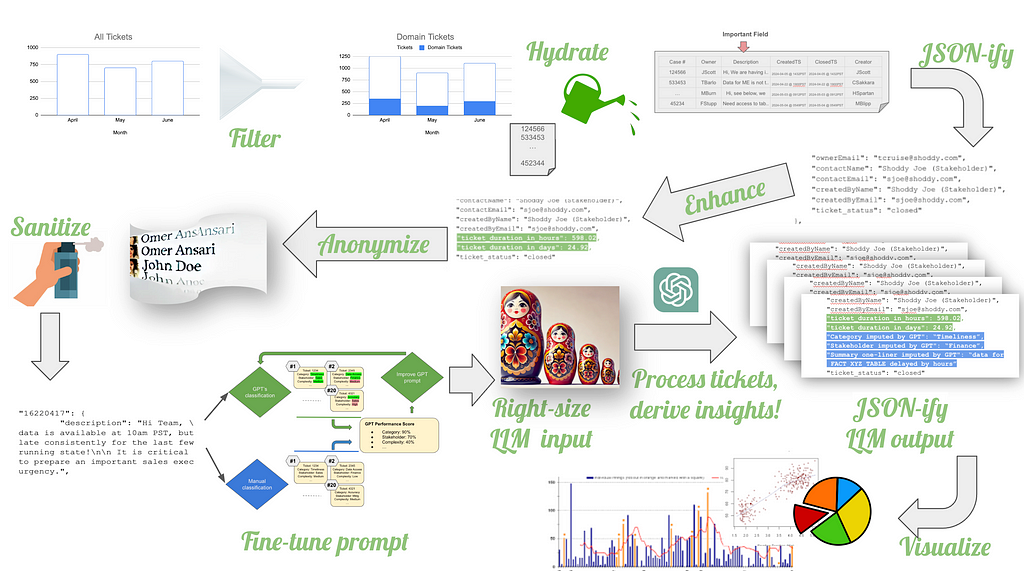

Large language models can help make sense of messy data without cleaning it at the source. The piece covers best practices for using generative AI such as GPT to streamline data analysis and visualization, even with unreliable metadata.

Six encoding methods for categorical data in machine learning bridge the gap between descriptive labels and numerical algorithms. Proper encoding is crucial for preserving data integrity and optimizing model performance.
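To make the idea concrete, here is a small sketch of two common encodings, one-hot and ordinal. These two were chosen for illustration and may not match the article's six; the function names and sample values are hypothetical.

```python
def one_hot_encode(values):
    """Map each category to a 0/1 vector with a single 1 (no implied order)."""
    categories = sorted(set(values))
    index = {c: i for i, c in enumerate(categories)}
    encoded = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        encoded.append(row)
    return categories, encoded


def ordinal_encode(values, order):
    """Map each category to its rank in an explicitly supplied order."""
    rank = {c: i for i, c in enumerate(order)}
    return [rank[v] for v in values]


colors = ["red", "green", "blue", "green"]
categories, onehot = one_hot_encode(colors)

sizes = ["small", "large", "medium"]
size_codes = ordinal_encode(sizes, order=["small", "medium", "large"])

print(categories, onehot)
print(size_codes)
```

One-hot encoding suits labels with no natural order, such as colors, while ordinal encoding suits labels whose ranking carries meaning, such as sizes.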

Researchers from MIT and other institutions developed a tool called the Data Provenance Explorer to improve data transparency for AI models, addressing legal and ethical concerns. The tool helps practitioners select training datasets that fit their model's intended purpose, potentially enhancing AI accuracy in real-world applications.