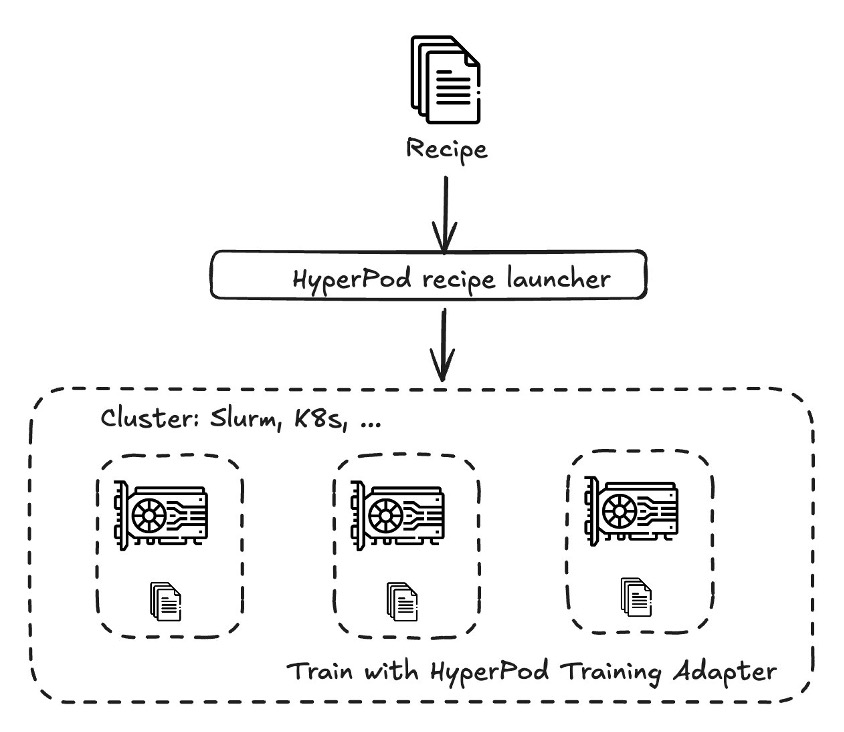

Organizations customizing DeepSeek AI models for specific use cases face challenges in managing computational resources. Amazon SageMaker HyperPod recipes streamline DeepSeek model customization, achieving up to a 49% improvement in ROUGE-2 score.

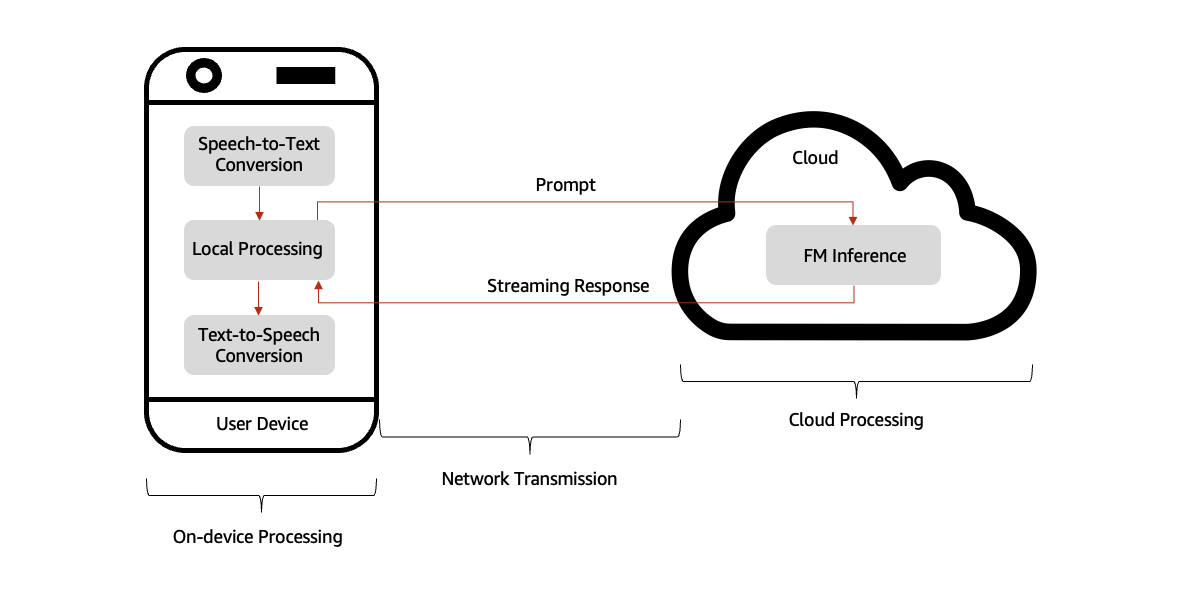

Generative AI advancements drive conversational AI assistants with real-time interactions across various sectors. Balancing the power of cloud-based foundation models (FMs) with local device responsiveness is key to reducing response latency below 200 ms.

Paris restricts a lane of the périphérique to car-sharing during rush hour to reduce congestion and pollution. The lane is open to vehicles with two or more people, public transport, taxis, emergency services, and people with disabilities.

New book by Karp and Zamiska explores AI, national security, and Silicon Valley's influence. Karp is a co-founder of Palantir, and his background and connections draw interest across the tech industry.

The Trump administration is laying off 6,700 IRS workers during tax season, causing chaos. AI's disruption will have far-reaching effects beyond just the IRS layoffs.

TUC urges stronger copyright and AI protections to prevent exploitation by tech bosses in creative industries. Unions emphasize the need for proper guardrails for workers in the face of technological advancements.

Prof Andrew Moran and Dr Ben Wilkinson discuss the rise of AI in university essays, challenging traditional knowledge sources. The "Tinderfication" of knowledge is reshaping public discourse and undermining critical thinking.

William Boyd predicts a wave of James Bond spin-offs as Amazon acquires the franchise rights. He anticipates AI-generated novels, theme parks, and more.

Sky Sports pundit praised for accurate assessment of India's Champions Trophy gerrymandering. Speaking truth in sports amidst industrial-scale deception is a revolutionary act.

Vanderbilt professor introduces son to ChatGPT AI tools for everyday tasks and learning opportunities. 11-year-old now adept at using AI for games, fact-checking, and practical applications.

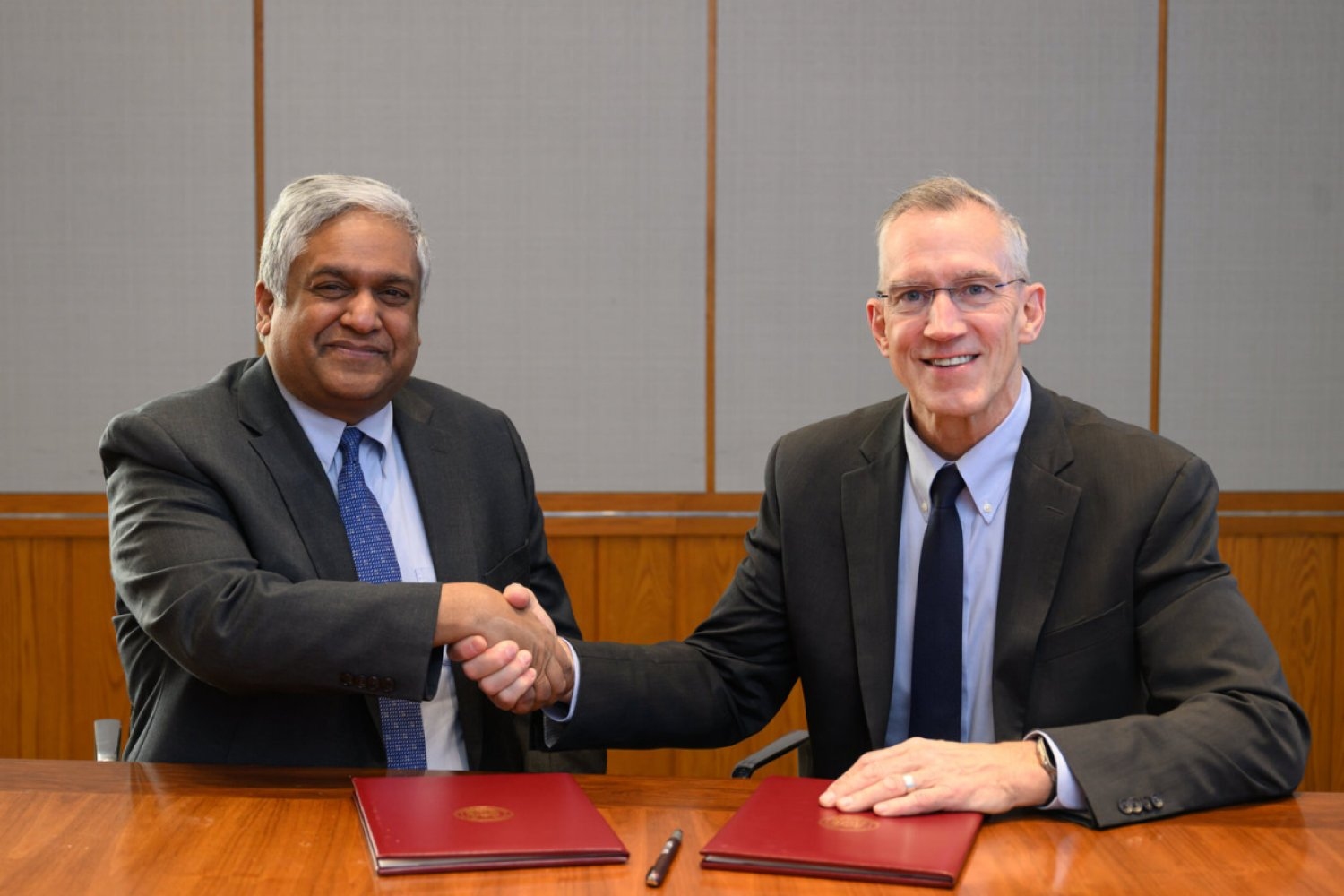

MIT and GlobalFoundries partner to enhance semiconductor technologies, focusing on AI and power efficiency with silicon photonics. Collaboration between academia and industry drives transformative solutions for the next generation of chip technologies.

Artificial intelligence company OpenAI launches video generation tool Sora in UK, sparking copyright debate led by film director Beeban Kidron. Training data crucial for Sora's existence, intensifying tech sector vs. creative industries clash over copyright.

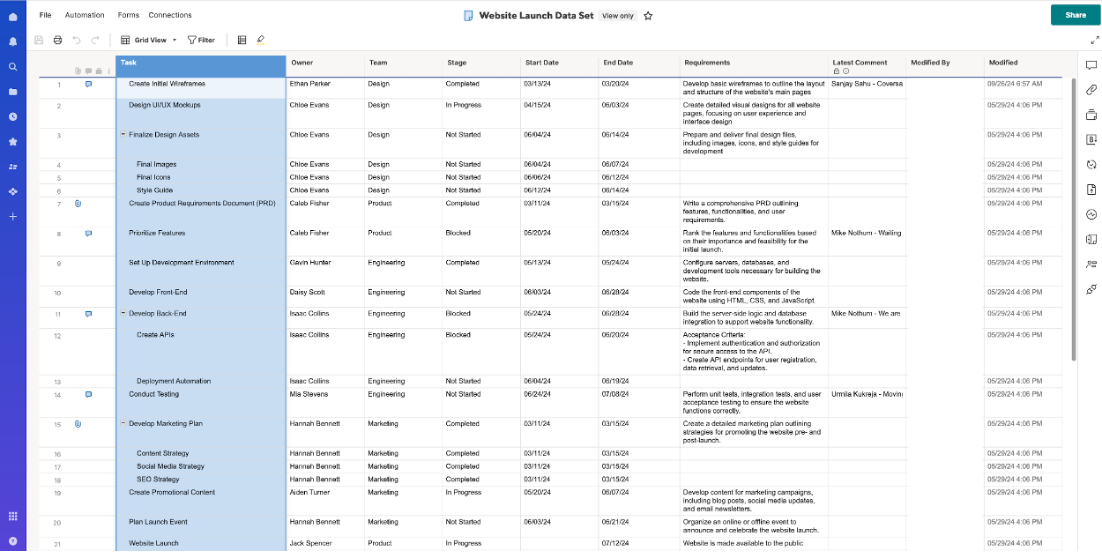

Amazon Q Business is a generative AI-powered assistant for enterprises, integrating with platforms like Smartsheet for enhanced insights and task management. Smartsheet combines spreadsheet simplicity with collaboration features, benefiting organizations worldwide.

Amazon Bedrock offers high-performing FMs for generative AI applications. Anthropic’s Claude 3.5 Sonnet LLM enhances problem-solving and critical thinking skills for knowledge workers.

Transformers are revolutionizing NLP with efficient self-attention mechanisms. Integrating transformers in computer vision faces scalability challenges, but promising breakthroughs are on the horizon.