Green Beret used AI platform to search for explosives info in Afghanistan. Tesla Cybertruck attacker utilized generative AI for attack planning.

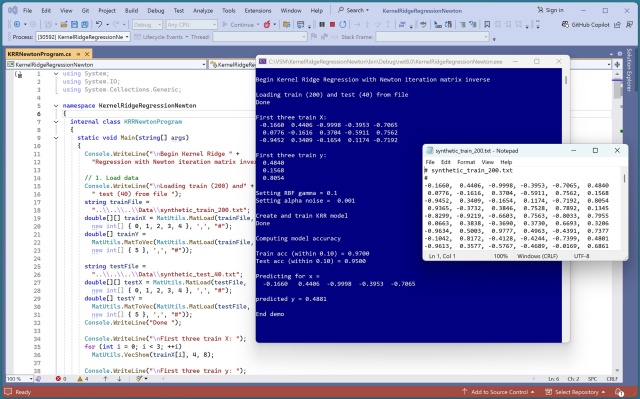

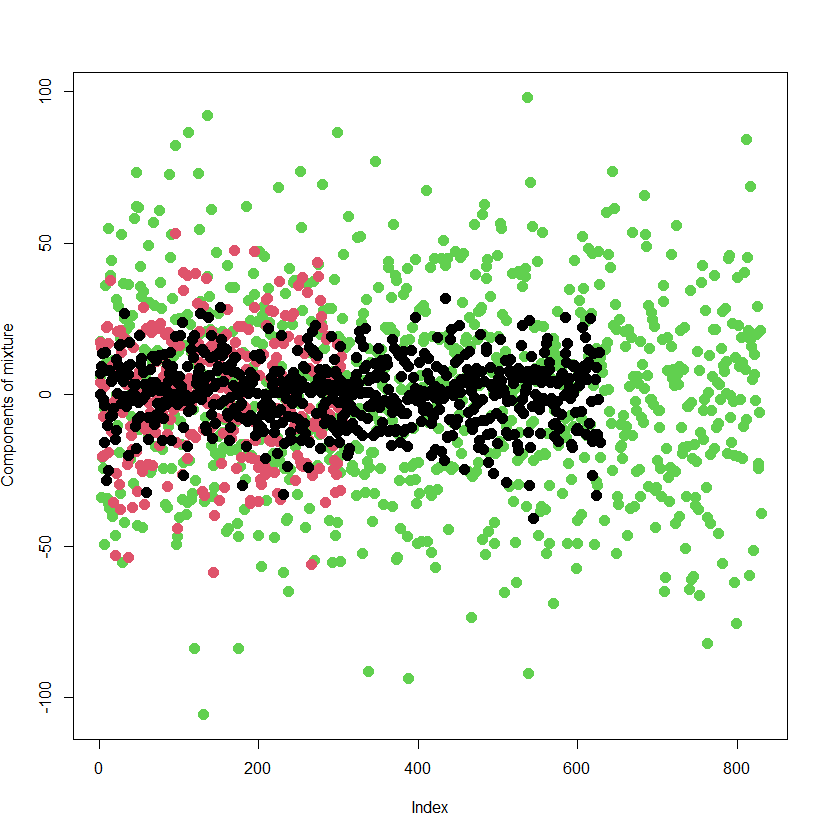

Common regression techniques include linear regression, k-nearest neighbors, and kernel ridge regression (KRR). KRR is powerful for complex non-linear data but may not scale well to large datasets. A refactored KRR implementation using Newton iteration showed promising results in a demo on synthetic data.
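
To make the scaling concern concrete, here is a minimal, dependency-free sketch of kernel ridge regression. It uses the textbook closed-form solve of (K + lam·I)·alpha = y, not the Newton iteration mentioned in the summary. The function names, the RBF kernel choice, and the values of `lam` and `gamma` are illustrative assumptions, not taken from the article.

```python
import math

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-gamma * sq_dist)

def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def krr_fit(X, y, lam=1e-3, gamma=1.0):
    """Return dual coefficients alpha solving (K + lam * I) alpha = y."""
    n = len(X)
    K = [[rbf_kernel(X[i], X[j], gamma) for j in range(n)] for i in range(n)]
    for i in range(n):
        K[i][i] += lam  # ridge term on the diagonal
    return solve_linear(K, y)

def krr_predict(X_train, alpha, x_new, gamma=1.0):
    """Predict as a kernel-weighted sum over all training points."""
    return sum(a * rbf_kernel(xi, x_new, gamma) for a, xi in zip(alpha, X_train))

# Synthetic data (an assumption for illustration): 21 samples of sin(x) on [-3, 3].
X_train = [[i / 10.0] for i in range(-30, 31, 3)]
y_train = [math.sin(x[0]) for x in X_train]

alpha = krr_fit(X_train, y_train, lam=1e-3, gamma=1.0)
pred = krr_predict(X_train, alpha, [0.5], gamma=1.0)
print(f"prediction at 0.5: {pred:.3f}, true value: {math.sin(0.5):.3f}")
```

The n-by-n kernel matrix needs memory quadratic in the number of samples and the direct solve takes cubic time, which is the usual reason KRR struggles on large datasets.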

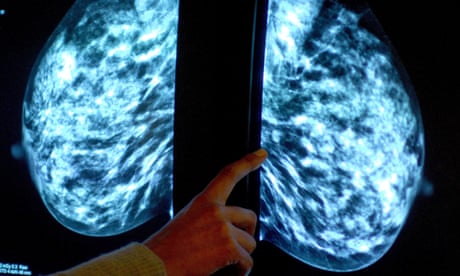

Artificial intelligence boosts breast cancer detection rates without increasing false positives in real-world test, researchers find. Studies show AI's potential to assist in spotting cancer in various medical imaging scans.

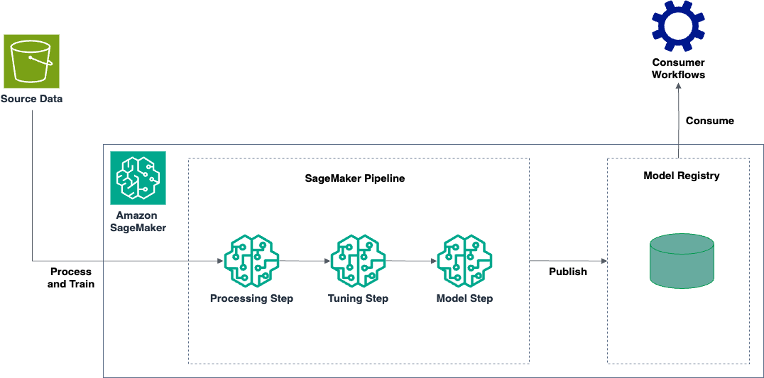

Build automated log anomaly detection mechanism using Amazon SageMaker. Process log data, train ML models, and automate workflow with SageMaker Pipelines.

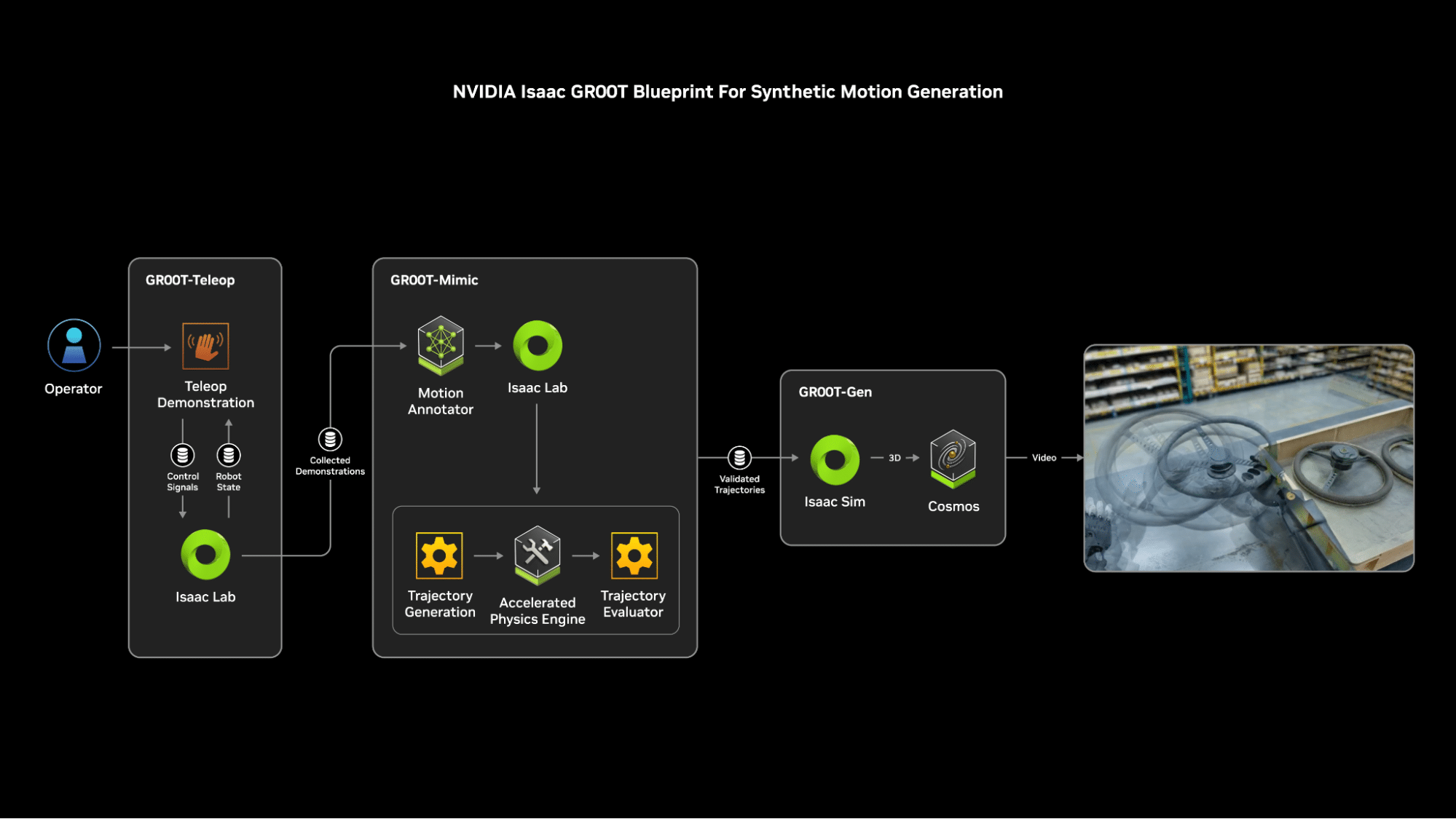

NVIDIA's Isaac GR00T Blueprint accelerates humanoid robot development with synthetic motion data. Cosmos platform narrows simulation-to-real gap for physical AI innovation.

NVIDIA Media2 uses AI to transform content creation and delivery in the media industry, staying on the cutting edge with technologies like NVIDIA Holoscan and Blackwell architecture. NVIDIA AI Enterprise offers a range of microservices for enhanced AI capabilities in media companies' workflows.

AI agents could revolutionize business output, says OpenAI CEO Sam Altman. Virtual employees may join workforces this year, changing how companies operate.

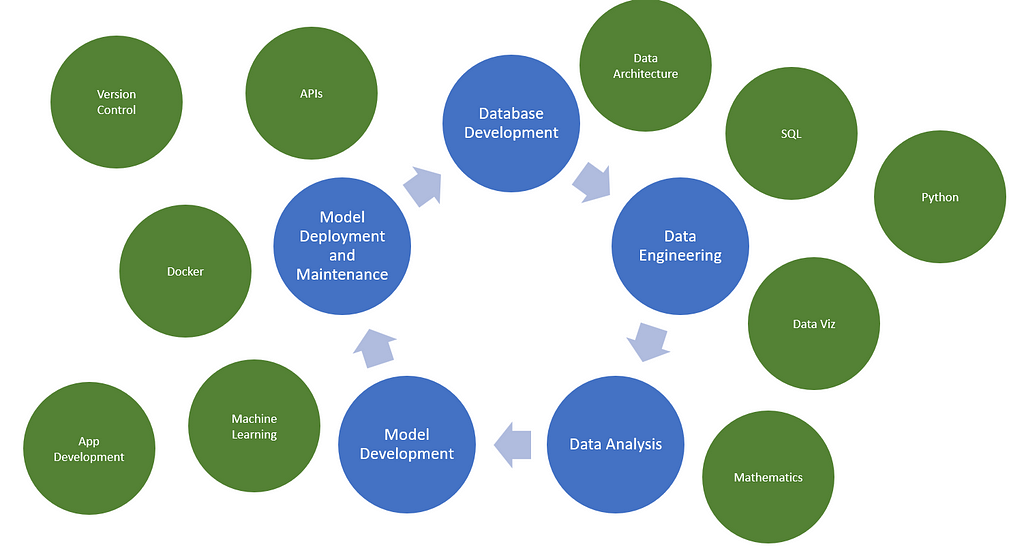

Data scientists' roles evolving to include ML Ops and deployment skills beyond model development. Learn how to deploy ML models using FastAPI and Docker for productionized APIs.

AI system can suggest perfect youth prospects with specific player attributes desired by football managers, potentially boosting team performance. Technologists claim managers could wish for players with traits like Erling Haaland's aggression or Jude Bellingham's poise, making it a sporting Aladdin's lamp.

Meta's AI characters, including 'proud Black queer momma', sparked viral conversations before being deleted. The company plans to introduce more AI character profiles despite previous removals.

New modeling tool combines feature selection with regression to address limitations and ensure consistent parameter estimation. Techniques like Lasso Regression and Bayesian Variable Selection aim to optimize model performance by selecting relevant variables and estimating coefficients accurately.
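
As a rough illustration of how Lasso selects variables while estimating coefficients, the sketch below runs cyclic coordinate descent on a tiny synthetic design. It is not the new tool described in the summary. The function names, the data, and the penalty `lam = 0.1` are assumptions chosen so the result is easy to check by hand.

```python
def soft_threshold(z, t):
    """Shrink z toward zero by t, returning exactly 0 when |z| <= t."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0

def lasso_cd(X, y, lam, n_iter=50):
    """Minimise (1/2n) * ||y - Xw||^2 + lam * ||w||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    w = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of feature j with the residual that ignores feature j.
            rho = 0.0
            for i in range(n):
                fit_without_j = sum(X[i][k] * w[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - fit_without_j)
            rho /= n
            w[j] = soft_threshold(rho, lam) / col_sq[j]
    return w

# Full 2^3 factorial design: three mutually orthogonal +/-1 columns (illustrative data).
X = [[a, b, c] for a in (1, -1) for b in (1, -1) for c in (1, -1)]
# Two strong effects and one very weak effect on the third feature.
y = [3 * a - 2 * b + 0.05 * c for a, b, c in X]

w = lasso_cd(X, y, lam=0.1)
print(w)  # approximately [2.9, -1.9, 0.0]
```

The weak third feature is dropped entirely, while the two retained coefficients are each pulled toward zero by the penalty (2.9 instead of 3, and -1.9 instead of -2). That shrinkage bias is a commonly cited limitation of plain Lasso.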

Major updates in AI ethics for 2024 include breakthroughs in LLM interpretability by Anthropic, human-centered AI design, and new AI legislation such as the EU’s AI Act and California laws targeting deepfakes and misinformation. Key highlights include the focus on explainable AI and human empowerment, along with heuristics for evaluating AI legislation, in the updates for the Johns Hopkins AI ethics ...

Geoffrey Hinton, the "godfather" of AI, highlights the struggle of intelligent beings controlled by less intelligent ones. The rise of AI demands a realistic understanding of our lack of control over both smart and dumb forces, as seen in current events like the coronavirus pandemic.

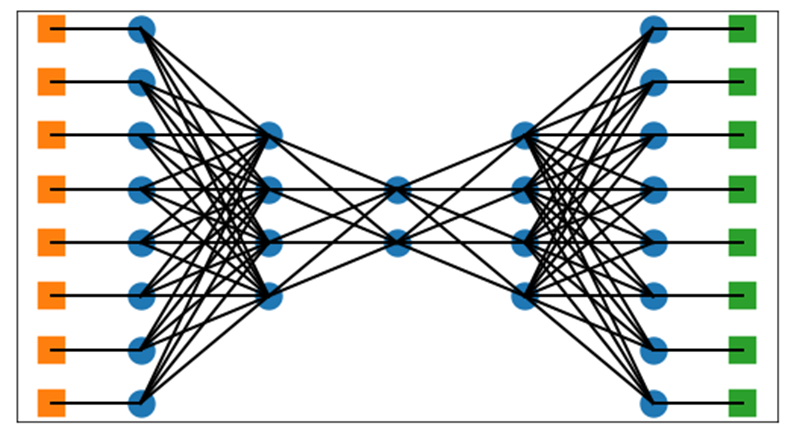

Deep learning excels in outlier detection for image, video, and audio data, but struggles with tabular data. Traditional methods still prevail in tabular outlier detection, yet deep learning shows promise for future advancements.
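
For a sense of what a traditional tabular method looks like, here is a minimal sketch of distance-based outlier scoring, where each row is scored by the distance to its k-th nearest neighbor. The function name, the toy rows, and `k = 2` are assumptions for illustration. The summary does not say which traditional methods the article covers.

```python
import math

def knn_outlier_scores(rows, k=2):
    """Score each row by its Euclidean distance to its k-th nearest neighbour."""
    scores = []
    for i, a in enumerate(rows):
        dists = sorted(math.dist(a, b) for j, b in enumerate(rows) if j != i)
        scores.append(dists[k - 1])
    return scores

# Toy table (illustrative): five rows in a tight cluster plus one planted outlier.
rows = [
    [1.0, 1.1],
    [0.9, 1.0],
    [1.1, 0.9],
    [1.0, 0.95],
    [0.95, 1.05],
    [8.0, 8.0],  # planted outlier
]

scores = knn_outlier_scores(rows, k=2)
most_anomalous = max(range(len(rows)), key=scores.__getitem__)
print(most_anomalous, round(scores[most_anomalous], 2))
```

The highest score flags the planted row. In practice, numeric columns would usually be scaled first so that no single feature dominates the distance.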

MIT researchers have developed a technique using large language models to accurately predict antibody structures, aiding in identifying potential treatments for infectious diseases like SARS-CoV-2. This breakthrough could save drug companies money by ensuring the right antibodies are chosen for clinical trials, with potential applications in studying super responders to diseases like HIV.