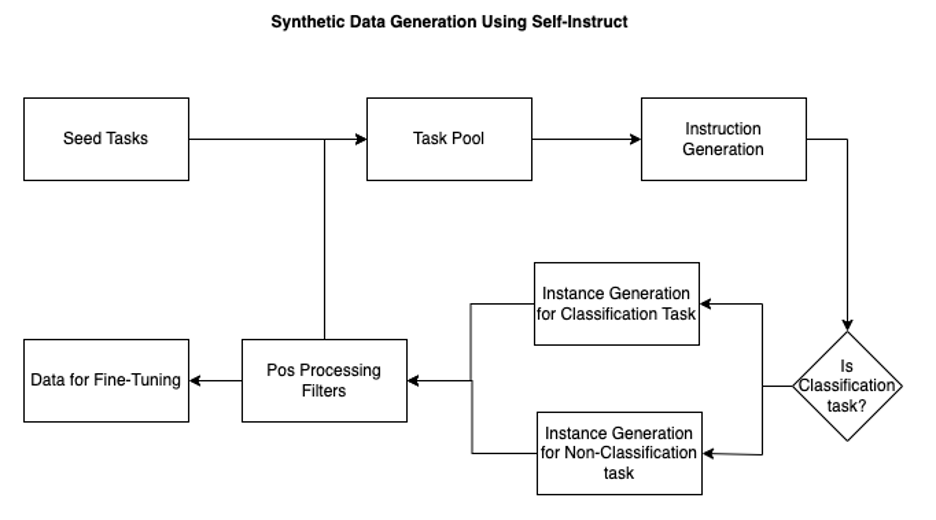

Large language models (LLMs) require well-curated datasets for optimal performance. Data preprocessing involves extracting text from diverse sources and filtering for quality, using tools like OCR and regex filters.
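As a rough illustration of the regex-based quality filtering mentioned above, the Python sketch below strips URLs, collapses whitespace, and drops documents that are too short or mostly non-alphabetic. The function names and thresholds (`min_words=5`, `min_alpha_ratio=0.6`) are hypothetical choices, not values from the article. Real preprocessing pipelines typically combine many more heuristics than these.

```python
import re
from typing import Iterable, List

# Hypothetical cleaning patterns: URLs and runs of whitespace
URL_RE = re.compile(r"https?://\S+")
WS_RE = re.compile(r"\s+")
WORD_RE = re.compile(r"[A-Za-z]+")


def clean(text: str) -> str:
    """Remove URLs and collapse all whitespace runs into single spaces."""
    text = URL_RE.sub("", text)
    return WS_RE.sub(" ", text).strip()


def passes_quality(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Hypothetical heuristic: enough words and mostly alphabetic characters."""
    words = WORD_RE.findall(text)
    if len(words) < min_words:
        return False
    non_space = [ch for ch in text if not ch.isspace()]
    if not non_space:
        return False
    alpha = sum(ch.isalpha() for ch in non_space)
    return alpha / len(non_space) >= min_alpha_ratio


def filter_docs(docs: Iterable[str]) -> List[str]:
    """Clean each document and keep only those that pass the quality check."""
    kept = []
    for doc in docs:
        cleaned = clean(doc)
        if passes_quality(cleaned):
            kept.append(cleaned)
    return kept


if __name__ == "__main__":
    docs = [
        "The quick brown fox jumps over the lazy dog.",
        "$$$ 123 ### 456",
        "Visit https://x.com   now",
    ]
    print(filter_docs(docs))  # ['The quick brown fox jumps over the lazy dog.']
```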

Dive into NieR:Automata and NieR Replicant ver.1.22474487139 on GeForce NOW for captivating RPG adventures. Explore HoYoverse's Zenless Zone Zero for an adrenaline-packed journey in the cloud.

Artificial intelligence has outperformed experts at identifying whisky notes. The AI accurately predicts both aromas and origin, advancing automated whisky aroma analysis.

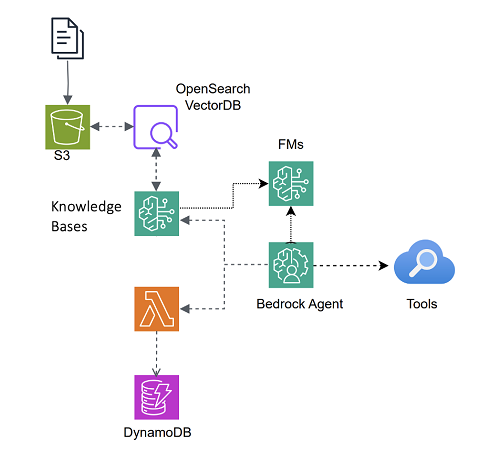

Agentic systems use foundation models to collaborate autonomously and solve problems efficiently. AWS has introduced multi-agent collaboration to improve success on complex tasks and boost productivity.

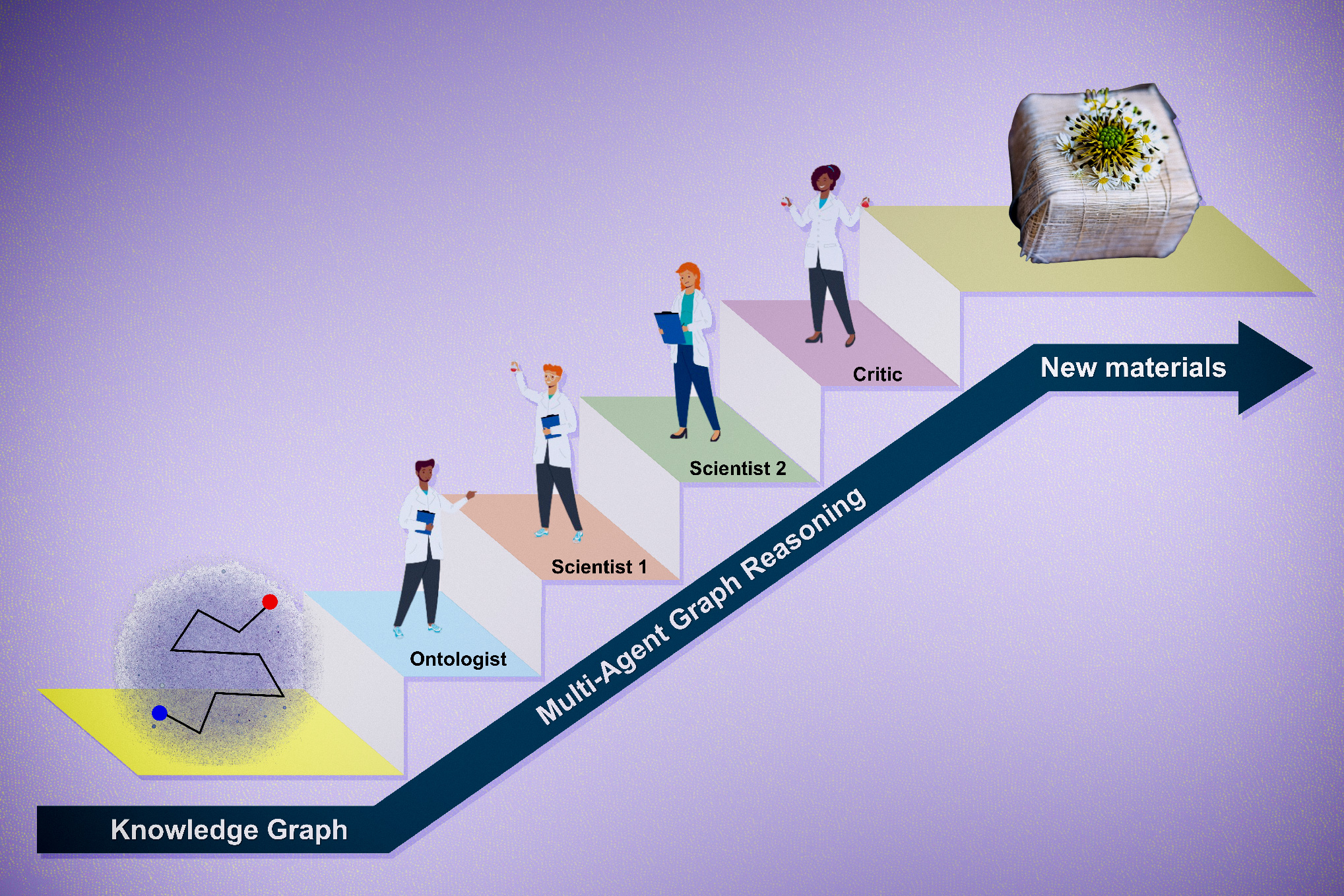

MIT researchers developed SciAgents, an AI framework for generating evidence-driven research hypotheses in biologically inspired materials, leveraging graph reasoning methods. Co-authored by Alireza Ghafarollahi and Markus Buehler, the study aims to simulate the collective intelligence of scientists to accelerate the discovery process.

Imagination Technologies has licensed UK technology to Chinese firms for AI chips used in advanced weapons systems, raising national security concerns. The Chinese companies Moore Threads and Biren Technology are under US export restrictions for developing AI chips for military purposes.

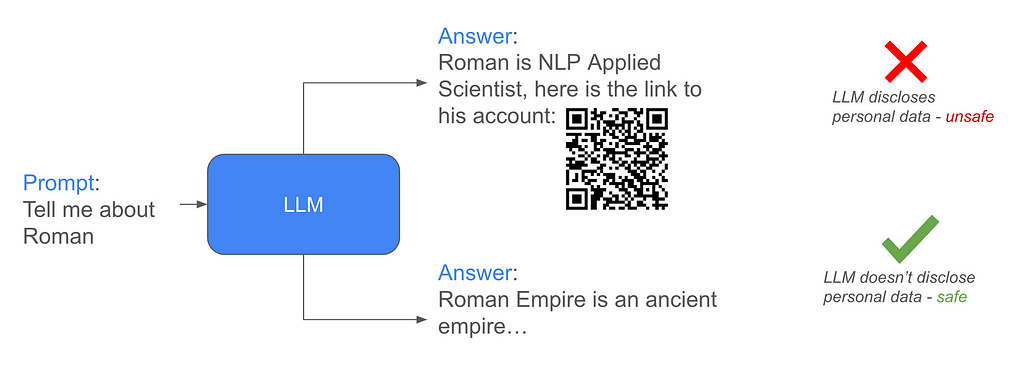

The second-prize winner of a NeurIPS 2024 challenge presents an approach to effective LLM unlearning that does not require a retain dataset, using reinforcement learning and classifier-free guidance (CFG). The competition focused both on forcing an LLM to generate personal data and on protecting that data; the solution combined supervised fine-tuning, reinforcement learning, and CFG.
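For reference, classifier-free guidance in its common LLM form mixes next-token logits (or log-probabilities) from a conditioned pass and an unconditioned pass. The summary does not say which prompts the winning solution used for each pass or which guidance scale it chose, so the symbols below are generic:

$$
\tilde{\ell}_t = \ell_t^{\text{uncond}} + \gamma \left( \ell_t^{\text{cond}} - \ell_t^{\text{uncond}} \right)
$$

Here $\gamma = 0$ recovers the unconditioned logits, $\gamma = 1$ recovers the conditioned logits, and $\gamma > 1$ pushes generation further in the direction of the conditioning.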

Soft Actor-Critic (SAC) is an off-policy deep RL algorithm that addresses stability issues in high-dimensional environments. SAC promotes robustness and exploration, making it useful for bioengineering applications such as de novo drug design.
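The exploration behavior mentioned above comes from SAC's maximum-entropy objective. As formulated in the original SAC paper, the policy maximizes expected reward plus an entropy bonus:

$$
J(\pi) = \sum_{t=0}^{T} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}\left[ r(s_t, a_t) + \alpha\, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \right]
$$

Here $\rho_\pi$ is the state-action distribution induced by the policy, $\mathcal{H}$ is the policy's entropy, and the temperature $\alpha$ trades reward against entropy. A larger $\alpha$ favors more stochastic, exploratory policies.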

The knapsack problem appears throughout product analytics, from marketing campaigns to retail space optimization. Learn how to solve it with linear programming for informed decision-making.
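The article solves the knapsack problem with linear programming. As a self-contained alternative for small integer budgets, the sketch below swaps in dynamic programming, which finds the exact 0/1 optimum without an LP solver. The function name `knapsack_01` and the example campaign data are hypothetical.

```python
from typing import List, Tuple


def knapsack_01(values: List[int], weights: List[int], capacity: int) -> Tuple[int, List[int]]:
    """Exact 0/1 knapsack via dynamic programming over integer capacities.

    Returns (best total value, sorted indices of the chosen items).
    """
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    n = len(values)
    # best[i][c] = max value achievable using the first i items within capacity c
    best = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        v, w = values[i - 1], weights[i - 1]
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # option 1: skip item i-1
            if w <= c and best[i - 1][c - w] + v > best[i][c]:
                best[i][c] = best[i - 1][c - w] + v  # option 2: take item i-1
    # Backtrack: an item was taken wherever including it changed the optimum
    chosen = []
    c = capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= weights[i - 1]
    chosen.reverse()
    return best[n][capacity], chosen


if __name__ == "__main__":
    # Hypothetical campaigns: expected returns, costs, and a total budget of 5
    returns = [3, 4, 5, 6]
    costs = [2, 3, 4, 5]
    print(knapsack_01(returns, costs, 5))  # (7, [0, 1])
```

This approach takes O(n · capacity) time and memory, so an LP or integer-programming solver scales better for large or non-integer budgets. Note that a plain LP relaxation allows fractional items; requiring whole items turns the model into an integer program.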

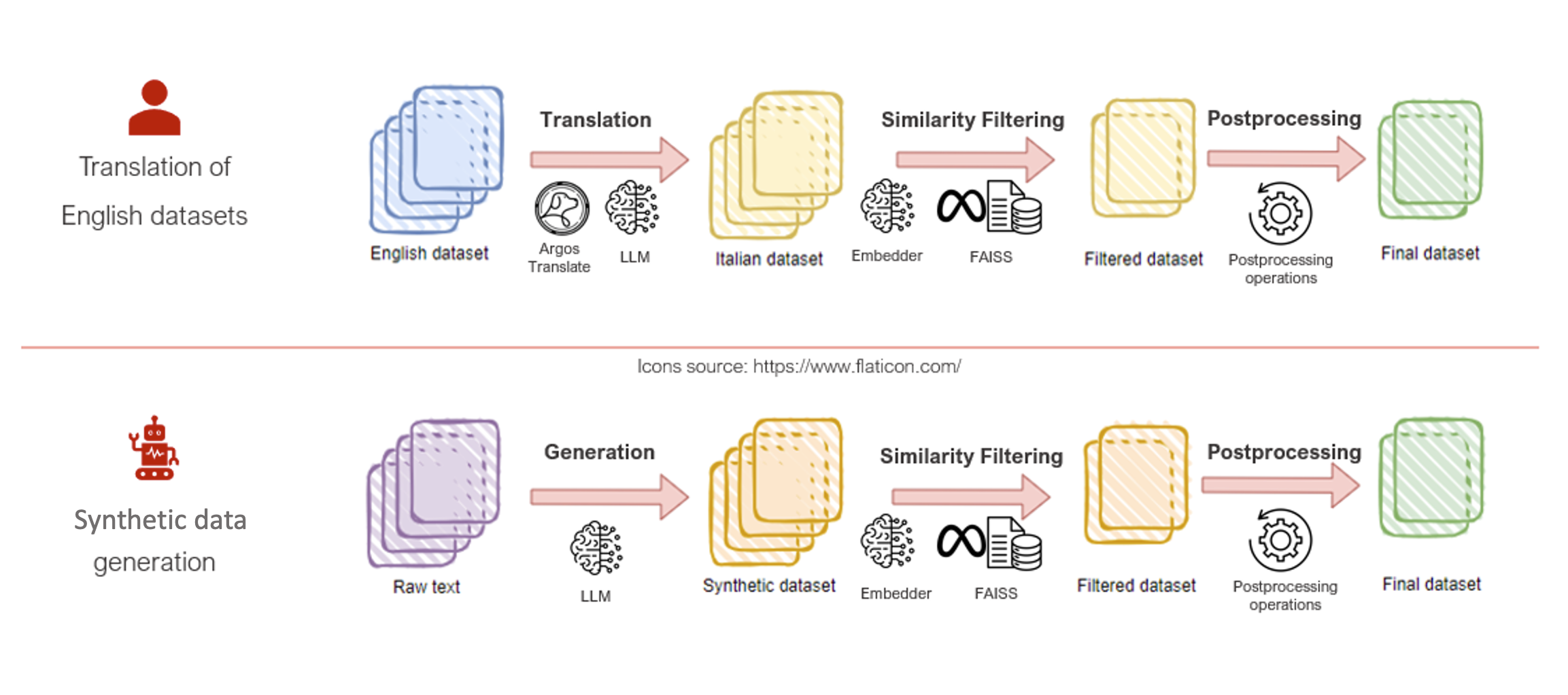

Fastweb is leveraging AWS in its AI journey, fine-tuning Mistral 7B for Italian-language tasks to unlock new opportunities. The team's strategic decision to build on AWS AI and ML services enabled efficient data preparation, early results, and computational efficiency.

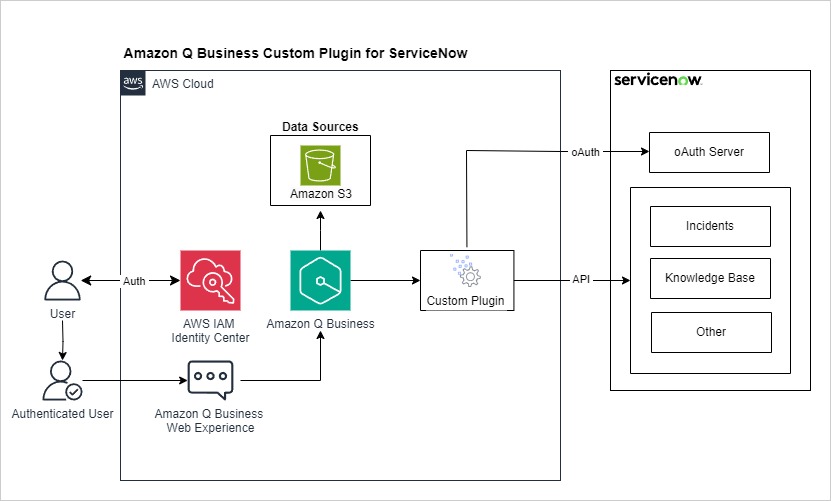

Enterprise customers are adopting generative AI assistants such as Amazon Q Business to enhance user productivity and customer experience. Amazon Q Business lets users interact in natural language, securely access data sources, and integrate with third-party applications such as Jira and Salesforce across a range of use cases.

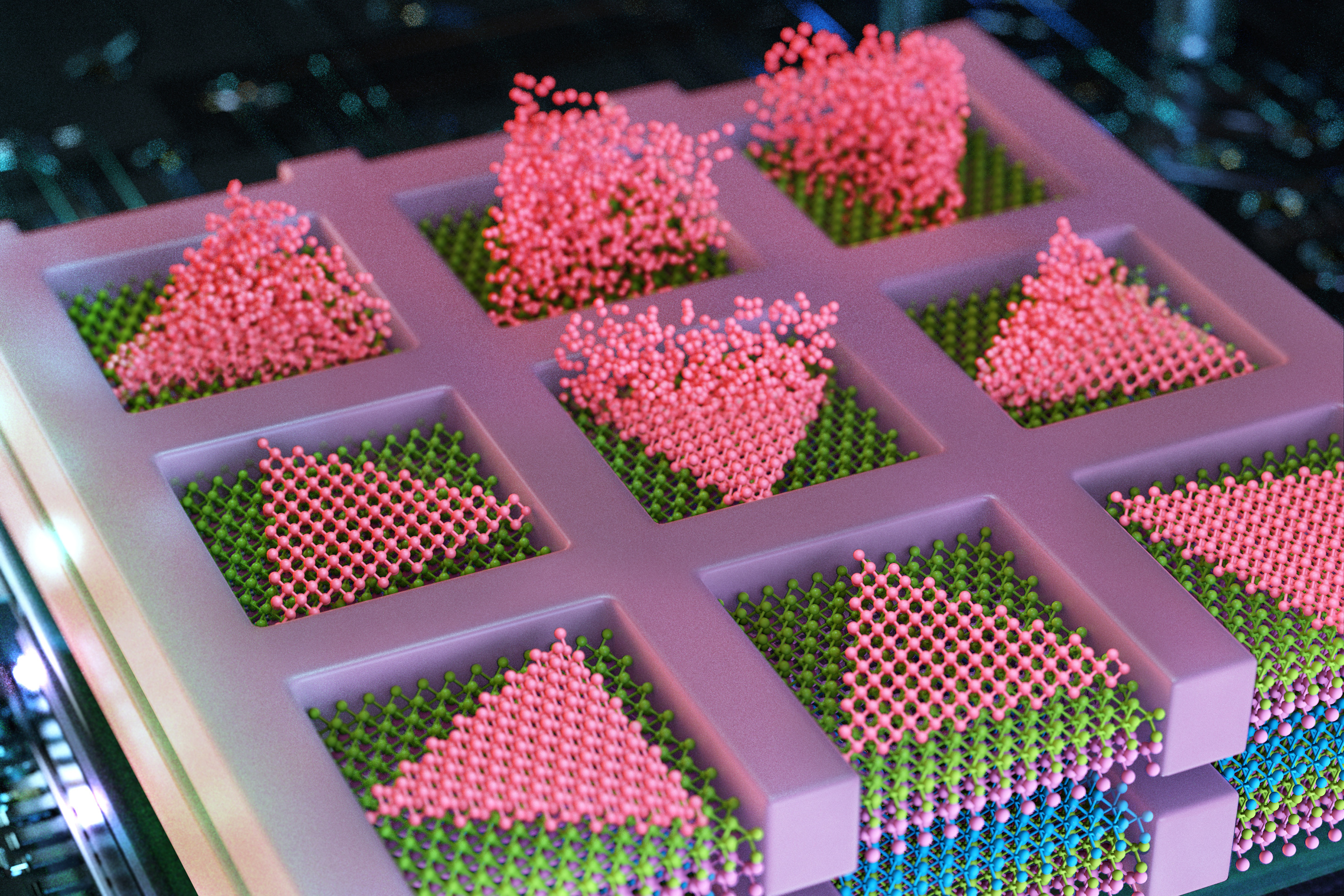

Chip manufacturers are exploring multilayered chip designs to increase computing power. MIT engineers have developed a method to stack high-quality semiconducting layers without bulky silicon substrates, potentially revolutionizing AI hardware.

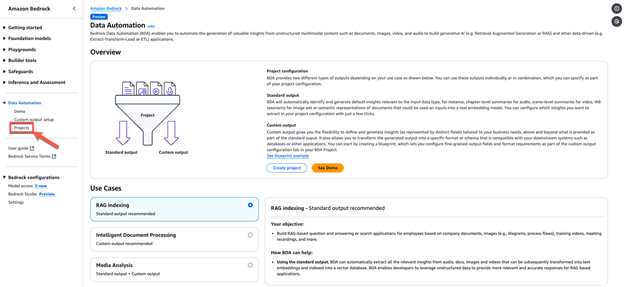

Amazon Bedrock Data Automation simplifies data extraction from unstructured assets, offering a unified experience for developers to automate insights from documents, images, audio, and videos. Customers can easily generate standard or custom outputs, integrating Amazon Bedrock Data Automation into existing applications for efficient media analysis and intelligent document processing workflows.

The UK government has proposed an opt-out scheme under which creatives would have to opt out to stop their work being used by AI companies such as Google and OpenAI. Book publishers and campaigners have criticized the untested copyright exemption.

MIT scientists release Boltz-1, an open-source AI model rivaling AlphaFold3 for predicting protein structures. Boltz-1 aims to accelerate drug development and foster global collaboration in biomolecular modeling.