Meta allows US national security agencies and defense contractors to use its AI model, Llama, despite restrictions in its own usage policy. The exception covers the US, UK, Canada, Australia, and New Zealand.

Austin is booming with jobs and entertainment, but traffic is a major issue. Rekor uses NVIDIA technology to help Texas manage traffic, reduce incidents, and improve safety.

The UK government is launching a GPT-4o-based chatbot on the Gov.UK website to help users with regulations, and warns that it may "hallucinate". Users can expect varied results while the tool is tested by 15,000 businesses before a wider release.

A study finds that popular generative AI models such as GPT-4 can give accurate driving directions in New York City without having a true internal map of the city. The researchers developed new metrics to test whether large language models actually understand the world.

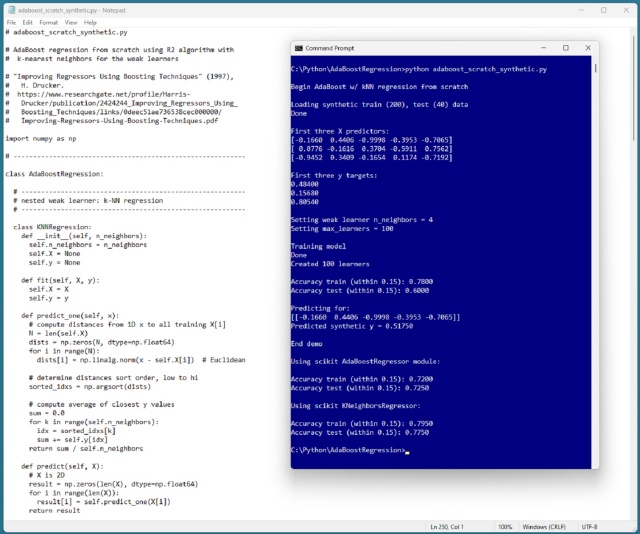

This post implements AdaBoost regression from scratch using k-nearest neighbors instead of decision trees as the base learner, following the AdaBoost.R2 algorithm. The author examines the intricacies of the weighted median used to combine the learners' predictions, contributing a new approach to AdaBoost regression.
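A minimal sketch of AdaBoost.R2 with a k-NN base learner, written in pure Python. It assumes linear loss, resamples the training set by weight on each round (plain k-NN takes no sample weights), and combines learners with a weighted median using weights log(1/β). This follows the textbook AdaBoost.R2 steps, not the article's own code; every class and function name here is hypothetical.

```python
import math
import random


def knn_predict(X_train, y_train, x, k):
    """Plain k-NN regression: mean target of the k closest training points."""
    nearest = sorted(range(len(X_train)), key=lambda i: math.dist(X_train[i], x))[:k]
    return sum(y_train[i] for i in nearest) / len(nearest)


def weighted_median(values, weights):
    """Smallest value whose cumulative weight reaches half of the total weight."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    half = sum(weights) / 2
    cumulative = 0.0
    for i in order:
        cumulative += weights[i]
        if cumulative >= half:
            return values[i]
    return values[order[-1]]


class AdaBoostR2KNN:
    """Hypothetical AdaBoost.R2 regressor with a k-NN base learner and linear loss."""

    def __init__(self, n_estimators=10, k=3, seed=0):
        self.n_estimators = n_estimators
        self.k = k
        self.rng = random.Random(seed)
        self.learners = []  # each learner is a resampled (X, y) training set
        self.alphas = []    # learner weights log(1 / beta)

    def fit(self, X, y):
        n = len(X)
        w = [1.0 / n] * n
        for _ in range(self.n_estimators):
            # k-NN has no sample weights, so draw a weighted bootstrap sample instead.
            idx = self.rng.choices(range(n), weights=w, k=n)
            Xs, ys = [X[i] for i in idx], [y[i] for i in idx]
            errors = [abs(knn_predict(Xs, ys, X[i], self.k) - y[i]) for i in range(n)]
            max_err = max(errors)
            if max_err == 0:  # perfect fit on the full training set: keep it and stop
                self.learners.append((Xs, ys))
                self.alphas.append(1.0)
                break
            losses = [e / max_err for e in errors]  # linear loss in [0, 1]
            avg_loss = sum(wi * li for wi, li in zip(w, losses))
            if avg_loss >= 0.5:  # learner too weak: stop boosting
                break
            beta = avg_loss / (1.0 - avg_loss)
            self.learners.append((Xs, ys))
            self.alphas.append(math.log(1.0 / beta))
            # Well-predicted points (low loss) shrink the most; hard points gain relative weight.
            w = [wi * beta ** (1.0 - li) for wi, li in zip(w, losses)]
            total = sum(w)
            w = [wi / total for wi in w]
        return self

    def predict(self, x):
        if not self.learners:
            raise RuntimeError("no base learner was accepted during fit")
        preds = [knn_predict(Xs, ys, x, self.k) for Xs, ys in self.learners]
        return weighted_median(preds, self.alphas)


X = [[float(i)] for i in range(20)]
y = [2.0 * i for i in range(20)]
model = AdaBoostR2KNN(n_estimators=10, k=3, seed=0).fit(X, y)
print(model.predict([10.0]))
```

The weighted median, rather than a weighted mean, keeps a single badly fitted learner from dragging the ensemble's prediction away from the consensus.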

VBK, the largest Dutch publisher, which was acquired by Simon & Schuster, will trial AI for translating books into English. The AI will assist with translating commercial fiction, Vanessa van Hofwegen confirmed.

Es Devlin wins $100,000 Eugene McDermott Award at MIT for innovative art exploring biodiversity, AI poetry, and more. Devlin's residency includes a public lecture and collaboration with MIT's creative community.

MIT researchers have developed 3D transistors made from ultrathin materials to surpass the energy-efficiency limits of silicon. These nanowire devices deliver high performance at lower voltages and could lead to far more energy-efficient electronics.

AI models such as Llama 3.1 require large amounts of GPU memory, which makes them hard to run on consumer devices. Research on quantization offers a solution: reducing model size so that models can run locally.
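To show why quantization shrinks memory, here is a minimal sketch of symmetric per-tensor int8 quantization, one common scheme among several, plus a rough weight-memory estimate. The function names are hypothetical, and the estimate covers the weights alone, ignoring activations and the KV cache.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate float weights."""
    return [v * scale for v in q]


def weight_memory_gb(n_params, bits_per_weight):
    """Approximate memory for the weights alone (no activations or KV cache)."""
    return n_params * bits_per_weight / 8 / 1e9


weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)  # each value lands within scale / 2 of the original

# An 8-billion-parameter model at 16, 8, and 4 bits per weight: 16 GB, 8 GB, 4 GB.
for bits in (16, 8, 4):
    print(f"8B params at {bits}-bit: {weight_memory_gb(8e9, bits):.0f} GB")
```

Halving the bits per weight halves weight memory, at the cost of a rounding error of up to half a quantization step per weight.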

Democratic secretaries of state are asking social media companies for their plans to moderate inflammatory content and AI-generated content during elections. Seven officials, including those from Maine and Washington, urged Google, X, and Meta to address the issue.

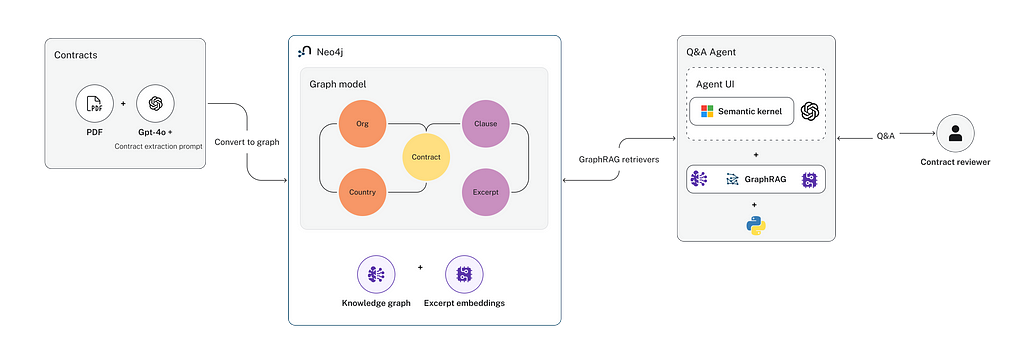

A new GraphRAG approach for efficiently extracting data from commercial contracts and building a Q&A agent. By focusing on targeted information extraction and organizing the results into a knowledge graph, it improves accuracy and performance, making it suitable for complex legal questions.
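The core idea of pairing targeted extraction with a knowledge graph can be sketched in a few lines: per-contract fields become (subject, relation, object) triples, and a question becomes a graph lookup. This is not the article's implementation; the extraction schema, relation names, and company names below are all hypothetical, and in a real GraphRAG system an LLM would perform the extraction and translate questions into graph queries.

```python
from collections import defaultdict


class ContractGraph:
    """Tiny in-memory knowledge graph of (subject, relation, object) triples."""

    def __init__(self):
        self.edges = defaultdict(list)  # subject -> list of (relation, object)

    def add(self, subject, relation, obj):
        self.edges[subject].append((relation, obj))

    def objects(self, subject, relation):
        return [o for r, o in self.edges.get(subject, []) if r == relation]

    def subjects(self, relation, obj):
        return sorted(s for s, outs in self.edges.items() if (relation, obj) in outs)


def load_extractions(extractions):
    """Turn targeted per-contract extractions (hypothetical schema) into triples."""
    graph = ContractGraph()
    for record in extractions:
        cid = record["contract_id"]
        for party in record["parties"]:
            graph.add(cid, "HAS_PARTY", party)
        graph.add(cid, "GOVERNED_BY", record["governing_law"])
    return graph


def contracts_with_party_under_law(graph, party, law):
    """Answer 'Which contracts with <party> are governed by <law>?' via graph lookups."""
    with_party = set(graph.subjects("HAS_PARTY", party))
    return [c for c in graph.subjects("GOVERNED_BY", law) if c in with_party]


# Hypothetical output of an LLM extraction step over three contracts.
extractions = [
    {"contract_id": "C-001", "parties": ["Acme Corp", "Globex"], "governing_law": "New York"},
    {"contract_id": "C-002", "parties": ["Acme Corp", "Initech"], "governing_law": "Delaware"},
    {"contract_id": "C-003", "parties": ["Globex", "Initech"], "governing_law": "New York"},
]
graph = load_extractions(extractions)
print(contracts_with_party_under_law(graph, "Acme Corp", "New York"))  # ['C-001']
```

Because answers come from exact graph lookups over pre-extracted fields rather than from retrieved text chunks, multi-condition questions like this one stay precise.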

W. E. B. Du Bois' legacy of research on systemic racism continues at MIT with the ICSR Data Hub, which provides vital criminal justice datasets for analysis and for developing solutions. Computational technologies are uncovering racial bias in American society, offering hope for a more equitable future.

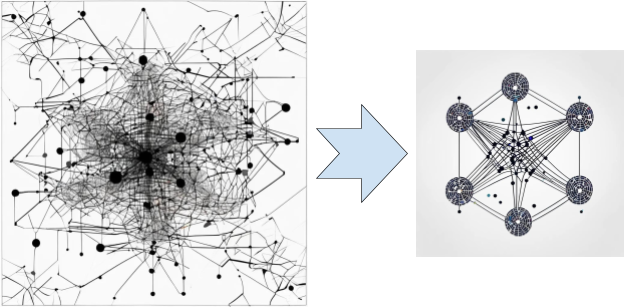

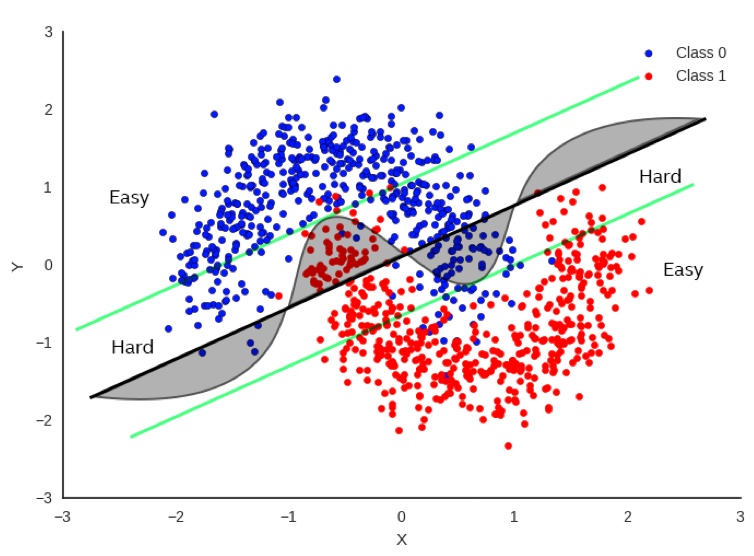

Dynamic execution can optimize the performance of AI tasks by distinguishing between hard and easy problems. By estimating how complex each data point is and spending more computation only where it is needed, accuracy can be maintained while saving computational resources.
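One common way to realize this idea is a confidence-based cascade: a cheap model handles every input, and only low-confidence ("hard") inputs are escalated to an expensive model. The sketch below assumes that technique, which may differ from the article's method; both toy models and the 0.5 threshold are hypothetical.

```python
def cascade(inputs, cheap_model, expensive_model, threshold):
    """Run the cheap model first; escalate only low-confidence (hard) inputs."""
    outputs, escalated = [], 0
    for x in inputs:
        label, confidence = cheap_model(x)
        if confidence < threshold:  # treat as a hard example
            label, _ = expensive_model(x)
            escalated += 1
        outputs.append(label)
    return outputs, escalated


# Hypothetical toy models for classifying the sign of a number.
def cheap_model(x):
    # Fast but biased near zero; confidence grows with distance from zero.
    return ("pos" if x >= 1 else "neg"), min(1.0, abs(x) / 10)


def expensive_model(x):
    # Slow but always correct in this toy setting.
    return ("pos" if x >= 0 else "neg"), 1.0


outputs, escalated = cascade([-20, -12, -3, 0, 2, 15, 30], cheap_model, expensive_model, 0.5)
print(outputs, escalated)  # ['neg', 'neg', 'neg', 'pos', 'pos', 'pos', 'pos'] 3
```

Only 3 of the 7 inputs reach the expensive model, and escalation corrects the cheap model's wrong answer for 0.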

An AI goes head to head with human solvers in a cryptic crossword challenge, with The Times' competition setting the gold standard. The AI, named Ross, competes via the Crossword Genius app.

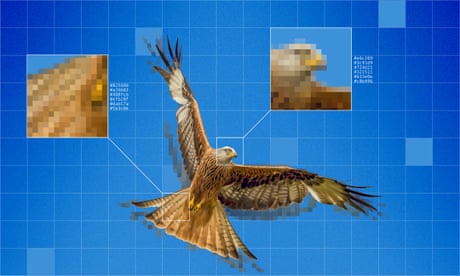

Generative AI is reshaping the art world, with humans still playing a crucial role in creating AI art. Rachel Ossip explores the intersection of technology and creativity in the evolving art landscape.