Robert Downey Jr, returning to the MCU as Doctor Doom, vows to sue over AI replicas of himself. He threatened the legal action on a podcast.

A paper on LLM reasoning questions the math capabilities of AI models, revealing wide variability in their performance. Not all models excel equally, which points to potential data contamination and the need for synthetic data.

MIT researchers developed a technique to train general-purpose robots on vast amounts of data drawn from diverse sources. The method outperformed traditional techniques by more than 20% in both simulations and real-world experiments, showing promise for more efficient and effective robot training.

Keir Starmer pledges to support media outlets in getting paid for their work amid AI advancements. PM vows to protect press freedoms against digital technology threats.

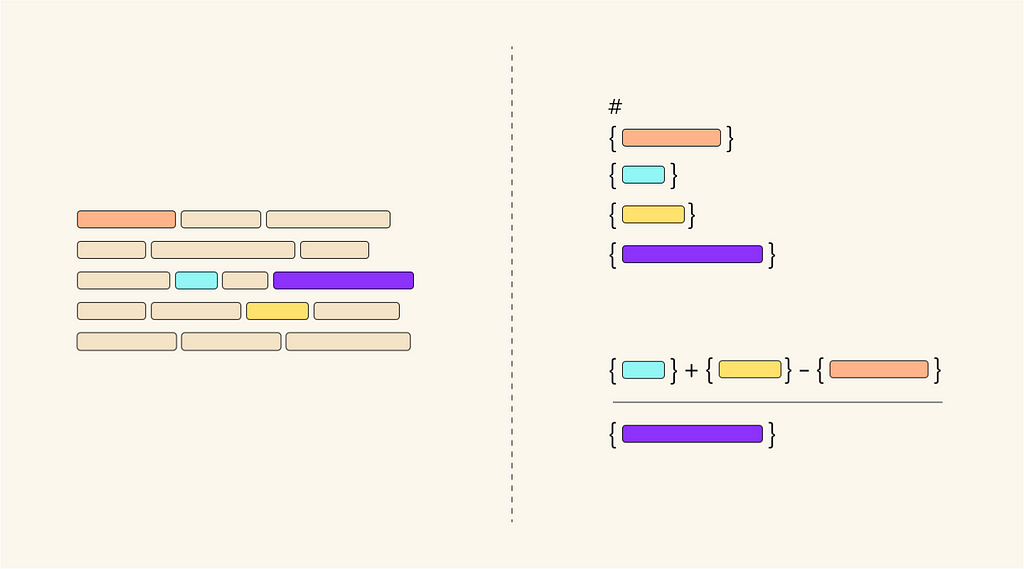

The data minimization principle in machine learning calls for collecting only essential data to mitigate privacy risks. Data protection regulations worldwide mandate purpose limitation and data relevance.
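
As a minimal illustration of purpose limitation, the Python sketch below keeps only the fields a declared processing purpose needs and refuses purposes that were never declared. The `PURPOSE_FIELDS` registry, the `minimize` helper, and all field names are hypothetical; they are not taken from any regulation or library.

```python
# Hypothetical registry mapping each declared processing purpose
# to the only fields it is allowed to use.
PURPOSE_FIELDS = {
    "credit_scoring": {"income", "existing_debt", "payment_history"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields declared necessary for `purpose`.

    Raises ValueError for an undeclared purpose (purpose limitation).
    """
    if purpose not in PURPOSE_FIELDS:
        raise ValueError(f"undeclared processing purpose: {purpose!r}")
    allowed = PURPOSE_FIELDS[purpose]
    # Drop every field not needed for the declared purpose (data minimization).
    return {key: value for key, value in record.items() if key in allowed}


applicant = {
    "name": "A. Example",
    "email": "a@example.com",
    "income": 52000,
    "existing_debt": 8000,
    "payment_history": "good",
}
print(minimize(applicant, "credit_scoring"))
# {'income': 52000, 'existing_debt': 8000, 'payment_history': 'good'}
```

In practice such a filter would sit at collection time, so the dropped fields are never stored in the first place.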

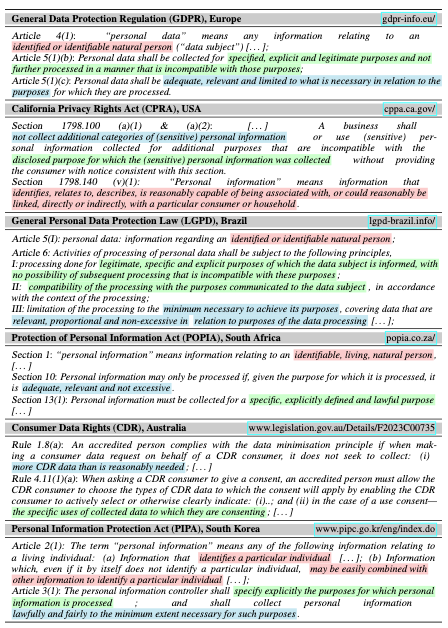

Hugh Nelson, 27, from Bolton, sentenced to 18 years for using AI to create child abuse images from real children's photos. First prosecution of its kind in the UK, following investigation by Greater Manchester police.

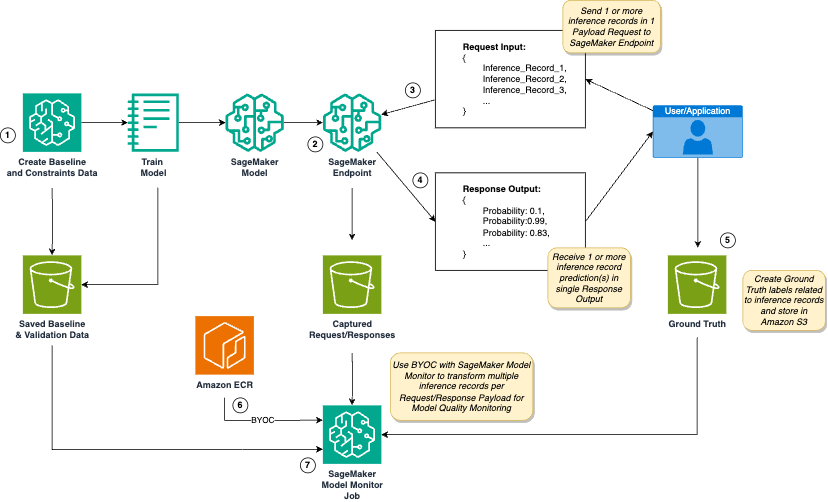

Customized model monitoring is crucial for real-time AI/ML scenarios. Amazon SageMaker Model Monitor offers advanced capabilities for monitoring model quality and handling multi-payload requests, speeding up the development of custom monitoring solutions.

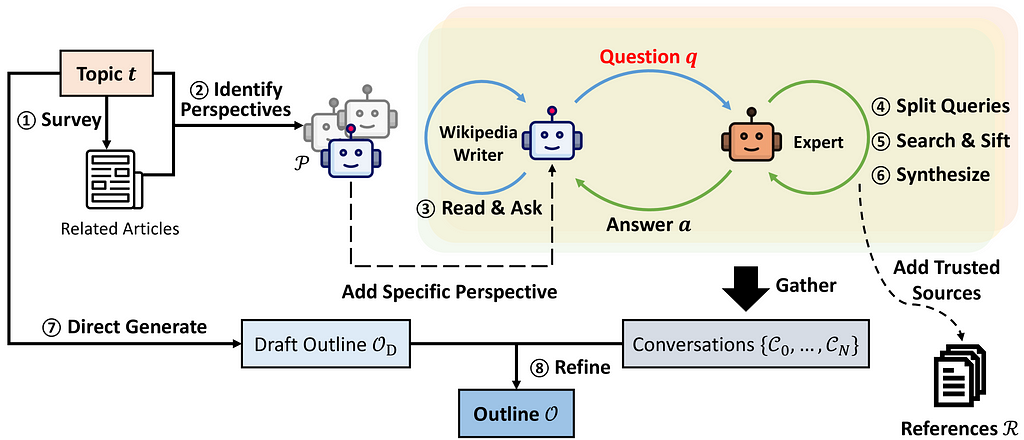

Stanford's STORM AI system uses LLM agents for complex research tasks, outperforming traditional methods. Survey shows 70% of Wikipedia editors find STORM useful for pre-writing research.

The paper "A GNN Approach to Voice and Staff Prediction for Score Engraving" tackles the challenge of separating musical notes into voices and staves, a step crucial for producing readable scores. By improving this separation, the system aims to make transcribed music easier to read, particularly for complex piano pieces.

Ministers face backlash over an AI plan that would let tech companies scrape content from publishers and artists. The BBC is among the opponents of allowing tech companies to train on such content by default.

Sir Michael Parkinson's son reveals plans for a digital replica of his late father to interview new stars, continuing one of the most celebrated legacies in chatshow history. The replica aims to recapture the charm and impact of Parkinson's classic interviews.

Child actress Kaylin Hayman bravely confronted a man who used AI to create child sex abuse material from her Instagram photos. The perpetrator targeted her and about 40 other child actors, placing her face on pornographic images.

Data preprocessing techniques such as missing-value imputation and oversampling can improve the accuracy of classification models. Oversampling, undersampling, and hybrid sampling methods balance class distributions, leading to more accurate predictions on imbalanced machine learning problems.
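
A minimal, dependency-free Python sketch of two of the techniques named above: mean imputation of missing values and random oversampling of minority classes. The helper names `impute_mean` and `random_oversample` and the toy records are illustrative assumptions; libraries such as imbalanced-learn provide production implementations.

```python
import random
from statistics import mean


def impute_mean(rows, col):
    """Replace None in column `col` with the mean of the observed values."""
    observed = [r[col] for r in rows if r[col] is not None]
    fill = mean(observed)
    return [{**r, col: fill if r[col] is None else r[col]} for r in rows]


def random_oversample(rows, label_key, seed=0):
    """Duplicate randomly chosen rows of smaller classes until every
    class has as many rows as the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for r in rows:
        by_class.setdefault(r[label_key], []).append(r)
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        # Sample with replacement from the existing rows of this class.
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced


data = [
    {"age": 25, "label": 0},
    {"age": None, "label": 0},
    {"age": 35, "label": 0},
    {"age": 45, "label": 1},
]
data = impute_mean(data, "age")          # missing age becomes 35
balanced = random_oversample(data, "label")

counts = {}
for r in balanced:
    counts[r["label"]] = counts.get(r["label"], 0) + 1
print(counts)  # {0: 3, 1: 3}
```

Oversampling should be applied only to the training split, after the train/test split, so that duplicated rows do not leak into evaluation.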

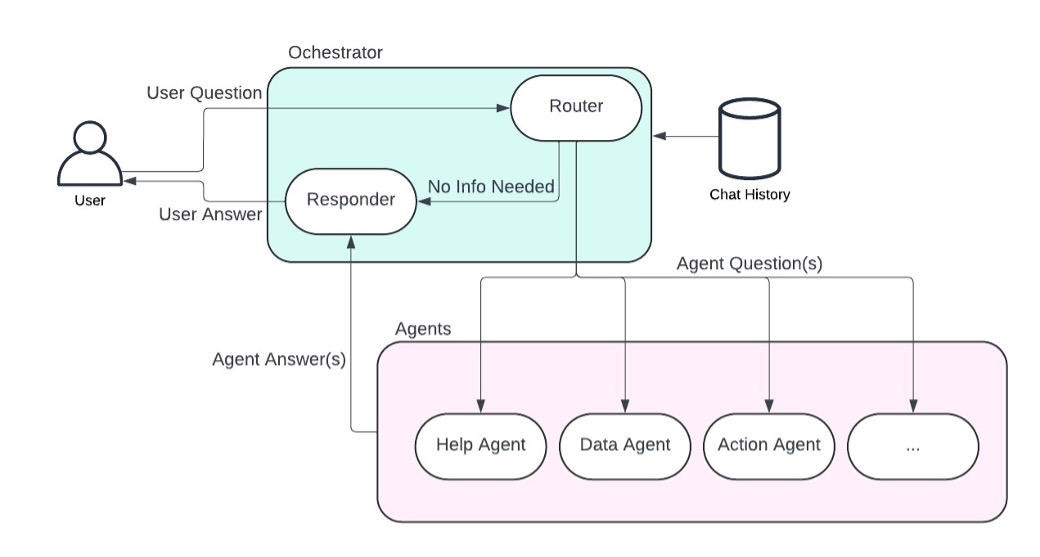

Planview developed an AI assistant called Planview Copilot using Amazon Bedrock to change how users interact with project management tools. Its multi-agent system routes tasks efficiently and personalizes the user experience, enhancing productivity and decision-making.

Tom Massey collaborates with Microsoft to unveil the Avanade "intelligent" garden at Chelsea flower show 2025. Visitors can interact with the garden, equipped with AI and sensors, to receive plant data and gardening advice.