Health secretary and Keir Starmer emphasize AI diagnosis for early cancer detection at NHS event, highlighting urgent need for technological advancements in healthcare. Some patients faced 'death sentence' due to NHS delays, sparking calls for improved use of AI and technology in healthcare.

MIT researchers developed SymGen to help human fact-checkers quickly verify responses from large language models by providing citations that link directly to the source document, cutting verification time by about 20%. SymGen lets users focus selectively on specific parts of the text to ensure accuracy, potentially increasing confidence in model responses in high-stakes settings like h...

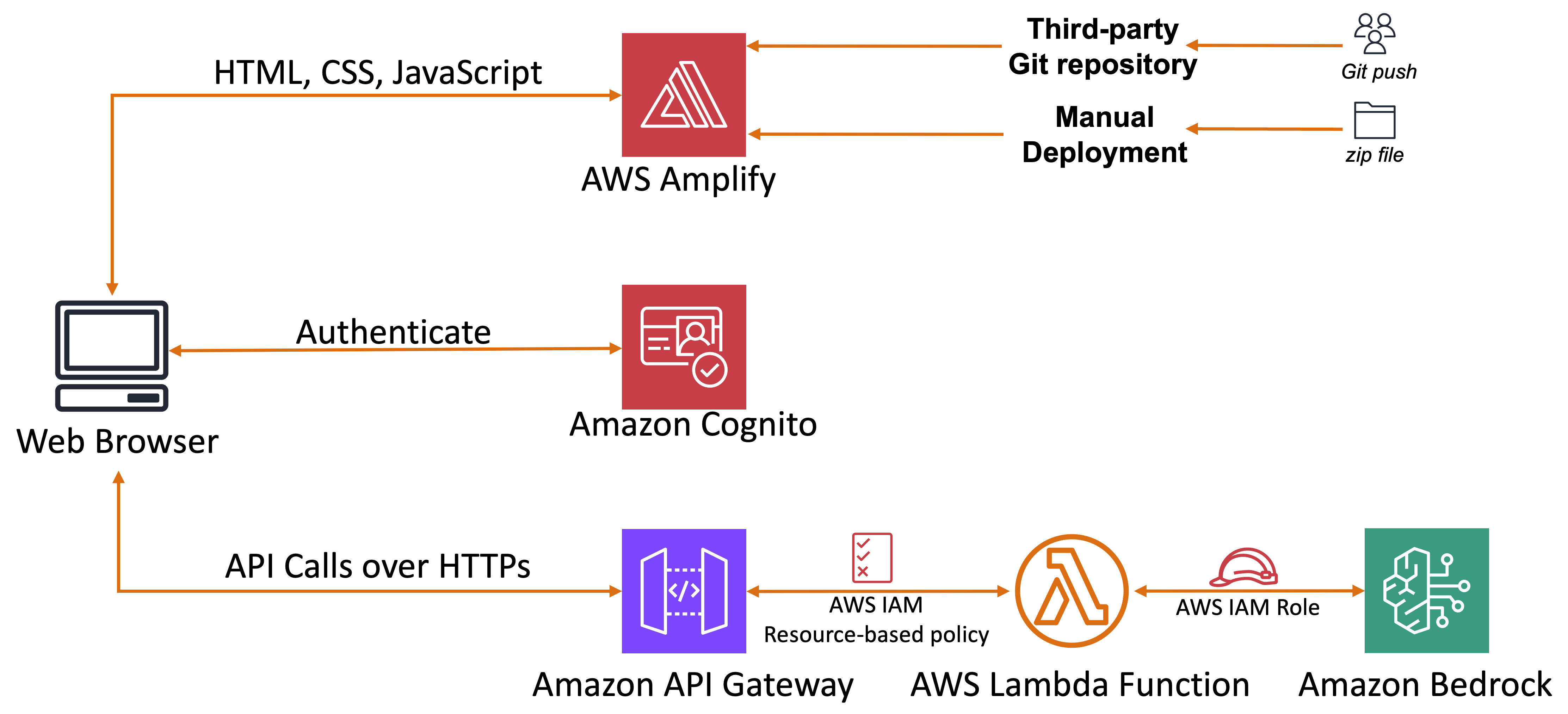

Generative AI is transforming image editing across industries. Amazon Bedrock offers a serverless way to edit images using AI foundation models (FMs).

£1 AI X-ray add-on aims to prevent missed fractures in England, reducing errors in initial assessments. Up to 10% of fractures are missed, prompting the need for this cost-effective solution.

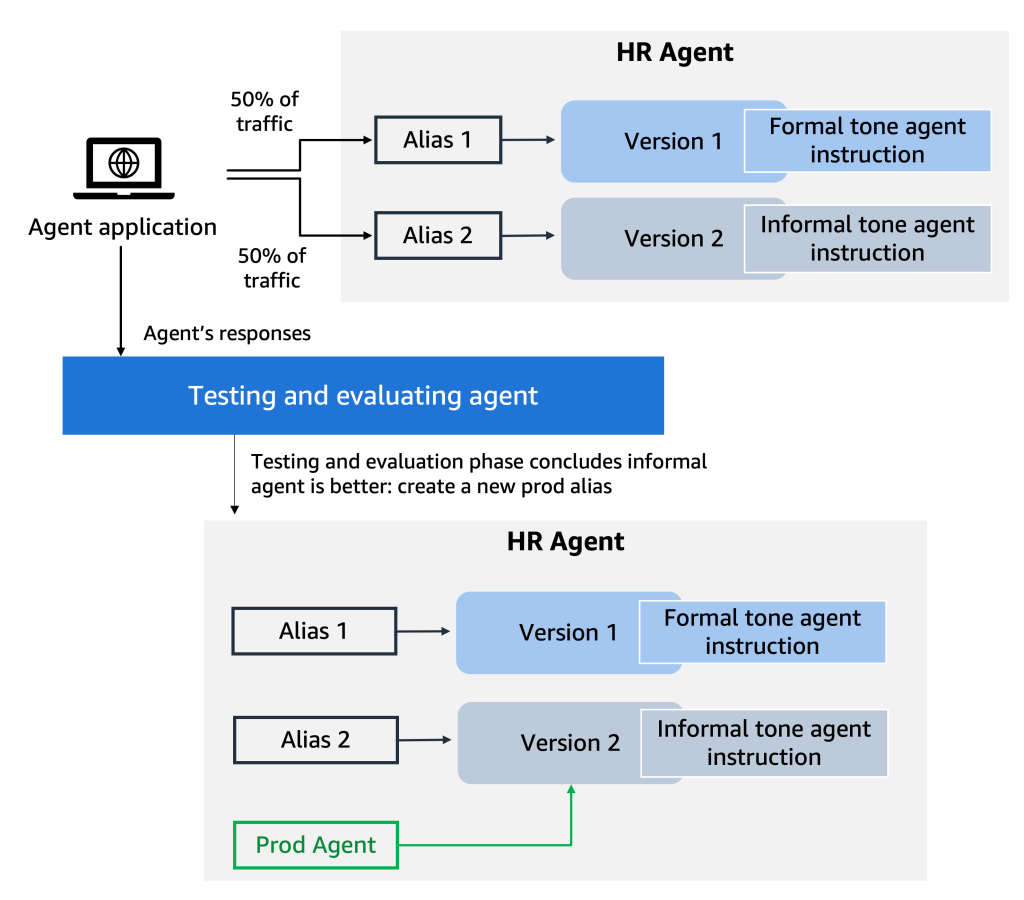

Amazon Bedrock Agents streamline generative AI app development by orchestrating multistep tasks with foundation models and Retrieval Augmented Generation. Architectural considerations and IaC frameworks enhance robust, scalable, and secure agent development for conversational AI applications.

Microsoft introduces autonomous AI agents for customer use, allowing creation of virtual employees for various tasks. Company offers 10 off-the-shelf bots for roles like supply chain management and customer service.

Avoid reading anything by ChatGPT - AI chatbots often produce convoluted, confusing text. Keep it simple, don't waste time deciphering unnecessary 'slop'.

ByteDance fires intern for sabotaging AI project training in August, citing 'malicious interference'.

Data drift and concept drift are crucial factors impacting ML model performance over time. Understanding and addressing these issues is key to maintaining model accuracy and effectiveness. Retraining strategies play a vital role in mitigating performance degradation caused by changing data patterns and relationships.
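As a rough illustration of how data drift might be monitored, the sketch below compares a feature's training-time distribution with recent production values using a hand-rolled two-sample Kolmogorov-Smirnov statistic. The function names, the synthetic Gaussian data, and the 0.2 threshold are all assumptions made for the example, not details from the article. It covers only data drift (a change in inputs); detecting concept drift would also require labels or model error metrics.

```python
import random
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the two empirical CDFs (two-sample KS statistic)."""
    ref = sorted(reference)
    cur = sorted(current)
    max_gap = 0.0
    # The largest gap between two step functions occurs at an observed value.
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def needs_retraining(reference, current, threshold=0.2):
    """Flag drift when the KS statistic exceeds a threshold (0.2 is an assumed value)."""
    return ks_statistic(reference, current) > threshold


# Synthetic data for illustration only: one stable feature and one shifted feature.
rng = random.Random(0)
training_feature = [rng.gauss(0.0, 1.0) for _ in range(1000)]
same_distribution = [rng.gauss(0.0, 1.0) for _ in range(1000)]
shifted_feature = [rng.gauss(1.0, 1.0) for _ in range(1000)]

print("stable feature drifted: ", needs_retraining(training_feature, same_distribution))
print("shifted feature drifted:", needs_retraining(training_feature, shifted_feature))
```

In practice the threshold would be tuned per feature, and a flagged feature would usually trigger investigation before any retraining job is launched.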

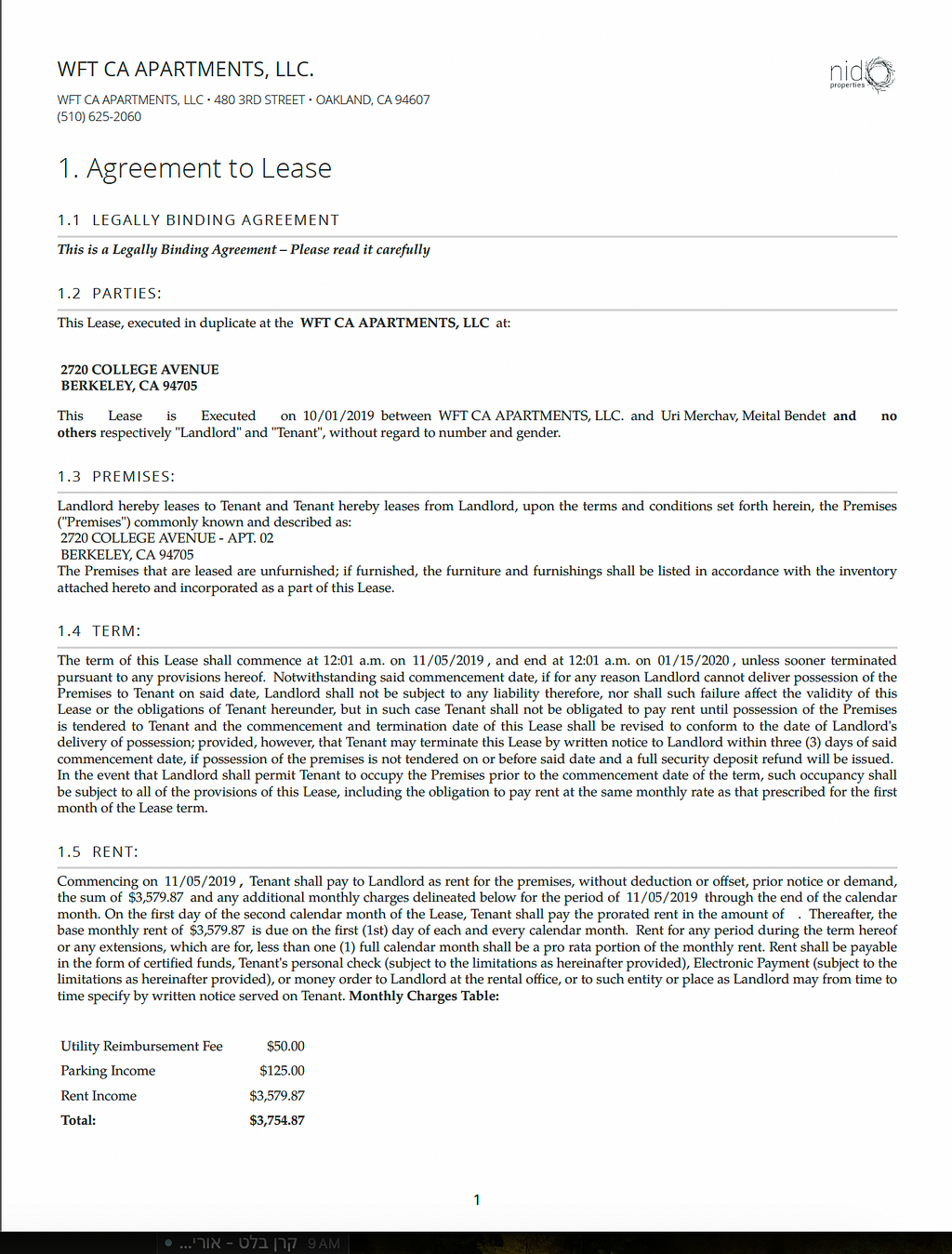

Explanations in AI outputs can seem unnecessary, but they are crucial for accuracy and actionable insights. DocuPanda offers a solution by extracting key information from complex documents, enhancing efficiency and clarity.

Mark Cuban, billionaire and campaign surrogate for Kamala Harris, addresses AI, taxes, and memes. Cuban draws on his diverse experience and confronts Trump-supporting billionaires like Elon Musk.

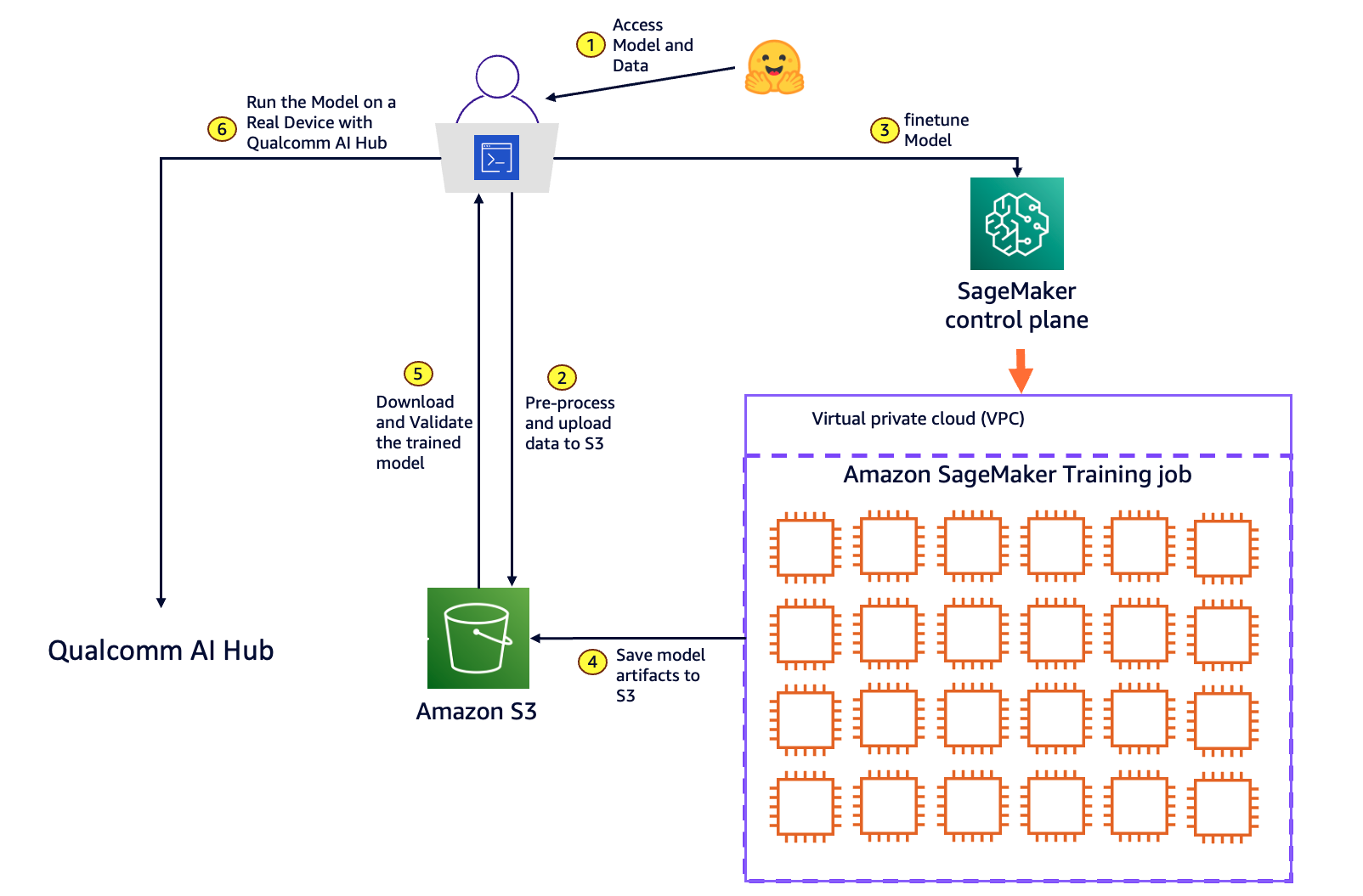

A solution from Qualcomm and Amazon SageMaker enables end-to-end model customization and deployment at the edge. Developers can bring their own model (BYOM) and bring their own data (BYOD) to build optimized machine learning solutions for on-device deployment.

Gao et al. (2024) recast RAG systems as LEGO-like reconfigurable frameworks, which simplifies understanding and designing diverse RAG solutions from modular components. The structured approach breaks RAG systems down into six key components, providing flexibility and clarity in building and navigating the RAG process.

OpenAI releases early Windows version of ChatGPT app for subscribers, positioning it as a beta test. Users can access various models, generate images with DALL-E 3, and analyze files.

Firms seek right to take vital data for AI systems, raising concerns about privacy. Author uses pickpocket analogy to highlight potential dangers of opt-out data regime.