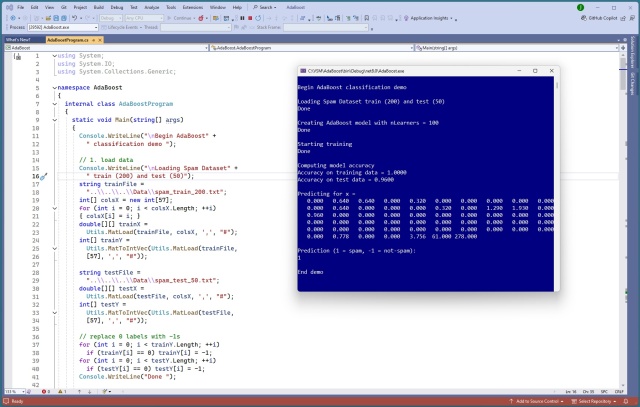

Implementing AdaBoost from scratch in Python and C# for binary classification using decision stumps. AdaBoost excels with unnormalized data and requires tuning only the number of weak learners.
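As a rough illustration of the idea in this item, here is a minimal pure-Python sketch of AdaBoost over decision stumps for labels in {-1, +1}. It is not the article's implementation; the function names (`fit_stump`, `adaboost_fit`, `adaboost_predict`), the exhaustive threshold search, and the error clamp are assumptions made for this sketch.

```python
import math

def fit_stump(X, y, w):
    """Return (weighted_error, feature, threshold, polarity) of the best stump."""
    best = None
    for j in range(len(X[0])):
        for threshold in sorted({row[j] for row in X}):
            for polarity in (1, -1):
                # Predict `polarity` on the left of the threshold, the opposite on the right.
                preds = [polarity if row[j] <= threshold else -polarity for row in X]
                err = sum(wi for wi, p, yi in zip(w, preds, y) if p != yi)
                if best is None or err < best[0]:
                    best = (err, j, threshold, polarity)
    return best

def stump_predict(stump, row):
    _, j, threshold, polarity = stump
    return polarity if row[j] <= threshold else -polarity

def adaboost_fit(X, y, n_learners=5):
    """Train `n_learners` stumps, reweighting samples after each round."""
    n = len(X)
    w = [1.0 / n] * n
    ensemble = []
    for _ in range(n_learners):
        stump = fit_stump(X, y, w)
        # Clamp the error so the log below stays finite (an assumption of this sketch).
        err = min(max(stump[0], 1e-10), 1 - 1e-10)
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        # Increase the weight of misclassified samples, decrease the rest, then normalize.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, row))
             for wi, row, yi in zip(w, X, y)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def adaboost_predict(ensemble, row):
    """Weighted vote of all stumps; ties go to +1."""
    score = sum(alpha * stump_predict(stump, row) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy data with features on very different scales (no normalization applied).
X = [[1, 1000], [2, 3000], [3, 2000], [8, 1500], [9, 2500], [10, 500]]
y = [-1, -1, -1, 1, 1, 1]
model = adaboost_fit(X, y, n_learners=3)
```

Because each stump only compares one feature against a threshold, feature scale does not matter here, and `n_learners` is the only setting to tune in this sketch.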

Researchers claim 80% accuracy in screening children under 2 for autism using machine learning, offering potential benefits.

NVIDIA Research introduces StormCast AI model for accurate weather prediction at mesoscale, aiding disaster planning and mitigation. CorrDiff by NVIDIA Earth-2 boosts energy efficiency and lowers costs for fine-scale typhoon predictions, showing potential global impact.

Victor Miller and his ChatGPT bot, Vic, aim to lead Cheyenne in a hybrid format: if elected mayor, Miller proposes letting the AI run the local government. Voters in Wyoming's capital city must decide on the idea of AI assisting in city governance.

A Nearest Neighbor Classifier uses past experiences to make predictions, mimicking real-world decision-making. The K Nearest Neighbor model predicts the majority class among the closest data points, making it intuitive and easy to understand.
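The majority-vote rule described in this item can be sketched in a few lines of Python. This is an illustrative example, not code from the linked article; the function name `knn_predict`, the Euclidean distance metric, and the toy data are assumptions.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Return the majority label among the k training points closest to `query`."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = sorted(
        ((math.dist(point, query), label)
         for point, label in zip(train_points, train_labels)),
        key=lambda pair: pair[0],
    )
    nearest_labels = [label for _, label in distances[:k]]
    # The most common label among the k nearest neighbors wins the vote.
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical toy data: two well-separated clusters.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
labels = ["a", "a", "a", "b", "b", "b"]
near_a = knn_predict(points, labels, (2, 2), k=3)
near_b = knn_predict(points, labels, (8.5, 8.5), k=3)
```

An odd `k` avoids tied votes in the two-class case; with more classes or an even `k`, a tie-breaking rule would need to be chosen.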

Google's Lucinda Longcroft interrupted by AI chatbot during Senate hearing. Proposal for gambling levy to reduce Australian media reliance on betting ads.

AI-generated song by Butterbro, which features racial stereotypes and blurs the line between parody and discrimination, makes the Top 50 in Germany. Song "Verknallt in einen Talahon" challenges norms with modern lyrics and 60s schlager pop vibes.

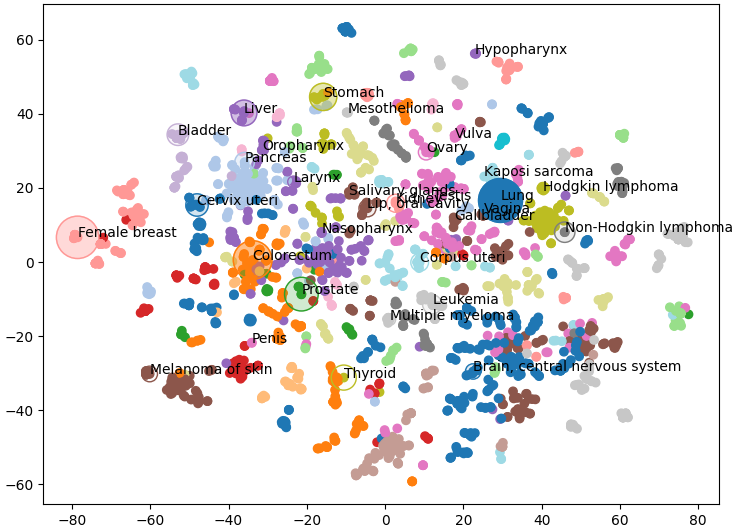

Identify cancer clusters in knowledge graphs using Neo4j in a Docker container. Visualize disease ontology relationships for insights on cancer types.

Duke of Sussex addresses online disinformation at Colombia summit, emphasizing impact on real-world actions. Warns against false information spread through AI and social media.

OpenAI bans Iranian group's accounts for using ChatGPT to influence US election and other issues. Operation Storm-2035 generated content on candidates, Gaza conflict, and Olympics.

New solution optimizes call scripts for sales campaigns, dynamically adjusting based on real-time data for increased effectiveness. Algorithm presented at KDD 2024 conference outperforms existing solutions, maximizing customer conversion rates.

Advanced AI raises the risk of digital imposters online, so MIT, OpenAI, and Microsoft propose personhood credentials to securely verify real humans. Personhood credentials prove that the holder is human without revealing sensitive information, combining cryptographic security with unique human capabilities.

AI-generated videos struggle with realism, leading to humorous parodies on TikTok and Bilibili. Users on X join in, creating entertaining parodies of image synthesis videos.

Azure Landing Zone ensures secure data processing in the cloud with essential elements like networking, security, and compliance. Tailor Azure subscriptions for application and platform resources to streamline infrastructure configuration and enable successful cloud operations.

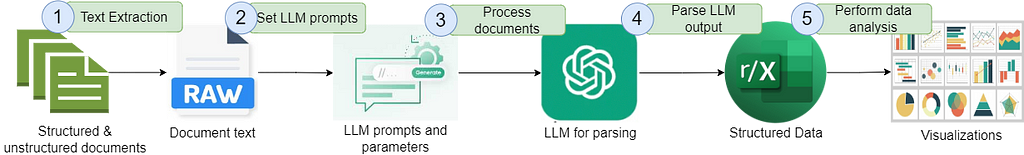

LLM-Powered Parsing and Analysis of Semi-Structured & Unstructured Documents: Learn how Large Language Models can automate data extraction from diverse documents for in-depth analysis, providing valuable insights into AI trends and challenges in various industries. Document parsing using LLMs offers flexibility and accuracy in extracting key information from real-world documents, enabling organ...