RAG combines retrieval and foundation models for powerful question answering systems. Automate RAG deployment with Amazon Bedrock and AWS CloudFormation for seamless setup.

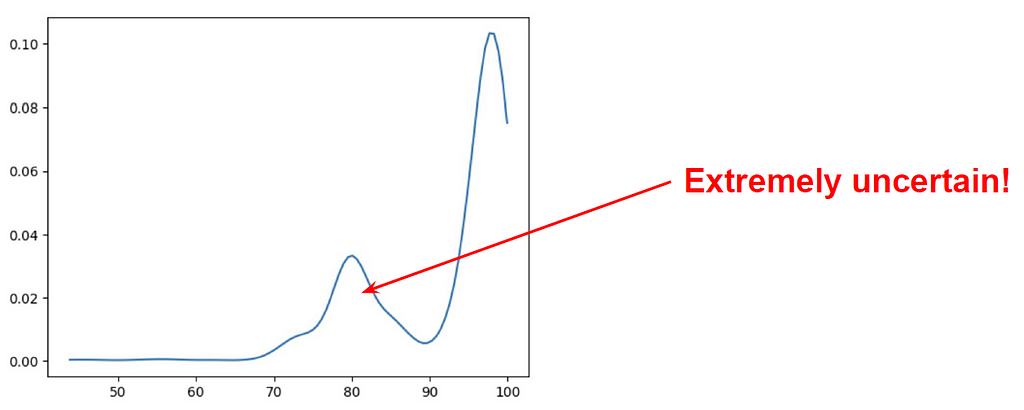

LLM prompts expose brittleness in AI responses. An experiment with OpenAI's GPT-4o shows 55% accuracy with the original prompt.

Learn how to build a 124M-parameter GPT-2 model with JAX for faster training, compare it with PyTorch, and explore key JAX features such as JIT compilation and Autograd. Reproduce NanoGPT with JAX and compare multi-GPU training throughput (tokens/sec) between PyTorch and JAX.

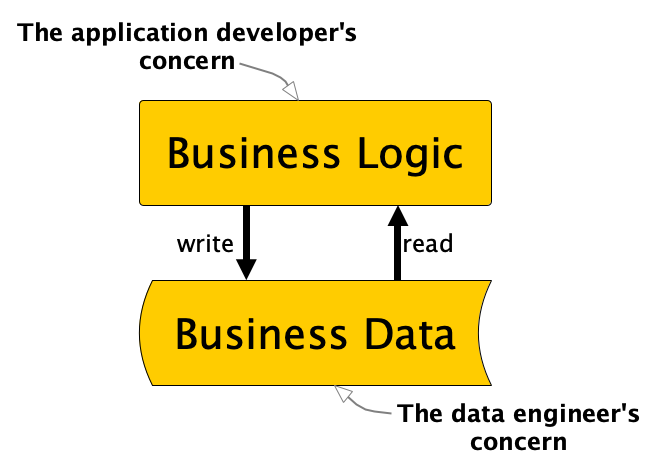

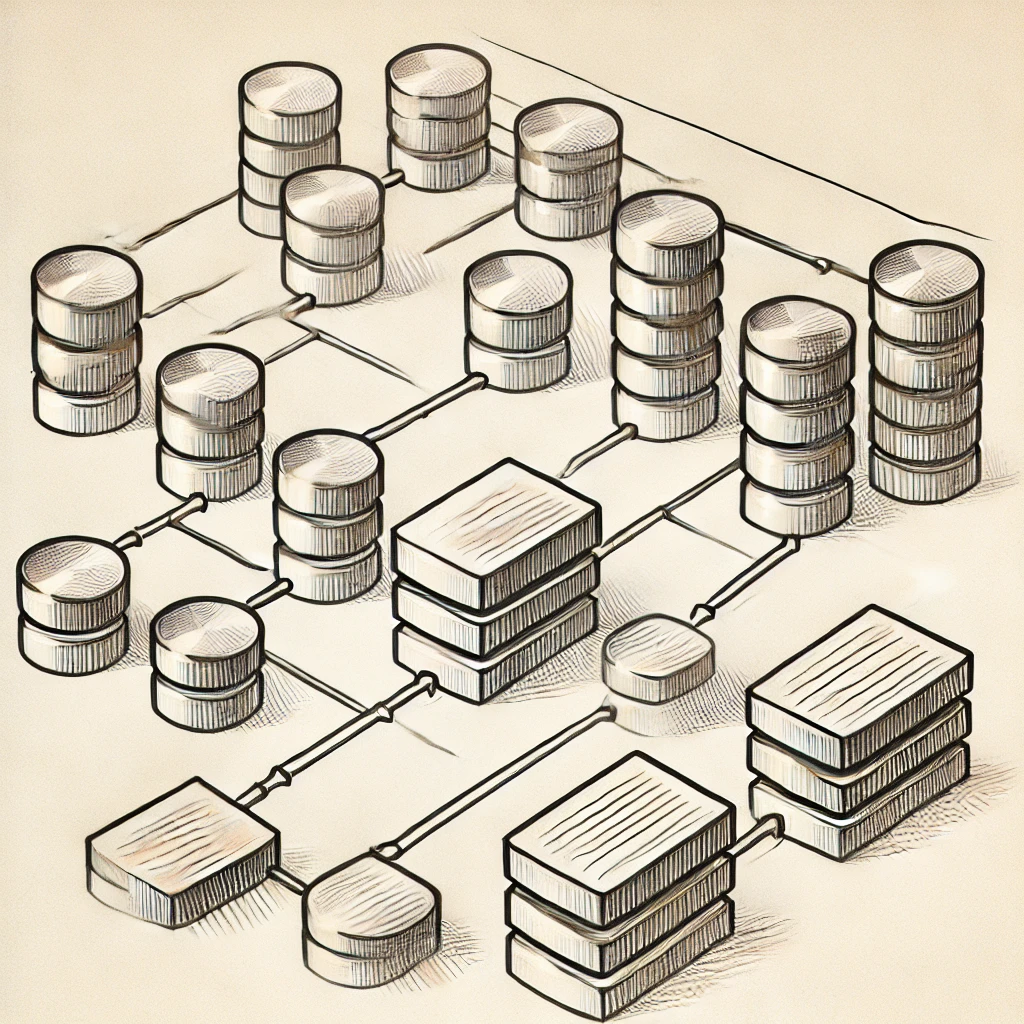

Current data engineering landscape strays from simplicity, overlooking Unix principles. Unix-like systems offer elegant data abstractions as files, yet databases complicate access with SQL interfaces.

LLMs can predict metadata for humanitarian datasets without fine-tuning, offering efficient and accurate results. GPT-4o shows promise in predicting HXL tags and attributes, simplifying data processing for humanitarian efforts.

AI's impact on society prompts the question: How do we ensure AI benefits humanity? Exploring the connection between human flourishing and AI development reveals the need for societal infrastructure to promote wellbeing.

Daniel Bedingfield argues that AI is music's future, warning 'neo-luddites' risk being left behind. Growing use of AI in creative industries sparks debate over job security and artistic integrity.

Sam Altman's ChatGPT captivates global attention, sparking AI mania. But a scientist warns of the dangers posed by Altman and AI.

Introducing Friend: a $99 wearable AI companion that records interactions and texts back, offering companionship and support. This 'Tamagotchi with a soul' is set to ship in early 2025, providing attentive listening without any requests for help moving or attending one-man plays.

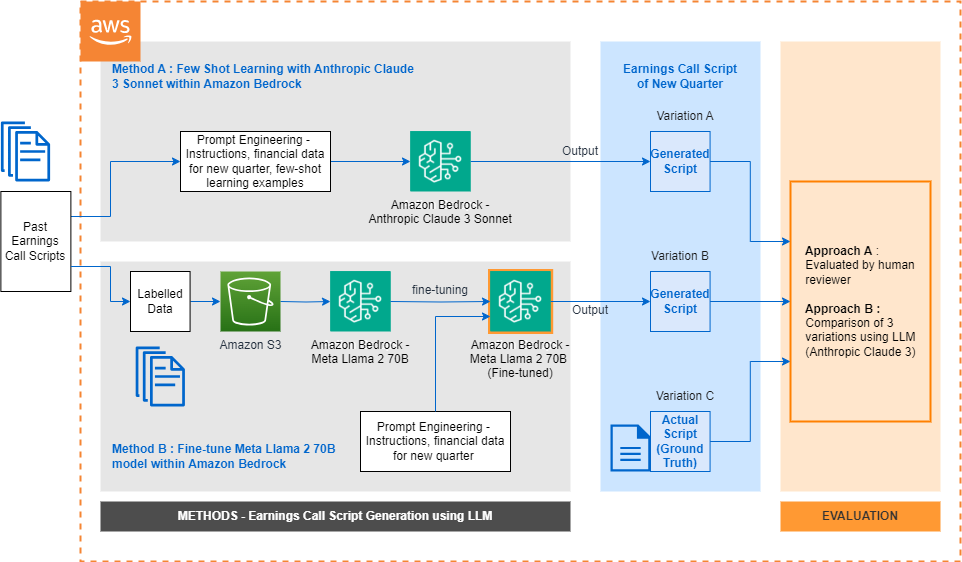

Earnings calls are vital for investors; generative AI can streamline creating scripts for new quarters. Amazon Bedrock simplifies building and scaling AI applications with customizable models.

Black Forest Labs debuts its FLUX.1 text-to-image AI models after engineers left Stability AI over performance issues. The company offers high-end, mid-range, and faster versions, claiming superior image quality and text prompt accuracy.

Labour government delays £1.3bn Tory-funded supercomputer and AI projects. Future of UK's first exascale supercomputer uncertain.

Concerns about AI include its surveillance potential, its hijacking of culture, and its relieving people of the need to think. Luddites are rising against AI in novel ways.

GraphStorm is a low-code GML framework for building ML solutions on enterprise-scale graphs in days. Version 0.3 adds multi-task learning support for node classification and link prediction tasks.

Israeli NGO Madrasa teaches Arabic to Hebrew speakers with unique multilingual data. Volunteers analyze diverse student responses for insights.