Backlash against AI actor Tilly Norwood swift and fierce in Hollywood after brief appearance. Critics and actors unite in condemning depressing, dystopian concept with dodgy tech and shonky features.

AI like ChatGPT can help spark a child's imagination through personalized stories. Scientists are concerned about AI's impact on creativity.

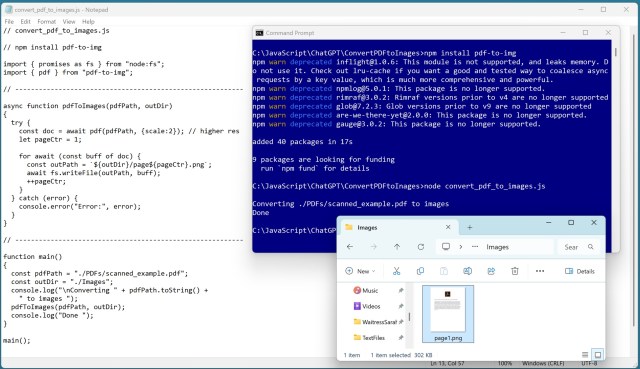

An attempt at PDF-to-image conversion in JavaScript ran into deprecated sub-libraries in the pdf-to-img package, making it unreliable for production. Consider well-supported Python libraries such as PyMuPDF for efficient PDF extraction and text conversion.
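
As a rough illustration of the PyMuPDF route, the sketch below renders each page of a PDF to a PNG and collects the page text. It is a minimal example under stated assumptions, not the original author's code: the function names, the output naming scheme, and the 150 dpi default are hypothetical, and PyMuPDF must be installed separately (`pip install pymupdf`).

```python
from pathlib import Path

POINTS_PER_INCH = 72  # PDF page coordinates are defined at 72 units per inch


def zoom_for_dpi(dpi: int) -> float:
    """Scale factor that renders a PDF page at the requested resolution."""
    if dpi <= 0:
        raise ValueError("dpi must be positive")
    return dpi / POINTS_PER_INCH


def page_image_path(pdf_path: str, page_index: int, out_dir: str) -> Path:
    """Hypothetical naming scheme: <pdf stem>-page-0001.png (1-based)."""
    stem = Path(pdf_path).stem
    return Path(out_dir) / f"{stem}-page-{page_index + 1:04d}.png"


def convert_pdf(pdf_path: str, out_dir: str, dpi: int = 150) -> list[str]:
    """Render every page to a PNG file and return the text of each page."""
    import fitz  # PyMuPDF; imported lazily so the helpers above work without it

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    zoom = zoom_for_dpi(dpi)
    texts = []
    with fitz.open(pdf_path) as doc:
        for index, page in enumerate(doc):
            # Scale the page uniformly in both directions before rasterizing
            pix = page.get_pixmap(matrix=fitz.Matrix(zoom, zoom))
            pix.save(str(page_image_path(pdf_path, index, out_dir)))
            texts.append(page.get_text())
    return texts
```

Calling `convert_pdf("report.pdf", "pages")` would, under these assumptions, write one PNG per page into `pages/` and return a list of page texts.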

The TX-GAIN supercomputer at the Lincoln Laboratory Supercomputing Center (LLSC) is the most powerful AI system at a US university, enabling breakthroughs in generative AI and data analysis. Equipped with over 600 NVIDIA GPUs, TX-GAIN supports research in domains ranging from radar signatures to the design of new medicines.

Imogen Heap reflects on her journey creating and promoting her album 'Speak For Yourself' solo 20 years ago, before the era of social media and free promotion. She remortgaged her flat to finance the album after a disappointing experience with her previous label, and now receives royalties after 25 years thanks to TikTok.

Jürgen Matthäus solves mystery of iconic Holocaust image, 'The Last Jew in Vinnitsa', with chilling details revealed. German soldier aiming pistol at kneeling man in front of mass grave in Ukraine finally identified.

Ana Bakshi has been named the new executive director of the Martin Trust Center for MIT Entrepreneurship, bringing a decorated background in entrepreneurship education and experience in leading high-growth companies, including an AI startup. With a focus on raising the bar for innovation-driven entrepreneurship education, Bakshi's expertise will help translate academic research into real-world ...

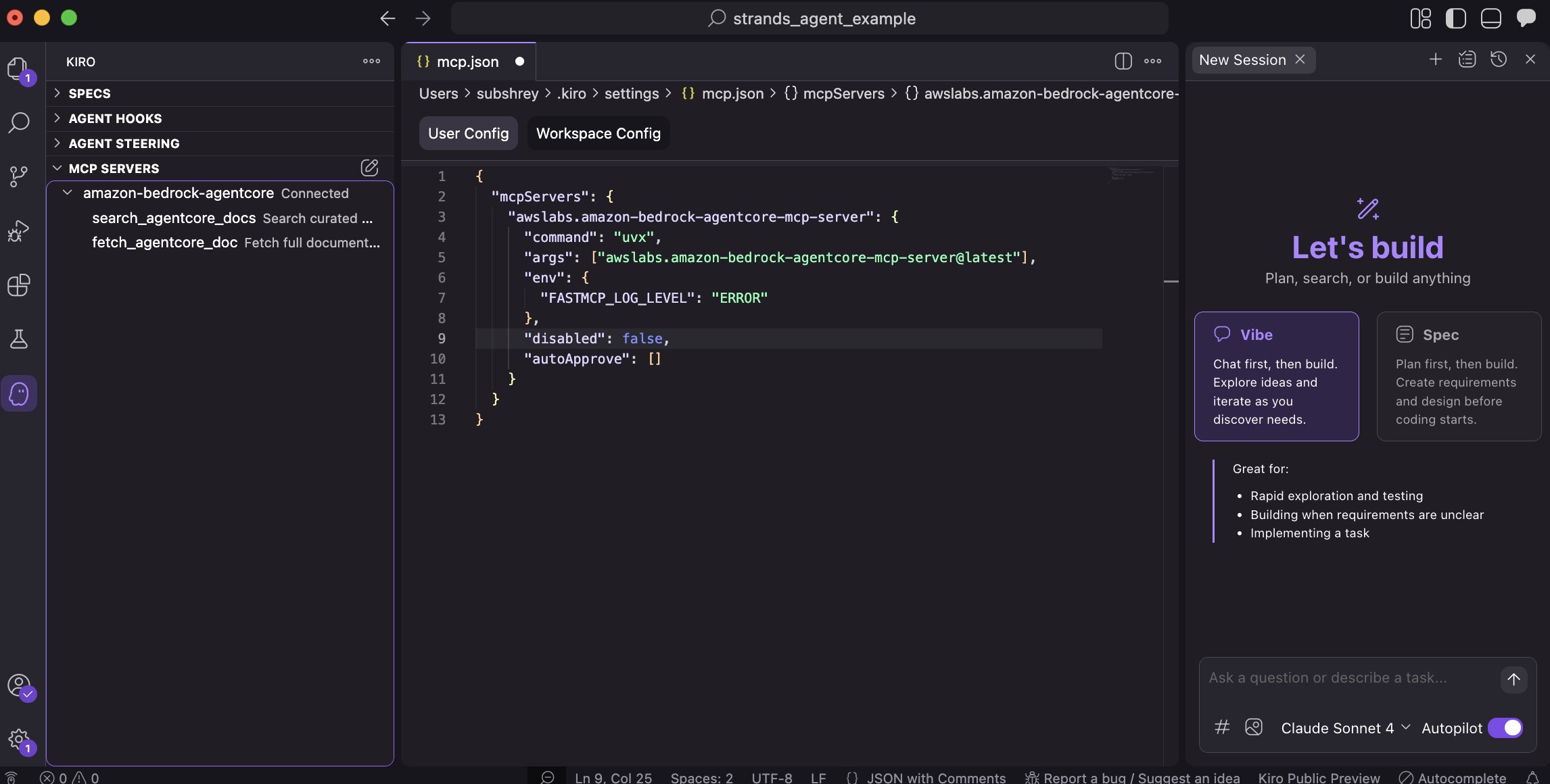

Introducing the Amazon Bedrock AgentCore MCP Server for rapid AI agent development. Automate agent lifecycle, streamline tool integration, and simplify testing with conversational commands.

Academic publishing under scrutiny for quality issues and overwhelming volume of papers. University of Exeter proposes solutions to restore trust in research system.

Yale study finds US job market slow to change post-ChatGPT release. No major AI disruption reported since 2022.

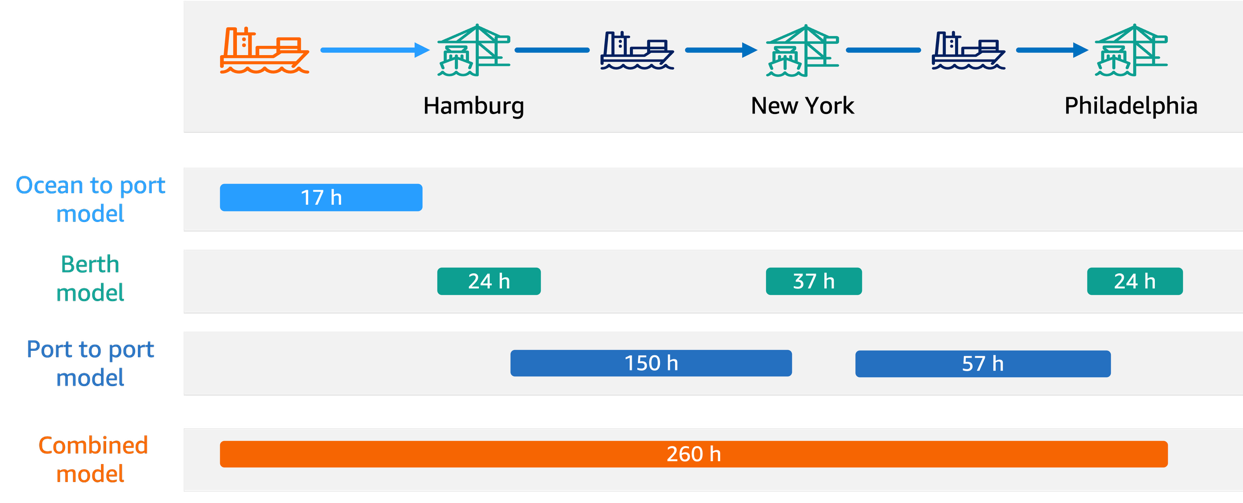

Hapag-Lloyd uses ML to predict vessel schedules accurately, enhancing reliability and customer satisfaction. Challenges include dynamic shipping conditions and data integration at scale, requiring robust MLOps infrastructure.

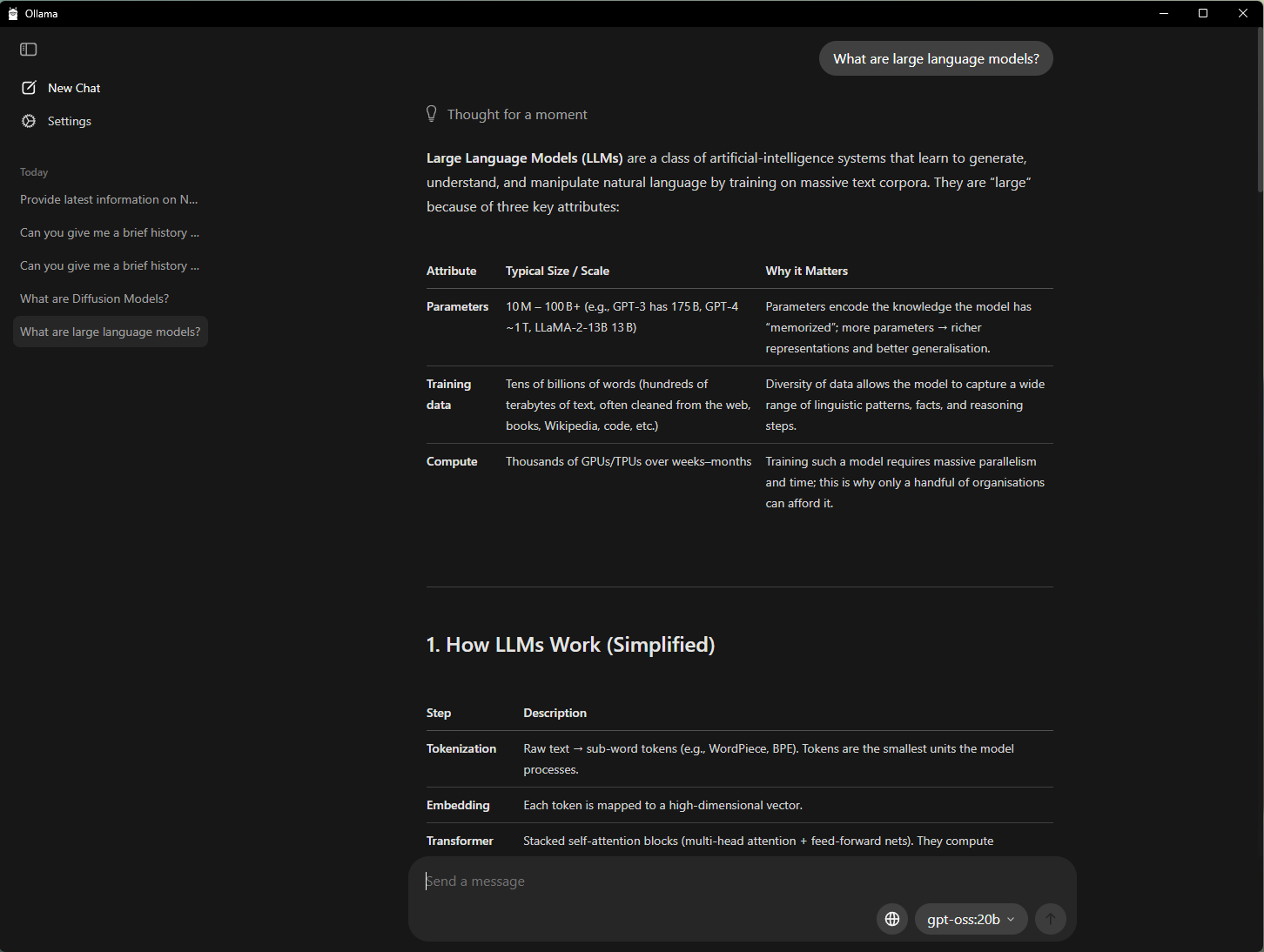

Newly released open-weight models like OpenAI's gpt-oss and Alibaba's Qwen 3 allow high-quality local AI experiences on NVIDIA RTX PCs. Developers can now easily optimize LLM applications with tools like Ollama and LM Studio for improved performance and user experience.

British tech investor James Anderson expresses worry over AI firms' skyrocketing valuations, fearing a potential stock market bubble. Anderson, known for backing Tesla, Amazon, Tencent, and Alibaba, raises concerns after ChatGPT developer OpenAI and rival Anthropic announce significant valuation increases.

Anthropic, the San Francisco-based AI company, has released its Claude Sonnet 4.5 model, which shows signs of detecting attempts to catch out chatbots. That behaviour raises questions about how its predecessors were tested, along with concerns about potential manipulation.

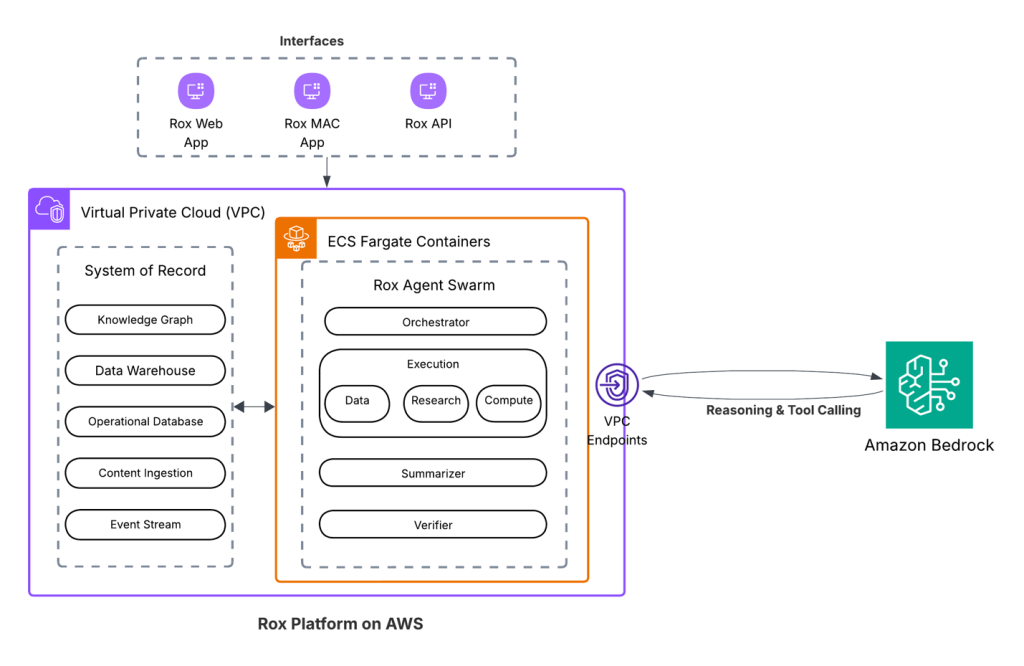

Rox introduces a revenue operating system to unify data sources for modern sales teams. The system, built on AWS, uses AI agents to streamline workflows and boost productivity.