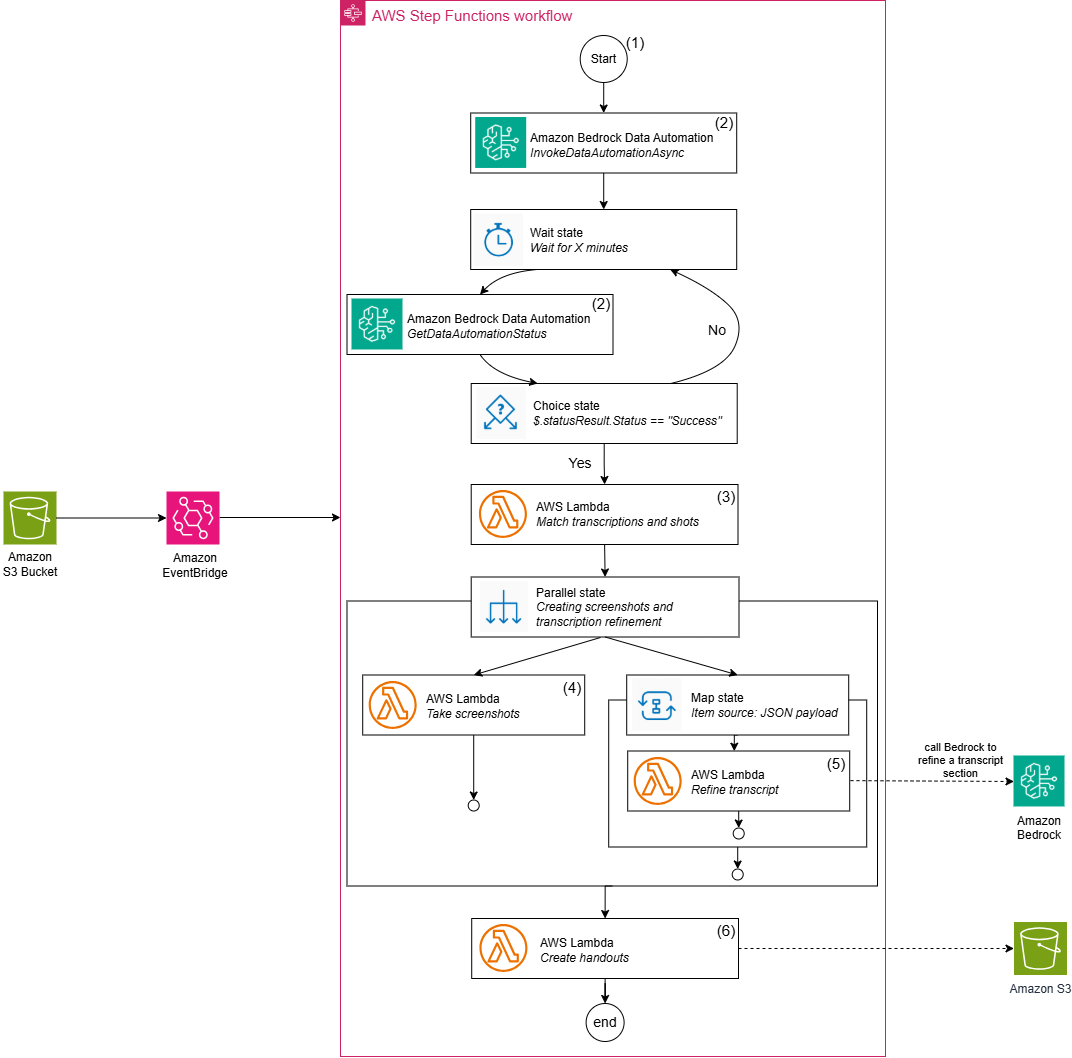

An automated solution transforms webinar recordings into handouts, using Amazon Bedrock Data Automation for video analysis. The workflow automates transcription, slide detection, and synchronization to make handout creation efficient.
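The synchronization step can be pictured with a minimal sketch. It assumes slide detection yields a sorted list of slide start times in seconds and transcription yields timestamped segments. The `Segment` type and `group_by_slide` function are hypothetical and do not reflect the Amazon Bedrock Data Automation output schema.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical transcript segment: start time in seconds plus text."""
    start: float
    text: str


def group_by_slide(slide_starts: list[float], segments: list[Segment]) -> dict[int, list[str]]:
    """Map each slide index to the transcript text spoken while it was on screen.

    slide_starts must be sorted; slide i is shown from slide_starts[i]
    until slide_starts[i + 1], and the last slide runs to the end.
    """
    if not slide_starts:
        raise ValueError("at least one slide start time is required")
    handout: dict[int, list[str]] = {i: [] for i in range(len(slide_starts))}
    for seg in segments:
        # Index of the last slide that started at or before this segment.
        idx = bisect_right(slide_starts, seg.start) - 1
        # Speech before the first detected slide is attached to slide 0.
        handout[max(idx, 0)].append(seg.text)
    return handout
```

Binary search keeps the lookup at O(log n) per segment, which matters little for one webinar but keeps the grouping logic to a single line.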

Meta's CEO, Mark Zuckerberg, aims to bring powerful AI to millions, splurging on talent and acquisitions to develop superintelligence. The company's AI spending spree is paying off, and it has plans for data centers the size of Manhattan.

Microsoft's Q2 results reveal a thriving cloud business and massive AI investments, delighting investors. The tech giant plans to spend over $100bn on datacenters and talent in the upcoming fiscal year.

YouTube will use AI to estimate US users' ages so it can serve age-appropriate content. Australia bans under-16s from YouTube as the UK enforces age checks on social networks.

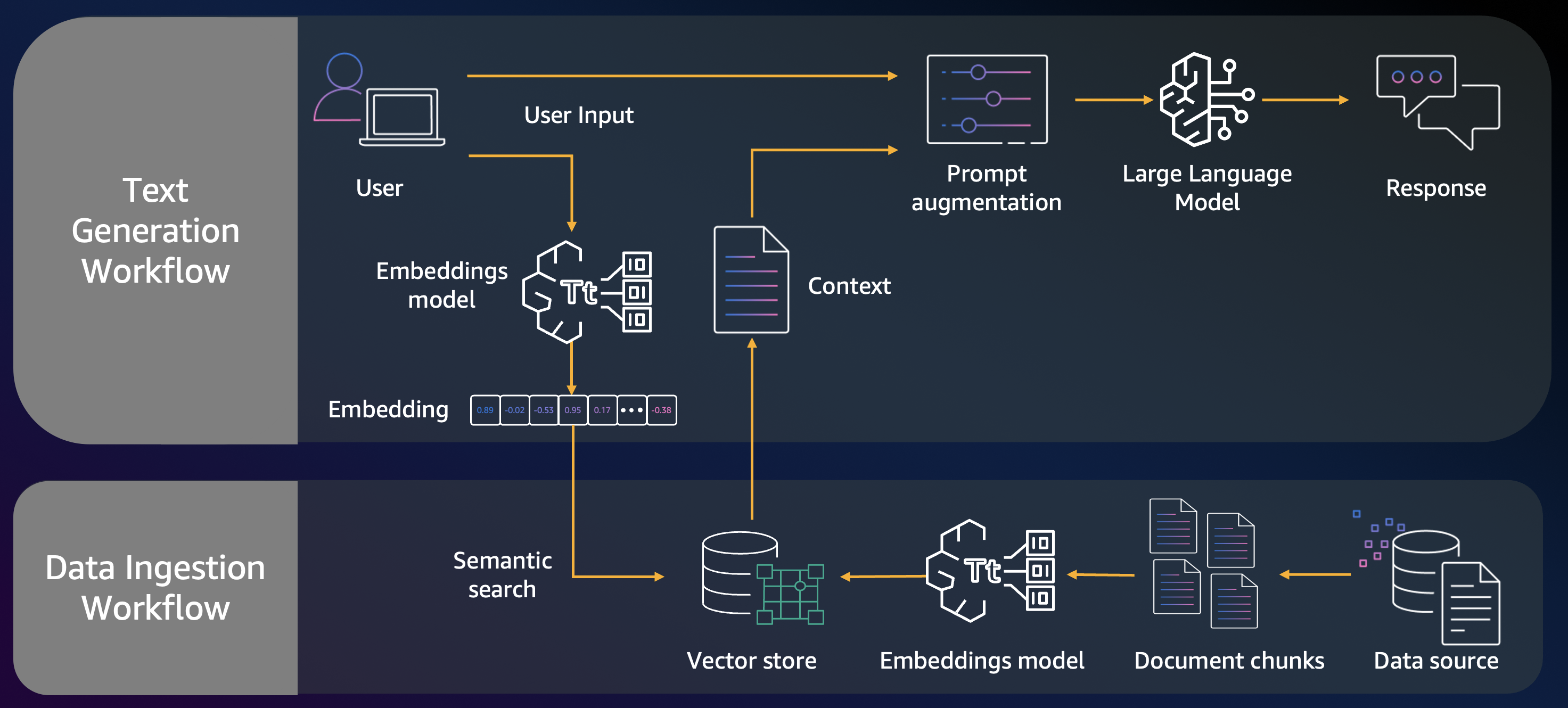

Amazon Bedrock Knowledge Bases offers a managed RAG experience for accurate response retrieval using enterprise data. Nippon Life India Asset Management Ltd. improves RAG accuracy by rewriting queries and reranking responses.
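A toy two-stage pipeline shows how query rewriting and reranking fit together. This is not Nippon Life's implementation or the Amazon Bedrock Knowledge Bases API. A hypothetical abbreviation map stands in for an LLM query rewriter, word overlap stands in for vector retrieval, and phrase (bigram) overlap stands in for a reranking model.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    doc_id: str
    text: str


# Hypothetical abbreviation map standing in for an LLM-based query rewriter.
ABBREVIATIONS = {"nav": "net asset value", "sip": "systematic investment plan"}

CORPUS = [
    Passage("a", "The value of the fund changed and the asset mix is net of fees"),
    Passage("b", "Net asset value is the per-unit price of a mutual fund"),
    Passage("c", "A systematic investment plan invests a fixed amount monthly"),
]


def tokenize(text: str) -> list[str]:
    """Lowercase and split on non-alphanumeric characters."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rewrite_query(query: str) -> str:
    """Expand known abbreviations so retrieval sees the full terms."""
    return " ".join(ABBREVIATIONS.get(tok, tok) for tok in tokenize(query))


def retrieve(query: str, corpus: list[Passage], k: int) -> list[Passage]:
    """First stage: keep the k passages sharing the most words with the query."""
    q = set(tokenize(query))
    return sorted(corpus, key=lambda p: len(q & set(tokenize(p.text))), reverse=True)[:k]


def bigrams(tokens: list[str]) -> set[tuple[str, str]]:
    return set(zip(tokens, tokens[1:]))


def rerank(query: str, candidates: list[Passage], top_n: int) -> list[Passage]:
    """Second stage: prefer passages containing the query's word pairs (phrases)."""
    q_pairs = bigrams(tokenize(query))
    return sorted(candidates, key=lambda p: len(q_pairs & bigrams(tokenize(p.text))), reverse=True)[:top_n]


def answer_context(query: str, corpus: list[Passage], k: int = 3, top_n: int = 1) -> list[Passage]:
    """Rewrite the query, retrieve broadly, then rerank to a short context."""
    rewritten = rewrite_query(query)
    return rerank(rewritten, retrieve(rewritten, corpus, k), top_n)


if __name__ == "__main__":
    print([p.doc_id for p in answer_context("What is NAV?", CORPUS)])
```

For "What is NAV?", first-stage retrieval alone ranks passage `a` first because it shares as many words with the rewritten query as `b` does; the reranker promotes `b`, which contains the phrase "net asset value". The raw query "What is NAV?" would match neither passage's wording without the rewrite.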

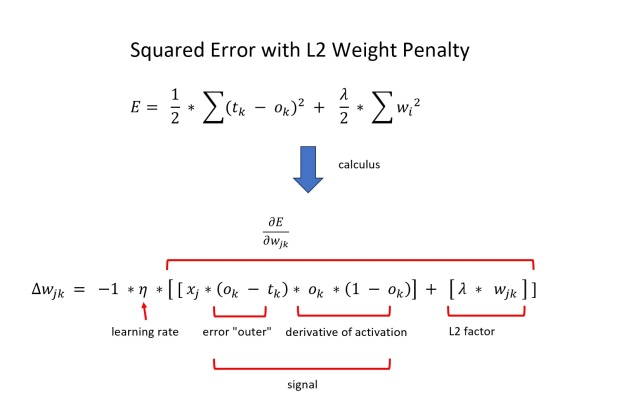

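L2 regularization and weight decay both shrink model weights to prevent overfitting, and under plain stochastic gradient descent (SGD) they are mathematically equivalent; with adaptive optimizers such as Adam they are not, which is why AdamW decouples weight decay from the gradient update. Research papers show the math behind the equivalence, with examples ranging from classic rocket ship designs to modern SpaceX prototypes.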
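The SGD equivalence follows in one line: with learning rate $\eta$, the penalty $\tfrac{\lambda}{2}\lVert w\rVert^2$ has gradient $\lambda w$, so $w \leftarrow w - \eta(\nabla L + \lambda w) = (1 - \eta\lambda)w - \eta\nabla L$, which is exactly a weight-decay step. The NumPy sketch below checks this numerically and contrasts it with Adam, where the two differ. The function names are illustrative, and `adam_first_step` models only Adam's bias-corrected first update (where $\hat m = g$ and $\hat v = g^2$), not a full optimizer.

```python
import numpy as np


def sgd_l2(w, grad, lr, lam):
    """SGD on L(w) + (lam / 2) * ||w||^2: the penalty adds lam * w to the gradient."""
    return w - lr * (grad + lam * w)


def sgd_weight_decay(w, grad, lr, lam):
    """SGD on L(w) with decoupled weight decay: shrink w, then step on grad alone."""
    return (1.0 - lr * lam) * w - lr * grad


def adam_first_step(g, eps=1e-8):
    """Bias-corrected Adam direction on the very first step.

    With m_hat = g and v_hat = g**2 the update is g / (sqrt(g**2) + eps),
    which is roughly sign(g) in each coordinate.
    """
    return g / (np.sqrt(g * g) + eps)


def adam_l2(w, grad, lr, lam):
    # The L2 term enters the gradient, so Adam's normalization rescales it.
    return w - lr * adam_first_step(grad + lam * w)


def adam_weight_decay(w, grad, lr, lam):
    # Decoupled (AdamW-style) decay is applied outside the normalization.
    return w - lr * adam_first_step(grad) - lr * lam * w


w = np.array([1.0, -2.0])
g = np.array([0.5, 0.1])
print(sgd_l2(w, g, 0.1, 0.1), sgd_weight_decay(w, g, 0.1, 0.1))
print(adam_l2(w, g, 0.1, 0.1), adam_weight_decay(w, g, 0.1, 0.1))
```

With these numbers both SGD variants land on `[0.94, -1.99]`, while the Adam variants give roughly `[0.9, -1.9]` and `[0.89, -2.08]`: under Adam, the L2 penalty flips the sign of the second coordinate's update instead of simply shrinking the weight.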

Incredible progress is being made on global poverty, health, and longevity, and even on climate change, despite daunting challenges such as a warming planet and nuclear war. A survey found that many Americans fear climate change could drive humanity to extinction, yet profound reasons for hope are often overlooked.

ChatGPT introduces "study mode" to promote responsible academic use amid AI misuse at universities. Users can receive guidance on complex subjects in a step-by-step format resembling an academic lesson.

MIT showcased cutting-edge music technology at “FUTURE PHASES,” a program at the International Computer Music Conference (ICMC) featuring new works for string orchestra and electronics. Pieces by Ziporyn, Egozy, and Machover, along with selected works, highlighted MIT’s commitment to advancing music technology.

Google, Amazon, and Meta are investing billions in AI infrastructure. Concerns are growing about its environmental toll and its effects on creative workers and the power grid.

Samsung will produce AI chips for Tesla at its new Texas plant under a $16.5bn deal that Elon Musk praised. Samsung's regulatory filing did not name the client; Musk revealed more on his X platform.

Stephen Flynn, known for his anti-English sentiment, may shed real tears if the Lionesses win the Euros. Jonathan Liew's poetic piece captures the emotions of the Women's Euro 2025 final in a style likened to that of rugby writer and commentator Eddie Butler.

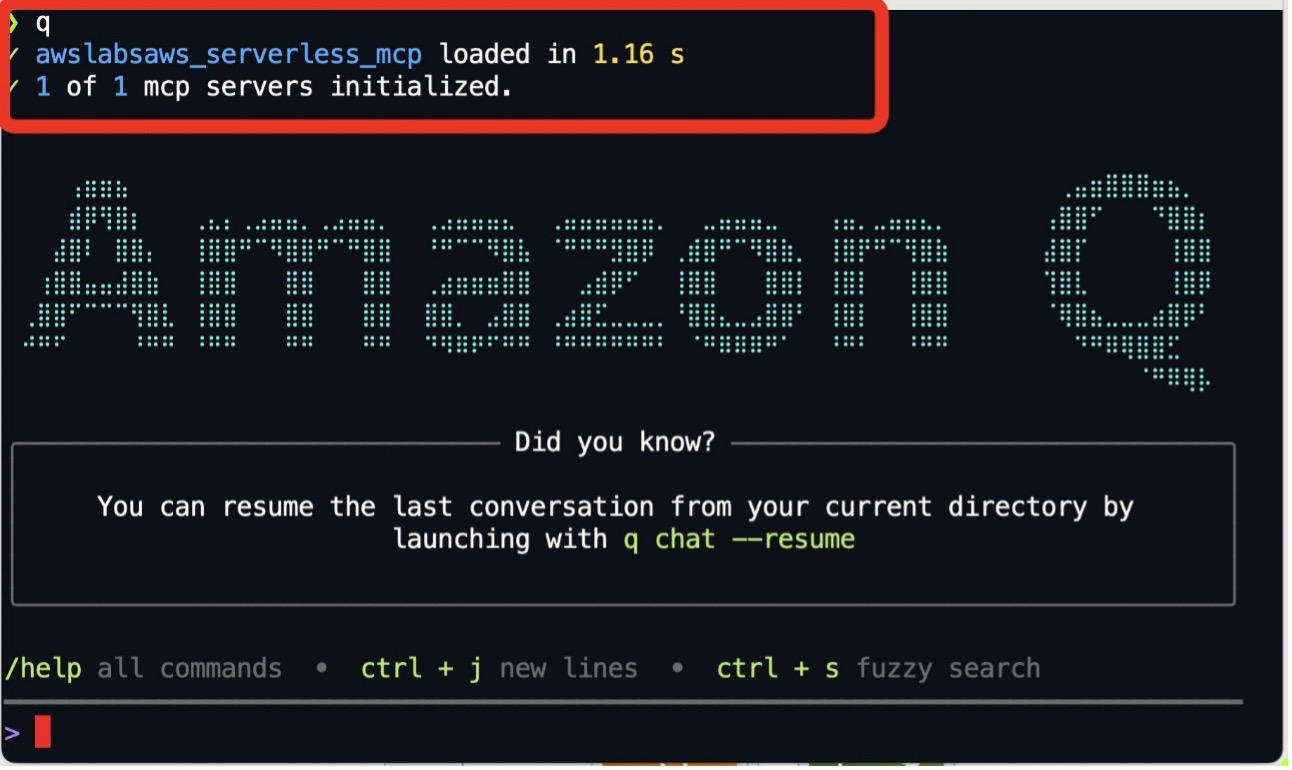

Amazon Q Developer CLI with Model Context Protocol simplifies building serverless applications on AWS by providing natural language guidance aligned with AWS best practices, accelerating development and streamlining operations directly from the command line. This innovative approach integrates conversational AI with external tools through MCP, transforming how developers interact with AWS servi...

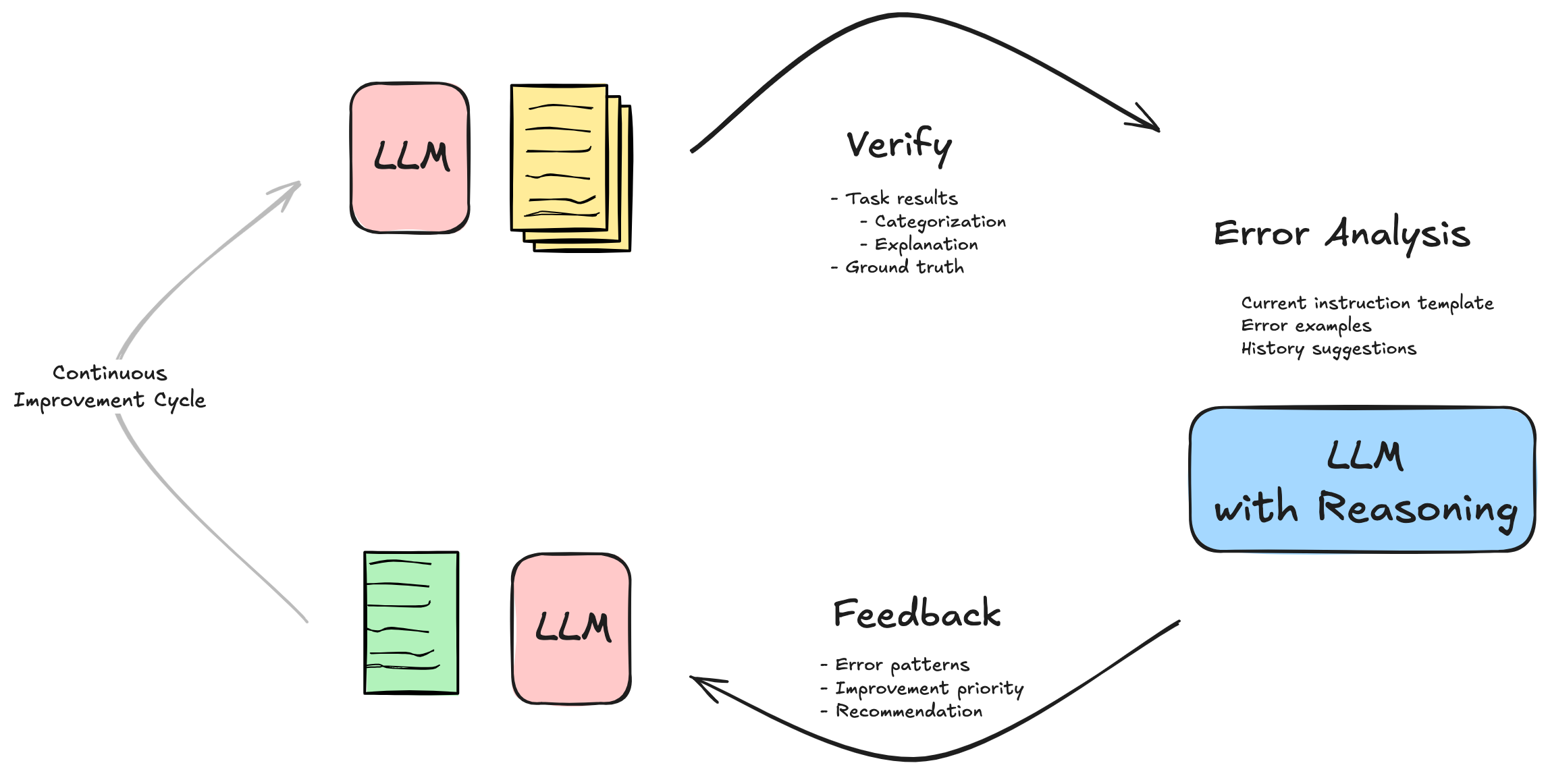

Crypto.com implemented generative AI-powered assistant services on AWS to enhance service quality. Modular subsystem architecture and feedback mechanisms were used to optimize AI assistant prompts for diverse scenarios, ensuring high performance and reliability.

Apple's latest iPhone release showcases new technology, including improved camera features and a faster processor. The iPhone 12 is set to be a game-changer in the smartphone industry.