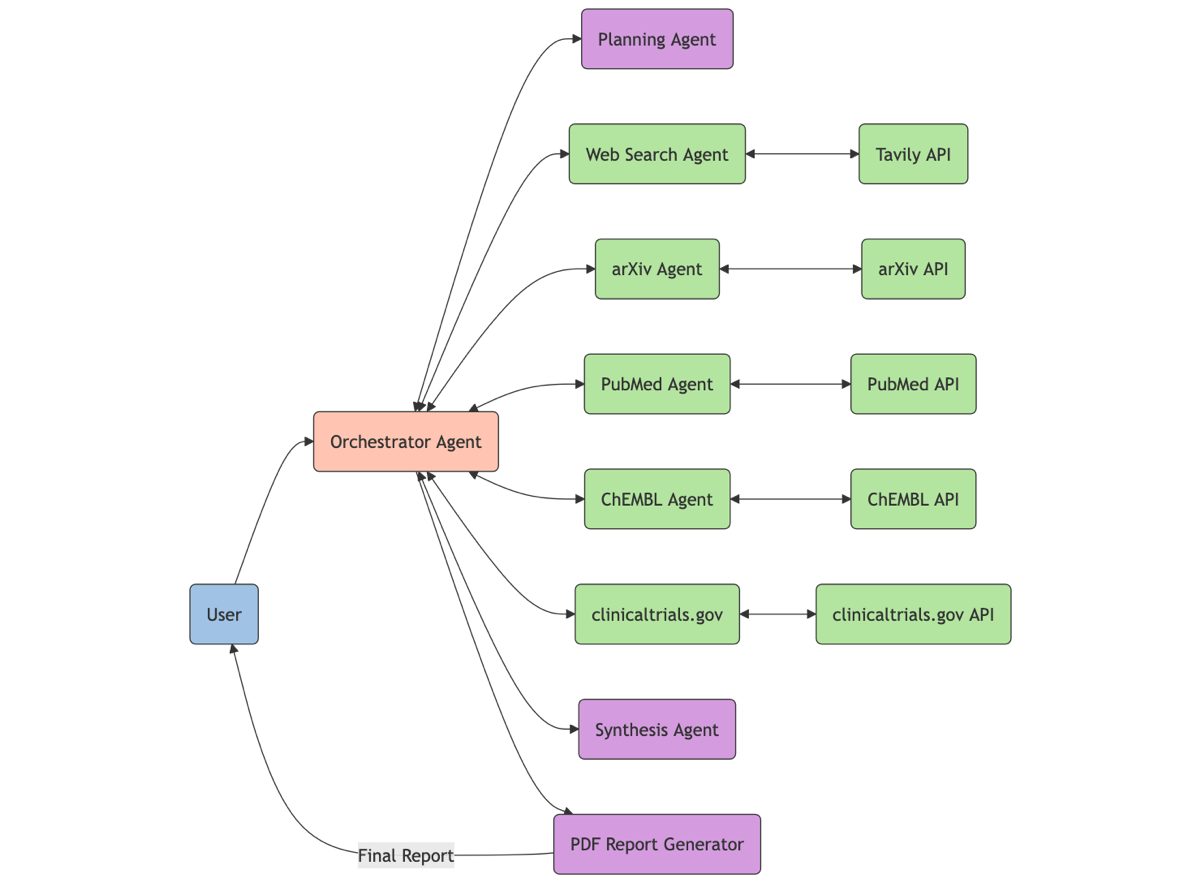

Genentech and AstraZeneca are using AI agents to speed up drug discovery. The Strands Agents SDK can be used to build research assistants for healthcare providers.

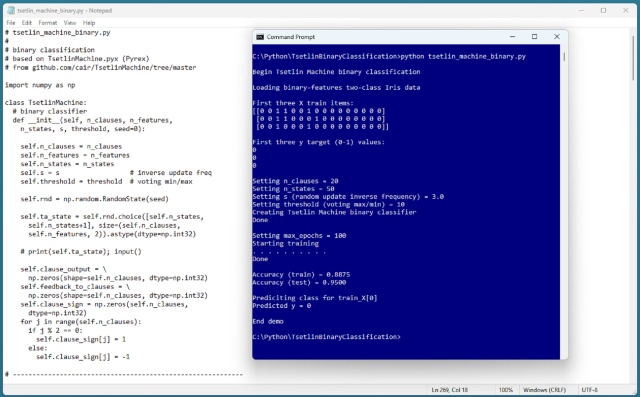

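The little-known Tsetlin Machine performs binary classification by learning logical rules (clauses) that combine input features to make accurate predictions. Because a Tsetlin Machine operates on binary inputs, the Iris dataset is first transformed into binary values before classification.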
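The summary does not say which binarization scheme was used, so the sketch below assumes one common option, thermometer encoding: each numeric feature is compared against a few cut points, and each comparison becomes one bit. The evenly spaced cut points, the helper names `thermometer_thresholds`, `binarize` and `clause_fires`, and the three sample rows (in Iris column order: sepal length, sepal width, petal length, petal width) are all illustrative assumptions. The last function shows what a single Tsetlin clause computes, an AND over chosen bits and negated bits; training the clauses is not shown.

```python
from typing import List, Sequence, Tuple

def thermometer_thresholds(column: Sequence[float], bits: int) -> List[float]:
    """Hypothetical scheme: `bits` evenly spaced cut points strictly between min and max."""
    lo, hi = min(column), max(column)
    step = (hi - lo) / (bits + 1)
    return [lo + step * (i + 1) for i in range(bits)]

def binarize(rows: Sequence[Sequence[float]], bits: int = 3) -> Tuple[List[List[int]], List[List[float]]]:
    """Thermometer-encode every feature: bit i is 1 if the value reaches cut point i."""
    columns = list(zip(*rows))
    thresholds = [thermometer_thresholds(col, bits) for col in columns]
    encoded = []
    for row in rows:
        encoded_row: List[int] = []
        for value, cuts in zip(row, thresholds):
            encoded_row.extend(1 if value >= cut else 0 for cut in cuts)
        encoded.append(encoded_row)
    return encoded, thresholds

def clause_fires(bits: Sequence[int], include: Sequence[int], include_negated: Sequence[int]) -> bool:
    """A Tsetlin-style clause: conjunction of literals x_k (include) and NOT x_k (include_negated)."""
    return all(bits[k] for k in include) and all(not bits[k] for k in include_negated)

# Illustrative measurements: sepal length, sepal width, petal length, petal width.
samples = [
    (5.1, 3.5, 1.4, 0.2),
    (7.0, 3.2, 4.7, 1.4),
    (6.3, 3.3, 6.0, 2.5),
]
encoded, cuts = binarize(samples, bits=3)

# Bit 6 is "petal length >= first petal-length cut point"; this clause fires on short petals.
short_petal = [clause_fires(row, include=[], include_negated=[6]) for row in encoded]
for row, fired in zip(encoded, short_petal):
    print(row, fired)
```

In a full Tsetlin Machine, teams of automata decide which literals each clause includes, and the class vote is the sum of positive clauses minus negative ones; the encoding above only prepares the inputs those clauses read.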

AI chatbots like ChatGPT offer students instant help with academic, career, and cooking dilemmas. But are students missing out on valuable learning experiences by not making their own mistakes?

An Indian film company has altered the 2013 movie 'Raanjhanaa' with an AI-generated ending, upsetting its director. The doomed romance was given a 'happy' ending for its re-release as 'Ambikapathy'.

Police in eastern Spain are investigating a 17-year-old for using AI to create fake nude images of female schoolmates. The boy allegedly intended to sell the modified images online, sparking a criminal investigation.

The "Department of Government Efficiency" (Doge) plans to cut 50% of federal regulations using AI by January. The "Doge AI Deregulation Decision Tool" will analyze 200,000 regulations to create a "delete list".

Przemysław Dębiak, known as Psyho, beat an OpenAI model to win the AtCoder World Tour Finals 2025 coding contest. He fears humans may lose their edge as the technology advances and predicts he may be the last human to win.

Chinese Premier Li Qiang urged global cooperation on AI development and warned of security risks at the World AI Conference in Shanghai. He proposed an organization to coordinate international efforts and stressed the need for consensus on an AI governance framework.

A database of 8m handwritten census entries shows Paris in 1926 as a hub for intellectuals such as James Joyce, who lived among diverse neighbors including Russian émigrés and US writers. The records of Joyce's life in the 7th arrondissement paint a vivid portrait of the city's cultural richness.

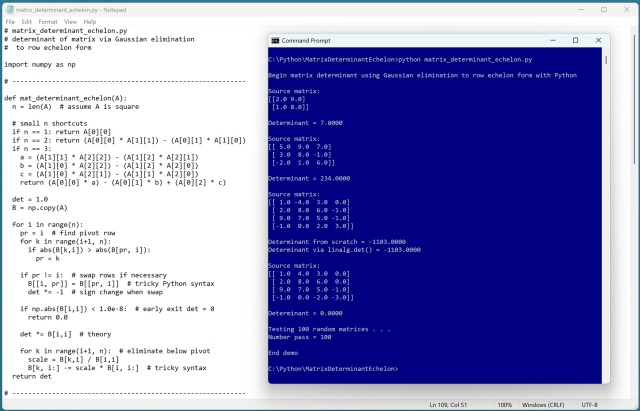

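A matrix determinant can be computed by using Gaussian elimination to reduce a square matrix to row echelon form: the determinant is then the product of the diagonal entries, with the sign flipped once for every row swap. A nonzero result shows the matrix is invertible, giving a concrete, systematic invertibility test.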
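A minimal sketch of the elimination approach, assuming exact rational arithmetic via Python's `fractions.Fraction` so that rounding cannot turn a singular matrix into a tiny nonzero determinant. The function name `determinant` is illustrative. Row replacement (adding a multiple of one row to another) leaves the determinant unchanged, each swap negates it, and the triangular result's determinant is the product of its diagonal, so $\det(A) = (-1)^{s} \prod_i u_{ii}$ where $s$ is the number of swaps.

```python
from fractions import Fraction
from typing import Sequence

def determinant(matrix: Sequence[Sequence[float]]) -> Fraction:
    """Determinant via Gaussian elimination to row echelon form (exact arithmetic)."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        raise ValueError("determinant requires a square matrix")
    a = [[Fraction(x) for x in row] for row in matrix]
    sign = 1
    for col in range(n):
        # Find a row at or below `col` with a nonzero entry to use as the pivot.
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot in this column: the matrix is singular
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            sign = -sign  # each row swap flips the determinant's sign
        # Row replacement below the pivot; this does not change the determinant.
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    # The matrix is now upper triangular: multiply the diagonal entries.
    result = Fraction(sign)
    for i in range(n):
        result *= a[i][i]
    return result

if __name__ == "__main__":
    m = [[2, 0, 1], [1, 3, 2], [1, 1, 4]]
    d = determinant(m)
    print(d, "invertible" if d != 0 else "singular")
```

For floating-point production use, partial pivoting (choosing the largest-magnitude pivot) is the usual choice for numerical stability; the first-nonzero pivot here is enough only because the arithmetic is exact.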

Millions invested in AI by Alphabet, Meta, Microsoft and others appear to pay off as Trump vows to cut red tape at an elite tech summit. The President declared that innovators need to thrive without regulatory constraints, signaling a shift in tech policy focus.

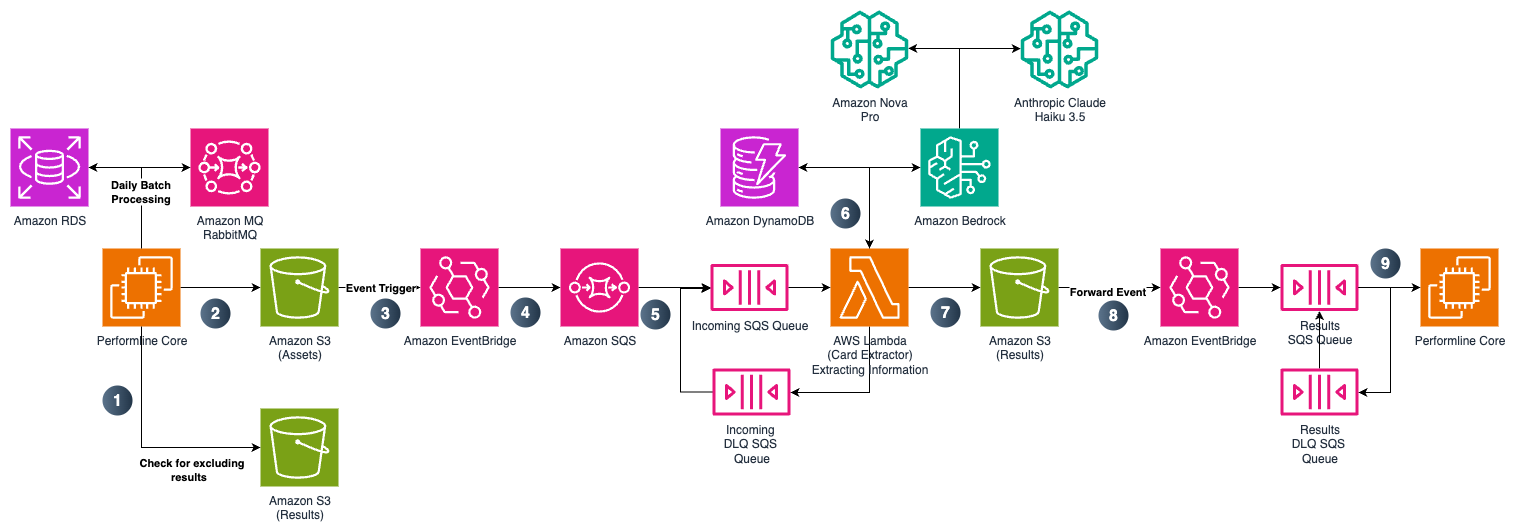

PerformLine, a leader in marketing compliance, uses AI to automate compliance oversight of consumer-facing channels, giving brands a competitive advantage. Built on AWS, its system speeds up compliance processes while monitoring millions of webpages daily with speed and accuracy.

A study finds that Google's AI Overviews can cause a 79% traffic loss for sites ranked below them in search results, cutting into news companies' online audiences. Media owners fear AI summaries pose an existential threat to outlets that rely on search traffic.

An MIT study shows a 15% increase in pedestrian walking speed in northeastern U.S. cities from 1980 to 2010. Public spaces now function more as thoroughfares than as meeting places, with implications for urban planning.

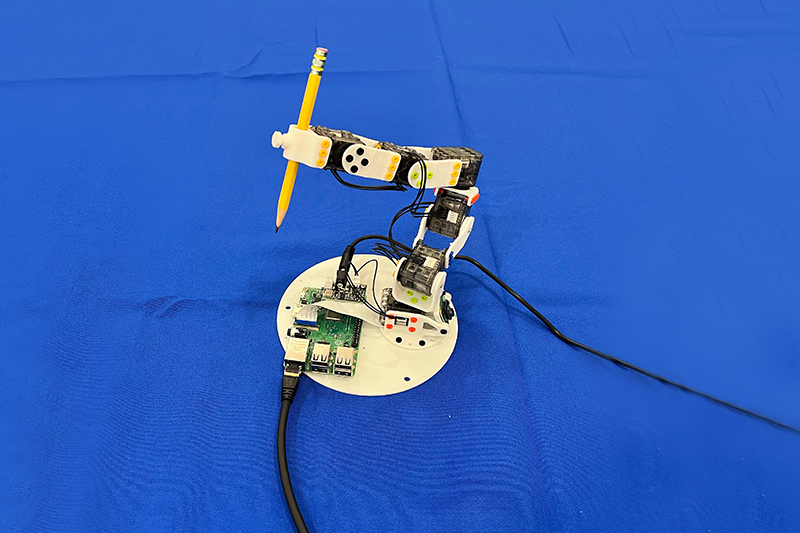

MIT CSAIL has developed Neural Jacobian Fields (NJF), a system that lets robots learn to control their bodies through vision rather than embedded sensors, offering a new approach to robotic self-awareness. It enables robots to learn autonomously how to achieve goals, expanding design possibilities for soft and bio-inspired robots.