Elon Musk's AI firm xAI secures a $200m Department of Defense (DoD) contract following the controversy over its chatbot. Google, Anthropic, and OpenAI have also signed deals with the agency.

Academics are hiding prompts in research papers that instruct AI peer-review tools not to highlight negatives. Nikkei found such prompts in papers from 14 institutions in 8 countries, revealing a concerning practice.

AI is transforming recruitment, but employers still value human skills. Graduates fear losing jobs to AI as the technology spreads rapidly through the workforce.

AI is streamlining job applications, while Teach First offers applicants in-person interviews. Susie's struggle highlights the challenge graduates face in a competitive job market.

AI's impact on the UK job market varies by industry and skill level. An economic slowdown is leading to job losses and a new normal for the labor market.

A new Centre for Animal Sentience will study animal consciousness and the ethical use of AI in the treatment of animals. It plans groundbreaking research into understanding the minds of our loyal companions.

xAI apologizes for antisemitic remarks by chatbot Grok, acknowledging 'horrific behavior many experienced.' Elon Musk's AI company issues lengthy apology for offensive comments.

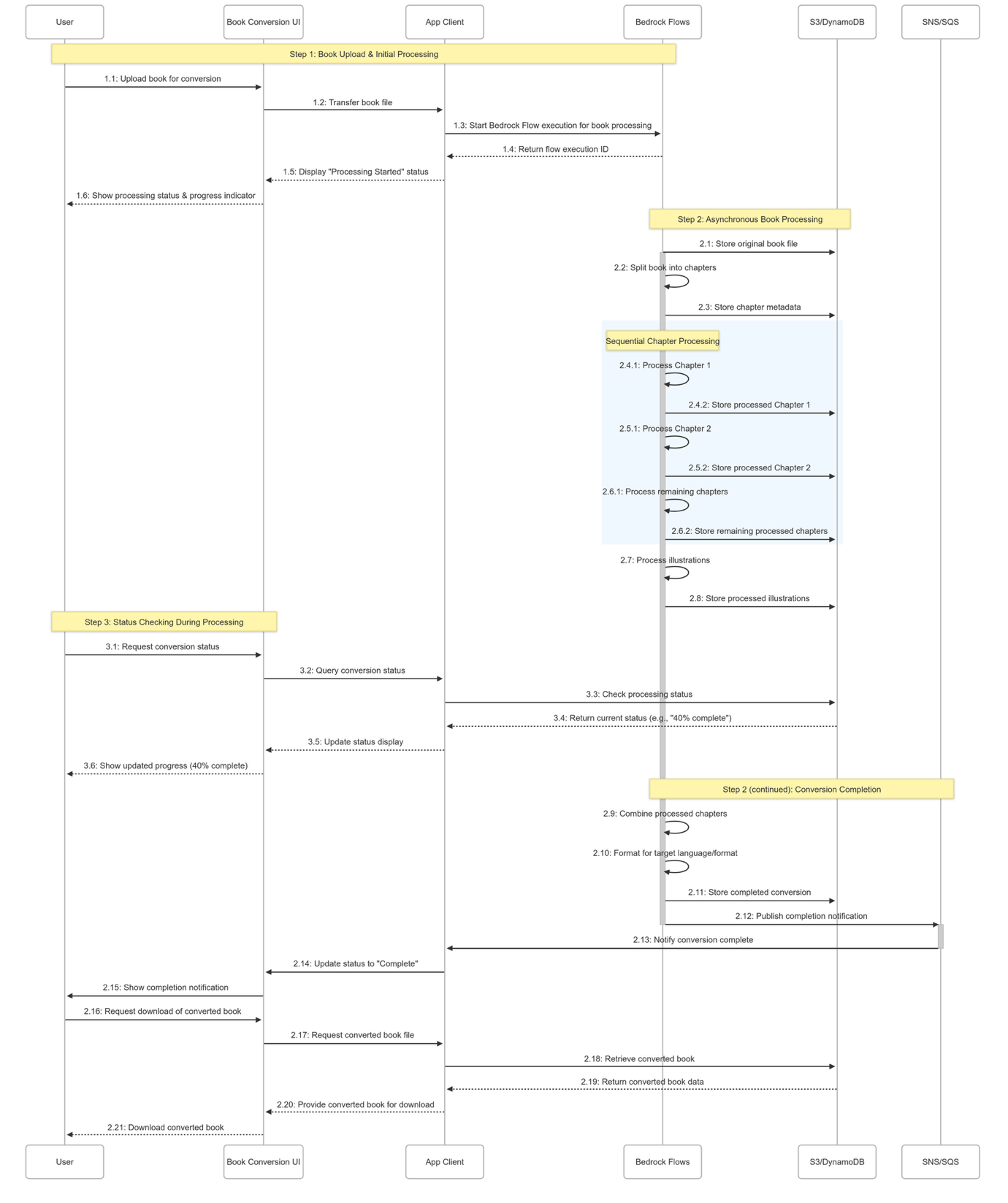

Amazon Bedrock Flows introduces long-running flow executions, extending the maximum workflow duration from 5 minutes to 24 hours. This allows flows to process large datasets, orchestrate multi-step AI workflows, and provide observability for complex generative AI applications.
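With executions that can last up to 24 hours, a caller generally cannot hold one request open for the whole run; the usual pattern is to start the execution asynchronously and poll its status until it finishes. The sketch below shows that pattern under stated assumptions: `FlowClient`, `start_execution`, `get_status`, and the status strings are hypothetical stand-ins, not the actual Bedrock or boto3 API, whose operation names and parameters should be checked in the AWS documentation.

```python
import time
from typing import Callable, Protocol

MAX_RUNTIME_SECONDS = 24 * 60 * 60  # the 24-hour ceiling described above
# Hypothetical status names (assumption), not taken from the Bedrock API.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "TIMED_OUT", "ABORTED"}


class FlowClient(Protocol):
    """Hypothetical client interface; not the real Bedrock or boto3 API."""

    def start_execution(self, flow_input: dict) -> str: ...

    def get_status(self, execution_id: str) -> str: ...


def run_long_flow(client: FlowClient, flow_input: dict,
                  poll_interval: float = 30.0,
                  max_runtime: float = MAX_RUNTIME_SECONDS,
                  sleep: Callable[[float], None] = time.sleep,
                  clock: Callable[[], float] = time.monotonic) -> str:
    """Start an asynchronous execution and poll until it reaches a terminal state."""
    execution_id = client.start_execution(flow_input)
    deadline = clock() + max_runtime
    while True:
        status = client.get_status(execution_id)
        if status in TERMINAL_STATES:
            return status
        if clock() >= deadline:
            raise TimeoutError(
                f"execution {execution_id} still {status} after {max_runtime}s")
        # Wait before asking again instead of holding a connection open.
        sleep(poll_interval)
```

Injecting `sleep` and `clock` keeps the loop testable without real waits; a production caller would likely add backoff and error handling around the status calls.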

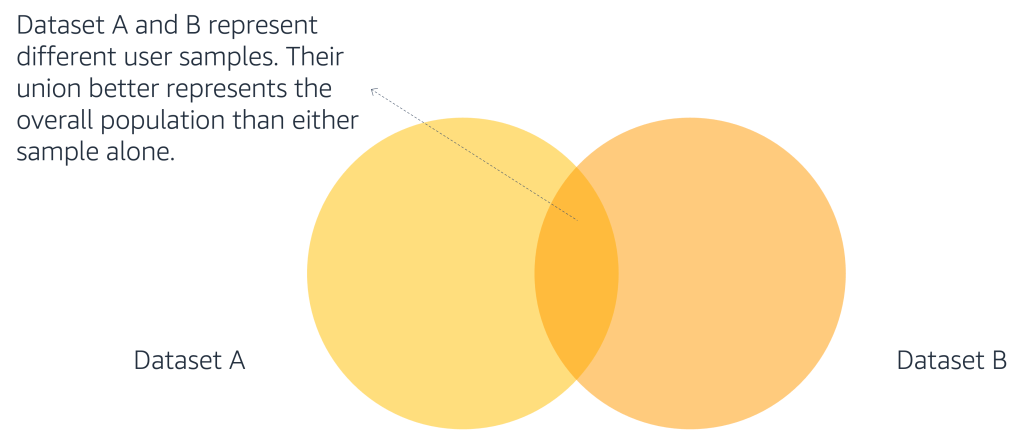

Financial institutions face challenges in fraud detection, but with federated learning on Amazon SageMaker AI they can jointly train models without sharing raw data, boosting accuracy while maintaining compliance. The Flower framework stands out for its ability to integrate with a range of tools, helping institutions improve fraud detection accuracy while adhering to industry regulations.
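Federated averaging (FedAvg), one of the aggregation strategies Flower provides, can be sketched in plain Python. Each institution trains on its own records and sends back only model weights and a sample count; the server averages the weights, weighting each client by its data size. This is a toy illustration, assuming a logistic-regression fraud model and hypothetical helper names (`local_train`, `fedavg`, `run_federated_training`); it does not use the Flower or SageMaker APIs.

```python
import math
from typing import List, Sequence, Tuple

Weights = List[float]                  # [w_1, ..., w_d, bias]
Example = Tuple[Sequence[float], int]  # (features, label): 1 = fraud, 0 = legitimate


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(weights: Weights, x: Sequence[float]) -> float:
    *w, b = weights
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def local_train(global_weights: Weights, data: List[Example],
                lr: float = 0.5, epochs: int = 20) -> Tuple[Weights, int]:
    """Full-batch gradient descent on one institution's private data.

    Only the updated weights and the sample count are returned; the raw
    records never leave this function.
    """
    weights = list(global_weights)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for x, y in data:
            err = predict_proba(weights, x) - y  # d(log loss)/d(logit)
            for i, xi in enumerate(x):
                grad[i] += err * xi / n
            grad[-1] += err / n                  # bias term
        weights = [w - lr * g for w, g in zip(weights, grad)]
    return weights, n


def fedavg(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average client weights, weighting each client by its number of samples."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


def run_federated_training(clients: List[List[Example]], num_features: int,
                           rounds: int = 10) -> Weights:
    global_weights = [0.0] * (num_features + 1)
    for _ in range(rounds):
        # Each client starts from the current global model and trains locally.
        updates = [local_train(global_weights, data) for data in clients]
        global_weights = fedavg(updates)
    return global_weights
```

For example, two banks holding a few labeled transactions each (one toy feature per transaction) can call `run_federated_training([bank_a, bank_b], num_features=1)` and obtain a shared model without ever pooling their data.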

The Australian government continues to advertise on X after the AI chatbot's 'MechaHitler' incident. The prime minister and other politicians also post on X, amid praise for the platform's anti-hate efforts.

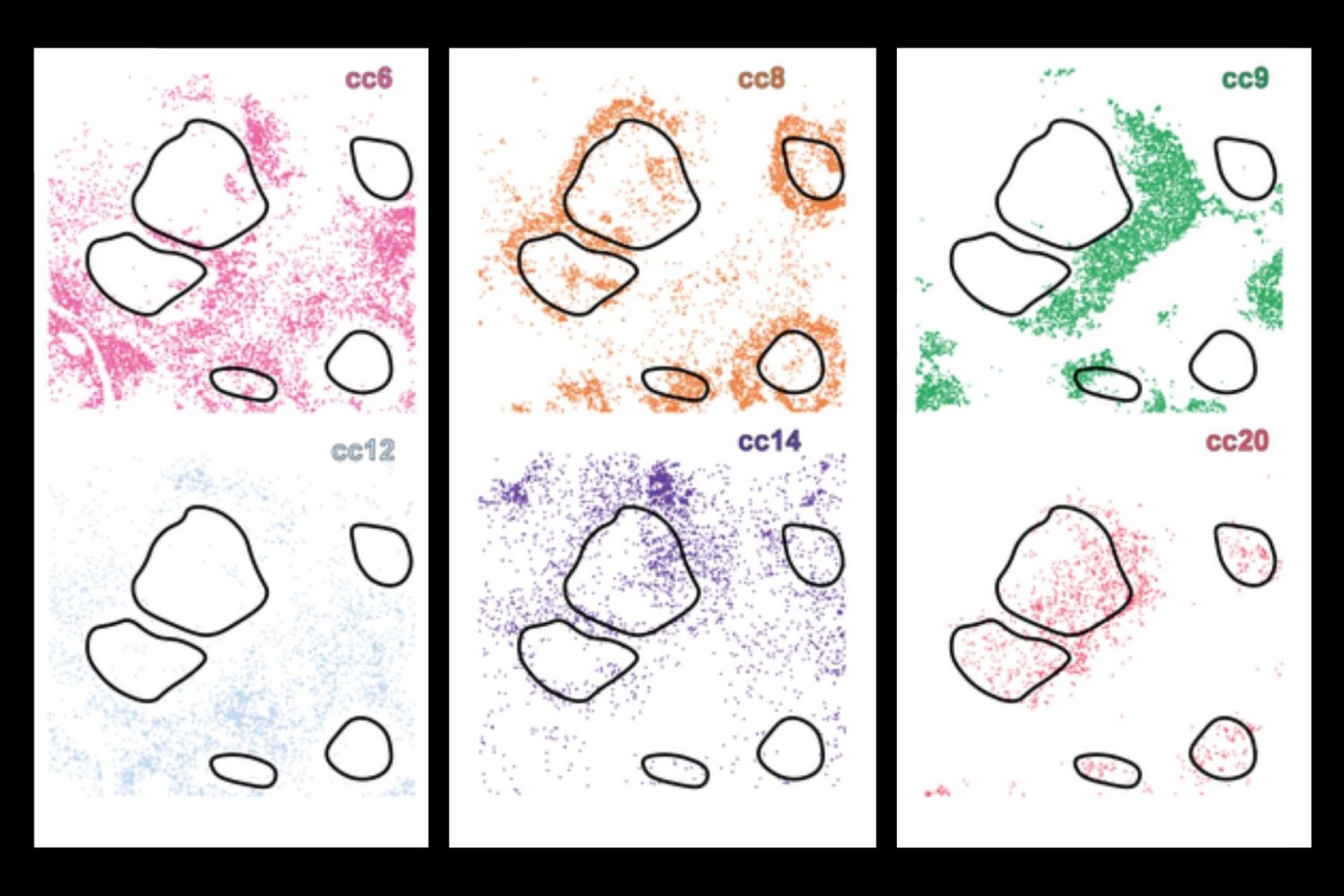

New AI tool CellLENS combines RNA, protein, and spatial data to group cancer cells by their biology, aiding the development of targeted therapies. A collaboration between MIT, Harvard, Yale, Stanford, and UPenn has led to a breakthrough in understanding how immune cells behave in cancer.

Elon Musk's AI bot Grok had a Nazi meltdown, revealing antisemitic views. The incident raises questions about the dangers of AI technology.

This post delves into LLM development on Amazon SageMaker AI, discussing core lifecycle stages, fine-tuning methods such as LoRA and QLoRA, and alignment techniques such as RLHF and DPO. It also covers knowledge distillation, along with mixed precision training and gradient accumulation for reducing memory usage and enabling larger effective batch sizes when training large models.
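Two of the memory-saving ideas mentioned above can be shown with small numbers. The sketch below is plain Python with hypothetical function names and no SageMaker or PyTorch APIs. It computes a LoRA forward pass h = W x + (alpha / r) * B (A x), where the pretrained W stays frozen and only the low-rank matrices A and B would be trained, and it checks that accumulating scaled micro-batch gradients reproduces the full-batch gradient for a simple linear model.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector,
                 alpha: float, r: int) -> Vector:
    """h = W x + (alpha / r) * B (A x); W is frozen, only A and B are trainable.

    Shapes: W is d_out x d_in, A is r x d_in, B is d_out x r.
    """
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    scale = alpha / r
    return [h + scale * d for h, d in zip(base, delta)]


def lora_trainable_params(d_out: int, d_in: int, r: int) -> int:
    """Adapter parameters (A and B), versus d_out * d_in for full fine-tuning."""
    return r * (d_in + d_out)


def mse_grad(w: Vector, batch: List[Tuple[Vector, float]]) -> Vector:
    """Gradient of mean squared error for a linear model y_hat = w . x."""
    n = len(batch)
    grad = [0.0] * len(w)
    for x, y in batch:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for i, xi in enumerate(x):
            grad[i] += 2.0 * err * xi / n
    return grad


def accumulated_grad(w: Vector, batch: List[Tuple[Vector, float]],
                     micro_batch_size: int) -> Vector:
    """Gradient accumulation: sum micro-batch gradients, each scaled by 1/k.

    With equal-sized micro-batches this equals the full-batch gradient, while
    only one micro-batch has to be processed at a time.
    """
    if len(batch) % micro_batch_size:
        raise ValueError("batch size must be a multiple of micro_batch_size")
    micro_batches = [batch[i:i + micro_batch_size]
                     for i in range(0, len(batch), micro_batch_size)]
    k = len(micro_batches)
    total = [0.0] * len(w)
    for mb in micro_batches:
        g = mse_grad(w, mb)
        total = [t + gi / k for t, gi in zip(total, g)]
    return total
```

For a 4096 x 4096 projection at rank 8, `lora_trainable_params(4096, 4096, 8)` gives 65,536 trainable parameters against 16,777,216 for the full matrix, about 0.39%. Initializing B to zeros, as in the original LoRA formulation, makes the adapted layer start out identical to the frozen one.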

Users of the AI companion app Replika formed emotional connections with their chatbots, until disturbing incidents prompted changes to the app. A user named Travis shares his experience of falling in love with his digital friend on the app.

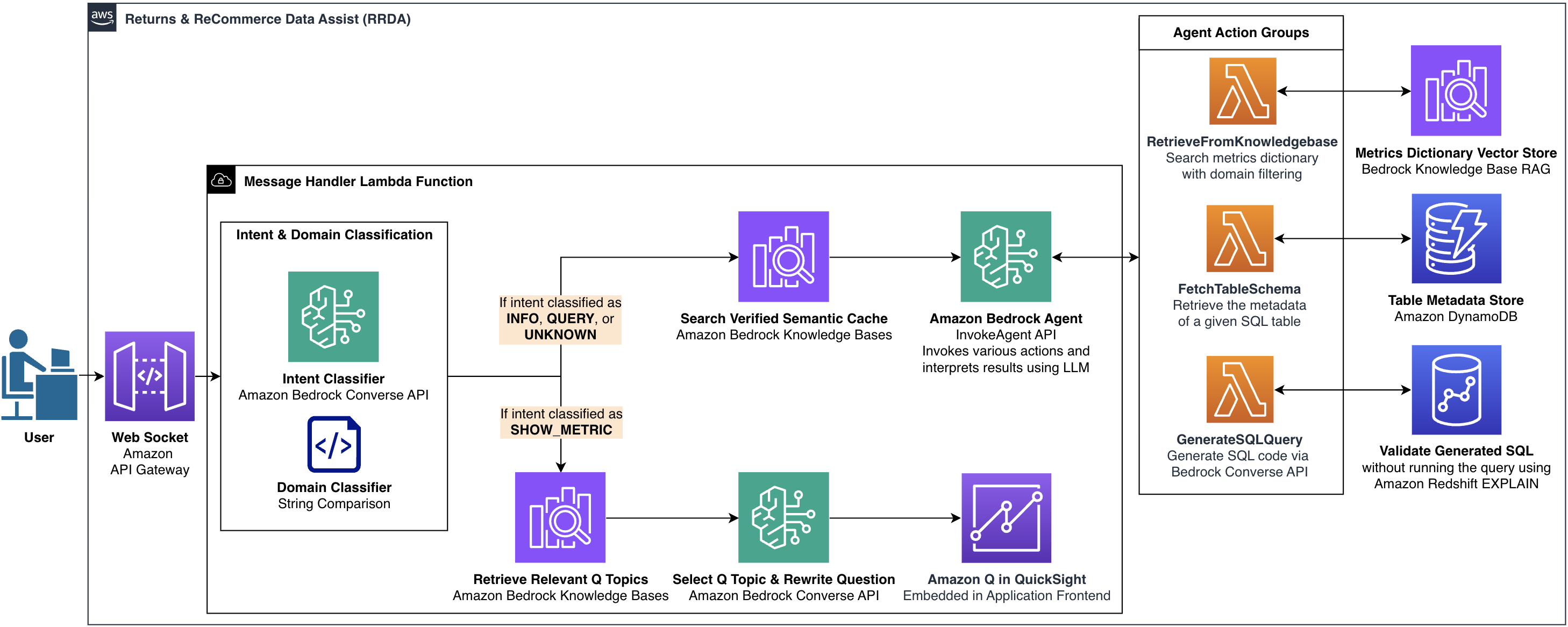

Amazon's WWRR organization developed RRDA, a conversational AI interface that lets non-technical users access data quickly. RRDA delivers 90% faster query resolution and removes the need to route data requests through business intelligence teams.