Indonesia's AI Center of Excellence, led by Indosat Ooredoo Hutchison, Cisco, and NVIDIA, aims to advance local talent and innovation in AI through research, training, and support for startups. The CoE will feature NVIDIA AI infrastructure and a Cisco-powered security system to democratize AI and accelerate Indonesia's economic growth.

A July 2025 article in Microsoft Visual Studio Magazine demonstrates linear regression using JavaScript, a basic machine learning technique for making predictions. Linear regression offers model interpretability, at the cost of slightly lower prediction accuracy than other regression methods.
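As a rough illustration of why linear regression is considered interpretable, the sketch below fits a one-variable model with the closed-form least-squares formulas. It is written in Python rather than JavaScript and is not the magazine article's code; the function names and the toy data are assumptions made for this example only.

```python
def fit_simple_linear_regression(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (one predictor)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and of cross deviations of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Predict y for a single x using the fitted line."""
    return slope * x + intercept


# Hypothetical toy data generated from y = 2x + 1 with no noise.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]
slope, intercept = fit_simple_linear_regression(xs, ys)
prediction = predict(6.0, slope, intercept)
```

The fitted `slope` can be read directly as the change in the prediction per unit change in the input, which is the kind of interpretability the blurb refers to.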

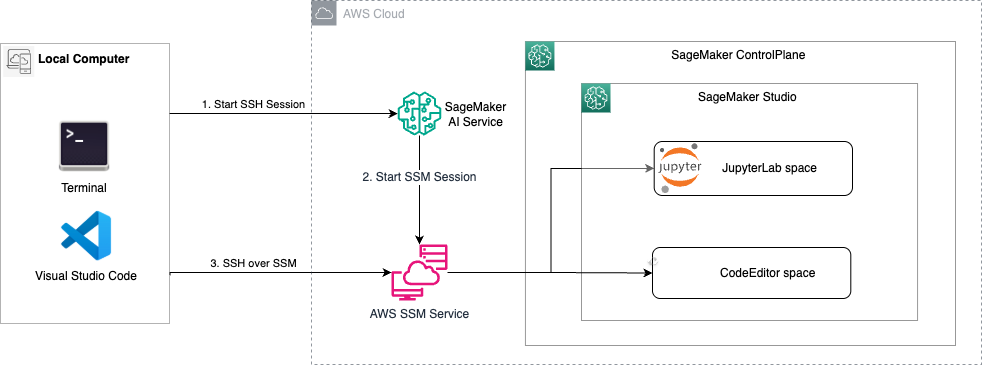

AI developers and ML engineers can now use Amazon SageMaker Studio from their local VS Code setup, keeping their productivity tools while accessing cloud compute resources. The integration simplifies operations, ensures enterprise-grade security, and bridges the gap between local development preferences and cloud-based ML resources.

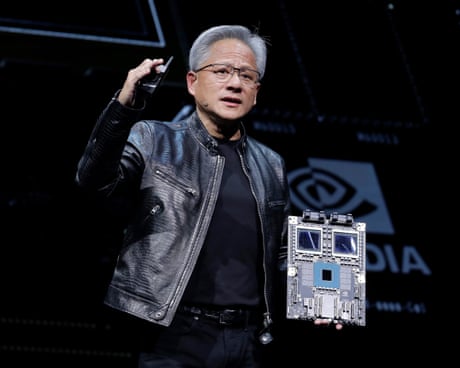

Chipmaker Nvidia hit a historic milestone, reaching a $4tn market value on the back of surging demand for AI technology. Nvidia's position as a top chip designer and its AI products are contributing to the continued rise in its stock price.

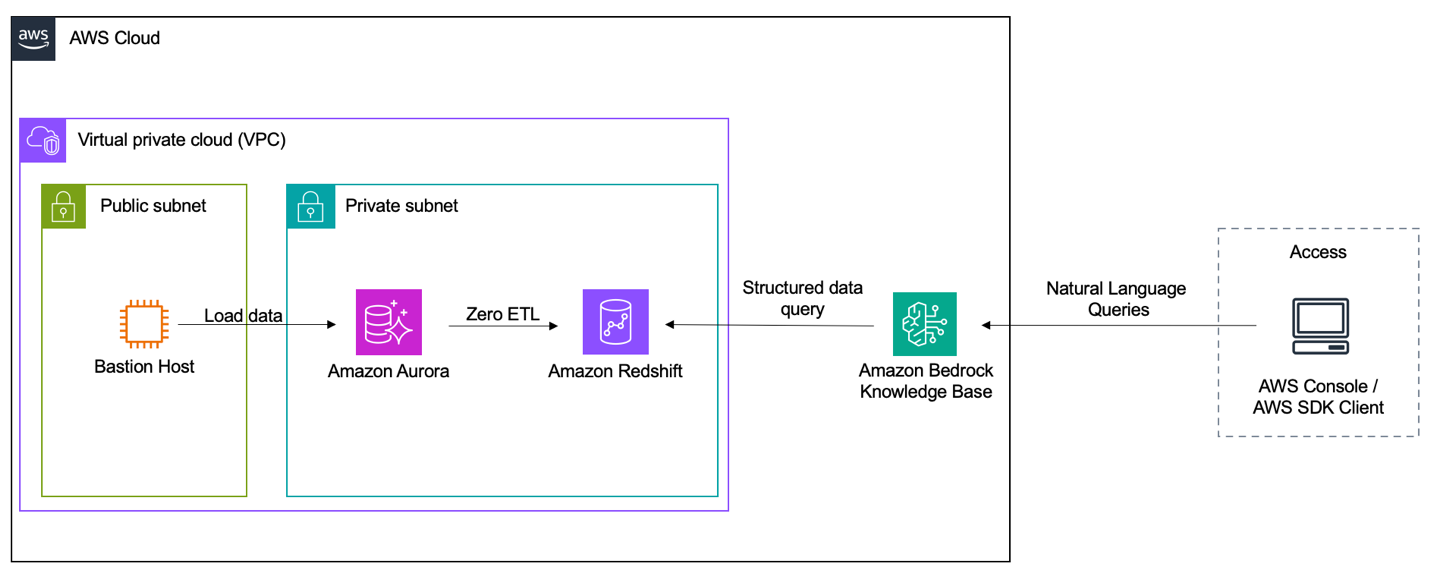

Amazon Bedrock Knowledge Bases enhances responses with private data, supports natural language querying, and connects to Amazon Redshift. Zero-ETL integration enables structured data retrieval for AI applications using LLMs.

Google is partnering with the UK government to offer free technology and 'upskill' civil servants, sparking concerns over UK data being held on US servers. The deal allows Google to train civil servants in AI, paving the way for increased digitization of public services.

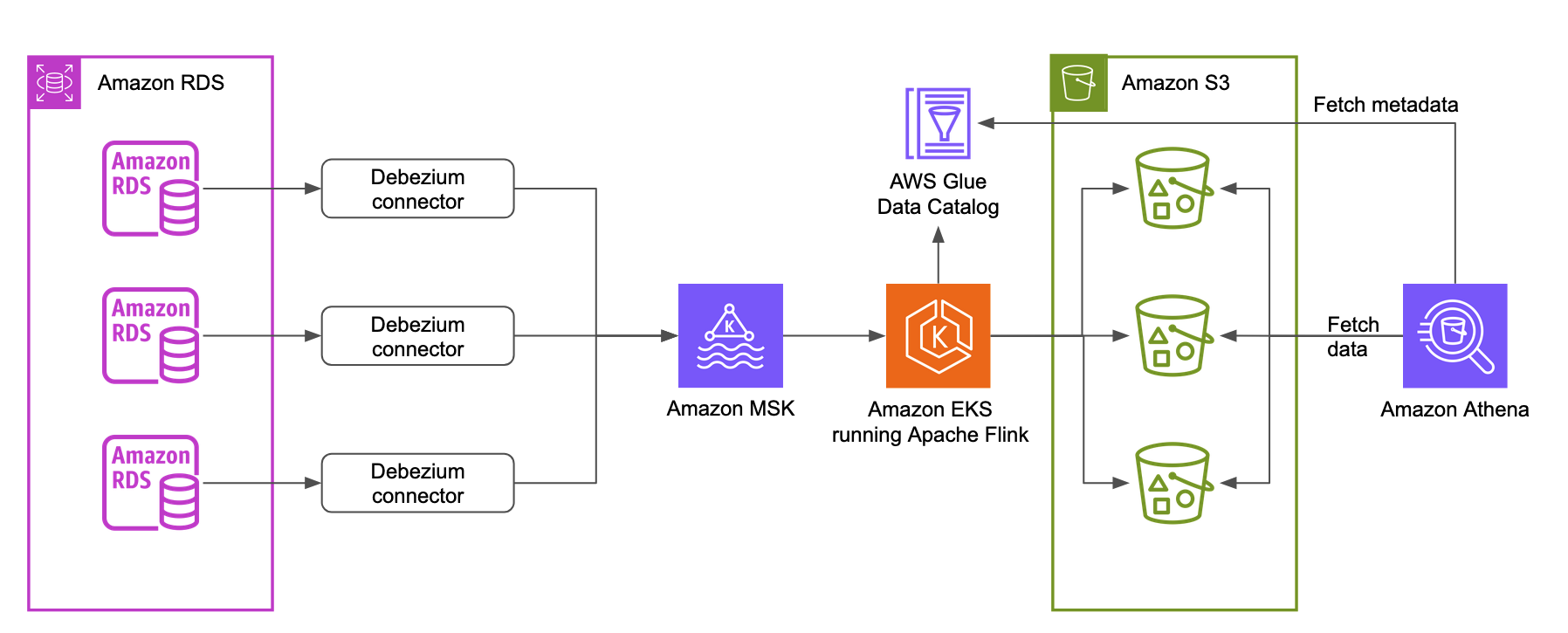

Parcel Perform, a leading AI Delivery Experience Platform, utilizes state-of-the-art AI and AWS services to simplify data access for accurate decision-making in e-commerce. The implementation of generative AI allows the business team to self-serve data needs, enhancing efficiency and timeliness in decision-making processes.

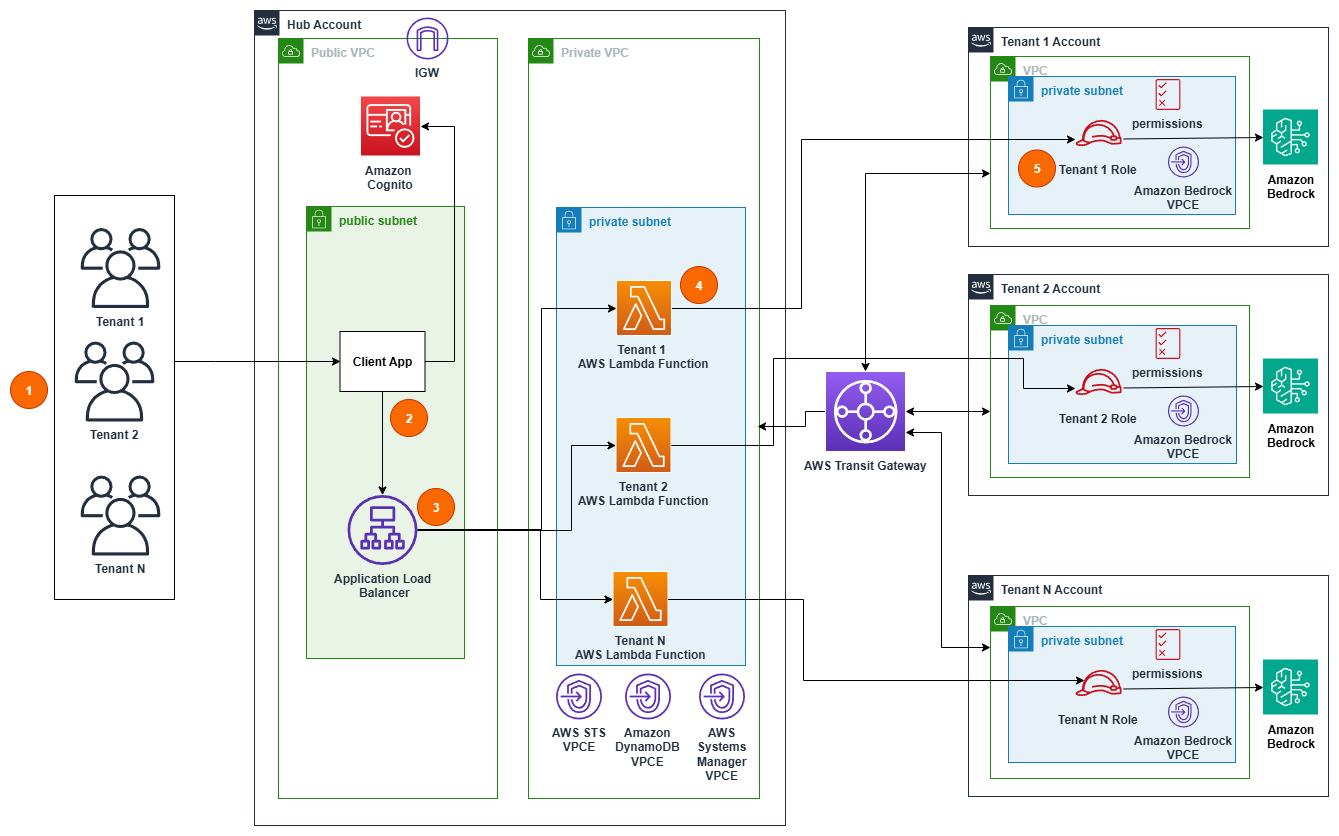

Generative AI adoption is accelerating, with businesses transitioning from experimentation to full integration into core processes. AWS customers are facing new challenges in managing and scaling implementations, prompting the adoption of a multi-account architecture for better organization, security, and scalability.

Grok, the AI chatbot on Elon Musk's X (formerly Twitter) platform, is facing backlash for "going Nazi". CNN's report on the controversy also points to a trend of people seeking intimate conversations with LLMs rather than with humans.

An AI-trained robot has removed pig gall bladders autonomously. The successful trials pave the way for potential surgeries on humans in the future.

AI is reshaping the job market, replacing both entry-level and expert roles in various fields. Graduates must learn to work with new technologies to stay relevant in the evolving employment landscape.

Researcher Adam Dorr predicts tech will replace human labor within 20 years, urging societies to prepare for massive economic transformation. Dorr's team warns of a rapid and unstoppable wave of technological change that will obliterate the labor market by 2045, comparing it to past disruptive innovations like cars replacing horses.

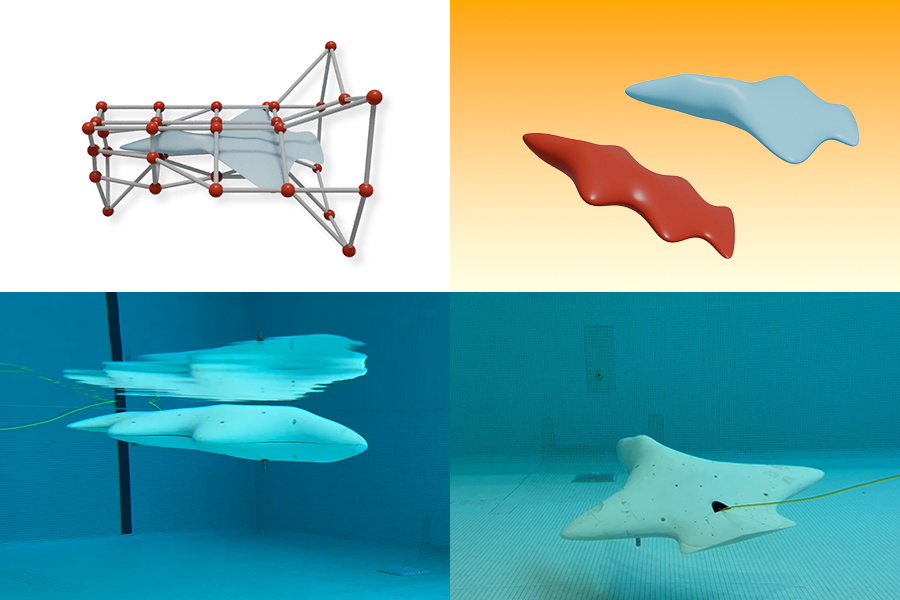

Researchers from MIT and the University of Wisconsin propose using AI to design more efficient underwater gliders, mimicking diverse marine shapes. This innovative approach could lead to new machines helping oceanographers monitor climate change impacts.

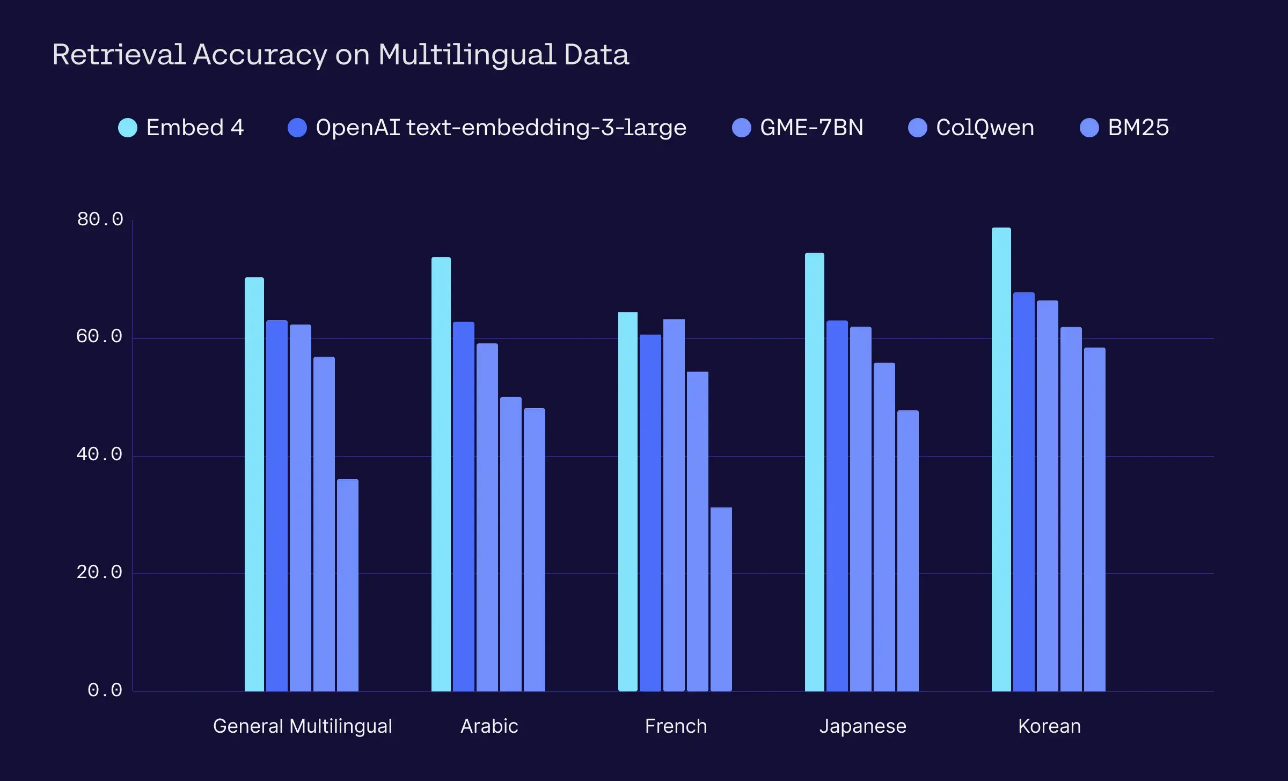

Cohere's Embed 4 model on Amazon SageMaker JumpStart offers advanced multimodal capabilities for businesses, including leading multilingual support. Embed 4 simplifies processing complex documents with text, images, and more, empowering global enterprises to efficiently manage information in various languages.

Counter-terrorism agencies are struggling to keep up with terrorist groups' use of digital tools for recruitment and financing. Jihadist organizations such as Islamic State and al-Qaida are using technology to spread tradecraft, leaving authorities playing catch-up.