Neuromorphic computing reimagines AI hardware and algorithms, drawing inspiration from the brain, to reduce energy consumption and push AI to the edge. OpenAI's $51 million deal with Rain AI for neuromorphic chips signals a shift toward greener AI in data centers.

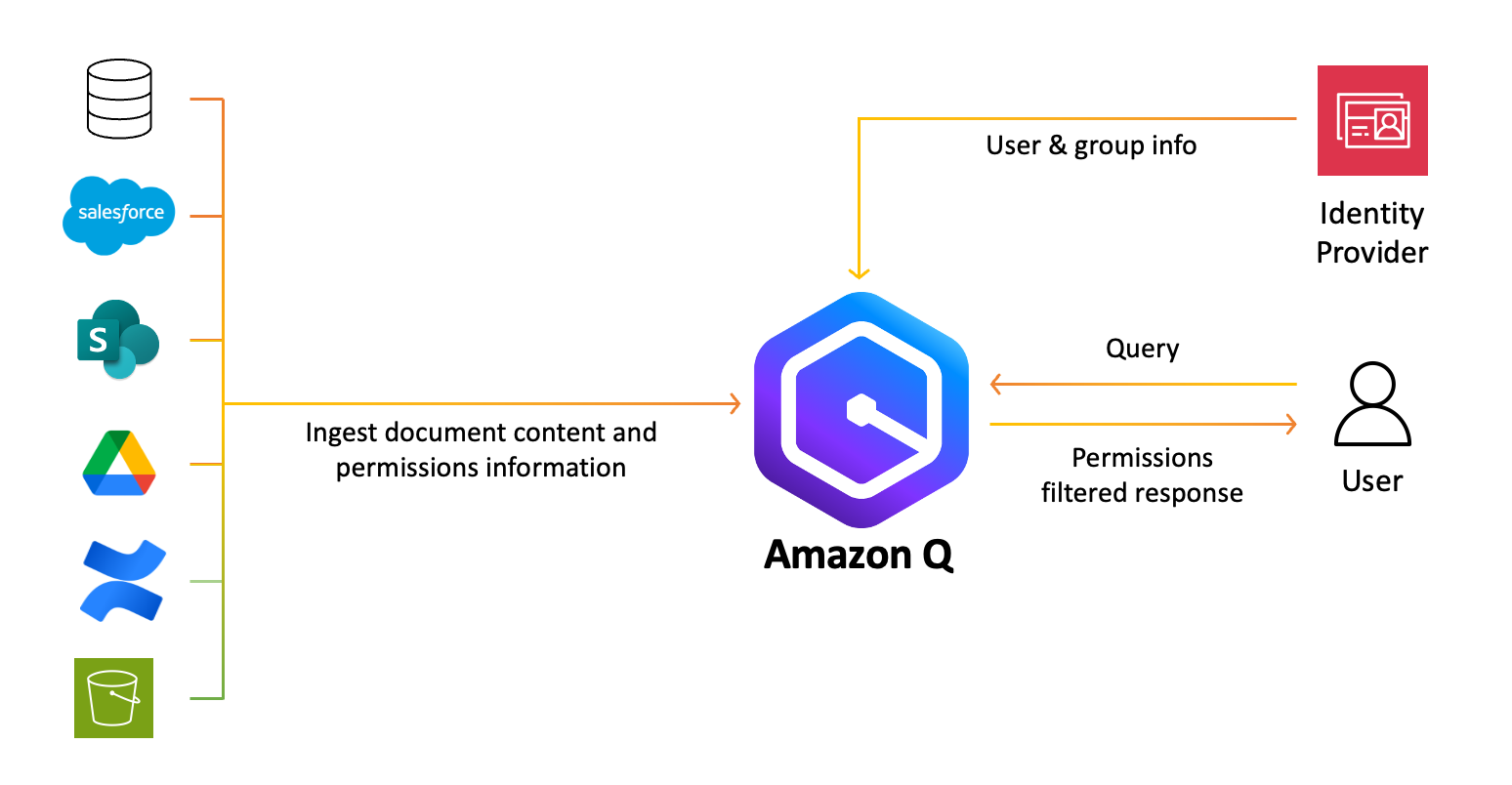

Salesforce centralizes customer data for insights, while Amazon Q Business, a generative AI assistant, helps employees make data-driven decisions and work more productively.

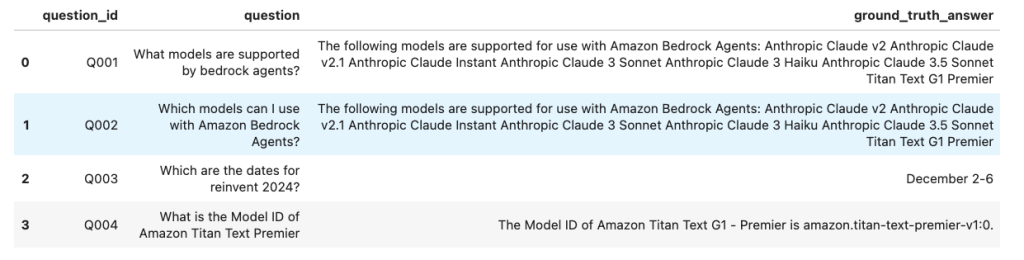

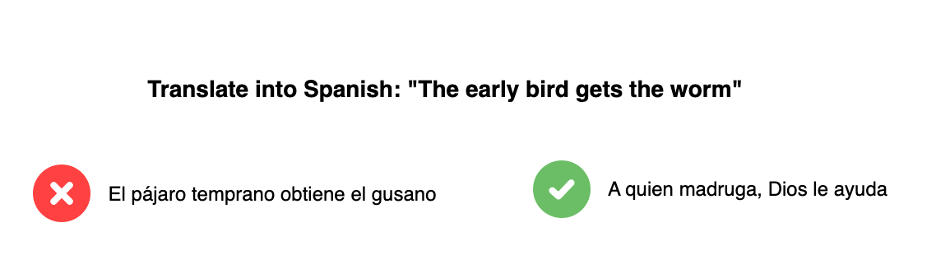

Hallucinations in large language models (LLMs) pose risks in production applications, but strategies such as retrieval-augmented generation (RAG) and Amazon Bedrock Guardrails can improve factual accuracy and reliability. Amazon Bedrock Agents can add dynamic hallucination detection to a workflow, keeping it customizable and adaptable without restructuring the entire process.
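
To make "hallucination detection" concrete, here is a minimal sketch of one simple heuristic: score how much of an answer is supported by the retrieved context, and route low-scoring answers to review instead of the user. This is a deliberately crude, swapped-in technique (lexical overlap), not how Amazon Bedrock Agents or Guardrails detect hallucinations; the stopword list, the 0.7 threshold, and the names `grounding_score` and `route` are hypothetical.

```python
import re

# Hypothetical, tiny stopword list; only for illustration.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "in", "on", "and", "or", "to", "for", "it", "was"}


def content_words(text: str) -> set[str]:
    """Lowercased word tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def grounding_score(answer: str, context: str) -> float:
    """Fraction of the answer's content words that also appear in the retrieved context."""
    answer_words = content_words(answer)
    if not answer_words:
        return 1.0  # nothing to verify
    return len(answer_words & content_words(context)) / len(answer_words)


def route(answer: str, context: str, threshold: float = 0.7) -> str:
    """Hypothetical routing step: deliver grounded answers, flag the rest for review."""
    return "deliver" if grounding_score(answer, context) >= threshold else "human_review"


if __name__ == "__main__":
    ctx = "The Eiffel Tower is in Paris and was completed in 1889."
    print(route("The Eiffel Tower was completed in 1889.", ctx))       # deliver
    print(route("The Eiffel Tower was moved to Berlin in 1950.", ctx))  # human_review
```

Lexical overlap misses paraphrases and can be fooled by answers that recombine context words into false statements, so it only illustrates where a detection step sits in the flow.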

Rad AI's flagship product, Rad AI Impressions, uses LLMs to automate the impression section of radiology reports, saving time and reducing errors. Its models generate impressions for millions of studies each month, benefiting thousands of radiologists nationwide.

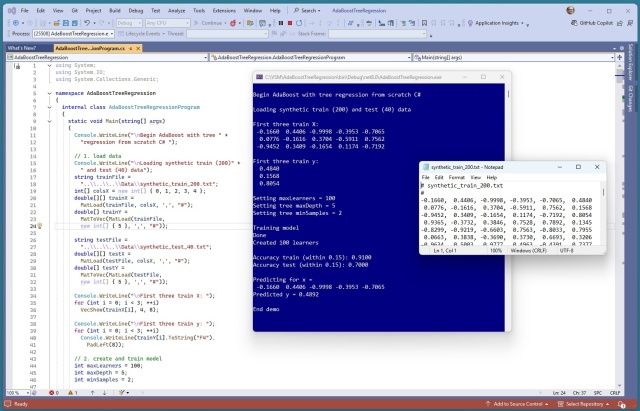

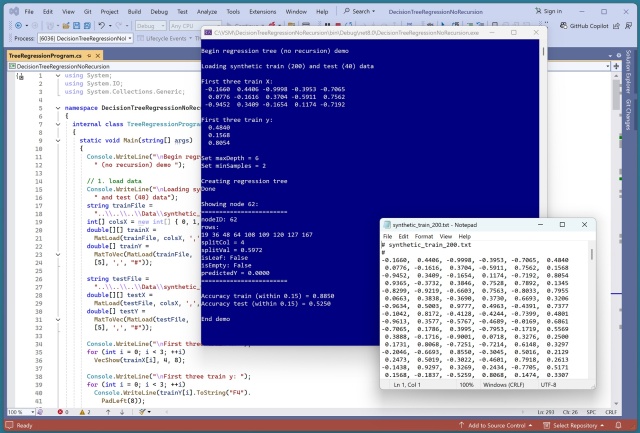

AdaBoost regression implemented from scratch in C#, using k-nearest neighbors rather than decision trees as the weak learners. The implementation follows Drucker's original AdaBoost.R2 algorithm and avoids recursion.
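
The boosting loop can be sketched in Python; this is not the author's C# code. It assumes the AdaBoost.R2 steps as commonly described from Drucker's paper: linear loss normalized by the largest error, weighted resampling to train each k-NN learner, early stopping when the weighted average loss reaches 0.5, and a weighted-median combination. The class and parameter names (`KNNRegressor`, `AdaBoostR2`, `n_learners`, `k`, `seed`) are hypothetical.

```python
import math
import random


class KNNRegressor:
    """Plain k-nearest-neighbors regressor: averages the targets of the k closest rows."""

    def __init__(self, k: int = 3):
        self.k = k

    def fit(self, X, y):
        self.X, self.y = list(X), list(y)
        return self

    def predict_one(self, x):
        # Squared Euclidean distance is enough to rank neighbors.
        ranked = sorted(
            (sum((a - b) ** 2 for a, b in zip(x, xi)), yi) for xi, yi in zip(self.X, self.y)
        )
        nearest = ranked[: self.k]
        return sum(yi for _, yi in nearest) / len(nearest)


class AdaBoostR2:
    """Sketch of AdaBoost.R2 (linear loss) with k-NN weak learners, written without recursion."""

    def __init__(self, n_learners: int = 10, k: int = 3, seed: int = 0):
        self.n_learners, self.k = n_learners, k
        self.rng = random.Random(seed)
        self.learners, self.alphas = [], []

    def fit(self, X, y):
        n = len(X)
        w = [1.0 / n] * n
        for _ in range(self.n_learners):
            # Train each learner on a resample drawn according to the current weights.
            idx = self.rng.choices(range(n), weights=w, k=n)
            model = KNNRegressor(self.k).fit([X[i] for i in idx], [y[i] for i in idx])
            errs = [abs(model.predict_one(X[i]) - y[i]) for i in range(n)]
            d = max(errs)
            losses = [e / d for e in errs] if d > 0 else [0.0] * n  # linear loss in [0, 1]
            avg_loss = sum(loss * wi for loss, wi in zip(losses, w))
            if avg_loss <= 0.0 or (avg_loss >= 0.5 and not self.learners):
                # Perfect fit, or a weak first learner: keep it with unit weight and stop.
                self.learners.append(model)
                self.alphas.append(1.0)
                break
            if avg_loss >= 0.5:  # average loss too high: discard this learner and stop
                break
            beta = avg_loss / (1.0 - avg_loss)
            # Rows with small loss are multiplied by a factor near beta, so hard rows gain weight.
            w = [wi * beta ** (1.0 - loss) for wi, loss in zip(w, losses)]
            total = sum(w)
            w = [wi / total for wi in w]
            self.learners.append(model)
            self.alphas.append(math.log(1.0 / beta))
        return self

    def predict_one(self, x):
        # Weighted median of the learners' predictions, weighted by log(1/beta).
        preds = sorted(zip((m.predict_one(x) for m in self.learners), self.alphas))
        half, cum = sum(self.alphas) / 2.0, 0.0
        for p, a in preds:
            cum += a
            if cum >= half:
                return p
        return preds[-1][0]
```

Combining learners with a weighted median rather than a weighted mean limits how much any single poor learner can pull the ensemble's prediction.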

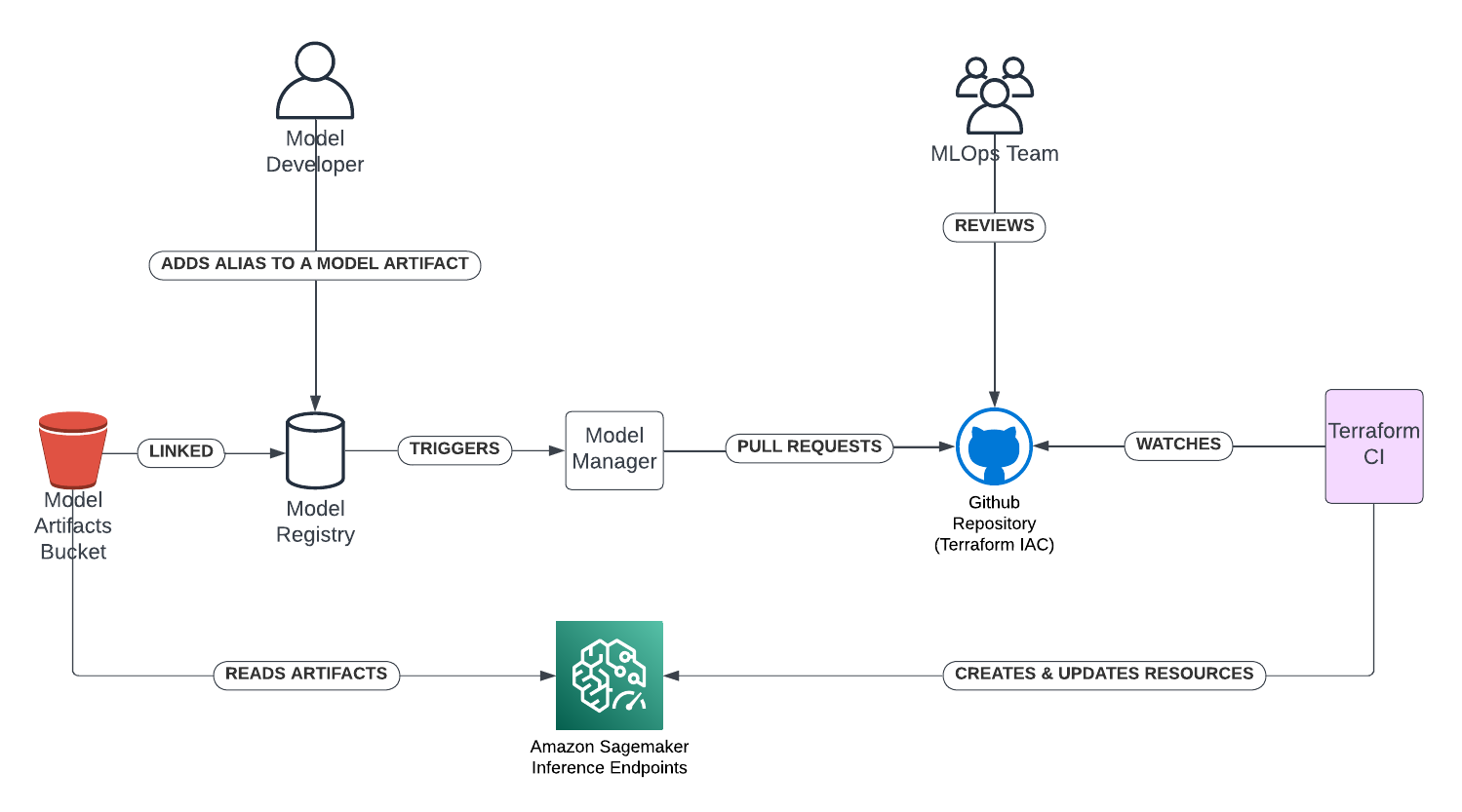

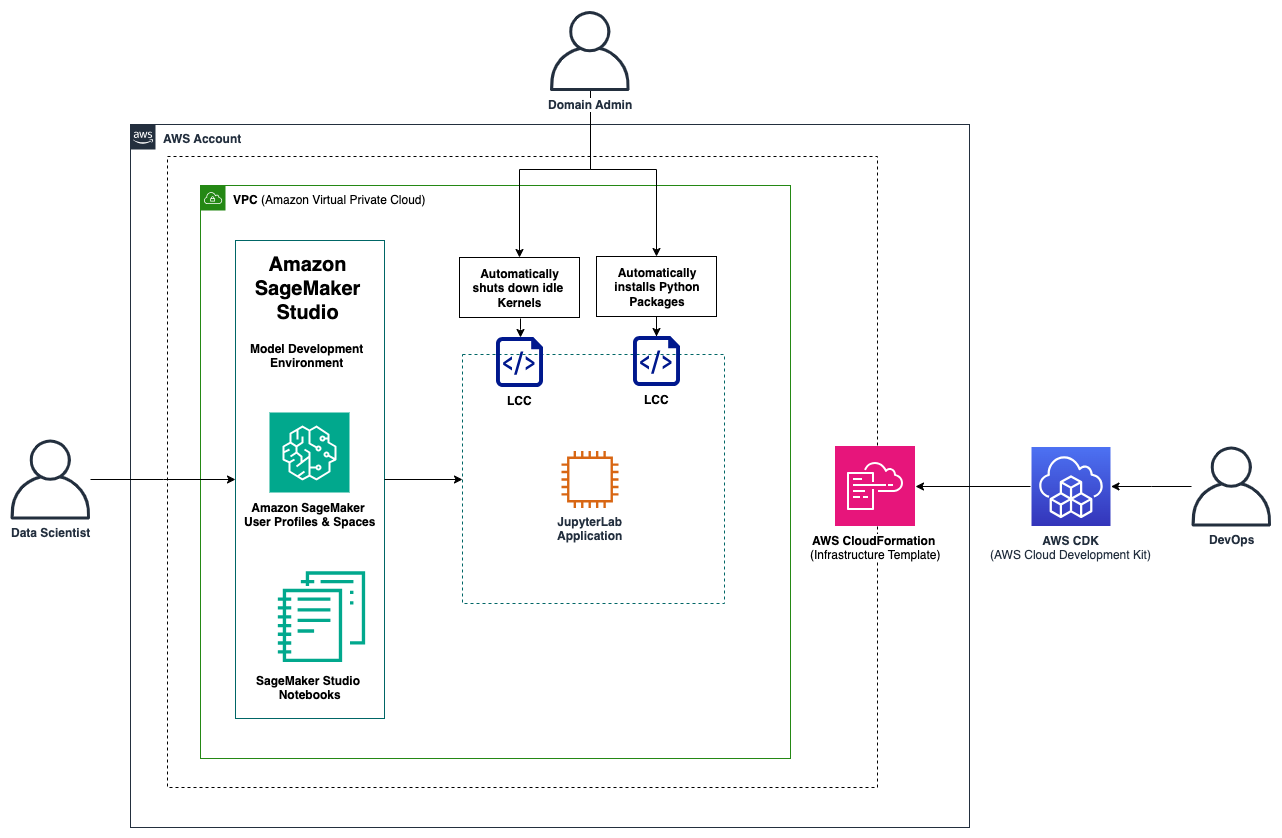

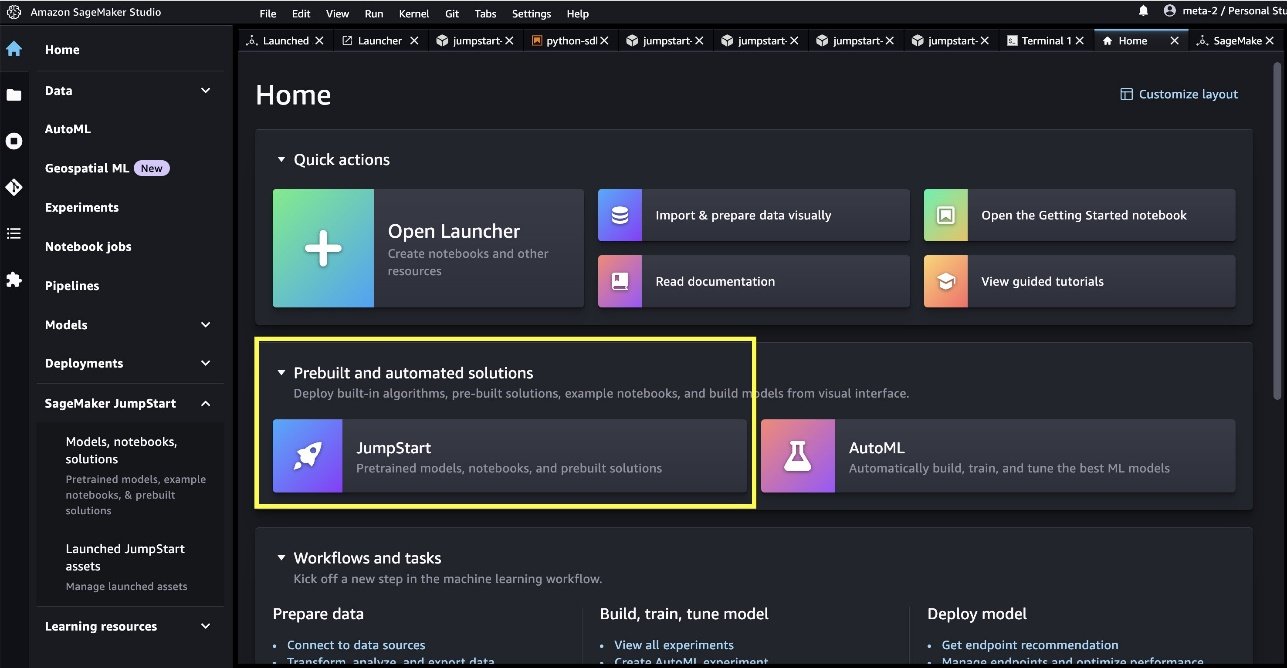

Learn how to set up lifecycle configurations for Amazon SageMaker Studio domains to automate behaviors like preinstalling libraries and shutting down idle kernels. Amazon SageMaker Studio is the first IDE designed to accelerate end-to-end ML development, offering customizable domain user profiles and shared workspaces for efficient project management.
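
As a sketch of the first step, the snippet below builds the request for registering a lifecycle configuration that preinstalls libraries. It assumes boto3's SageMaker `create_studio_lifecycle_config` operation, which expects the script content base64-encoded, and assumes the `JupyterLab` app type. The script contents, the configuration name, and the helper `lifecycle_config_request` are hypothetical, and the actual API call is left commented out so the example runs without AWS credentials.

```python
import base64

# Hypothetical startup script: preinstalls two libraries when the app starts.
SCRIPT = """#!/bin/bash
set -eux
pip install --quiet pandas scikit-learn
"""


def lifecycle_config_request(name: str, script: str, app_type: str = "JupyterLab") -> dict:
    """Build keyword arguments for the SageMaker create_studio_lifecycle_config call.

    The API expects the script content to be base64-encoded.
    """
    encoded = base64.b64encode(script.encode("utf-8")).decode("ascii")
    return {
        "StudioLifecycleConfigName": name,
        "StudioLifecycleConfigContent": encoded,
        "StudioLifecycleConfigAppType": app_type,
    }


if __name__ == "__main__":
    params = lifecycle_config_request("preinstall-libs", SCRIPT)
    print(params["StudioLifecycleConfigName"], params["StudioLifecycleConfigAppType"])
    # With AWS credentials configured, the request could be sent with:
    #   import boto3
    #   boto3.client("sagemaker").create_studio_lifecycle_config(**params)
```

A registered configuration still has to be attached to the domain or a user profile before Studio runs it.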

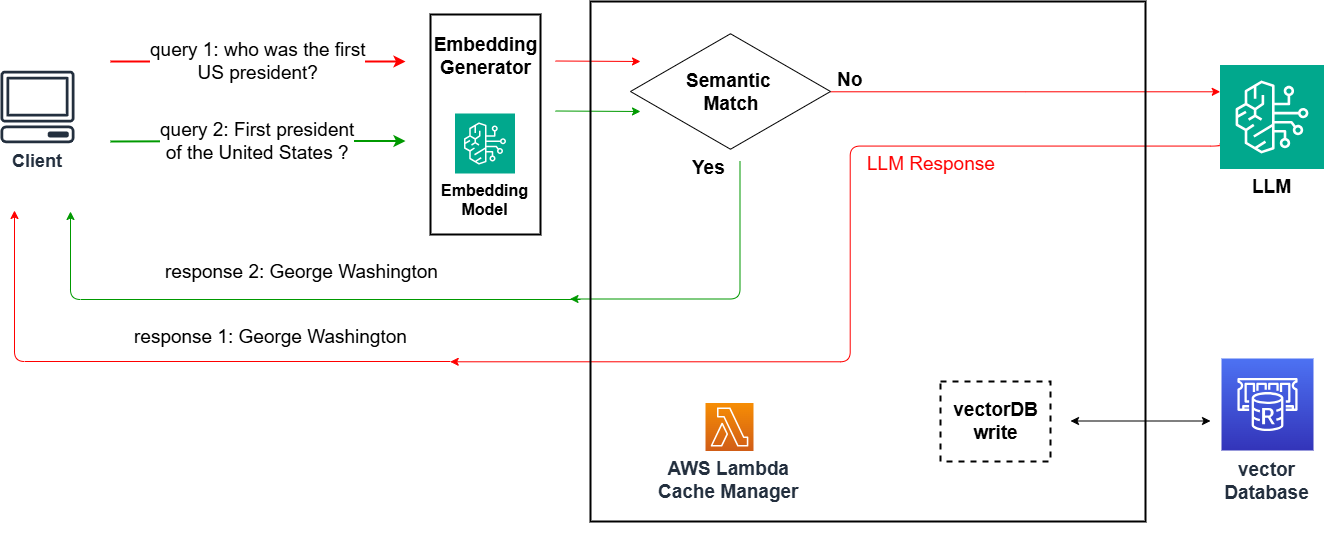

A serverless read-through caching blueprint can make LLM-based applications more efficient. It uses Amazon OpenSearch Serverless and Amazon Bedrock to build a semantic cache that improves response times, handles personalized prompts, and reduces cache collisions.
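
The read-through pattern can be sketched in a few lines. This is a minimal in-memory illustration, not the blueprint's code: a toy bag-of-words function stands in for an Amazon Bedrock embedding model, a Python dict stands in for the OpenSearch Serverless vector index, and `SemanticReadThroughCache`, `embed`, and the 0.9 similarity threshold are hypothetical. Partitioning entries by user is one assumed way to keep personalized answers from colliding.

```python
import math
import re
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticReadThroughCache:
    """Answer from cache on a close-enough match; otherwise call the LLM and store the result."""

    def __init__(self, llm: Callable[[str], str], threshold: float = 0.9):
        self.llm = llm
        self.threshold = threshold
        # Entries are partitioned by user so one user's personalized answer is never served to another.
        self.entries: dict[str, list[tuple[Counter, str]]] = {}

    def get(self, user_id: str, prompt: str) -> tuple[str, bool]:
        """Return (answer, cache_hit)."""
        query = embed(prompt)
        bucket = self.entries.setdefault(user_id, [])
        best = max(bucket, key=lambda e: cosine(query, e[0]), default=None)
        if best is not None and cosine(query, best[0]) >= self.threshold:
            return best[1], True
        answer = self.llm(prompt)  # cache miss: read through to the model
        bucket.append((query, answer))
        return answer, False


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        return "answer to: " + prompt  # stand-in for a model call

    cache = SemanticReadThroughCache(fake_llm, threshold=0.8)
    print(cache.get("alice", "What is my order status?")[1])          # False (miss)
    print(cache.get("alice", "What is my current order status?")[1])  # True (hit)
    print(cache.get("bob", "What is my order status?")[1])            # False (other user)
```

The threshold trades hit rate against the risk of serving an answer to a question that only looks similar; raising it reduces such collisions at the cost of more model calls.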

MIT scientists have developed a method that uses AI and physics to generate realistic satellite images of future flooding impacts, aiding hurricane preparation. The team's "Earth Intelligence Engine" offers a new visualization tool to help increase public readiness for evacuations during natural disasters.

Software engineer James McCaffrey designed a decision tree regression system in C# without recursion or pointers. He removed row indices from nodes to save memory, making debugging easier and predictions more interpretable.
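
A minimal Python sketch of the general idea (not McCaffrey's C# code): nodes live in a flat list, node i's children sit at indices 2i+1 and 2i+2 so no pointers are needed, and the tree is built breadth-first with a loop instead of recursion. Row indices are kept only in a temporary work list during construction, which is an assumption about how the memory saving might be achieved. The class name, the `max_depth` and `min_samples` parameters, and the sum-of-squared-errors split criterion are illustrative choices.

```python
from typing import List, Optional


class ArrayTreeRegressor:
    """Decision tree regressor stored in a flat list: children of node i are at 2i+1 and 2i+2."""

    def __init__(self, max_depth: int = 3, min_samples: int = 2):
        self.max_depth = max_depth
        self.min_samples = min_samples
        self.nodes: List[Optional[dict]] = []

    @staticmethod
    def _sse(values):
        if not values:
            return 0.0
        mean = sum(values) / len(values)
        return sum((v - mean) ** 2 for v in values)

    def _best_split(self, X, y, rows):
        """Return (feature, threshold, left_rows, right_rows) that lowers total SSE, or None."""
        best, best_cost = None, self._sse([y[r] for r in rows])
        for f in range(len(X[0])):
            for t in sorted({X[r][f] for r in rows})[:-1]:  # the largest value cannot split
                left = [r for r in rows if X[r][f] <= t]
                right = [r for r in rows if X[r][f] > t]
                cost = self._sse([y[r] for r in left]) + self._sse([y[r] for r in right])
                if cost < best_cost:
                    best, best_cost = (f, t, left, right), cost
        return best

    def fit(self, X, y):
        n_slots = 2 ** (self.max_depth + 1) - 1
        self.nodes = [None] * n_slots
        # Row indices live only in this temporary work list, not in the stored nodes.
        work: List[Optional[list]] = [None] * n_slots
        work[0] = list(range(len(X)))
        for i in range(n_slots):  # increasing index = breadth-first order, no recursion
            rows = work[i]
            if rows is None:
                continue
            value = sum(y[r] for r in rows) / len(rows)
            depth = (i + 1).bit_length() - 1
            split = None
            if depth < self.max_depth and len(rows) >= self.min_samples:
                split = self._best_split(X, y, rows)
            if split is None:
                self.nodes[i] = {"leaf": True, "value": value}
            else:
                f, t, left, right = split
                self.nodes[i] = {"leaf": False, "feature": f, "threshold": t, "value": value}
                work[2 * i + 1], work[2 * i + 2] = left, right
            work[i] = None  # release the indices once the node is built
        return self

    def predict_one(self, x):
        i = 0
        while not self.nodes[i]["leaf"]:
            node = self.nodes[i]
            i = 2 * i + 1 if x[node["feature"]] <= node["threshold"] else 2 * i + 2
        return self.nodes[i]["value"]
```

The trade-off of this layout is that a tree of depth d always reserves 2^(d+1) - 1 slots, even when many branches stop early.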

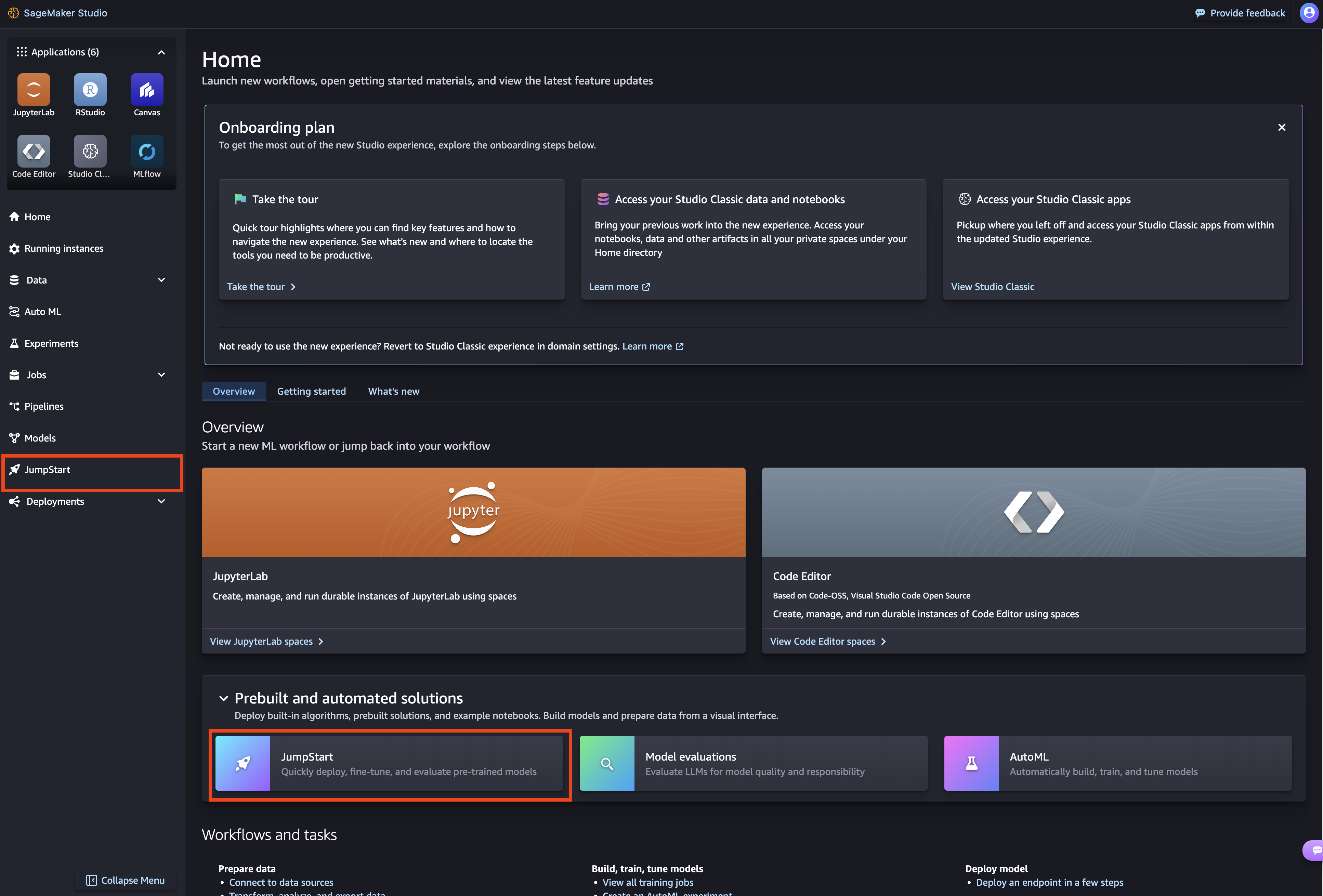

John Snow Labs' Medical LLM models on Amazon SageMaker JumpStart are optimized for medical language tasks, outperforming GPT-4o in summarization and question answering. These models improve efficiency and accuracy for medical professionals, supporting optimal patient care and healthcare outcomes.

Marzyeh Ghassemi combines her love of video games and health in her work at MIT, focusing on using machine learning to improve healthcare equity. Her research group at the Laboratory for Information and Decision Systems (LIDS) studies how biases in health data can affect machine learning models, highlighting the importance of diversity and inclusion in AI applications.

Far-right parties in Europe are using AI-generated fake images to demonize leaders such as Emmanuel Macron. Experts warn that generative AI has been weaponized in political campaigns since the EU elections.

Inference for the Meta Llama 3.1 8B and 70B models is now supported on AWS Trainium and Inferentia instances. SageMaker JumpStart offers secure deployment of pre-trained models, with options for customization and fine-tuning.

123RF faced cost and quality challenges in translating its metadata into 15 languages. It improved multilingual content discovery using Amazon OpenSearch Service and AI models such as Claude 3 Haiku.

Generative AI tools like ChatGPT and Claude are rapidly gaining popularity, reshaping society and the economy. Despite advancements, economists and AI practitioners still lack a comprehensive understanding of AI's economic impact.