Preference alignment (PA) improves Large Language Models (LLMs) by aligning model behavior with human feedback, which has helped make LLMs more accessible and popular in Generative AI. Reinforcement learning from human feedback (RLHF) with multi-adapter PPO on Amazon SageMaker offers a comprehensive, user-friendly way to implement PA, improving model performance and alignment with users.
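The core of PPO-based RLHF is a clipped policy-gradient objective. The sketch below shows only that clipped surrogate loss over a batch of token log-probabilities. It is an illustration under simplifying assumptions, not the SageMaker implementation: the multi-adapter setup, reward model, value head, and KL penalty against a reference model are all omitted, and `ppo_clipped_loss` is a hypothetical helper name.

```python
import math

def ppo_clipped_loss(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate loss (to be minimized) over a batch of tokens.

    Hypothetical helper for illustration; reward modeling and the KL penalty
    used in full RLHF pipelines are intentionally left out.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = max(min(ratio, 1.0 + clip_eps), 1.0 - clip_eps)
        # PPO keeps the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return -total / len(advantages)
```

Clipping limits how far a single update can move the policy away from the one that generated the samples, which is what keeps RLHF fine-tuning stable.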

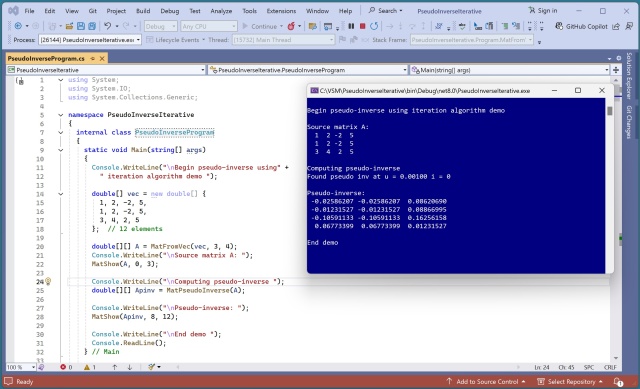

A research paper presents an elegant new iterative technique for computing the Moore-Penrose pseudo-inverse of a matrix. The method uses gradients from calculus and an iterative update loop to converge toward the true pseudo-inverse, much like the way neural networks are trained.
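The general idea can be illustrated with a standard textbook approach, which is not necessarily the paper's exact method. Gradient descent is run on f(X) = ½‖AX − I‖²_F starting from X = 0. Every update lies in the row space of A, so the iterates converge to the minimum-norm least-squares solution, which is A⁺. The step size 1/σ_max² is an assumption chosen to guarantee convergence.

```python
import numpy as np

def pinv_gradient_descent(A, lr=None, steps=5000):
    """Approximate the Moore-Penrose pseudo-inverse by gradient descent.

    Textbook sketch (not the paper's algorithm): minimize
    f(X) = 0.5 * ||A X - I||_F^2 starting from X = 0. Starting at zero keeps
    every iterate in the row space of A, so X converges to A^+.
    Assumes A is not the all-zero matrix.
    """
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    if lr is None:
        # Any step size below 2 / sigma_max^2 converges; use 1 / sigma_max^2.
        lr = 1.0 / np.linalg.norm(A, 2) ** 2
    X = np.zeros((n, m))
    I = np.eye(m)
    for _ in range(steps):
        grad = A.T @ (A @ X - I)  # gradient of f with respect to X
        X -= lr * grad
    return X
```

Convergence speed depends on the ratio of the smallest nonzero to the largest singular value, so poorly conditioned matrices need many more iterations than a direct SVD-based `np.linalg.pinv`.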

Nvidia and Tesla stocks soared after the US election, signaling a tech boom. Trump praised Elon Musk as a "super genius."

Stability AI's generative AI is transforming visual content creation in the media, advertising, and entertainment industries. The new models available in Amazon Bedrock offer improved text-to-image capabilities, boosting creativity and efficiency in marketing and storytelling.

Amazon Bedrock Agents bring generative AI to customer support by integrating enterprise data APIs to produce personalized responses. In an example from an automotive parts retailer, AI agents call inventory and catalog APIs to give customers detailed product information instantly.
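An agent typically reaches an enterprise API through an action group backed by an AWS Lambda function. The sketch below is a hypothetical inventory handler for an action group defined by an OpenAPI schema. The event and response shape follows our reading of the Bedrock Agents Lambda documentation and should be verified against the current docs. The `/inventory` path, the `partId` parameter, and the inventory data are all invented for illustration.

```python
import json

# Hypothetical in-memory stand-in for the retailer's inventory API.
INVENTORY = {
    "BRK-1001": {"name": "Ceramic brake pads", "in_stock": 42},
    "FLT-2002": {"name": "Oil filter", "in_stock": 0},
}

def lambda_handler(event, context):
    """Answer a Bedrock Agents action-group call (OpenAPI-schema style).

    Event/response field names are assumed from the Bedrock Agents Lambda
    docs; the /inventory path and partId parameter are hypothetical.
    """
    # Parameters arrive as a list of {"name", "type", "value"} dictionaries.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    part = INVENTORY.get(params.get("partId"))
    if event.get("apiPath") == "/inventory" and part is not None:
        status, body = 200, {"partId": params["partId"], **part}
    else:
        status, body = 404, {"error": "unknown part or API path"}
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "apiPath": event["apiPath"],
            "httpMethod": event["httpMethod"],
            "httpStatusCode": status,
            # The agent reads the JSON body and turns it into a natural-language reply.
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }
```

In a real deployment, the dictionary lookup would be replaced by a call to the retailer's actual inventory or catalog service.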

Ai-Da, an advanced humanoid robot, sold a portrait of Alan Turing for $1.08m at a Sotheby's auction, exceeding expectations. The 2.2-metre artwork, titled A.I. God, marks a milestone as the first robot-created piece to be auctioned.

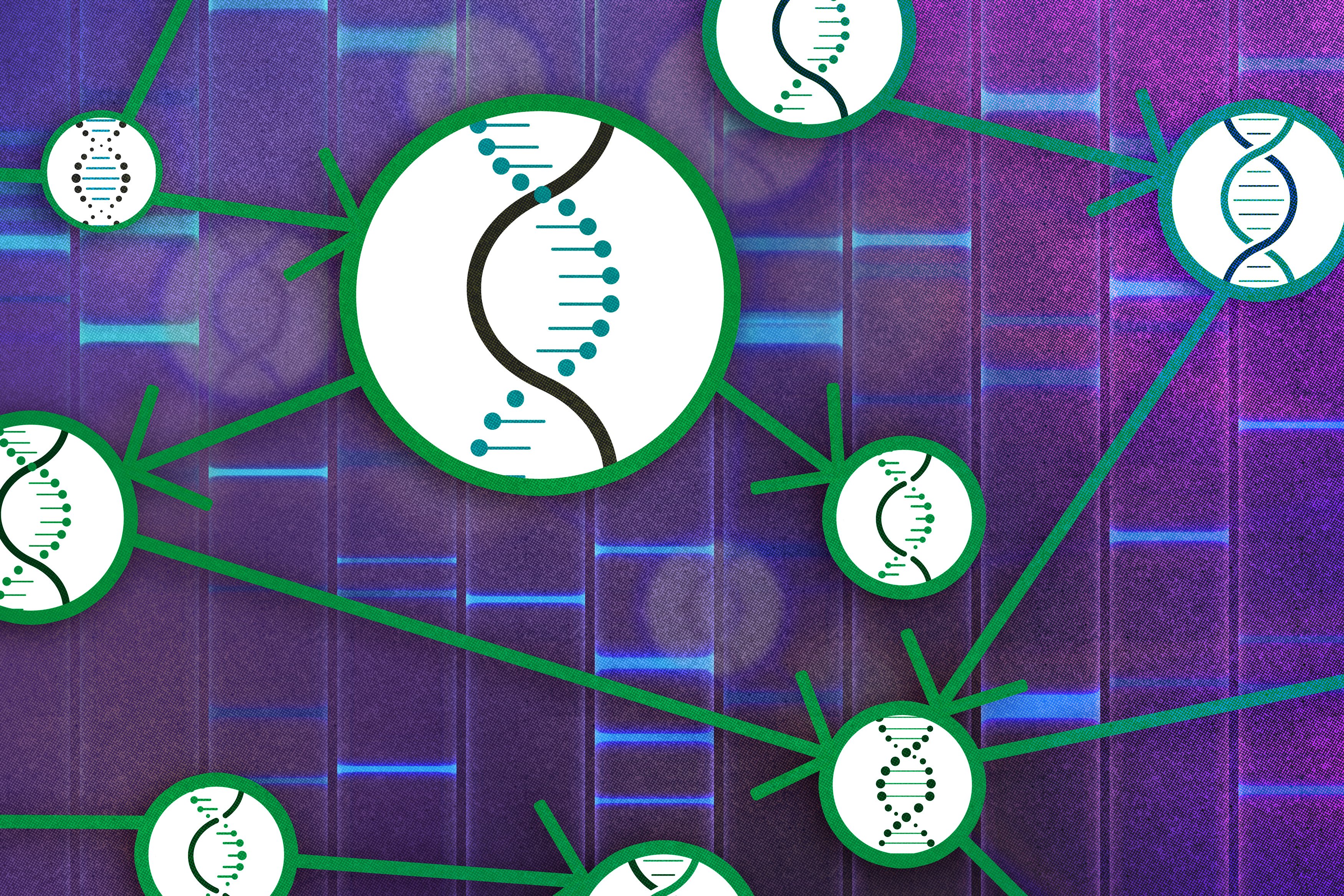

MIT researchers have developed a method that efficiently groups genes using only observational data, enabling the discovery of underlying cause-and-effect relationships. By identifying potential gene targets more accurately and cost-effectively, the technique could lead to more precise treatments for diseases.

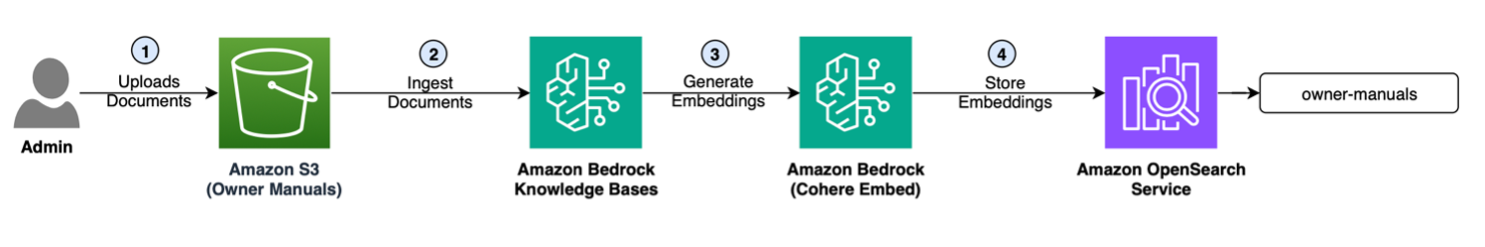

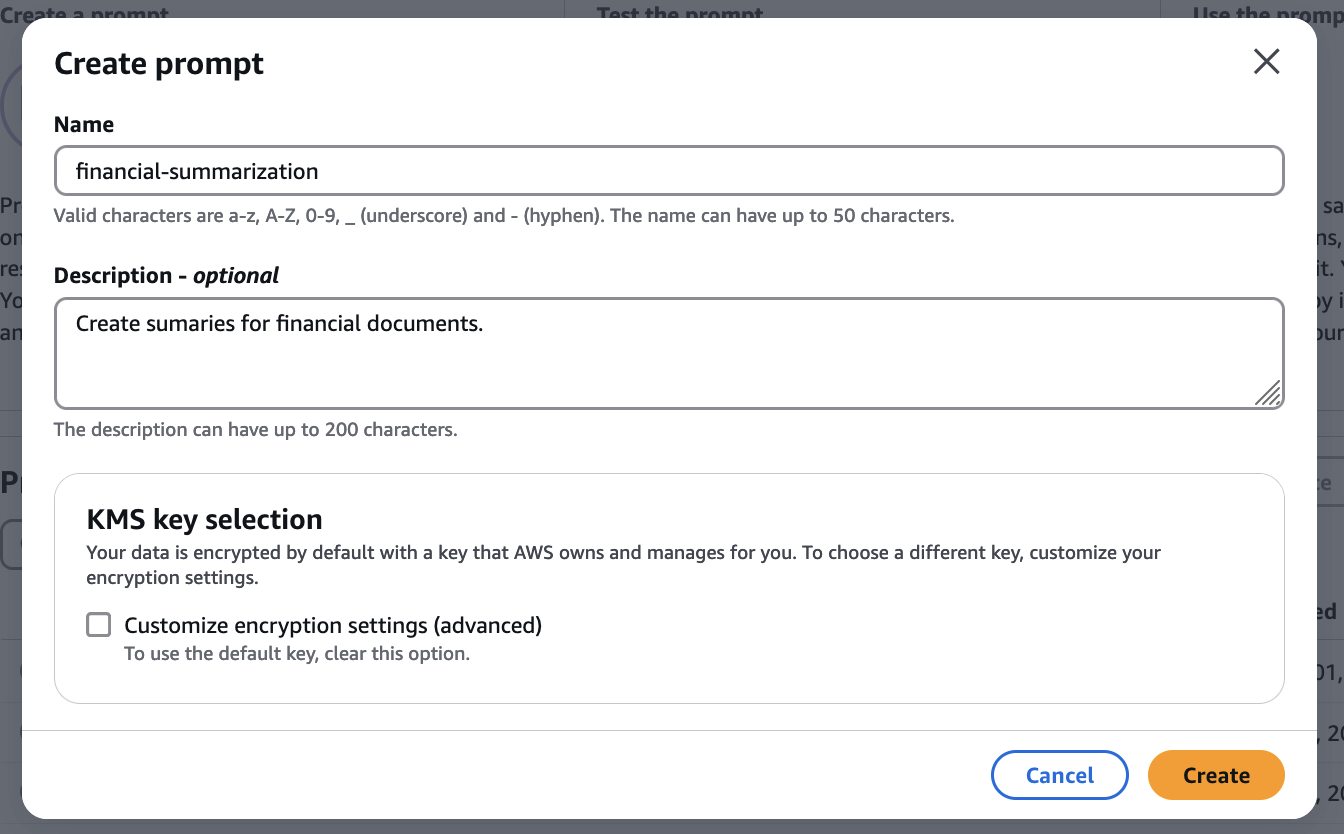

Amazon Bedrock Prompt Management simplifies creating prompts and integrating them into applications to get better AI responses. New features include structured prompts and API integration.
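To our understanding, prompt templates in Bedrock Prompt Management mark variables with double curly braces, as in `{{topic}}`, and the variables are filled in when the prompt is invoked. The sketch below is not the Bedrock API. It is a hypothetical local `render_prompt` helper that illustrates what filling such a template involves, including failing loudly when a variable is missing.

```python
import re

# Matches {{name}} or {{ name }} placeholders (double-brace syntax assumed).
VARIABLE = re.compile(r"\{\{\s*(\w+)\s*\}\}")

def render_prompt(template: str, variables: dict) -> str:
    """Fill {{name}} placeholders; raise KeyError if any variable is missing.

    Hypothetical local helper, not part of any AWS SDK.
    """
    missing = sorted(set(VARIABLE.findall(template)) - set(variables))
    if missing:
        raise KeyError(f"missing prompt variables: {missing}")
    return VARIABLE.sub(lambda m: str(variables[m.group(1)]), template)
```

Keeping templates and their variables separate from application code is what allows a managed prompt to be versioned and reused across applications.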

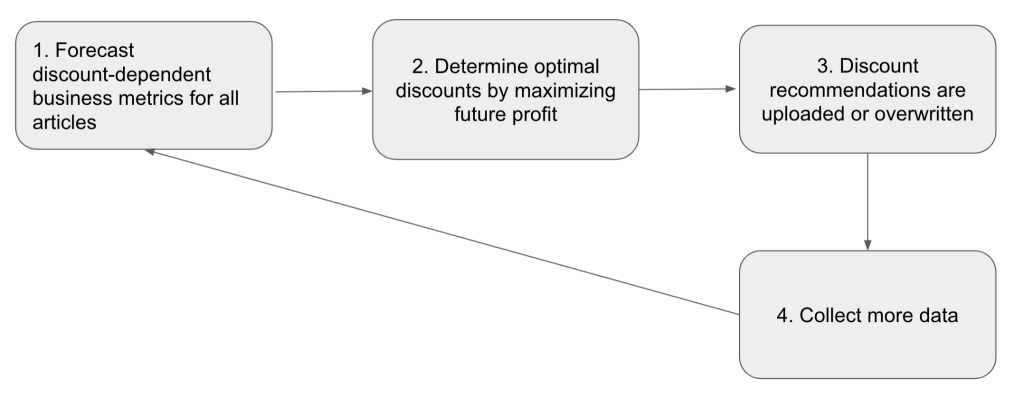

Zalando addresses markdown pricing with algorithms that choose discounts to maximize profit. Its forecast-then-optimize approach uses historical data to forecast item-level demand and stock levels, and it enriches the training sets to produce accurate discount-dependent forecasts.
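A toy version of forecast-then-optimize makes the two stages concrete. This is a sketch under strong simplifying assumptions, not Zalando's model. The forecast step fits a hypothetical log-linear demand curve, log(units) = a + b·discount, to past (discount, units) pairs. The optimize step then picks the discount from a grid that maximizes expected profit, with sales capped at the available stock.

```python
import math

def fit_demand(history):
    """Forecast step: fit log(units) = a + b * discount by ordinary least squares.

    `history` is a list of (discount, units_sold) pairs with units_sold > 0.
    The log-linear demand form is an illustrative assumption.
    """
    xs = [d for d, _ in history]
    ys = [math.log(u) for _, u in history]
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = y_mean - b * x_mean
    return lambda discount: math.exp(a + b * discount)

def best_discount(demand, price, unit_cost, stock, grid):
    """Optimize step: choose the grid discount with the highest expected profit."""
    def profit(d):
        units = min(demand(d), stock)  # cannot sell more than is in stock
        return units * (price * (1 - d) - unit_cost)
    return max(grid, key=profit)
```

For example, with demand following 10·e^(4d), a price of 100, a unit cost of 20, and only 30 units in stock, a 30% discount is the grid point that roughly clears the stock. Deeper discounts only give margin away.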

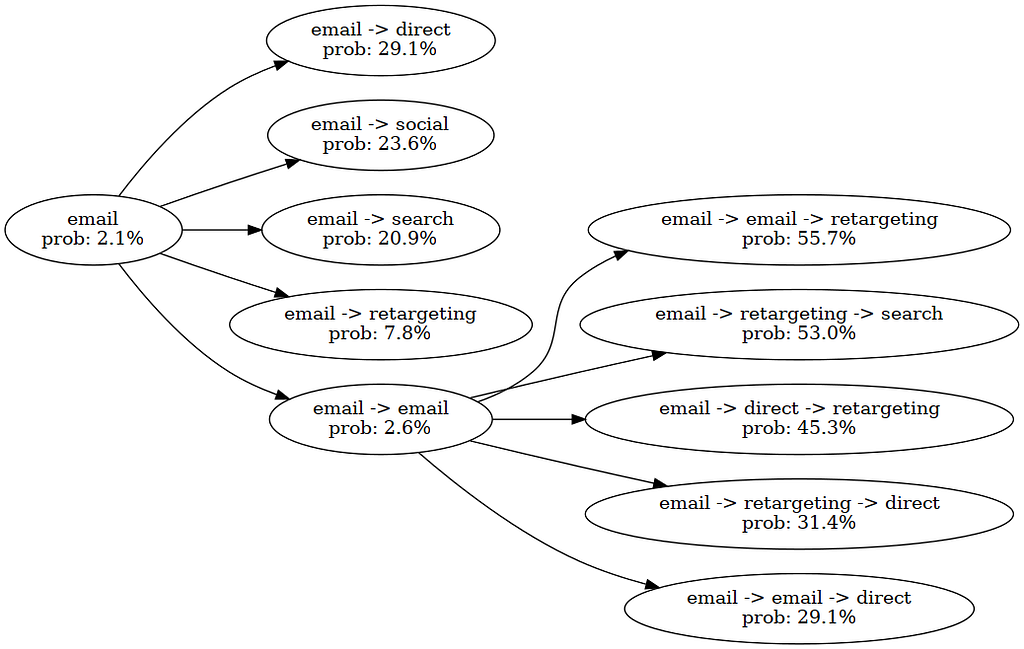

ML models can design optimal customer journeys by combining deep learning with optimization techniques. Traditional attribution models fall short because they are position-agnostic, blind to context, and assign static values to channels.
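A minimal illustration of the "position-agnostic" problem uses a classic linear attribution rule. Every touchpoint gets an equal share of the conversion, so two journeys that visit the same channels in opposite order receive identical credit. The channel names below are hypothetical.

```python
from collections import defaultdict

def linear_attribution(path, conversion_value=1.0):
    """Classic linear attribution: each touchpoint gets an equal share of credit.

    The rule ignores where a touch happens in the journey (position-agnostic)
    and any context around it.
    """
    credit = defaultdict(float)
    for channel in path:
        credit[channel] += conversion_value / len(path)
    return dict(credit)

# Two hypothetical journeys that touch the same channels in opposite order.
journey_a = ["display", "search", "email"]
journey_b = ["email", "search", "display"]
print(linear_attribution(journey_a) == linear_attribution(journey_b))  # True
```

A learned model can instead condition each touchpoint's contribution on its position and context, which is the gap the ML approach aims to close.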

AI-generated videos are both hilarious and concerning, and the technology could replace as many as 200,000 entertainment jobs. Despite opposition, some artists are optimistically exploring what they see as the digital miracles of Generative AI.

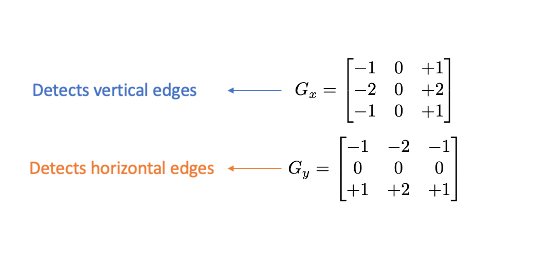

Histogram of Oriented Gradients (HOG) is a key feature extraction algorithm for object detection and recognition. It uses gradient magnitude and orientation to build meaningful histograms. The algorithm computes gradient images, builds histograms of gradient orientations over small cells, and normalizes them to reduce the effect of lighting variations.
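The three HOG stages map directly onto a compact NumPy sketch for a grayscale image. The defaults (8×8-pixel cells, 2×2-cell blocks, 9 unsigned orientation bins) follow the common Dalal-Triggs configuration. For brevity, the sketch uses hard binning and plain L2 block normalization instead of the bilinear vote interpolation and L2-Hys clipping used in full implementations, so its output will not match library HOG functions exactly.

```python
import numpy as np

def hog(image, cell=8, block=2, bins=9, eps=1e-6):
    """Simplified HOG descriptor for a 2D grayscale image (unsigned gradients).

    Assumes the image spans at least `block` cells in each direction.
    """
    img = np.asarray(image, dtype=float)
    # 1. Gradient images with centered [-1, 0, 1] differences.
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    magnitude = np.hypot(gx, gy)
    orientation = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # fold to [0, 180)

    # 2. Magnitude-weighted orientation histogram for each cell (hard binning).
    n_cy, n_cx = img.shape[0] // cell, img.shape[1] // cell
    bin_width = 180.0 / bins
    hist = np.zeros((n_cy, n_cx, bins))
    for i in range(n_cy):
        for j in range(n_cx):
            rows = slice(i * cell, (i + 1) * cell)
            cols = slice(j * cell, (j + 1) * cell)
            mag = magnitude[rows, cols].ravel()
            ang = orientation[rows, cols].ravel()
            # Clamp guards against angles that round up to exactly 180 degrees.
            idx = np.minimum((ang // bin_width).astype(int), bins - 1)
            hist[i, j] = np.bincount(idx, weights=mag, minlength=bins)

    # 3. L2-normalize overlapping blocks of cells to reduce lighting effects.
    features = []
    for i in range(n_cy - block + 1):
        for j in range(n_cx - block + 1):
            v = hist[i:i + block, j:j + block].ravel()
            features.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(features)
```

Because each block is normalized, scaling the image brightness by a constant factor leaves the descriptor essentially unchanged. A 32×32 image produces 3×3 blocks of 36 values, for 324 features in total.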

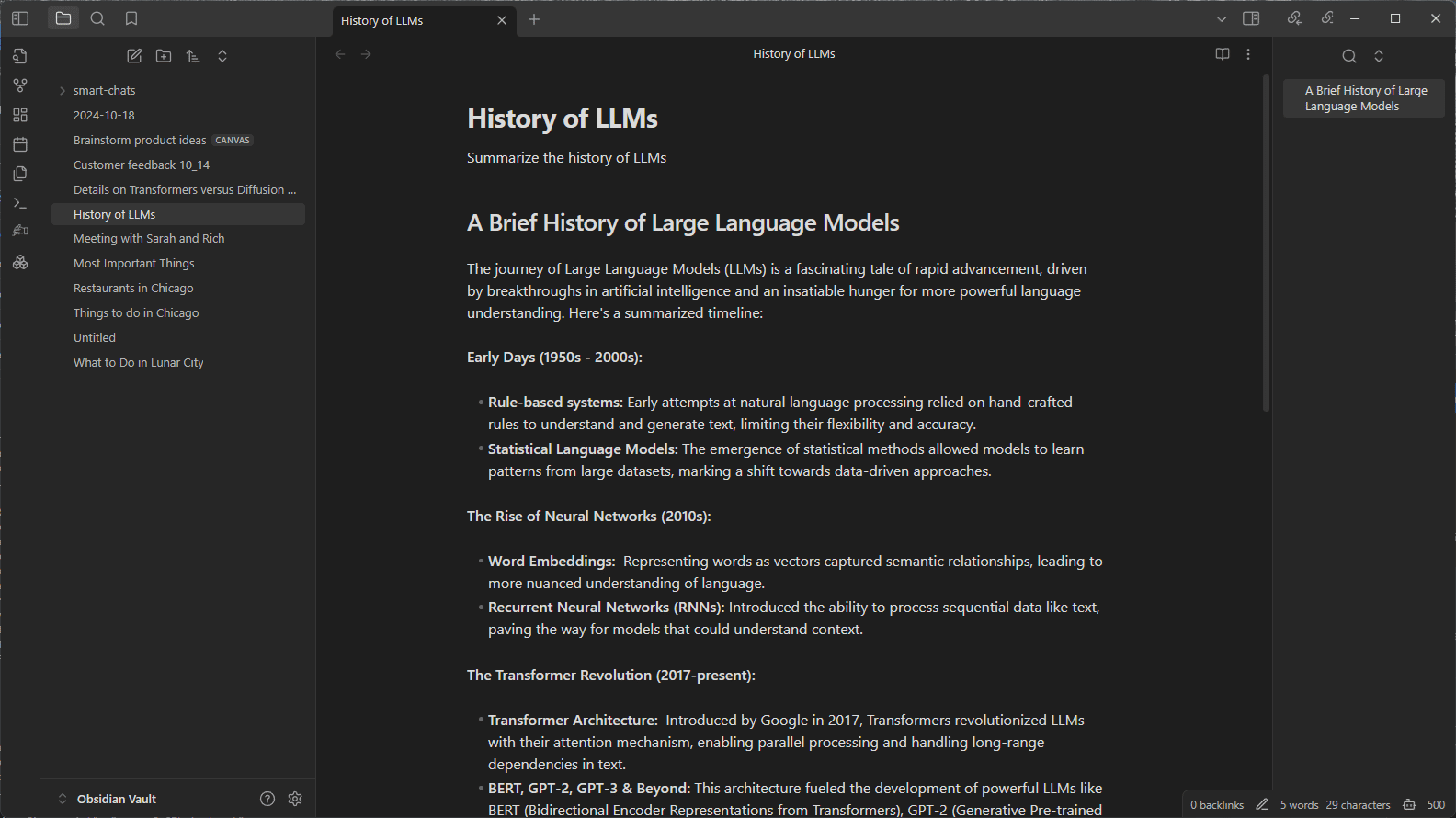

AI enthusiasts are integrating large language models into their productivity workflows using applications like Obsidian and plug-ins like Text Generator and Smart Connections. By connecting Obsidian to LM Studio, users can generate notes with a 27B-parameter LLM accelerated by RTX GPUs, supporting tasks that range from web browsing to complex project management.
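LM Studio can serve a loaded model through an OpenAI-compatible local HTTP server, which by default listens at `http://localhost:1234/v1`. Plug-ins of this kind connect to that endpoint. The sketch below makes the same kind of chat-completion call directly. The model identifier `local-model` and the system prompt are hypothetical placeholders. Only `summarize_note` needs the LM Studio server to be running.

```python
import json
import urllib.request

# LM Studio's default local server address (OpenAI-compatible chat endpoint).
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"

def build_note_request(note_text, model="local-model", url=LM_STUDIO_URL):
    """Build a chat-completion request asking a local model to tidy a note."""
    payload = {
        "model": model,  # hypothetical; use the model name LM Studio displays
        "messages": [
            {"role": "system", "content": "Turn rough notes into a concise Markdown summary."},
            {"role": "user", "content": note_text},
        ],
        "temperature": 0.3,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def summarize_note(note_text):
    """Send the request; requires LM Studio's local server to be running."""
    with urllib.request.urlopen(build_note_request(note_text), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Because the endpoint speaks the OpenAI wire format, the same request shape works with any OpenAI-compatible client pointed at the local URL.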

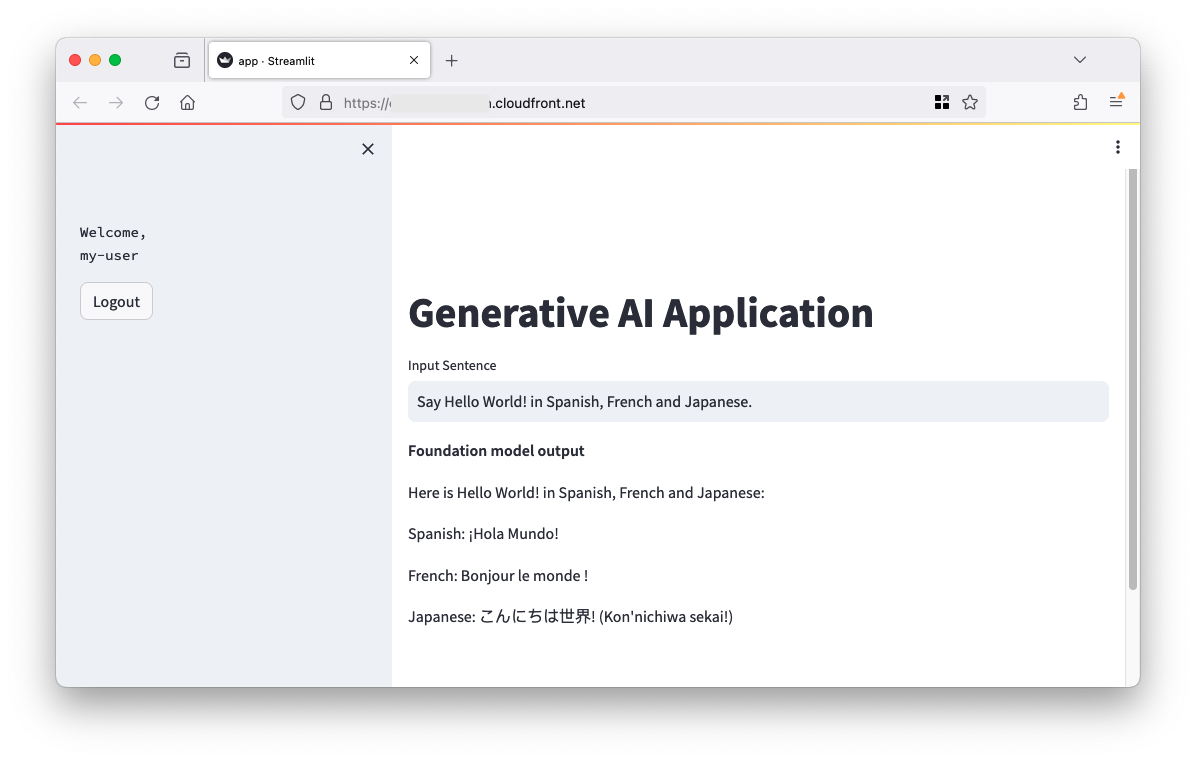

Generative AI offers new possibilities, but many data scientists struggle with building user interfaces. AWS simplifies creating generative AI apps by combining Streamlit with key services such as Amazon ECS and Amazon Cognito.

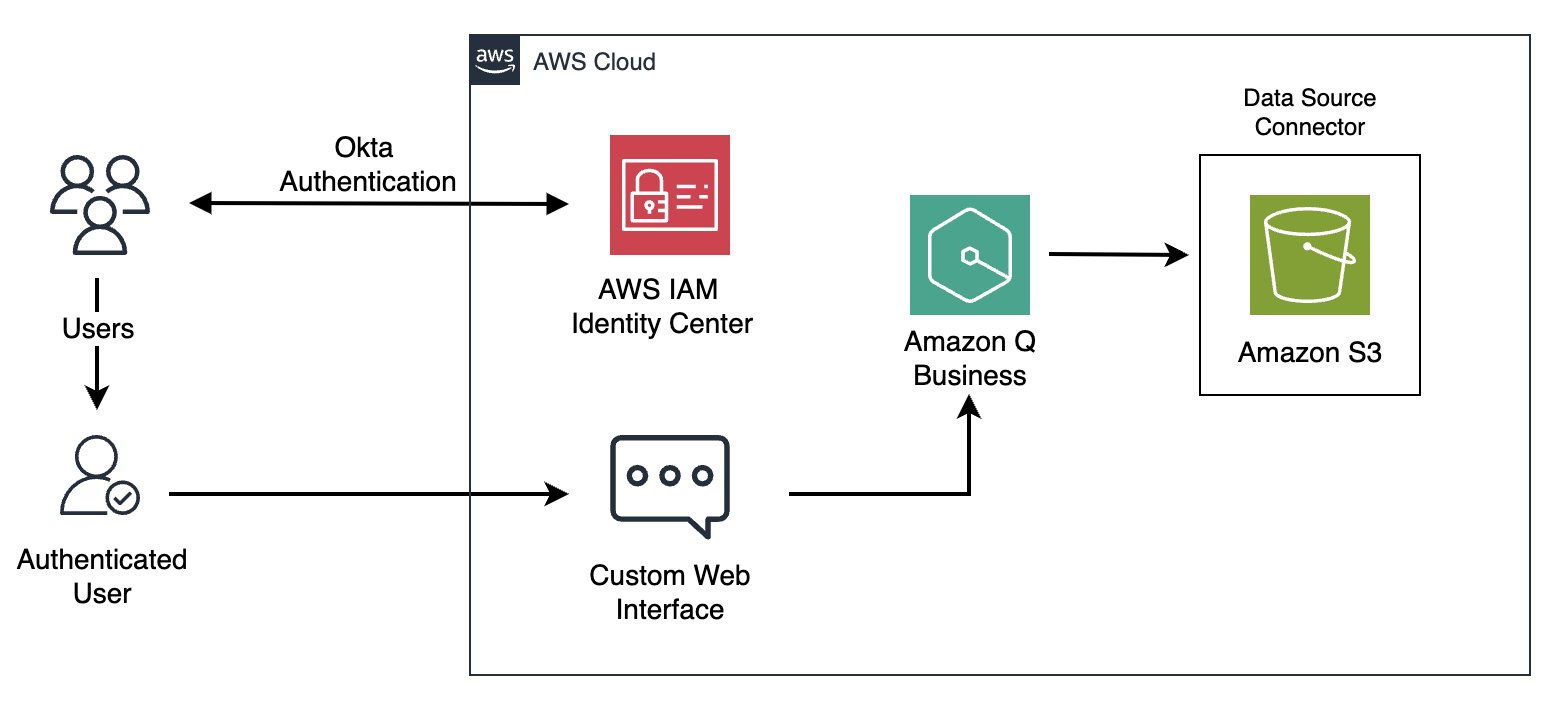

Hearst overcame challenges facing its Cloud Center of Excellence (CCoE) with a self-service generative AI assistant for cloud governance. Built on Amazon Q Business, the assistant scaled best-practice guidance and freed the CCoE to focus on higher-value tasks.