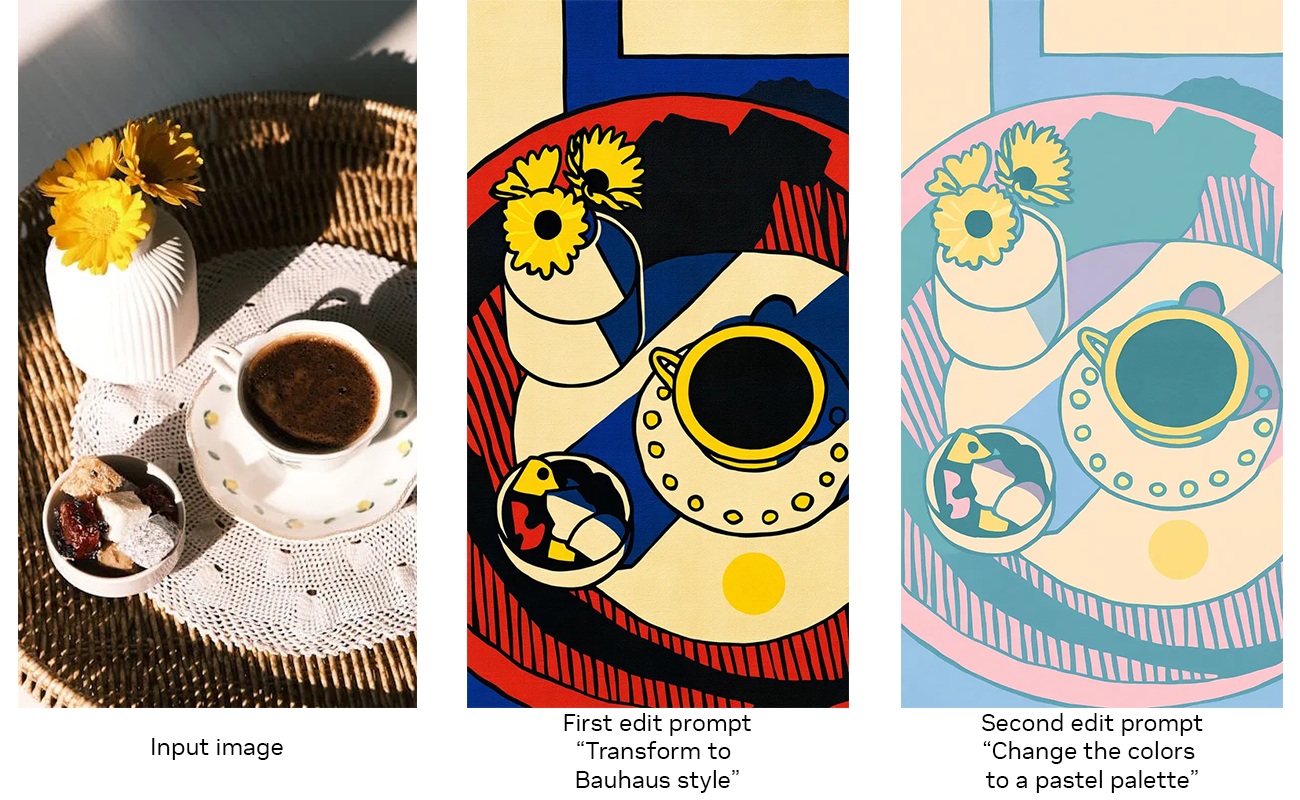

Black Forest Labs introduces FLUX.1 Kontext, an AI model for image generation and editing. Through a collaboration with NVIDIA, the model offers faster edits and smoother iteration for creators using RTX GPUs.

Tech firms win battles over copyrighted text. Google's AI electricity demand disrupts green efforts.

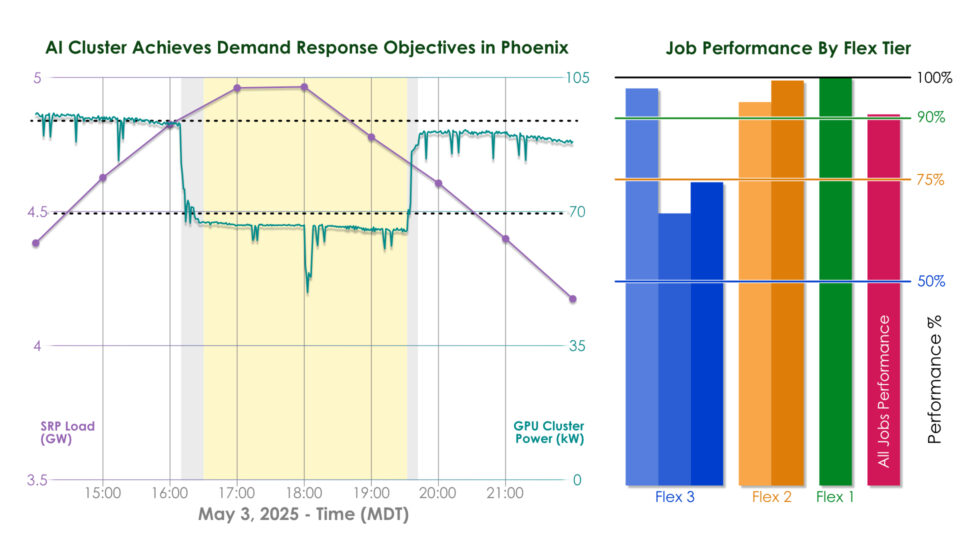

Emerald AI is developing a solution to enable AI factories to come online sooner by optimizing energy usage. The startup's AI-powered platform reduces power consumption by 25% during grid stress events, showcasing potential for data center flexibility and clean energy integration.

Tech companies propose tracking devices implanted under the skin, robots for containment, and driverless vehicles to address the UK justice crisis. Justice secretary Shabana Mahmood seeks deeper collaboration on innovative solutions such as wearable tech and geolocation monitoring.

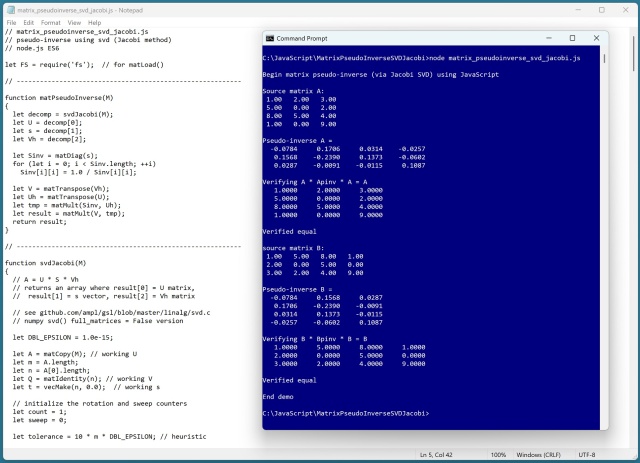

Porting matrix pseudo-inverse code from C# to JavaScript during a flight. The piece demonstrates the SVD method for computing the pseudo-inverse of a matrix.
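The blurb does not reproduce the author's C# or JavaScript code, so the following is only a minimal Python sketch of the general idea: factor a matrix as A = U Σ Vᵀ, invert the non-negligible singular values, and form A⁺ = V Σ⁺ Uᵀ. The function name `pinv_svd`, the `tol` cutoff, and the choice of a one-sided Jacobi iteration to obtain the SVD are all assumptions made for illustration, not the article's method.

```python
import math


def pinv_svd(a, tol=1e-10, max_sweeps=50):
    """Moore-Penrose pseudo-inverse of a list-of-lists matrix via a Jacobi SVD."""
    m, n = len(a), len(a[0])
    if m < n:
        # Work on the transpose so rows >= columns; pinv(A) = pinv(A^T)^T.
        at = [list(col) for col in zip(*a)]
        return [list(col) for col in zip(*pinv_svd(at, tol, max_sweeps))]

    w = [[float(x) for x in row] for row in a]  # becomes U * Sigma
    v = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

    for _ in range(max_sweeps):
        rotated = False
        for p in range(n - 1):
            for q in range(p + 1, n):
                alpha = sum(row[p] * row[p] for row in w)
                beta = sum(row[q] * row[q] for row in w)
                gamma = sum(row[p] * row[q] for row in w)
                if abs(gamma) <= 1e-14 * math.sqrt(alpha * beta):
                    continue  # columns p and q are already orthogonal
                rotated = True
                zeta = (beta - alpha) / (2.0 * gamma)
                t = math.copysign(1.0, zeta) / (abs(zeta) + math.sqrt(1.0 + zeta * zeta))
                c = 1.0 / math.sqrt(1.0 + t * t)
                s = c * t
                # Apply the same plane rotation to columns p, q of both w and v.
                for row in w + v:
                    row[p], row[q] = c * row[p] - s * row[q], s * row[p] + c * row[q]
        if not rotated:
            break

    # Column norms of w are the singular values; column j of w equals sigma_j * u_j.
    sigma = [math.sqrt(sum(row[j] ** 2 for row in w)) for j in range(n)]
    cutoff = tol * max(sigma)
    # A+ = sum over kept j of v_j * u_j^T / sigma_j = v_j * w_j^T / sigma_j^2.
    return [
        [sum(v[i][j] * w[k][j] / sigma[j] ** 2 for j in range(n) if sigma[j] > cutoff)
         for k in range(m)]
        for i in range(n)
    ]
```

Calling `pinv_svd([[1, 2], [3, 4], [5, 6]])` returns a 2×3 matrix. Singular values below `tol` times the largest one are treated as zero, which is what lets the sketch handle rank-deficient input instead of dividing by a near-zero value.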

Humanoid robots struggle in three-a-side football match in Beijing, showcasing AI technology in action. Human footballers may face competition from AI-powered robots in the future.

Growing recognition of risks in unregulated AI development. Power dynamics at play, sparking distrust in technology.

Scientific productivity is declining, but FutureHouse aims to accelerate research with an AI platform specialized for information synthesis and data analysis. Founders Sam Rodriques and Andrew White believe AI agents can break through science bottlenecks and solve pressing problems using natural language.

Large language models like GPT-x rely on the attention mechanism within Transformers to process input sentences. The process involves tokenization, word embedding, positional encoding, and attention, which together produce a numeric representation of the source input sentence.
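The four steps named above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how any GPT model is implemented: tokenization is plain whitespace splitting, the embedding table is filled with seeded random numbers instead of learned weights, the positional encoding is the sinusoidal scheme, and attention uses the inputs directly as queries, keys, and values with no learned projection matrices. All function names here are hypothetical.

```python
import math
import random


def positional_encoding(seq_len, d_model):
    """Sinusoidal position vectors: sine on even dimensions, cosine on odd ones."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product attention with Q = K = V = x (no learned projections)."""
    d_k = len(x[0])
    weights = [
        softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in x])
        for q in x
    ]
    out = [
        [sum(w * v[j] for w, v in zip(row, x)) for j in range(d_k)]
        for row in weights
    ]
    return out, weights


def encode(sentence, d_model=8, seed=0):
    """Tokenize, embed, add positions, then apply one self-attention step."""
    tokens = sentence.lower().split()  # assumption: whitespace tokenizer
    rng = random.Random(seed)
    table = {}
    for tok in tokens:
        if tok not in table:  # assumption: random vectors stand in for learned embeddings
            table[tok] = [rng.uniform(-1.0, 1.0) for _ in range(d_model)]
    pe = positional_encoding(len(tokens), d_model)
    x = [[e + p for e, p in zip(table[tok], pos)] for tok, pos in zip(tokens, pe)]
    out, weights = self_attention(x)
    return tokens, out, weights
```

Calling `encode("the cat sat on the mat")` returns the token list, one output vector per token, and a square matrix of attention weights in which each row sums to 1. The two occurrences of "the" share an embedding but receive different positional vectors, which is how the model can tell them apart.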

Adzuna finds UK entry-level job vacancies have dropped 32% since the launch of ChatGPT. AI chatbot leads to workforce downsizing.

AI enters personal chat: Anthropic's Claude AI helps tech entrepreneur support friend after mother's death. What does this mean for human relationships?

Organizations rely on visual documentation for complex information, but often struggle with accessing insights from images. Amazon Q Business's custom document enrichment feature now allows processing standalone image files, enabling comprehensive insights and natural language queries for decision-making.

Microsoft unveils AI system outperforming human doctors in health diagnoses, paving the way to 'medical superintelligence'. Led by Mustafa Suleyman, the company's AI unit mimics expert physicians in handling complex cases.

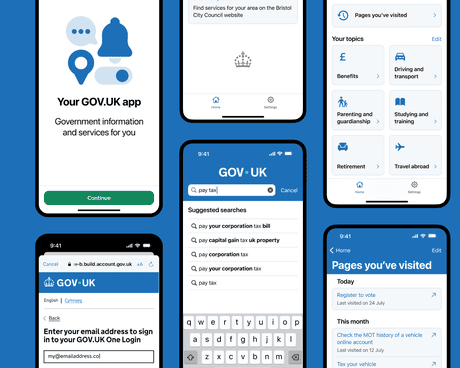

UK citizens can now download the gov.uk app for streamlined interactions with the government, featuring AI chatbot and digital driving licences. App's limited functions and design issues acknowledged by cabinet minister.

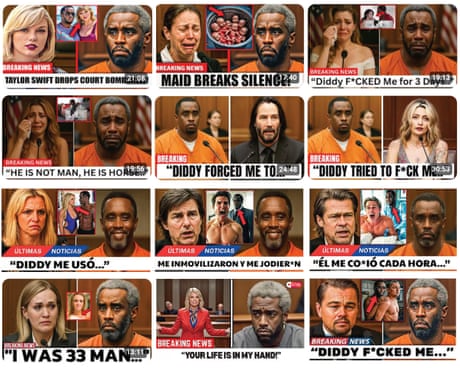

YouTube channels exploit AI to generate fake content about Sean 'Diddy' Combs trial, attracting millions of views for profit. Indicator and The Guardian uncover digital deception involving celebrity misinformation.