Amazon Bedrock prompt caching improves AI-assisted coding with Claude Code by reducing latency and token costs. Claude Code manages cache points automatically, so repeated context is reused rather than reprocessed on every request, which speeds up responses and lowers costs.

Dario Amodei, CEO of Anthropic, predicts that AI could eliminate half of all entry-level white-collar jobs within one to five years. He warns that rapid technological change could push US unemployment to 10-20% by the end of the decade.

The BBC director general and the head of Sky oppose a copyright proposal that would let tech firms use creative works without permission, threatening a sector worth £125bn. The creative industry is calling for an opt-in rule and licensing deals to protect copyright under the proposed AI legislation.

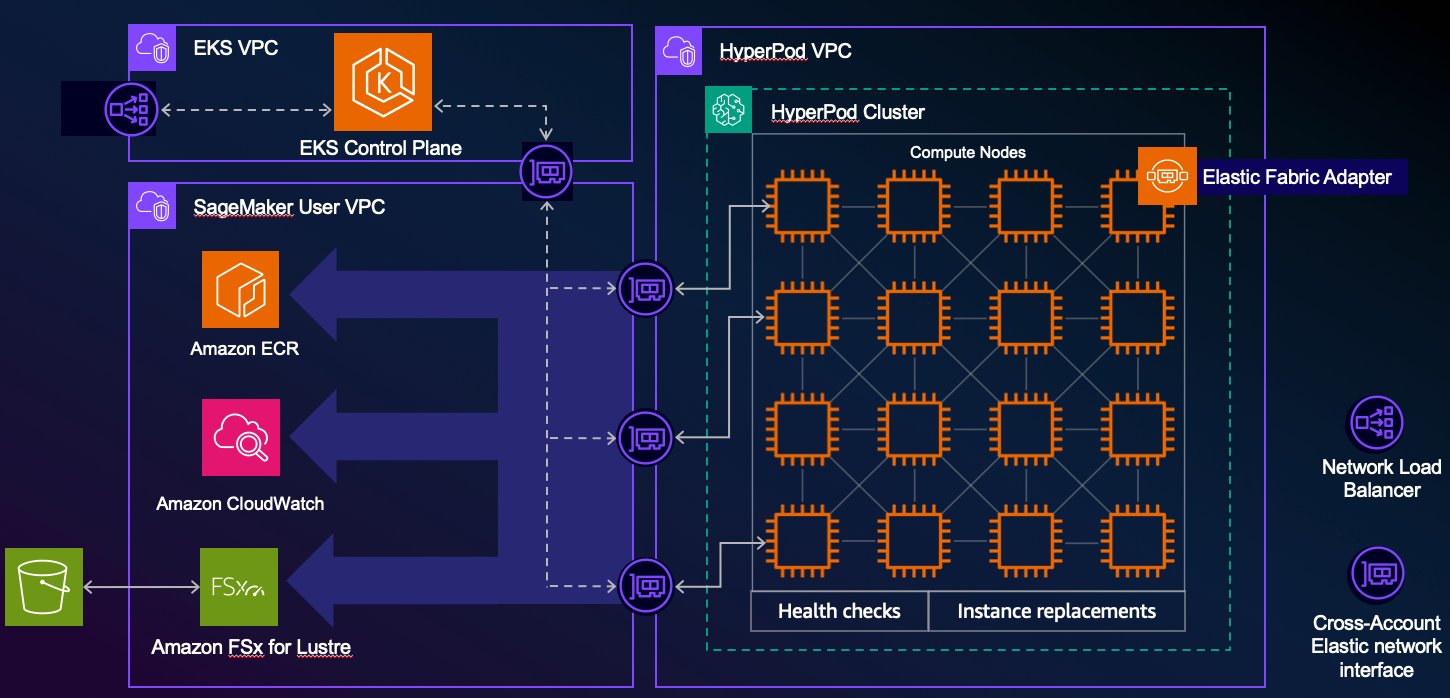

Climate tech startups are using generative AI to tackle the climate crisis, relying on Amazon SageMaker HyperPod to scale their model training. Access to advanced compute and specialized models saves these startups time and money, so they can focus on innovation.

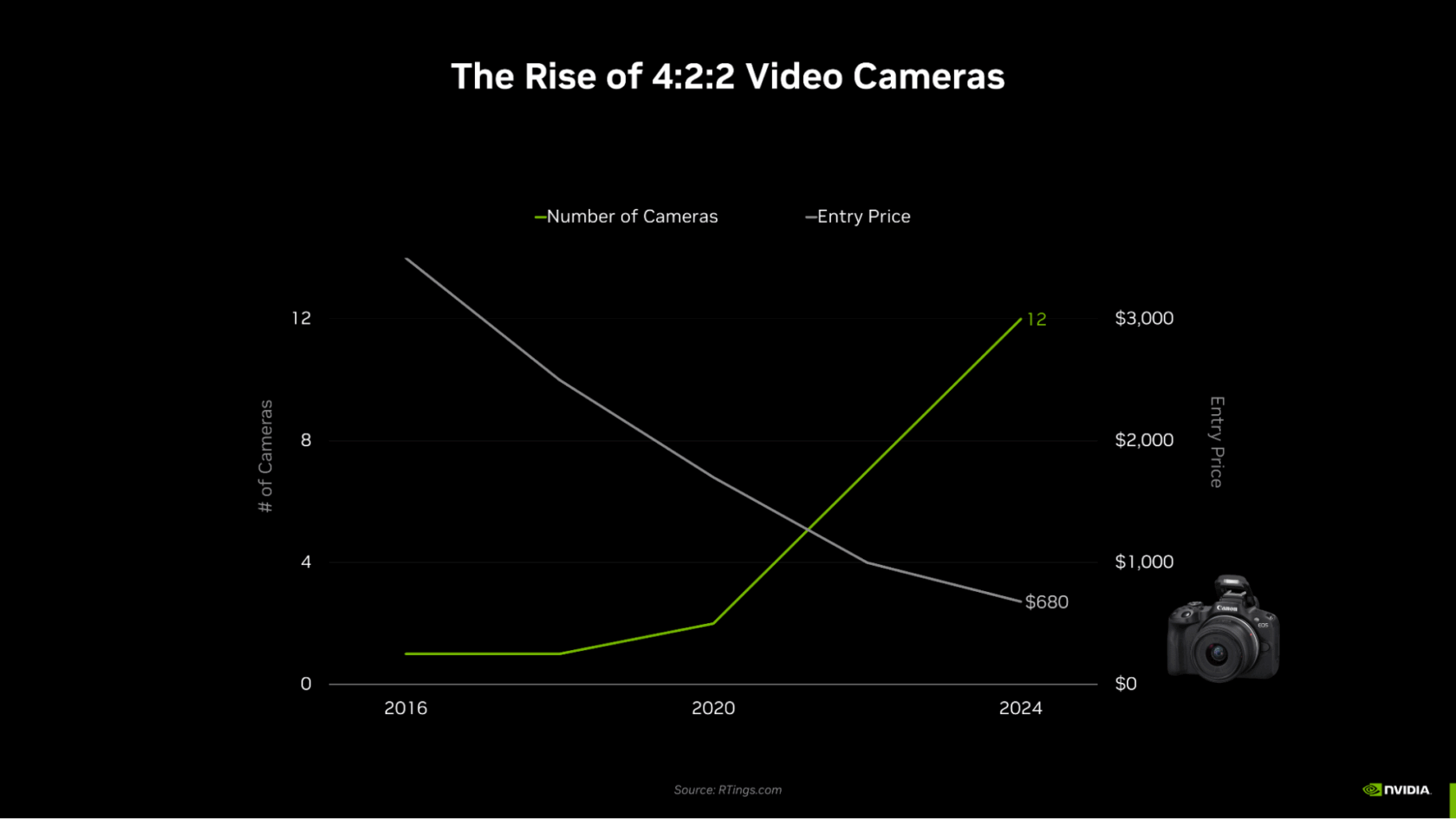

4:2:2 cameras capture twice the color (chroma) information of standard 4:2:0 video, improving video quality. NVIDIA RTX GPUs support 4:2:2 encoding, which improves editing workflows.
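To show what "double" means here, the sketch below counts luma and chroma samples per frame under different chroma subsampling schemes. The 1920×1080 frame size and the helper name `samples_per_frame` are illustrative assumptions, not taken from NVIDIA's material. The "double" is relative to 4:2:0, the subsampling used by most consumer video.

```python
# Count per-frame samples under common chroma subsampling schemes.
# Assumption: an illustrative 1920x1080 frame; samples_per_frame is a hypothetical helper.

SUBSAMPLING = {
    # scheme: (horizontal divisor, vertical divisor) applied to each chroma plane
    "4:4:4": (1, 1),  # full-resolution chroma
    "4:2:2": (2, 1),  # half horizontal chroma resolution, full vertical
    "4:2:0": (2, 2),  # half horizontal and half vertical chroma resolution
}


def samples_per_frame(width: int, height: int, scheme: str) -> dict:
    """Return luma, chroma, and total sample counts for one frame."""
    h_div, v_div = SUBSAMPLING[scheme]
    luma = width * height
    # Two chroma planes (Cb and Cr), each downsampled by the scheme's divisors.
    chroma = 2 * (width // h_div) * (height // v_div)
    return {"luma": luma, "chroma": chroma, "total": luma + chroma}


if __name__ == "__main__":
    w, h = 1920, 1080
    c422 = samples_per_frame(w, h, "4:2:2")["chroma"]
    c420 = samples_per_frame(w, h, "4:2:0")["chroma"]
    print(f"4:2:2 chroma samples: {c422}")
    print(f"4:2:0 chroma samples: {c420}")
    print(f"ratio: {c422 / c420}")
```

Under these assumptions, a 4:2:2 frame carries 2,073,600 chroma samples and a 4:2:0 frame carries 1,036,800. The luma count is the same in both, so only the color detail doubles.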

The House of Lords has defeated the government over AI companies' use of copyrighted material and is demanding copyright protection for artists. The government faces an ultimatum: amend the legislation or risk losing support.

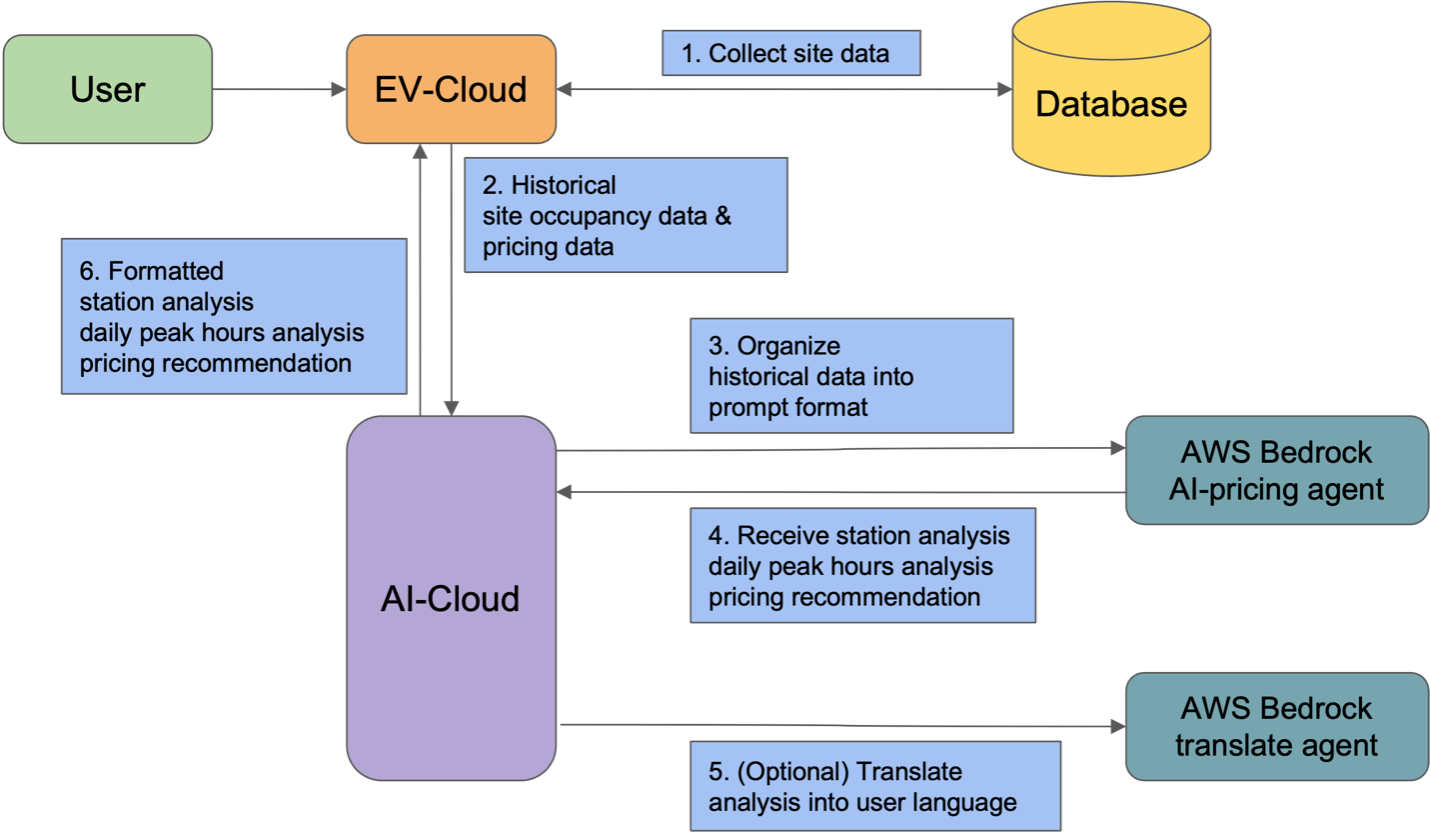

Noodoe uses AI and Amazon Bedrock to optimize EV charging operations, providing automation, real-time data access, and tailored pricing recommendations. The approach aims to improve efficiency, sustainability, and customer satisfaction in the competitive EV charging industry.

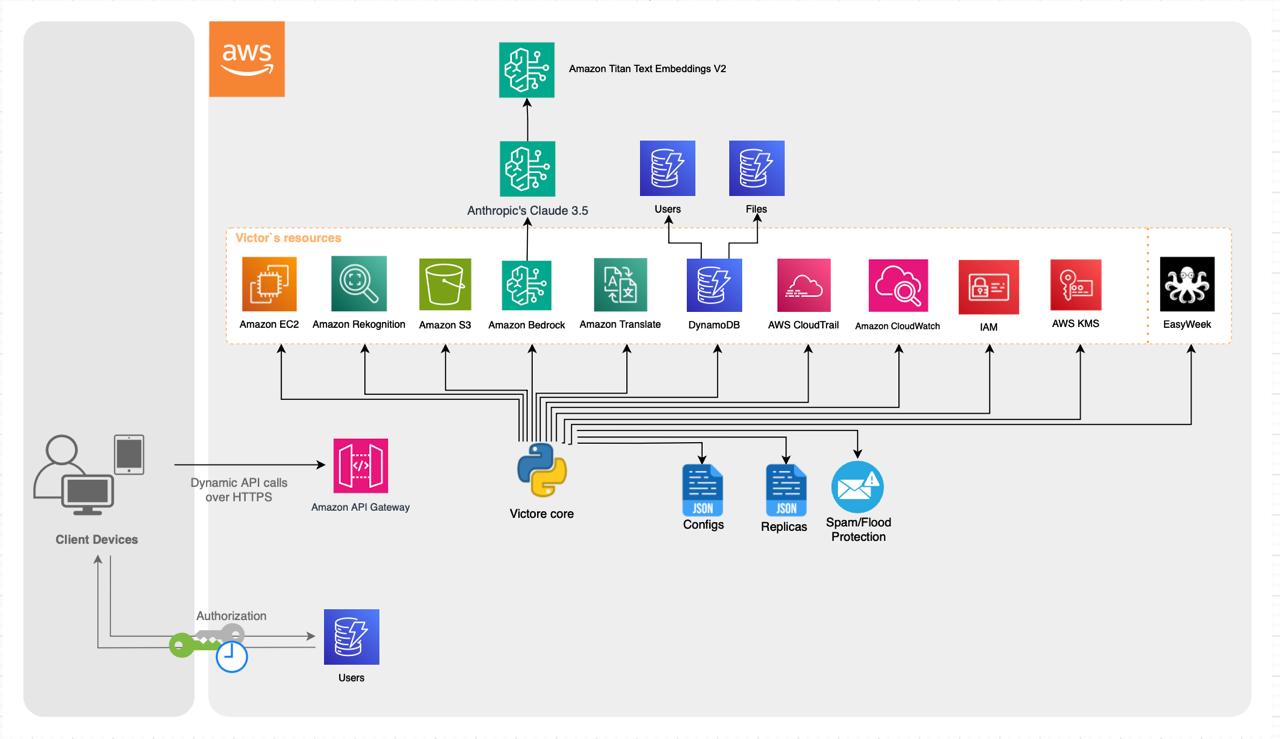

Victor, Bevar Ukraine's AI assistant, helps Ukrainian refugees in Denmark and runs on AWS services for scalability and data security. Built by volunteer software developers, the system uses Anthropic's Claude 3.5 model to generate natural, engaging responses.

Themis AI, an MIT spinout, helps AI models detect their own uncertainty and correct biases, improving reliability in high-stakes applications. By forecasting failures before they happen, its technology aims to prevent devastating consequences.

Generative AI is transforming entry-level jobs, and influencers are monetizing AI-generated text. A new AI test predicts who will benefit from a prostate cancer drug.

Yoshua Bengio, one of the "godfathers" of AI, has launched LawZero to develop honest AI that cannot deceive humans, amid a $1tn AI arms race. He aims to build a guardrail against rogue and malicious AI agents.

Demis Hassabis of Google DeepMind wants to use AI to tackle inbox overload. The goal is to automate mundane tasks such as sorting and replying to emails, reducing the stress of managing an inbox.

Global investment in AI is surging, with Nvidia leading the way. Concerns about ethics and inclusivity are being overshadowed in the AI frenzy.

The home secretary faces pressure over the impact of early release proposals on the police. Defence sources predict the UK will commit at the Nato summit to spending 3.5% of GDP on defence by 2035. The Lords are challenging the government over the data bill, accusing it of failing to protect the creative industries from AI.

Ewan Morrison tests the limits of generative AI with ChatGPT, which invents fake book titles. He refuses to use AI in his own work, wary of its potential for harm and of the human costs of technology.