AI is a tool, not a genius, and it is reshaping storytelling through collaboration. It can provoke feeling in the writer and lead to new understanding.

OpenAI partners with SoftBank in pursuit of 'artificial super intelligence' that surpasses human capabilities, raising $40bn in the largest startup funding round ever. The collaboration aims to advance AI research towards AGI and underscores the need for significant computing power.

ChatGPT gained one million users in five days, sparking widespread interest in AI. Understanding foundation models is key to understanding generative AI's capabilities and applications.

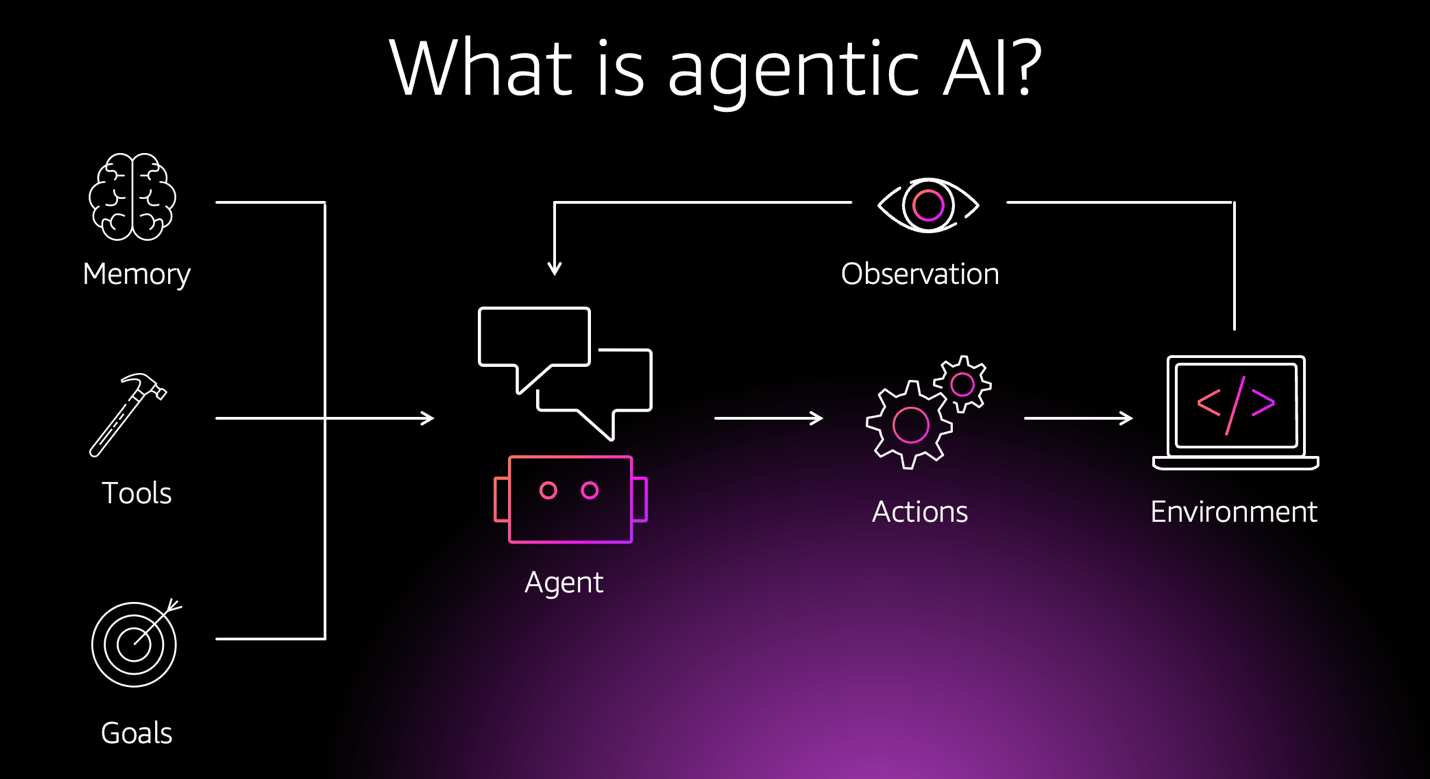

The enterprise AI landscape is evolving rapidly, with Deloitte predicting a surge in AI agent deployment by 2027. CrewAI's framework, combined with Amazon Bedrock, enables the creation of sophisticated multi-agent systems that can transform business operations.

MIT's Pattie Maes has been awarded the 2025 ACM SIGCHI Lifetime Research Award for her contributions to human-computer interaction, and she advocates for human-centered AI integration. Maes' pioneering work on software agents and wearable devices has shaped today's online experience, with an emphasis on ethical design and enhancing human experience.

Authors including Richard Osman, Kazuo Ishiguro, Kate Mosse, and Val McDermid are urging the UK government to hold Meta accountable for using copyrighted books to train its AI. They have asked Lisa Nandy, the culture secretary, to summon Meta executives to parliament.

Supply chain analytics is crucial for navigating disruptions and uncertainty in supply chains. In his comprehensive Supply Chain Analytics Cheat Sheet, Samir Saci shares insights and practical case studies to help improve profitability and optimize operations.

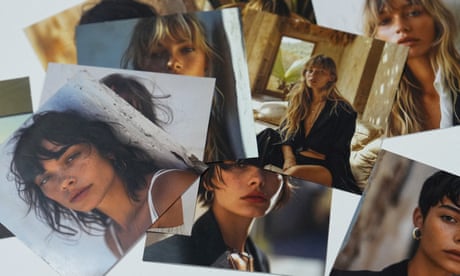

H&M introduces AI ‘twins’ of models for marketing, sparking debate about exploitation in the fashion industry. The company's chief creative officer insists its human-centric approach will remain unchanged despite the AI integration.

Parents and teachers are preparing children for a future with generative artificial intelligence, despite age restrictions on tools like ChatGPT and Google's Gemini. Guardian readers share their strategies for introducing the youngest learners to AI, and their reasons for doing so.

Ana Trišović, once a college student in Serbia, credits MIT OpenCourseWare with transforming her career and leading her to become a research scientist at MIT's FutureTech lab. Her journey from learning Data Analytics with Python to studying AI democratization shows the impact of open learning resources on her interdisciplinary research.

In his new book, Adam Kucharski, a renowned epidemiologist, examines the importance of evidence in the age of AI and social media, exploring how our understanding of 'proof' is changing in a world where information and trust are paramount.

Billion-dollar, venture capital-backed companies such as Skydio are transforming warfare with AI-powered drones. These companies and their futuristic weapons could challenge traditional military manufacturers on the battlefield.

Automation has made mundane tasks less tedious, but at what cost? John Gray's eco-nihilist perspective challenges the benefits of technology, though he concedes that anaesthetised dentistry is an "unmixed blessing."

Going from 2D images to 3D models through 3D reconstruction involves several crucial steps that determine the quality of the result. Successful reconstructions rely on fewer images, cleaner processing, and efficient troubleshooting, all of which depend on understanding how the model is created.

A new AI model combines CNNs, Transformers, and morphological feature extractors to improve visual recognition, reaching accuracy of up to 87.89%. The CNN captures local details, the morphological module highlights critical features, and multi-head attention models global relationships.
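The summary names three components but not how they are wired together, so what follows is only a toy NumPy sketch of one plausible hybrid, not the published model. It assumes a small bank of random 3x3 convolution kernels with ReLU for local detail, a morphological gradient (grey dilation minus grey erosion) standing in for the "morphological feature extractor", and standard multi-head self-attention over the resulting spatial tokens. All function names, sizes, and the untrained random weights are hypothetical, and the sketch does not reproduce the reported 87.89% accuracy.

```python
import numpy as np


def conv2d_valid(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Single-channel 'valid' 2D cross-correlation, as computed by a CNN layer."""
    kh, kw = k.shape
    out = np.empty((x.shape[0] - kh + 1, x.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out


def morph_gradient(x: np.ndarray, size: int = 3) -> np.ndarray:
    """ASSUMED morphological module: grey dilation (window max) minus grey
    erosion (window min). Large values mark edges and shape boundaries."""
    pad = size // 2
    xp = np.pad(x, pad, mode="edge")
    out = np.empty(x.shape)
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            win = xp[i:i + size, j:j + size]
            out[i, j] = win.max() - win.min()
    return out


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(tokens, num_heads, rng):
    """Scaled dot-product self-attention split across heads (random weights)."""
    n, d = tokens.shape
    assert d % num_heads == 0, "feature size must divide evenly across heads"
    dh = d // num_heads
    wq, wk, wv, wo = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4))

    def split_heads(m):
        # (n, d) -> (heads, n, dh)
        return m.reshape(n, num_heads, dh).transpose(1, 0, 2)

    q, k, v = split_heads(tokens @ wq), split_heads(tokens @ wk), split_heads(tokens @ wv)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))  # (heads, n, n)
    out = (attn @ v).transpose(1, 0, 2).reshape(n, d)  # merge heads back to (n, d)
    return out @ wo, attn


def hybrid_forward(image, num_kernels=4, num_heads=2, num_classes=3, seed=0):
    """HYPOTHETICAL hybrid: CNN features + morphological features -> attention -> classifier."""
    rng = np.random.default_rng(seed)
    kernels = rng.standard_normal((num_kernels, 3, 3))
    conv_maps = np.stack([np.maximum(conv2d_valid(image, k), 0.0) for k in kernels])  # ReLU
    morph_maps = np.stack([morph_gradient(m) for m in conv_maps])
    feats = np.concatenate([conv_maps, morph_maps], axis=0)  # (2C, H', W')
    c, h, w = feats.shape
    tokens = feats.reshape(c, h * w).T  # one token per spatial position
    mixed, attn = multi_head_self_attention(tokens, num_heads, rng)
    pooled = mixed.mean(axis=0)  # global average over tokens
    w_cls = rng.standard_normal((c, num_classes)) / np.sqrt(c)
    return softmax(pooled @ w_cls), attn


if __name__ == "__main__":
    image = np.random.default_rng(1).random((8, 8))
    probs, attn = hybrid_forward(image)
    print(probs.shape, attn.shape)  # (3,) (2, 36, 36)
```

For an 8x8 input, the valid 3x3 convolution yields a 6x6 grid, so attention runs over 36 tokens with 8 features each (4 convolutional and 4 morphological channels). In a real model every weight would be learned rather than drawn at random; the sketch only shows how local, shape-based, and global signals can be combined in one forward pass.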