New GeForce RTX 5090 & 5080 GPUs with Blackwell architecture power AI content creation. FLUX models require less VRAM & generate images faster.

Data-centric AI can produce efficient models: in MNIST experiments, training on just 10% of the data achieved over 98% accuracy. Pruning with a "furthest-from-centroid" selection strategy improved model accuracy by keeping unique, diverse examples.
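A minimal sketch of furthest-from-centroid pruning, assuming per-class centroids and Euclidean distance on raw feature vectors (the original experiments may measure distance in a learned embedding space instead). The function name `furthest_from_centroid` and the `keep_fraction` parameter are illustrative, not taken from the article.

```python
import math
from collections import defaultdict


def furthest_from_centroid(features, labels, keep_fraction=0.1):
    """Return sorted indices of examples to keep: within each class,
    the examples furthest (Euclidean) from that class's centroid."""
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)

    kept = []
    for idx in by_class.values():
        dim = len(features[idx[0]])
        # Class centroid: per-feature mean over the class's examples.
        centroid = [sum(features[i][d] for i in idx) / len(idx) for d in range(dim)]
        # Rank class members by distance to the centroid, furthest first.
        ranked = sorted(idx, key=lambda i: math.dist(features[i], centroid), reverse=True)
        # Keep at least one example per class.
        n_keep = max(1, round(keep_fraction * len(idx)))
        kept.extend(ranked[:n_keep])
    return sorted(kept)


features = [[0, 0], [1, 0], [0, 1], [5, 5], [10, 10], [11, 10], [10, 11], [20, 20]]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
print(furthest_from_centroid(features, labels, keep_fraction=0.25))
```

The selected points are the outliers of each class, which is the intuition behind keeping "unique, diverse" examples; on noisy real data one would typically also guard against keeping mislabeled samples.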

The Authors Guild launches a Human Authored portal where members can certify that their books are not AI-generated and receive a logo to display on covers as proof.

Apple beats Q1 earnings expectations with a 4% revenue increase to $124.30bn. Shares surge 8% after CEO Tim Cook hints at continued growth in the next quarter.

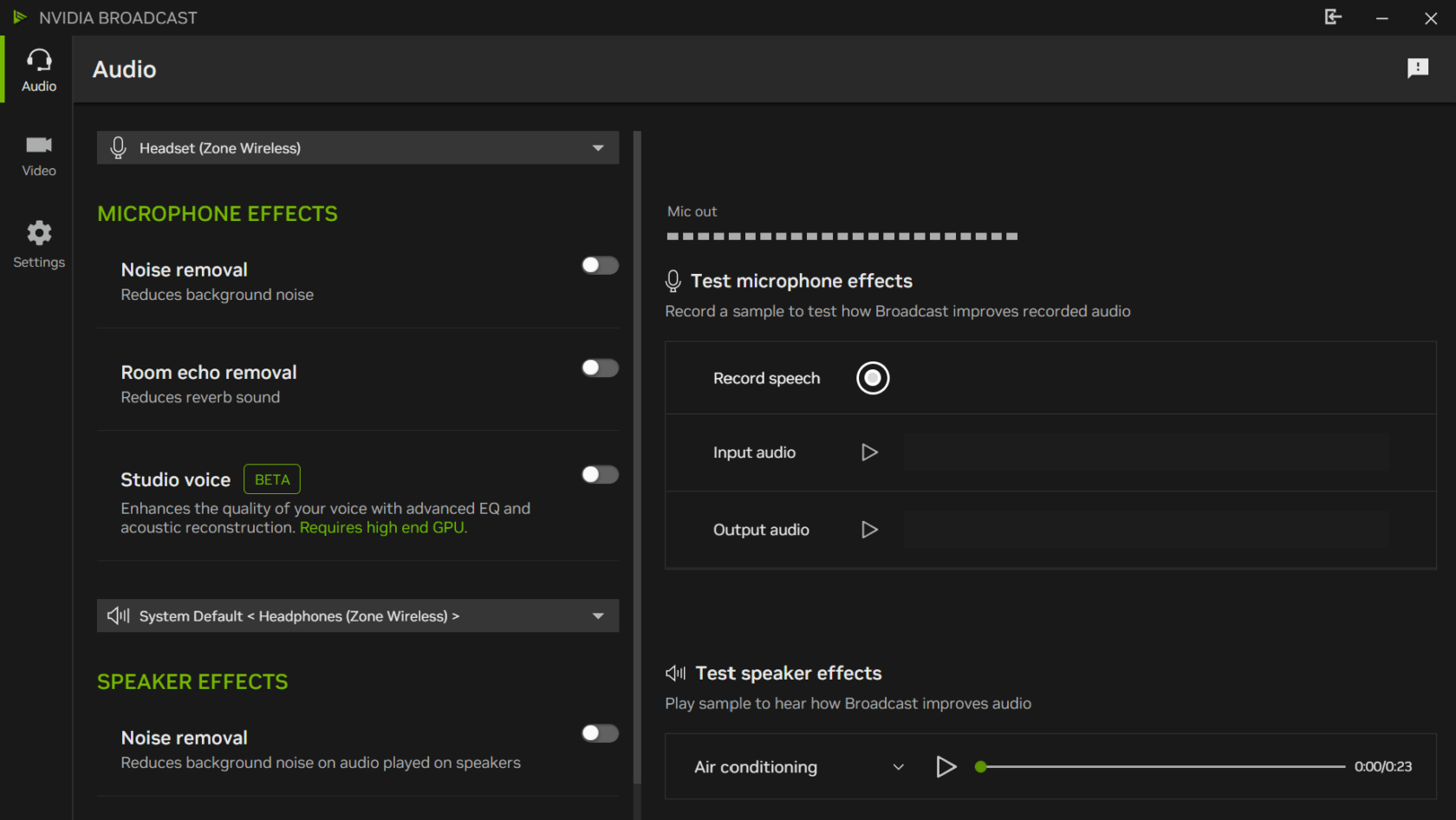

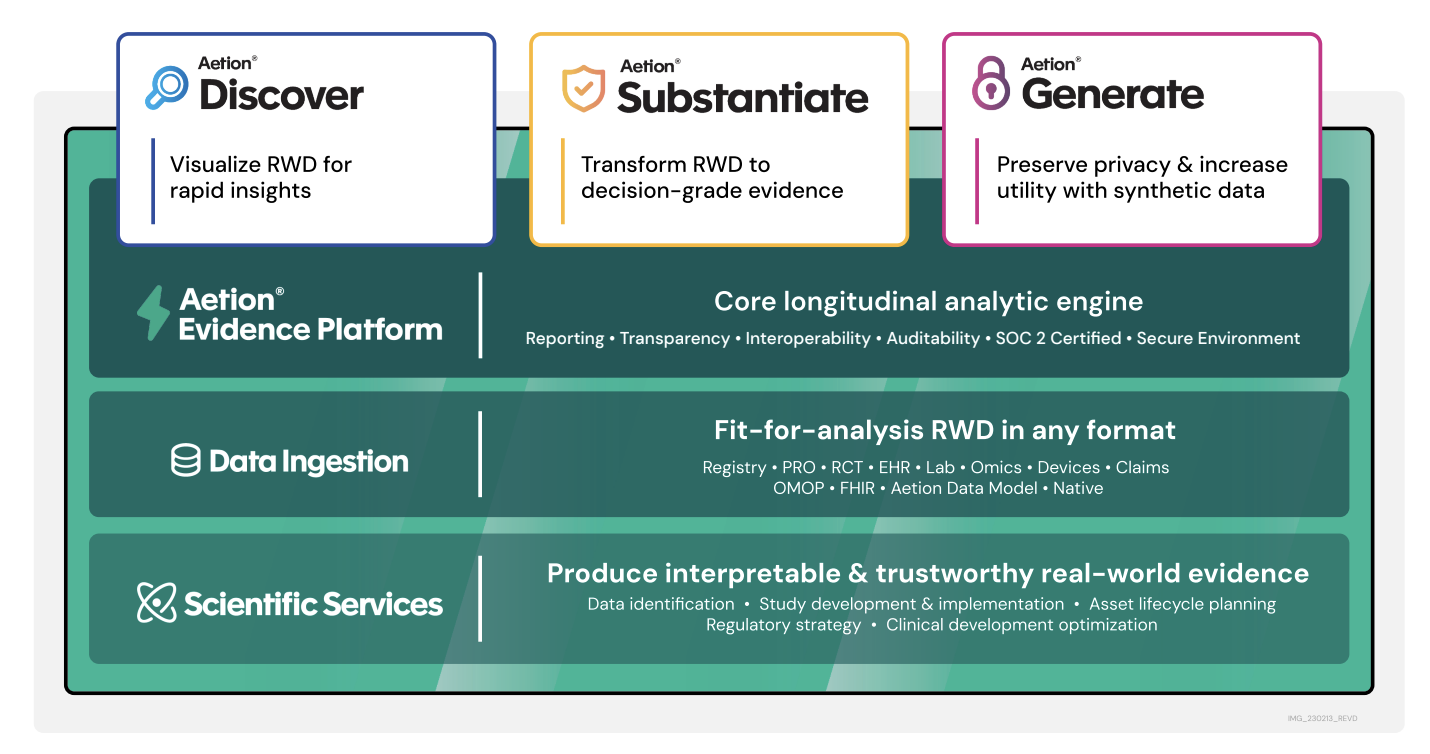

Aetion leverages real-world data to uncover hidden insights with Smart Subgroups and generative AI, transforming patient journeys into evidence. Aetion's use of Amazon Bedrock and Anthropic's Claude 3 LLMs enables users to interact with Smart Subgroups using natural language queries, accelerating hypothesis generation and evidence creation.

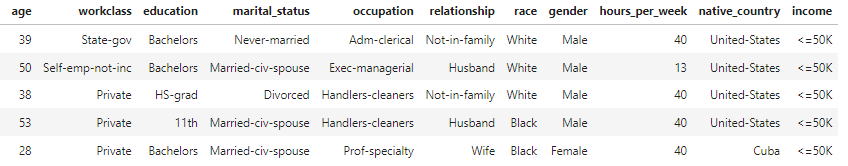

An article in Visual Studio Magazine presents gradient boosting regression implemented in C#, a simpler version than libraries such as XGBoost, LightGBM, and CatBoost. The demo walks step by step through predicting values with gradient boosting regression.
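The core loop can be sketched in a few lines. This is a minimal Python illustration, not the article's C# code, assuming squared-error loss and depth-1 regression trees (stumps) as the weak learners; the article's demo may use deeper trees. `fit_stump` and `GradientBoostingRegressor` are hypothetical names.

```python
from statistics import mean


def fit_stump(X, r):
    """Fit a depth-1 regression tree to residuals r by trying every
    feature/threshold split and minimizing squared error."""
    best = None  # (sse, feature, threshold, left_value, right_value)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [ri for row, ri in zip(X, r) if row[f] <= t]
            right = [ri for row, ri in zip(X, r) if row[f] > t]
            if not left or not right:
                continue
            lv, rv = mean(left), mean(right)
            sse = sum((v - lv) ** 2 for v in left) + sum((v - rv) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, f, t, lv, rv)
    if best is None:  # no valid split: predict the mean residual everywhere
        m = mean(r)
        return lambda row: m
    _, f, t, lv, rv = best
    return lambda row: lv if row[f] <= t else rv


class GradientBoostingRegressor:
    def __init__(self, n_estimators=100, learning_rate=0.1):
        self.n_estimators = n_estimators
        self.learning_rate = learning_rate

    def fit(self, X, y):
        self.base = mean(y)  # initial prediction: mean of the targets
        self.trees = []
        pred = [self.base] * len(y)
        for _ in range(self.n_estimators):
            # For squared-error loss the negative gradient is the residual.
            resid = [yi - pi for yi, pi in zip(y, pred)]
            tree = fit_stump(X, resid)
            self.trees.append(tree)
            # Shrink each tree's contribution by the learning rate.
            pred = [pi + self.learning_rate * tree(row) for pi, row in zip(pred, X)]
        return self

    def predict(self, X):
        return [self.base + self.learning_rate * sum(t(row) for t in self.trees)
                for row in X]


X = [[x] for x in range(10)]
y = [2 * x for x in range(10)]
model = GradientBoostingRegressor(n_estimators=200, learning_rate=0.1).fit(X, y)
print(model.predict([[0], [9]]))
```

The small learning rate makes each stump correct only part of the remaining error, which is the main knob (together with the number of trees) that libraries like XGBoost also expose.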

Bandit algorithm vs. A/B test: when A/B tests fall short because there are many variants or the campaign is a one-off, bandit algorithms offer a more efficient alternative by shifting budget toward the best-performing ad variant in real time. Bandit algorithms maximize rewards by serving the variant with the highest KPI more often, making them well suited to campaigns with many treatments or special events.
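As one concrete bandit algorithm, here is a sketch of Beta-Bernoulli Thompson sampling, assuming the KPI is a binary click per impression; the article may use a different bandit (for example epsilon-greedy or UCB). The click-through rates in the simulation are made-up values for illustration, and `ThompsonSampler` and `simulate` are hypothetical names.

```python
import random


class ThompsonSampler:
    """Beta-Bernoulli Thompson sampling over ad variants
    (assumes a binary KPI per impression: click or no click)."""

    def __init__(self, n_variants, seed=None):
        self.successes = [0] * n_variants
        self.failures = [0] * n_variants
        self.rng = random.Random(seed)

    def choose(self):
        # Draw a plausible CTR for each variant from its Beta(1 + s, 1 + f)
        # posterior and serve the variant with the highest draw.
        draws = [self.rng.betavariate(1 + s, 1 + f)
                 for s, f in zip(self.successes, self.failures)]
        return max(range(len(draws)), key=draws.__getitem__)

    def update(self, variant, clicked):
        if clicked:
            self.successes[variant] += 1
        else:
            self.failures[variant] += 1


def simulate(true_ctrs, n_impressions=20_000, seed=0):
    """Serve impressions against hypothetical true CTRs; return impressions per variant."""
    env = random.Random(seed + 1)  # separate RNG for the simulated users' clicks
    bandit = ThompsonSampler(len(true_ctrs), seed=seed)
    served = [0] * len(true_ctrs)
    for _ in range(n_impressions):
        v = bandit.choose()
        served[v] += 1
        bandit.update(v, env.random() < true_ctrs[v])
    return served


served = simulate([0.02, 0.05, 0.10])
print(served)
```

Unlike a fixed 1/3-1/3-1/3 A/B/C split, the sampler quickly routes most impressions to the strongest variant while still occasionally testing the others.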

China's DeepSeek challenges US tech giants with premium AI at a fraction of the cost. It may be a "Sputnik moment" in the AI race, raising questions about strategy and investment.

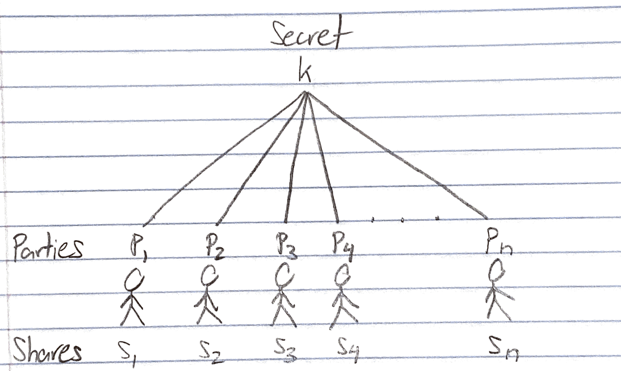

Secret sharing splits sensitive data into shares distributed among parties, so that no single party can recover the data alone. Shamir's algorithm lets parties pool data, for example to compute a sum, without revealing their individual values.
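A minimal sketch of Shamir's secret sharing over a prime field, plus the share-wise addition that lets parties pool values. The prime, the share indices 1..n, and the salary scenario are illustrative assumptions; a real deployment would also need secure channels between parties and protection against malicious participants.

```python
import random

PRIME = 2**127 - 1  # Mersenne prime; all arithmetic is done modulo PRIME


def split(secret, n, k, rng=None):
    """Split secret into n shares (x, y); any k of them reconstruct it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    rng = rng or random.SystemRandom()
    # Random polynomial of degree k - 1 with f(0) = secret.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(k - 1)]

    def f(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, f(x)) for x in range(1, n + 1)]


def reconstruct(shares):
    """Lagrange interpolation at x = 0 over GF(PRIME)."""
    secret = 0
    for j, (xj, yj) in enumerate(shares):
        num, den = 1, 1
        for m, (xm, _) in enumerate(shares):
            if m != j:
                num = num * (-xm) % PRIME
                den = den * (xj - xm) % PRIME
        secret = (secret + yj * num * pow(den, -1, PRIME)) % PRIME
    return secret


def add_shares(a, b):
    """Shares of s1 and s2 at the same x add up to a share of s1 + s2."""
    out = []
    for (xa, ya), (xb, yb) in zip(a, b):
        if xa != xb:
            raise ValueError("shares must use the same x coordinates")
        out.append((xa, (ya + yb) % PRIME))
    return out


# Pooling: each party splits its private value; adding shares point-wise
# yields shares of the total, so only the sum is ever reconstructed.
salaries = [52_000, 61_000, 58_000]
all_shares = [split(s, n=3, k=2) for s in salaries]
summed = all_shares[0]
for sh in all_shares[1:]:
    summed = add_shares(summed, sh)
print(reconstruct(summed[:2]))
```

Because sharing is linear, sums come "for free"; multiplying shared values needs extra protocol steps.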

SoftBank is in talks to invest up to $25bn in OpenAI, which would make it the largest financial backer of the ChatGPT maker. The Financial Times reported the potential $15-25bn deal with the San Francisco-based company.

Challenges in transitioning to deep learning in AdTech led to incidents, but the move ultimately improved ML platform performance. Incident management strategies are crucial for robust model pipelines in production.

MIT students showcase innovative AI projects at NeurIPS 2024: "Be the Beat" suggests music based on dance moves, and "A Mystery for You" is an educational game that cultivates critical thinking skills. Both projects exemplify AI's potential to catalyze creativity and reshape human-computer interactions.

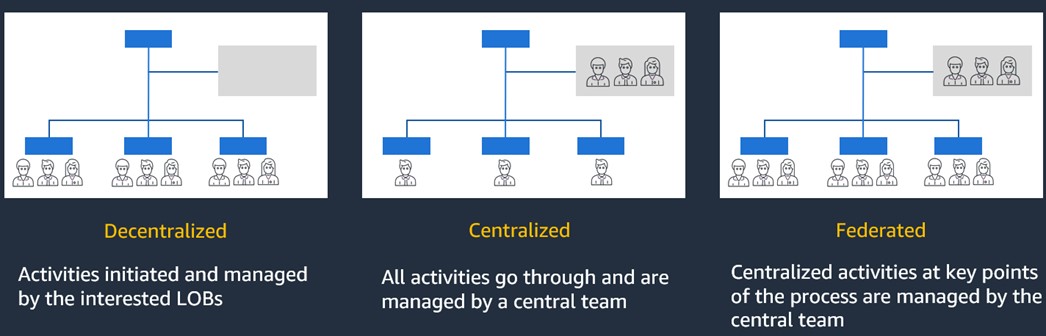

Generative AI is transforming organizations through innovative applications that enhance customer experiences. Operating models such as decentralized, centralized, and federated structures drive the adoption and governance of generative AI technologies.

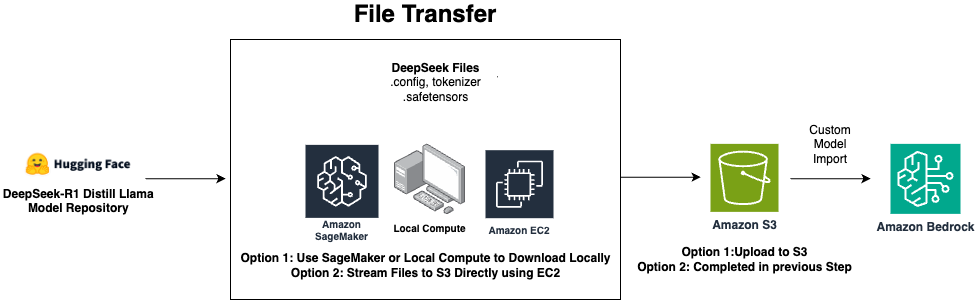

DeepSeek AI's DeepSeek-R1 models are now available in distilled versions, offering improved efficiency without sacrificing performance. Amazon Bedrock Custom Model Import allows seamless integration of these custom models, enhancing generative AI applications with cost-effective solutions.
