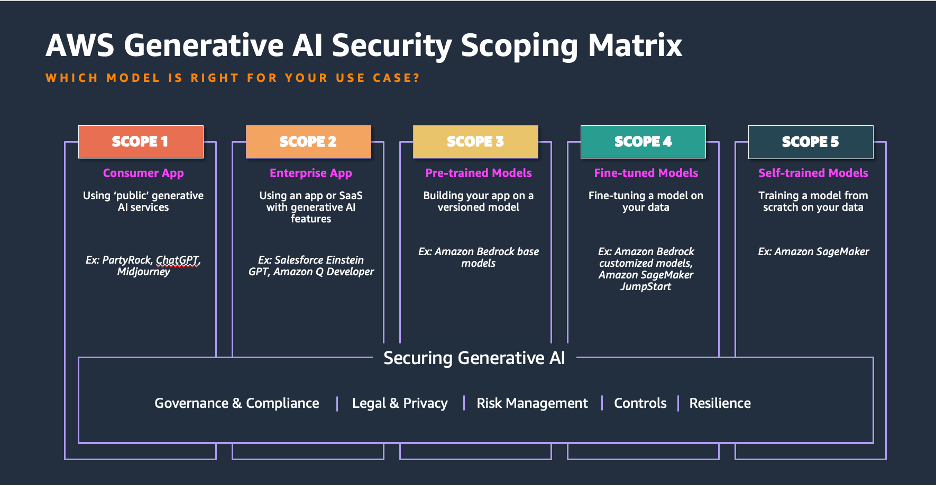

Generative AI applications such as AI-powered assistants need thorough security assessments before deployment to mitigate their security risks. Building on the OWASP Top 10 framework, AWS offers a scoping matrix that helps teams assess and secure generative AI applications against novel threats.

Station A, a startup founded by MIT alumni, simplifies clean energy deployment for businesses. Its platform provides a marketplace for analyzing options, collecting bids, and selecting providers, and the company partners with major real estate firms to reduce their carbon footprints.

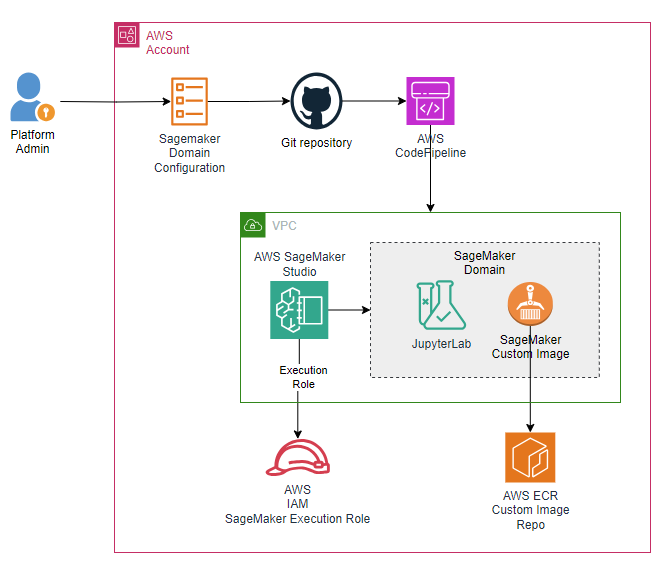

Automate attaching custom Docker images to Amazon SageMaker Studio domains to improve productivity and security. A pipeline deployed with AWS CodePipeline streamlines the image creation and attachment process.
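The final "attach" stage of such a pipeline can be sketched as building the request for boto3's SageMaker `update_domain` call. This is a minimal sketch that assumes the image has already been pushed to Amazon ECR and registered (for example with `create_image`, `create_image_version`, and `create_app_image_config`), and that attachment goes through `DefaultUserSettings.KernelGatewayAppSettings.CustomImages`. The helper `build_update_domain_kwargs` and every ID and name below are hypothetical. It only builds the payload, so it runs without AWS credentials.

```python
from typing import Any, Dict, List, Optional


def build_update_domain_kwargs(
    domain_id: str,
    existing_custom_images: List[Dict[str, Any]],
    image_name: str,
    app_image_config_name: str,
    image_version_number: Optional[int] = None,
) -> Dict[str, Any]:
    """Build keyword arguments for a SageMaker update_domain call that
    attaches a custom image to a Studio domain (hypothetical helper)."""
    entry: Dict[str, Any] = {
        "ImageName": image_name,
        "AppImageConfigName": app_image_config_name,
    }
    # Assumption: omitting ImageVersionNumber lets SageMaker use the latest version.
    if image_version_number is not None:
        entry["ImageVersionNumber"] = image_version_number

    # Keep every other attached image and replace any older entry for this one,
    # because the request carries the full CustomImages list.
    images = [img for img in existing_custom_images if img.get("ImageName") != image_name]
    images.append(entry)

    return {
        "DomainId": domain_id,
        "DefaultUserSettings": {
            "KernelGatewayAppSettings": {"CustomImages": images},
        },
    }


if __name__ == "__main__":
    kwargs = build_update_domain_kwargs(
        domain_id="d-example123",  # hypothetical domain ID
        existing_custom_images=[{"ImageName": "base-image", "AppImageConfigName": "base-config"}],
        image_name="team-image",  # hypothetical image name
        app_image_config_name="team-config",
        image_version_number=2,
    )
    print(kwargs)
    # In a pipeline stage this payload would be sent with:
    # boto3.client("sagemaker").update_domain(**kwargs)
```

Merging with the existing list is a deliberate choice: on the assumption that `update_domain` replaces `CustomImages` rather than appending to it, sending only the new image would silently detach the others.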

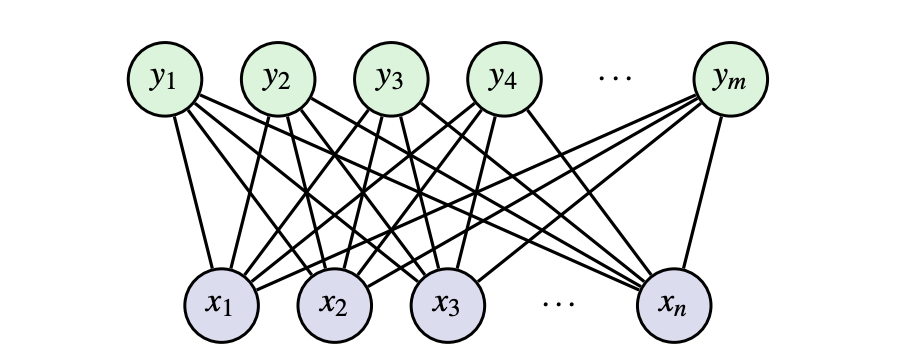

Geoffrey Hinton's Nobel Prize-winning work on Restricted Boltzmann Machines (RBMs), explained and implemented in PyTorch. RBMs are unsupervised models that extract meaningful features without output labels; they use an energy function to define a probability distribution over visible and hidden units.
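The core of an RBM fits in a few functions. This sketch is plain Python rather than PyTorch so it has no dependencies, and it assumes binary units: the energy `E(v, h) = -a·v - b·h - vᵀWh`, the conditionals `p(h_j = 1 | v)` and `p(v_i = 1 | h)`, and one step of contrastive divergence (CD-1), the training rule Hinton introduced for RBMs. Function names are illustrative, not taken from the original article.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def energy(v: Vector, h: Vector, W: Matrix, a: Vector, b: Vector) -> float:
    """E(v, h) = -sum_i a_i v_i - sum_j b_j h_j - sum_ij v_i W_ij h_j."""
    visible_term = sum(a_i * v_i for a_i, v_i in zip(a, v))
    hidden_term = sum(b_j * h_j for b_j, h_j in zip(b, h))
    interaction = sum(v[i] * W[i][j] * h[j] for i in range(len(v)) for j in range(len(h)))
    return -visible_term - hidden_term - interaction


def hidden_probs(v: Vector, W: Matrix, b: Vector) -> Vector:
    """p(h_j = 1 | v) = sigmoid(b_j + sum_i v_i W_ij)."""
    return [sigmoid(b[j] + sum(v[i] * W[i][j] for i in range(len(v)))) for j in range(len(b))]


def visible_probs(h: Vector, W: Matrix, a: Vector) -> Vector:
    """p(v_i = 1 | h) = sigmoid(a_i + sum_j W_ij h_j)."""
    return [sigmoid(a[i] + sum(W[i][j] * h[j] for j in range(len(h)))) for i in range(len(a))]


def sample(probs: Vector, rng) -> Vector:
    """Draw binary states from Bernoulli probabilities."""
    return [1.0 if rng.random() < p else 0.0 for p in probs]


def cd1_step(v0: Vector, W: Matrix, a: Vector, b: Vector, lr: float = 0.1, rng=random) -> None:
    """One CD-1 update; modifies W, a and b in place."""
    ph0 = hidden_probs(v0, W, b)               # positive phase (data-driven)
    h0 = sample(ph0, rng)
    v1 = sample(visible_probs(h0, W, a), rng)  # one Gibbs step back to the visible layer
    ph1 = hidden_probs(v1, W, b)               # negative phase (model-driven)
    for i in range(len(a)):
        for j in range(len(b)):
            W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
        a[i] += lr * (v0[i] - v1[i])
    for j in range(len(b)):
        b[j] += lr * (ph0[j] - ph1[j])


if __name__ == "__main__":
    rng = random.Random(0)
    n_visible, n_hidden = 4, 2
    W = [[rng.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
    a, b = [0.0] * n_visible, [0.0] * n_hidden
    data = [[1.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]  # two toy patterns
    for _ in range(1000):
        for v in data:
            cd1_step(v, W, a, b, lr=0.1, rng=rng)
    for v in data:
        print(v, [round(p, 2) for p in hidden_probs(v, W, b)])
```

No labels are used anywhere: after training, the hidden probabilities act as learned features that tend to differ between the two patterns.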

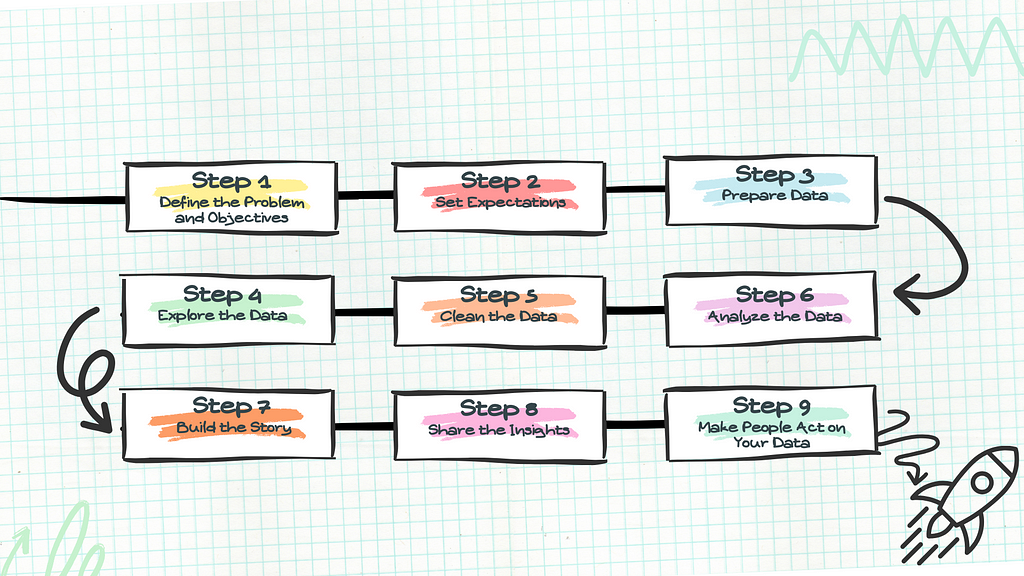

Learn how to approach data analytics projects like a pro: define the problem, set expectations, and prepare effectively to produce impactful insights. Clear objectives from stakeholders and proper planning are key to a successful data analysis project.

Pope Francis urges Davos leaders to closely monitor AI's impact on humanity's future, warning of a potential truth crisis. He advises governments and businesses to exercise caution and vigilance in navigating the complexities of artificial intelligence.

Israel increased its use of Microsoft cloud and AI technology during the bombardment of Gaza, deepening its defense ties with the company after 2023 through $10m in support deals.

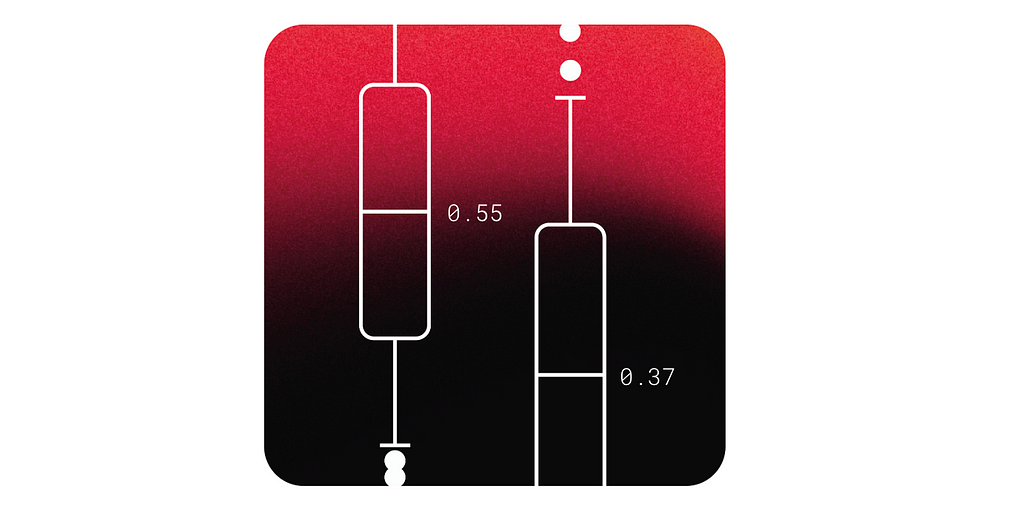

Hands-on machine learning projects reveal the challenges of moving models into production. Improve model performance by aligning loss functions and evaluation metrics with business priorities.
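One common way to align training and evaluation with business priorities is to make errors cost what they cost the business. This minimal sketch assumes a binary task where a missed positive (false negative) is more expensive than a false alarm; the costs and function names are hypothetical. It weights the loss during training and picks the decision threshold that minimizes total business cost instead of maximizing accuracy.

```python
import math
from typing import Sequence


def weighted_bce(y_true: Sequence[int], probs: Sequence[float], pos_weight: float) -> float:
    """Binary cross-entropy where errors on positives count pos_weight times more."""
    eps = 1e-12
    total = 0.0
    for y, p in zip(y_true, probs):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(pos_weight * y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


def business_cost(y_true: Sequence[int], scores: Sequence[float], threshold: float,
                  fp_cost: float, fn_cost: float) -> float:
    """Total cost of predicting positive whenever score >= threshold."""
    cost = 0.0
    for y, s in zip(y_true, scores):
        pred = 1 if s >= threshold else 0
        if pred == 1 and y == 0:
            cost += fp_cost
        elif pred == 0 and y == 1:
            cost += fn_cost
    return cost


def best_threshold(y_true: Sequence[int], scores: Sequence[float],
                   fp_cost: float, fn_cost: float) -> float:
    """Pick the score threshold with the lowest business cost."""
    candidates = sorted(set(scores)) + [math.inf]  # inf means "never predict positive"
    return min(candidates, key=lambda t: business_cost(y_true, scores, t, fp_cost, fn_cost))


if __name__ == "__main__":
    y = [0, 0, 1, 1]
    scores = [0.1, 0.4, 0.35, 0.8]
    t = best_threshold(y, scores, fp_cost=1.0, fn_cost=10.0)
    print(t, business_cost(y, scores, t, 1.0, 10.0))  # → 0.35 1.0
```

With a 10:1 cost ratio the chosen threshold drops to 0.35, accepting one false alarm to avoid a costly miss; a plain accuracy metric would rate 0.35 and 0.8 as equally good.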

Generative AI models such as AlphaFold and RFdiffusion are transforming drug discovery by predicting molecular structures. MIT's MDGen offers a new approach: it efficiently simulates how molecules move over time, which could help researchers design new molecules for diseases such as cancer.

Machine learning models have made great strides, but their complexity can hinder interpretation. Human Knowledge Models offer a solution by distilling data into simple, actionable rules, improving trust and ease of use in various domains. This approach is especially valuable for field experts like doctors, enabling clear insights from complex data for better decision-making.
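To illustrate what a "simple, actionable rule" looks like, the sketch below learns a single IF/THEN threshold rule (a decision stump) from tabular data. This is a much simpler stand-in, not the Human Knowledge Models method itself; the `Rule` class, `learn_rule` function, and the toy clinical columns are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Rule:
    feature: str
    threshold: float
    label_if_above: int
    label_if_below: int
    accuracy: float

    def __str__(self) -> str:
        return (f"IF {self.feature} > {self.threshold} THEN {self.label_if_above} "
                f"ELSE {self.label_if_below} (accuracy {self.accuracy:.0%})")


def learn_rule(rows: List[Dict[str, float]], labels: List[int], features: List[str]) -> Optional[Rule]:
    """Search every feature/threshold pair and keep the most accurate single rule."""
    best: Optional[Rule] = None
    n = len(rows)
    for f in features:
        for t in sorted({r[f] for r in rows}):
            # Try both label assignments on either side of the threshold.
            for above, below in ((1, 0), (0, 1)):
                correct = sum(1 for r, y in zip(rows, labels)
                              if (above if r[f] > t else below) == y)
                acc = correct / n
                if best is None or acc > best.accuracy:
                    best = Rule(f, t, above, below, acc)
    return best


if __name__ == "__main__":
    rows = [  # hypothetical patient records
        {"age": 30, "bp": 120},
        {"age": 45, "bp": 150},
        {"age": 60, "bp": 160},
        {"age": 35, "bp": 118},
    ]
    labels = [0, 1, 1, 0]
    print(learn_rule(rows, labels, ["age", "bp"]))
    # → IF age > 35 THEN 1 ELSE 0 (accuracy 100%)
```

A rule like this trades accuracy for transparency: a doctor can check it against their own knowledge in seconds, which is the kind of trust the paragraph above describes.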

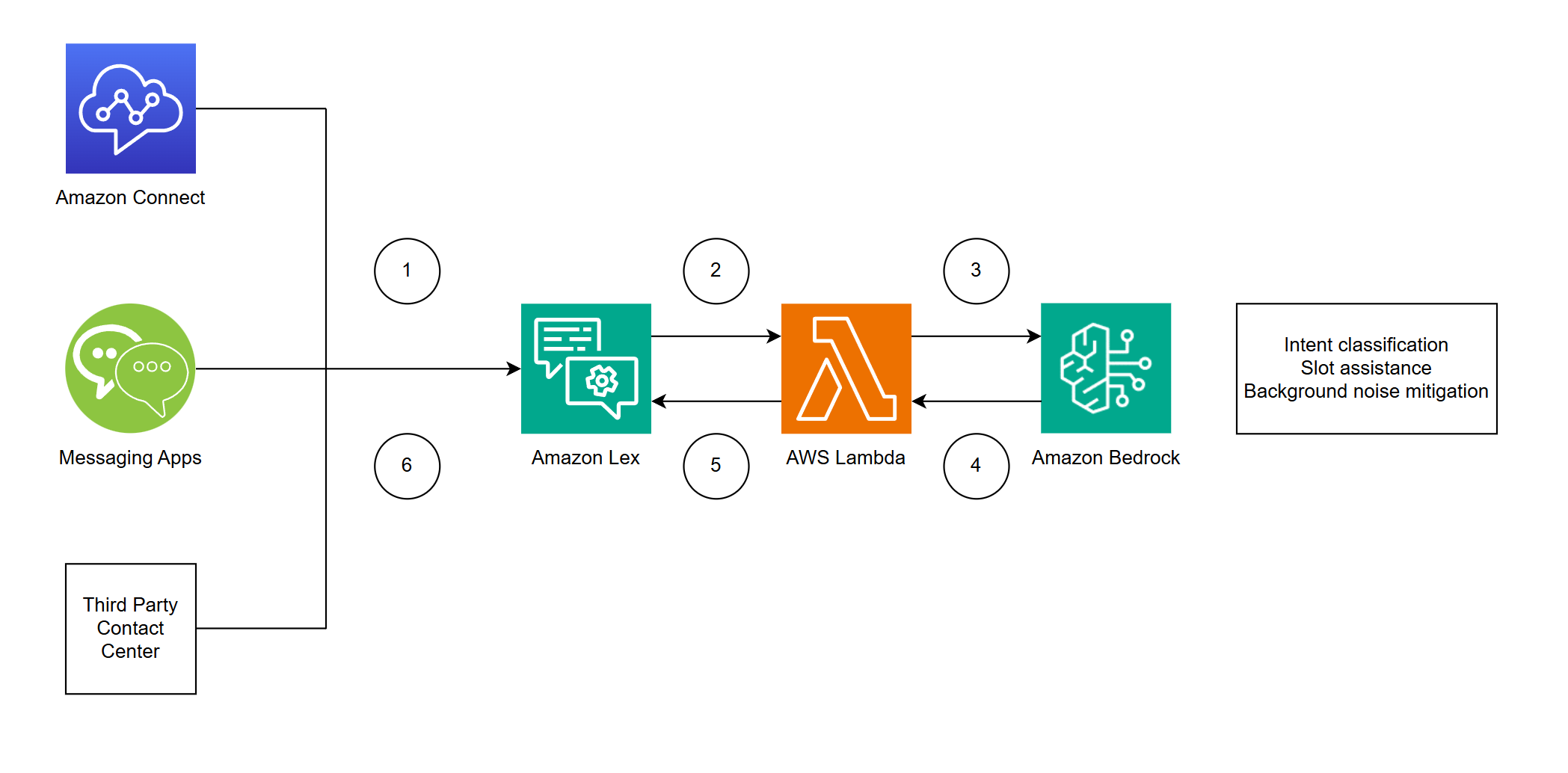

AI technologies such as Amazon Lex and Amazon Bedrock are transforming customer experiences by reducing handle times and improving self-service. Integrating LLMs from Amazon Bedrock with Amazon Lex improves intent classification and slot resolution, making customer interactions more accurate.

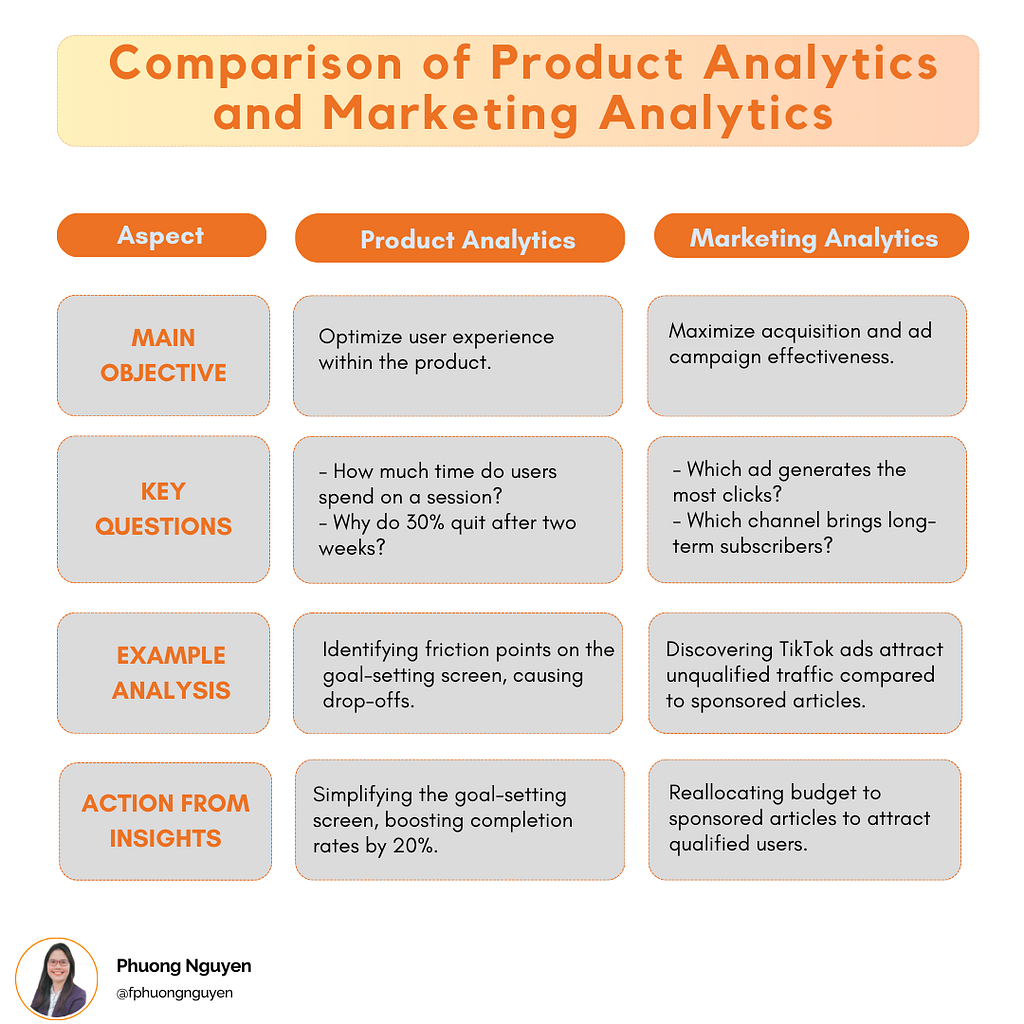

Data analysts are often confused about the difference between product analytics and marketing analytics. Product analytics focuses on improving the user experience, while marketing analytics focuses on acquiring new users.

Leading companies such as Microsoft, Oracle, and Snap use NVIDIA's AI inference platform to deliver high-throughput, cost-effective AI services. NVIDIA credits its software optimizations and the Hopper platform with improving inference performance and user experience while lowering total cost of ownership.

Keir Starmer aims to expand AI use in the public sector to drive significant change, with plans for AI growth zones such as Culham in Oxfordshire. The Guardian's Helena Horton raises the environmental impact of expanding AI as a key concern.

Independents call for swift action on deepfakes as the AEC warns of foreign interference in the Australian election. Pocock and Chaney call for truth-in-political-advertising reform.