Learn how to create a meal planner using ChatGPT in Python to simplify meal decisions and grocery shopping. Prompt-engineering techniques help you get the most out of ChatGPT, making meal planning easier and more efficient.
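The summary doesn't include the tutorial's code. The following is a hedged sketch of the idea, assuming the model is asked to reply in JSON. The two helpers, `build_meal_plan_prompt` and `grocery_list_from_plan` (both hypothetical names), show a structured prompt and how a grocery list could be derived from the reply. The actual ChatGPT API call is left out, and the reply is hardcoded.

```python
import json

def build_meal_plan_prompt(days, people, diet, allergies):
    """Build a prompt using common prompt-engineering moves: a role,
    explicit constraints, and a strict machine-readable output format."""
    allergy_text = ", ".join(allergies) if allergies else "none"
    return (
        "You are a practical home-cooking meal planner.\n"
        f"Plan one dinner per day for {days} days for {people} people.\n"
        f"Diet: {diet}. Ingredients to avoid: {allergy_text}.\n"
        "Reuse ingredients across meals where possible to cut waste.\n"
        "Reply with JSON only, shaped like:\n"
        '{"meals": [{"day": 1, "name": "...", "ingredients": '
        '[{"item": "...", "quantity": 1, "unit": "..."}]}]}'
    )

def grocery_list_from_plan(reply_text):
    """Parse the JSON meal plan and merge duplicate ingredients into one list."""
    plan = json.loads(reply_text)
    totals = {}
    for meal in plan["meals"]:
        for ing in meal["ingredients"]:
            # Normalize the item name so "Rice" and "rice" are merged
            key = (ing["item"].lower(), ing["unit"])
            totals[key] = totals.get(key, 0) + ing["quantity"]
    return {f"{item} ({unit})": qty for (item, unit), qty in sorted(totals.items())}

prompt = build_meal_plan_prompt(days=2, people=2, diet="vegetarian", allergies=["peanuts"])

# The ChatGPT API call is omitted; this hardcoded reply stands in for the model's output.
sample_reply = json.dumps({"meals": [
    {"day": 1, "name": "Veggie stir-fry", "ingredients": [
        {"item": "Rice", "quantity": 200, "unit": "g"},
        {"item": "Broccoli", "quantity": 1, "unit": "head"}]},
    {"day": 2, "name": "Bean chili", "ingredients": [
        {"item": "rice", "quantity": 150, "unit": "g"},
        {"item": "Kidney beans", "quantity": 1, "unit": "can"}]},
]})
print(grocery_list_from_plan(sample_reply))
```

Asking for a fixed JSON shape is a design choice that makes the reply machine-readable. A real app would still need to handle replies that fail to parse.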

Jeffrey L Bowman's Reframe consultancy uses AI to engage employees with diversity programs. DEI is a $10bn industry, but universities and companies such as Nordstrom and Salesforce are scaling back their DEI programs.

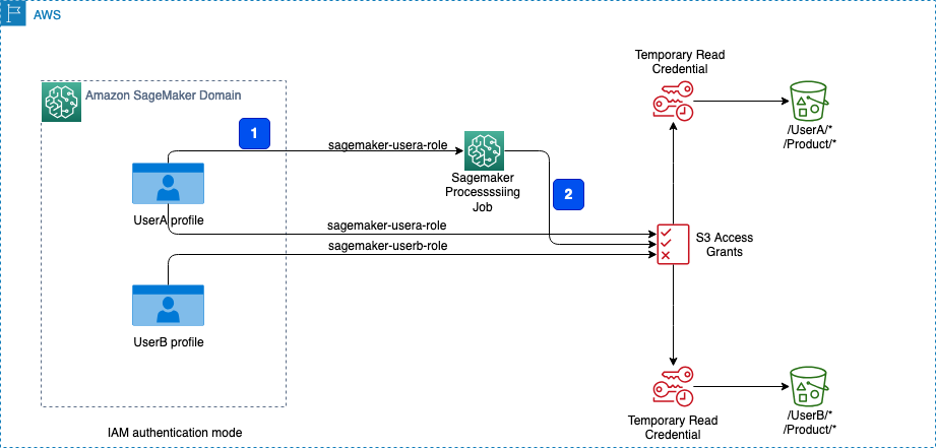

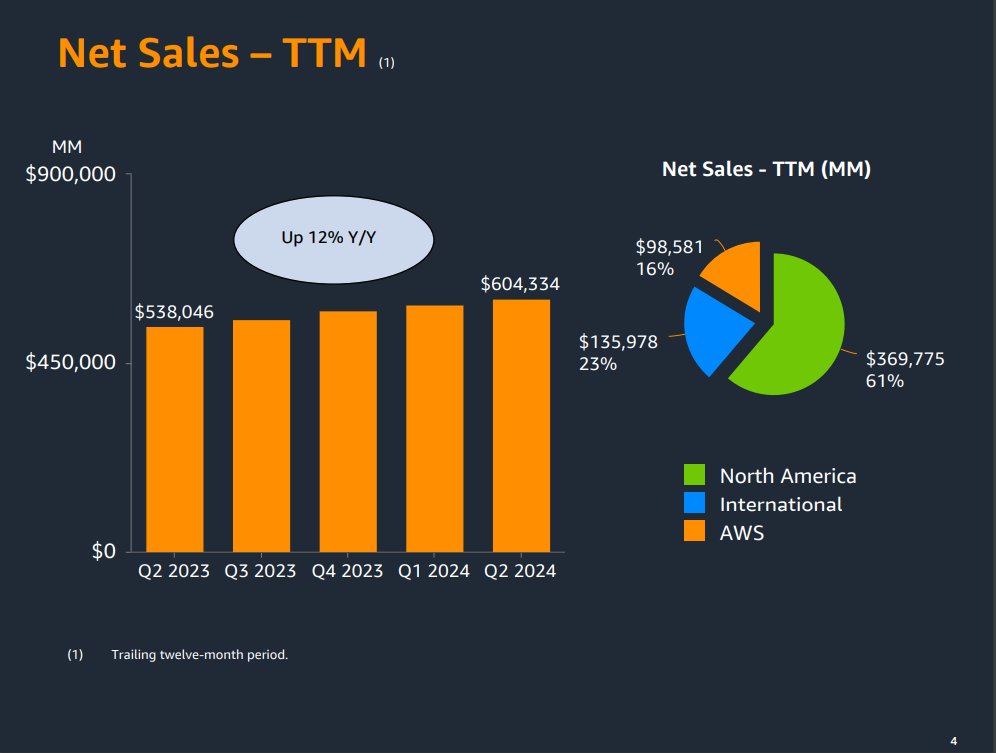

Amazon SageMaker Studio offers a unified interface for data scientists, ML engineers, and developers to build, train, and monitor ML models using data stored in Amazon S3. S3 Access Grants streamline data access management without frequent IAM role updates, and they provide granular permissions at the bucket, prefix, or object level.

The London Standard's AI-generated imitation of a Brian Sewell review lacks the late critic's authentic voice, disappointing readers. Sewell's posh tone and unique style are sorely missed in the attempt to recreate his writing.

The article "Logistic Regression with Batch SGD Training and Weight Decay Using C#" explains that logistic regression is easy to implement, works well with both small and large datasets, and produces highly interpretable results. Its demo program trains the model with batch stochastic gradient descent (SGD) and weight decay to make accurate predictions.
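The article's demo is written in C# and is not reproduced here. As a rough Python sketch of the same technique (not the article's code), the functions below train logistic regression with mini-batch SGD and L2 weight decay. The toy dataset, learning rate, batch size, epoch count, and decay value are all illustrative assumptions.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def train_logreg(X, y, lr=0.5, batch_size=4, epochs=500, weight_decay=0.001, seed=0):
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    idx = list(range(n))
    for _ in range(epochs):
        rng.shuffle(idx)  # visit the examples in a new random order each epoch
        for start in range(0, n, batch_size):
            batch = idx[start:start + batch_size]
            grad_w, grad_b = [0.0] * d, 0.0
            for i in batch:
                err = predict_proba(w, b, X[i]) - y[i]  # d(log loss)/d(logit)
                for j in range(d):
                    grad_w[j] += err * X[i][j]
                grad_b += err
            m = len(batch)
            for j in range(d):
                # Averaged batch gradient plus the L2 weight-decay term
                w[j] -= lr * (grad_w[j] / m + weight_decay * w[j])
            b -= lr * grad_b / m  # the bias is not decayed
    return w, b

X = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.4], [0.4, 0.3],
     [0.7, 0.8], [0.8, 0.7], [0.9, 0.6], [0.6, 0.9]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = train_logreg(X, y)
accuracy = sum((predict_proba(w, b, x) >= 0.5) == bool(t) for x, t in zip(X, y)) / len(X)
print("weights:", w, "bias:", b, "accuracy:", accuracy)
```

Because each weight is a per-feature coefficient on the log-odds, the trained `w` can be read directly, which is the interpretability the article highlights. Weight decay keeps those coefficients from growing without bound.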

Artificial intelligence is causing panic over computers taking control, but the true danger lies in the hype. By Navneet Alang.

AI software built on 380,000 snake pictures enables quick, accurate identification so that the correct antivenom can be used. Médecins Sans Frontières is trialling AI snake detection in South Sudan to treat patients faster.

OpenAI's CTO, Mira Murati, who helped lead the ChatGPT maker, is departing. Murati cites a wish for personal exploration as her reason for leaving the company.

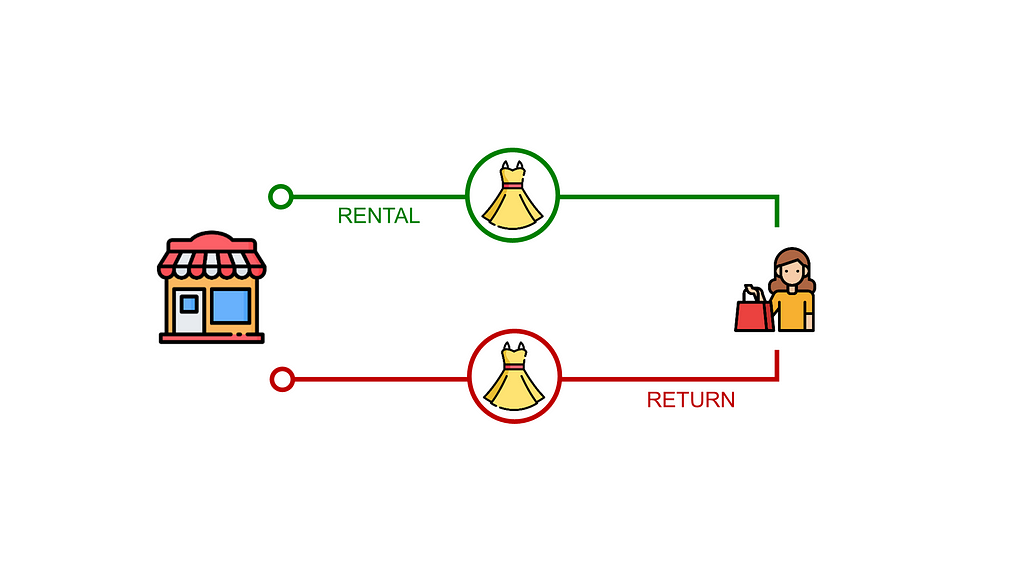

A fashion retailer explores a circular rental model, using data analytics to reduce its environmental footprint and improve resource efficiency. A data scientist assesses the operational challenges and the metrics crucial for transitioning to a circular economy, helping sustainability and logistics teams build a solid business case for top-management approval.

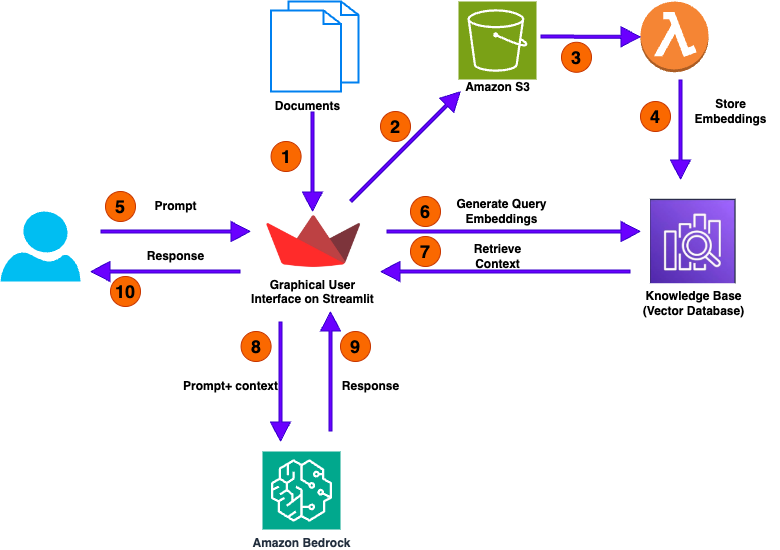

Amazon Bedrock offers high-performing foundation models (FMs) for building generative AI applications. Retrieval-augmented generation (RAG) in Amazon Bedrock can help address challenges in numerical analysis.
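The summary doesn't describe how Amazon Bedrock implements RAG. The stdlib-only sketch below illustrates only the generic pattern: retrieve the passages most relevant to a question, then place them in the prompt so the model can ground its figures in them. As an assumption for brevity, word-count cosine similarity stands in for the embedding model and vector store a production system would typically use, and no Bedrock API is called. `retrieve` and `build_augmented_prompt` are hypothetical helpers, and the documents are illustrative.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a, b):
    # Cosine similarity between two word-count vectors
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query, documents, k=2):
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_augmented_prompt(query, documents, k=2):
    # Put only the retrieved passages in front of the model, with an instruction
    # to show its arithmetic so numerical answers can be checked.
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return ("Answer using only the context below and show the arithmetic.\n"
            f"Context:\n{context}\n\nQuestion: {query}")

docs = [
    "Q1 revenue was 120 million dollars and Q2 revenue was 150 million dollars.",
    "The company opened two new offices in Berlin.",
    "Operating costs in Q2 were 90 million dollars.",
]
question = "What was the growth in revenue from Q1 to Q2?"
print(build_augmented_prompt(question, docs, k=1))
```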

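Regression Algorithm: Unveiling the Power of Dummy Regressor. Learn why this simple model, which ignores the input features and predicts a constant such as the mean of the training targets, is a useful baseline for assessing machine learning performance.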
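scikit-learn ships this baseline as `sklearn.dummy.DummyRegressor`, whose `strategy` parameter includes `"mean"` and `"median"`. To show the idea without dependencies, the hypothetical `DummyBaseline` class below mirrors those two strategies in plain Python, alongside a hand-written R² score.

```python
from statistics import mean, median

class DummyBaseline:
    """Predicts a constant learned from the targets, ignoring all features."""

    def __init__(self, strategy="mean"):
        if strategy not in ("mean", "median"):
            raise ValueError("strategy must be 'mean' or 'median'")
        self.strategy = strategy
        self.constant_ = None

    def fit(self, X, y):
        # X is accepted only to match the usual fit/predict interface; it is unused.
        self.constant_ = mean(y) if self.strategy == "mean" else median(y)
        return self

    def predict(self, X):
        return [self.constant_] * len(X)

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = mean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - y_bar) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

X = [[1.0], [2.0], [3.0], [4.0]]
y = [2.0, 4.0, 6.0, 8.0]
baseline = DummyBaseline(strategy="mean").fit(X, y)
print(baseline.predict(X))               # [5.0, 5.0, 5.0, 5.0]
print(r2_score(y, baseline.predict(X)))  # 0.0
```

A mean baseline scores R² = 0 on its own training targets, so a real model should clear that bar; one that doesn't is no better than always predicting the average.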

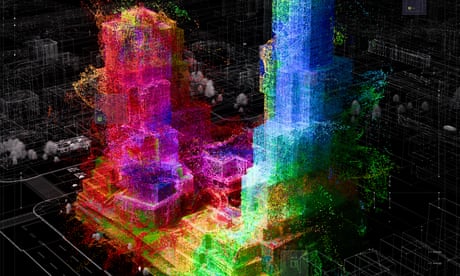

AI artist Refik Anadol plans Dataland, the first AI art museum in LA, promoting 'ethical AI' and renewable energy. Dataland will showcase the fusion of human creativity and machine potential, set to open in 2025 next to prestigious cultural venues.

Llama 3.2 models with vision capabilities are now available in Amazon SageMaker JumpStart and Amazon Bedrock, extending Llama beyond its earlier text-only applications. These state-of-the-art generative AI models offer improved performance and multilingual support, and they suit a wide range of vision-based use cases.

LLM Fine-Tuning FAQs: Understand the nuances of fine-tuning large language models and when it is the right tool for an AI project. Fine-tuning can lower inference costs and adapt model outputs in ways that complement prompt engineering, but its effectiveness depends on the use case and the volume of available data.

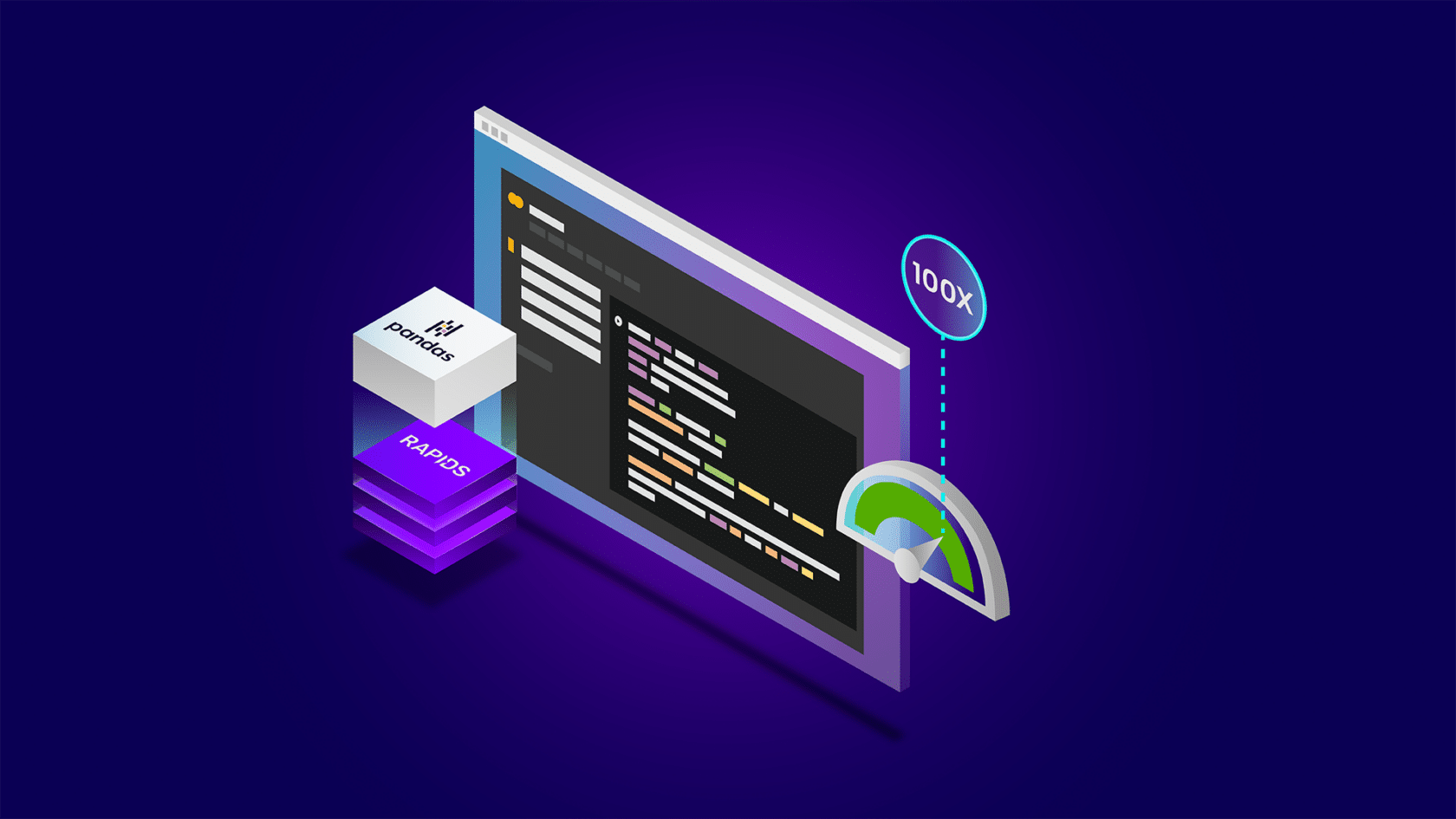

NVIDIA's RAPIDS cuDF library accelerates pandas by up to 100x on RTX hardware, speeding up data processing for data scientists. Thanks to RAPIDS cuDF's GPU-accelerated Python libraries, data scientists can keep their preferred codebase without sacrificing efficiency.
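RAPIDS documents a pandas accelerator mode, `cudf.pandas`, which can be enabled with `%load_ext cudf.pandas` in Jupyter, with `python -m cudf.pandas script.py` on the command line, or with `cudf.pandas.install()` in code before pandas is imported. The sketch below uses the last option. As an assumption about the target environment, it falls back to ordinary CPU pandas when cuDF is not installed, so the same pandas code runs either way. The sales data is illustrative.

```python
# Enable cuDF's pandas accelerator if RAPIDS is installed. This must run before
# pandas is imported; without cuDF, plain CPU pandas is used instead.
try:
    import cudf.pandas
    cudf.pandas.install()
    ACCELERATED = True
except ImportError:
    ACCELERATED = False

import pandas as pd

# Unchanged pandas code: it runs on the GPU where cuDF supports the operation.
df = pd.DataFrame({"store": ["a", "b", "a", "c", "b"], "sales": [10, 20, 30, 40, 50]})
totals = df.groupby("store")["sales"].sum()
print("GPU-accelerated:", ACCELERATED)
print(totals)
```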