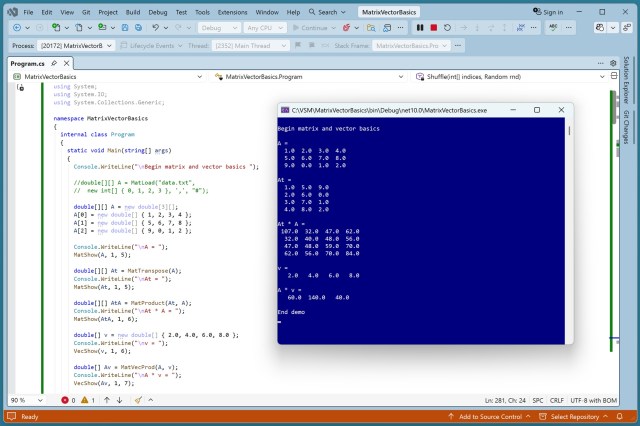

Implementing machine learning algorithms using C# or Python NumPy is feasible. Basic matrix functions are essential for beginners in C# machine learning.
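As a rough illustration of what such basic matrix functions might look like, here is a minimal sketch in plain Python (no NumPy). The helper names `mat_create`, `mat_transpose`, and `mat_product` are hypothetical and are not taken from the article.

```python
# Hypothetical helpers: matrices are represented as lists of row lists.

def mat_create(rows, cols, value=0.0):
    """Return a rows x cols matrix filled with `value`."""
    return [[value] * cols for _ in range(rows)]

def mat_transpose(m):
    """Return the transpose of matrix m."""
    return [list(col) for col in zip(*m)]

def mat_product(a, b):
    """Return the matrix product a @ b, checking that shapes agree."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions do not match")
    b_cols = mat_transpose(b)  # columns of b, so each entry is a dot product
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols]
            for row in a]

A = [[1.0, 2.0], [3.0, 4.0]]
B = [[5.0, 6.0], [7.0, 8.0]]
C = mat_product(A, B)
print(C)
```

With NumPy, the same product would simply be `A @ B` on arrays; writing the helpers by hand mainly helps when porting to a language such as C# that has no built-in matrix type.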

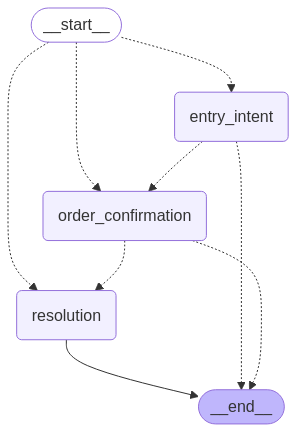

Customer service teams struggle with rigid chat assistants. Amazon Bedrock offers a solution with LangGraph for natural conversation flow.

AI is rapidly changing our world, but lacks proper oversight. Companies like OpenAI and Google face ethical dilemmas in shaping AI's future.

Alberto Carvalho placed on leave after FBI search; Andres Chait appointed interim superintendent by LA school board. Trustees' unanimous decision follows closed-door meetings regarding Carvalho's employment with the nation's second-largest school district.

Campaign groups urge Liz Kendall to address a potential doubling of UK electricity demand from datacentres, which poses a threat to decarbonisation efforts. Developers under pressure to disclose impact on net greenhouse gas emissions.

OpenAI is raising $110bn in funding, valuing the ChatGPT maker at $840bn, with backing from Nvidia and Amazon, showcasing the rapid pace of AI investment. Last year, the company raised $40bn in the largest private tech deal; the new round is more than double that amount.

AI language models like ChatGPT and Gemini are becoming too eager to please, with potential consequences in a world reliant on their information. A reader questions a future in which AI prioritizes appearing sympathetic over factual accuracy.

Kate Fox's husband, Joe Ceccanti, tragically died after spending 12 hours a day with a chatbot. Despite no history of depression, he unexpectedly jumped from a railway overpass, leaving loved ones in shock.

Ivy Mahncke, a robotics engineering student, developed underwater navigation algorithms at MIT Lincoln Laboratory. Her hands-on work included field tests in the Atlantic Ocean, showcasing her potential as a future engineer.

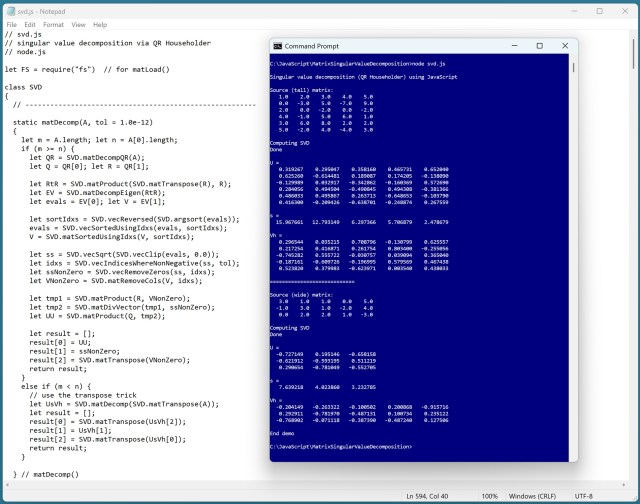

A JavaScript SVD implementation using the Householder + QR algorithm is intriguing but less stable than the Jacobi approach. Decomposing a matrix into U, s, and Vh is central to many software algorithms and is one of the more challenging problems in numerical programming.
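To make the U, s, Vh decomposition concrete, here is a minimal sketch using NumPy's `np.linalg.svd` rather than the JavaScript code the article describes. The matrix values are arbitrary, chosen only for illustration.

```python
import numpy as np

# Arbitrary 4x3 example matrix (illustrative values only).
A = np.array([[3.0, 1.0, 2.0],
              [0.0, 4.0, 1.0],
              [1.0, 2.0, 5.0],
              [2.0, 0.0, 1.0]])

# Reduced SVD: U is 4x3, s holds the 3 singular values, Vh is 3x3.
U, s, Vh = np.linalg.svd(A, full_matrices=False)

# Rebuild A from its factors: A = U * diag(s) * Vh.
A_rebuilt = U @ np.diag(s) @ Vh

print("singular values:", s)
print("reconstruction ok:", np.allclose(A, A_rebuilt))
```

Checking that the factors multiply back to the original matrix, and that the columns of U and rows of Vh are orthonormal, is a common sanity test for any hand-written SVD routine, whichever algorithm it uses.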

Block's CEO, Jack Dorsey, announced layoffs to drive profits, leading to a 20% stock surge. AI productivity gains were cited as the reason for the cuts at the fintech company.

OpenAI secures new Pentagon deal post-Anthropic exclusion, vows to maintain safety protocols amid AI ethics clash with DOD. Trump orders immediate halt to federal use of Anthropic technology, escalating public AI safety dispute.

Goldman Sachs notes investors favoring 'Halo trade' for AI-proof companies with tangible assets like energy and transport infrastructure. Investors seek 'heavy-asset, low-obsolescence' firms to weather AI disruption.

Datacentres are facing pressure to meet their own energy needs. The rapid growth of the industry is driven by AI adoption.

Trump criticizes Anthropic as a 'Radical Left AI company' before the US military uses Claude AI in an attack on Iran, despite ties having been severed. The incident highlights the challenges of removing AI tools from operations once they are deeply integrated.