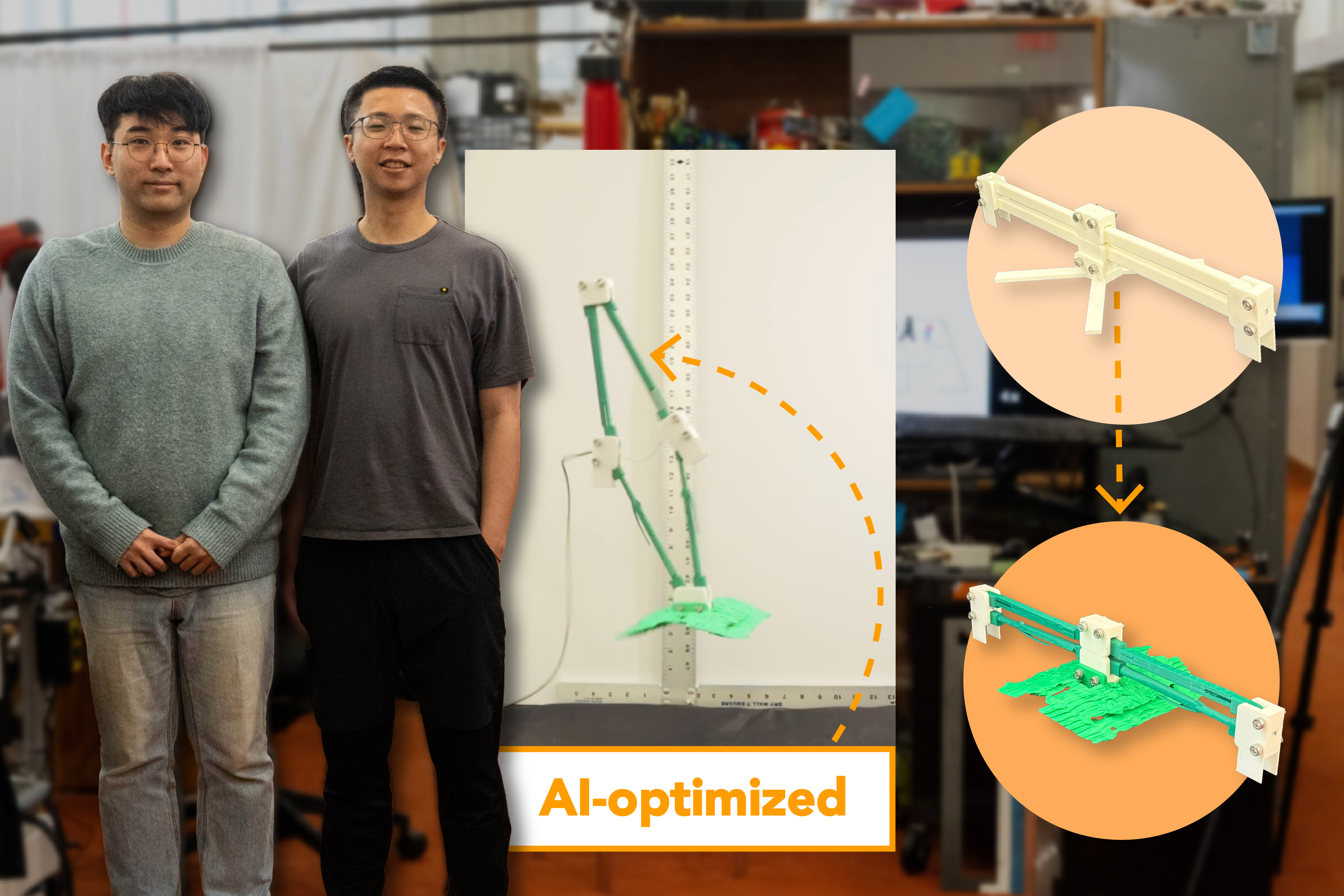

Generative AI models such as OpenAI's DALL-E can spark new designs. MIT's CSAIL used generative AI to design robots that jump about 41% higher than a similar machine the researchers created on their own.

Republican efforts to block AI regulation could add about 1bn tons of CO2 emissions in the US over a decade, Harvard researchers warn. Unrestrained AI growth threatens to worsen the climate crisis, as the technology's massive electricity consumption drives planet-heating emissions.

The NHS plans to carry out DNA tests on all babies and use AI to assess their disease risk, raising concerns about informed consent and privacy. Infants' genetic data could be stored and used by unknown corporations without their parents' knowledge.

MIT and Mass General Brigham team up with Analog Devices Inc. to launch the MIT-MGB Seed Program, aiming to advance human health research with interdisciplinary collaborations. The program will fund joint projects, combining expertise in AI, machine learning, and clinical research to drive transformative changes in patient care.

Google's carbon emissions are up 51%, driven by AI and datacentre growth. The company is struggling to reduce its scope 3 (indirect value-chain) emissions.

M3gan 2.0 shifts from horror to action in a bid for blockbuster status, with mixed results. The sequel retains the original's humor but struggles with pacing and stakes.

Mark Zuckerberg's Meta has won a lawsuit over its use of books to train AI. The authors who sued, including Sarah Silverman, claimed breach of copyright.

Anomaly detection in spacecraft dynamics is crucial for mission success. AWS shows how the Random Cut Forest (RCF) algorithm in Amazon SageMaker AI can detect anomalies in NASA and Blue Origin's lunar landing data.
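To make the random-cut idea concrete, here is a minimal Python sketch of a simplified random cut forest; it is not SageMaker's implementation. It assumes each data point is a tuple of numbers. Each tree picks a cut dimension with probability proportional to that dimension's range, then a uniform cut inside it, and a point's anomaly score comes from how few cuts it takes to isolate it, averaged across trees. SageMaker's RCF uses a different, displacement-based score, so treat this only as an illustration of the partitioning step; all function names and parameters (`num_trees`, `sample_size`, `max_depth`) are hypothetical.

```python
import random
from statistics import mean

# Simplified random cut forest (illustrative only). Scores use average
# isolation depth, NOT SageMaker's displacement-based RCF score.
# All function names here are hypothetical.


def build_tree(points, rng, depth=0, max_depth=12):
    """Recursively split points with random cuts until each leaf is isolated."""
    if len(points) <= 1 or depth >= max_depth:
        return {"leaf": True}
    dims = len(points[0])
    lows = [min(p[d] for p in points) for d in range(dims)]
    highs = [max(p[d] for p in points) for d in range(dims)]
    ranges = [hi - lo for lo, hi in zip(lows, highs)]
    if sum(ranges) == 0:  # identical points cannot be separated
        return {"leaf": True}
    # Random cut tree rule: choose a dimension with probability
    # proportional to its range, then a uniform cut within that range.
    dim = rng.choices(range(dims), weights=ranges)[0]
    cut = rng.uniform(lows[dim], highs[dim])
    left = [p for p in points if p[dim] <= cut]
    right = [p for p in points if p[dim] > cut]
    return {
        "leaf": False,
        "dim": dim,
        "cut": cut,
        "left": build_tree(left, rng, depth + 1, max_depth),
        "right": build_tree(right, rng, depth + 1, max_depth),
    }


def path_depth(tree, point):
    """Number of cuts a point passes through before reaching a leaf."""
    depth = 0
    while not tree["leaf"]:
        tree = tree["left"] if point[tree["dim"]] <= tree["cut"] else tree["right"]
        depth += 1
    return depth


def fit_forest(data, num_trees=50, sample_size=64, seed=0):
    """Build each tree on a random subsample of the data."""
    rng = random.Random(seed)
    size = min(sample_size, len(data))
    return [build_tree(rng.sample(data, size), rng) for _ in range(num_trees)]


def anomaly_score(forest, point):
    """Higher means more anomalous: outliers are isolated after fewer cuts."""
    return 1.0 / (1.0 + mean(path_depth(tree, point) for tree in forest))


if __name__ == "__main__":
    data_rng = random.Random(42)
    normal = [(data_rng.gauss(0, 1), data_rng.gauss(0, 1)) for _ in range(200)]
    forest = fit_forest(normal + [(8.0, 8.0)])
    print("outlier:", anomaly_score(forest, (8.0, 8.0)))
    print("center: ", anomaly_score(forest, (0.0, 0.0)))
```

Subsampling per tree keeps each tree small, which is why a sparse outlier tends to be cut off near the root while points in the dense centre need many more cuts.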

GeForce NOW members get new rewards, games, and discounts, including We Happy Few and Broken Age. SteelSeries launches a mobile controller for cloud gaming, while Premium members get early access to exclusive rewards in The Elder Scrolls Online.

Generative AI is stirring concern in gaming as players reject AI-generated art, affecting both how developers work and how their games are received. Even developers who don't use the technology face backlash, raising questions about the future of AI in video games.

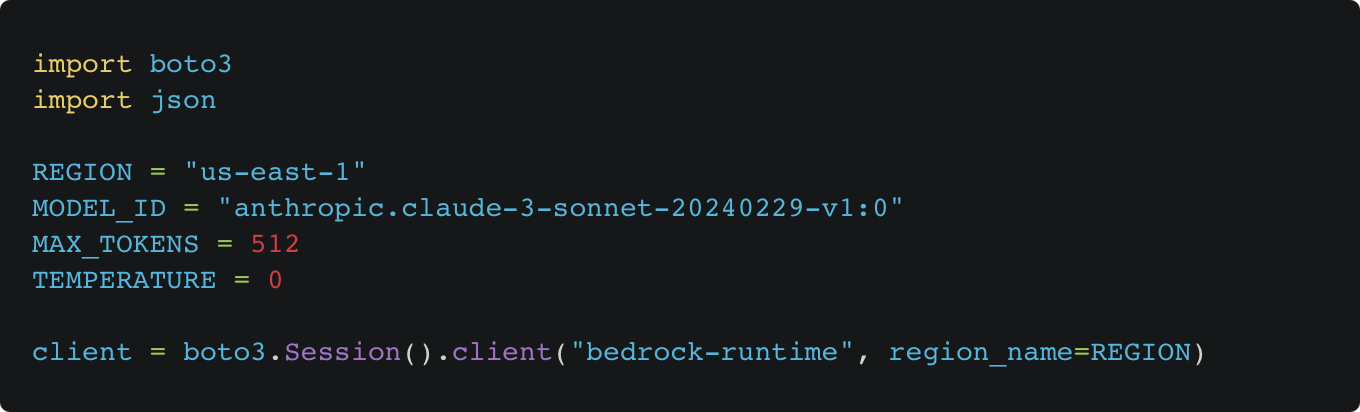

Generative AI is transforming industries by streamlining operations. Structured data makes conversational AI more useful, but LLMs struggle to produce consistent JSON output. Amazon Bedrock addresses this with approaches such as prompt engineering and tool use through the Bedrock Converse API, which help generate structured outputs effectively.
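A minimal sketch of the tool-use approach, assuming boto3's `bedrock-runtime` client and its `converse` method. The helper names, the `record_product` tool, and the product schema are hypothetical. Forcing a named tool through `toolChoice` is only supported by some models, so check your model's documentation. Because the helpers are pure functions, the parsing logic can be checked offline against a response shaped like a Converse `tool_use` reply; the commented lines show where a real call would go.

```python
import json

# Hypothetical schema and tool name, for illustration only.
PRODUCT_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "price": {"type": "number"},
        "in_stock": {"type": "boolean"},
    },
    "required": ["name", "price", "in_stock"],
}


def build_converse_request(model_id, user_text, schema, tool_name="record_product"):
    """Keyword arguments for bedrock-runtime converse() that force one tool call.

    The tool's inputSchema carries the JSON schema, so the model's toolUse
    input is steered toward that shape.
    """
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": user_text}]}],
        "toolConfig": {
            "tools": [{
                "toolSpec": {
                    "name": tool_name,
                    "description": "Record structured product details.",
                    "inputSchema": {"json": schema},
                }
            }],
            # Not every model supports forcing a specific tool.
            "toolChoice": {"tool": {"name": tool_name}},
        },
    }


def extract_tool_input(response, schema):
    """Return the first toolUse input in a Converse response, checking required keys."""
    for block in response["output"]["message"]["content"]:
        if "toolUse" in block:
            data = block["toolUse"]["input"]
            missing = [key for key in schema.get("required", []) if key not in data]
            if missing:
                raise ValueError(f"missing required fields: {missing}")
            return data
    raise ValueError("response contains no toolUse block")


if __name__ == "__main__":
    # A real call needs AWS credentials and model access (model ID is a placeholder):
    #   import boto3
    #   client = boto3.client("bedrock-runtime")
    #   response = client.converse(**build_converse_request("<model-id>", "...", PRODUCT_SCHEMA))
    # Offline check with a response shaped like a Converse tool_use reply:
    fake_response = {
        "output": {"message": {"role": "assistant", "content": [
            {"toolUse": {"toolUseId": "t1", "name": "record_product",
                         "input": {"name": "Desk lamp", "price": 24.99, "in_stock": True}}},
        ]}},
        "stopReason": "tool_use",
    }
    print(json.dumps(extract_tool_input(fake_response, PRODUCT_SCHEMA)))
```

The required-key check is deliberately shallow; a full JSON Schema validator would also catch wrong types.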

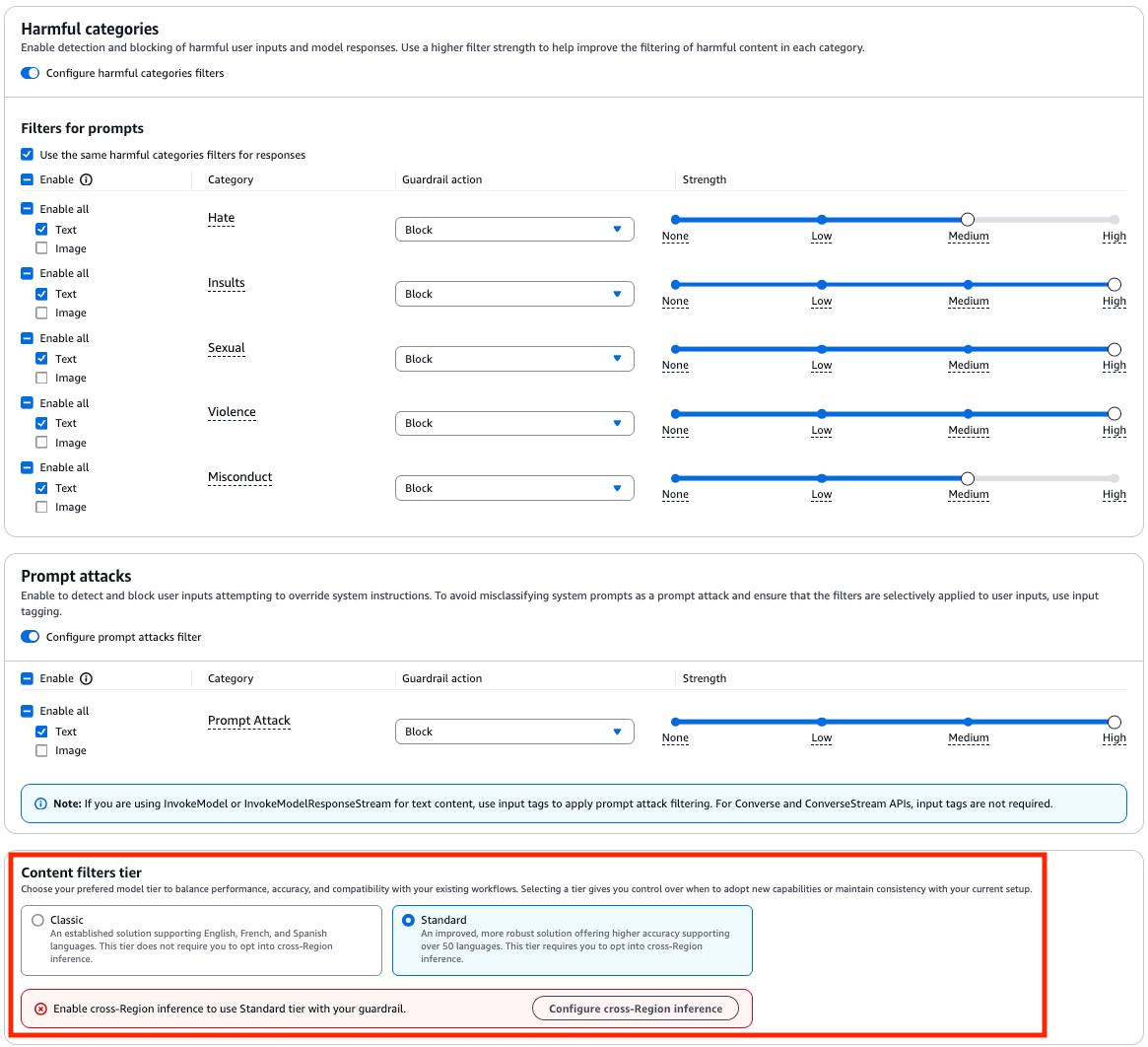

Amazon Bedrock Guardrails offers configurable safeguards for building trusted generative AI applications, including content filters and contextual grounding checks. The introduction of safeguard tiers allows organizations to tailor protection levels based on specific needs, ensuring responsible AI principles are upheld without sacrificing performance.
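As a hedged illustration of applying a guardrail at runtime, the sketch below prepares keyword arguments for boto3's `bedrock-runtime` `apply_guardrail` call and decides what text to pass on based on the response's `action` field. The guardrail ID and version are placeholders for a guardrail you have already configured (filters, grounding checks, and tier); the helper names are hypothetical, and the sketch does not show how safeguard tiers themselves are configured.

```python
# Hypothetical helpers around the ApplyGuardrail runtime API.


def build_apply_guardrail_request(guardrail_id, version, text, source="INPUT"):
    """Keyword arguments for bedrock-runtime apply_guardrail().

    source is "INPUT" for user prompts and "OUTPUT" for model responses.
    """
    if source not in ("INPUT", "OUTPUT"):
        raise ValueError("source must be 'INPUT' or 'OUTPUT'")
    return {
        "guardrailIdentifier": guardrail_id,
        "guardrailVersion": version,
        "source": source,
        "content": [{"text": {"text": text}}],
    }


def resolve_guardrail_text(response, original_text):
    """Pass the text through unless the guardrail intervened.

    When it intervenes, use the guardrail's own output (for example the
    configured blocked message) instead of the original text.
    """
    if response.get("action") == "GUARDRAIL_INTERVENED":
        outputs = response.get("outputs", [])
        return outputs[0]["text"] if outputs else ""
    return original_text


if __name__ == "__main__":
    # Real usage (IDs are placeholders for a guardrail you have created):
    #   import boto3
    #   client = boto3.client("bedrock-runtime")
    #   request = build_apply_guardrail_request("<guardrail-id>", "1", user_text)
    #   safe_text = resolve_guardrail_text(client.apply_guardrail(**request), user_text)
    print(build_apply_guardrail_request("<guardrail-id>", "1", "Hello"))
```

Calling the same helper with `source="OUTPUT"` on the model's reply applies the checks on the response side as well.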

Article: 'Matrix Inverse Using Cayley-Hamilton with C#' in Visual Studio Magazine explains the challenges and advantages of computing a matrix inverse with the Cayley-Hamilton technique. The technique is simple but has limitations for larger matrices, yet it allows customization and offers distinct advantages in specific scenarios.
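The Cayley-Hamilton theorem says a square matrix A satisfies its own characteristic polynomial p(λ) = λ^n + c_{n-1}λ^{n-1} + ... + c_1λ + c_0, so A^{-1} = -(A^{n-1} + c_{n-1}A^{n-2} + ... + c_1 I) / c_0 whenever c_0 is nonzero. Here is a minimal Python sketch (not the article's C# code) that gets the coefficients with the Faddeev-LeVerrier recursion; the article may compute them differently, and the function names are hypothetical. It accepts `fractions.Fraction` entries for exact arithmetic.

```python
from fractions import Fraction


def matmul(X, Y):
    """Plain list-of-lists matrix product."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def inverse_cayley_hamilton(A):
    """Invert a square matrix via Cayley-Hamilton.

    Faddeev-LeVerrier: M_0 = 0, c_n = 1, and for k = 1..n
      M_k = A M_{k-1} + c_{n-k+1} I,   c_{n-k} = -tr(A M_k) / k.
    Then A^{-1} = -M_n / c_0.
    """
    n = len(A)
    I = identity(n)
    M = [[0] * n for _ in range(n)]  # M_0 = 0
    c = 1                            # c_n = 1 (monic polynomial)
    for k in range(1, n + 1):
        AM_prev = matmul(A, M)
        M = [[AM_prev[i][j] + c * I[i][j] for j in range(n)] for i in range(n)]
        AM = matmul(A, M)
        c = -sum(AM[i][i] for i in range(n)) / k  # now holds c_{n-k}
    # c is now c_0 = (-1)^n det(A); M is M_n.
    if c == 0:
        raise ValueError("matrix is singular (c_0 = 0)")
    return [[-M[i][j] / c for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    A = [[Fraction(4), Fraction(7)], [Fraction(2), Fraction(6)]]
    print(inverse_cayley_hamilton(A))
```

Each step costs two matrix products, so the whole computation is roughly O(n^4), and with floating-point entries the recursion can lose accuracy as n grows; both are consistent with the article's note that the technique suits smaller matrices.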

Liam Booth-Smith, former chief of staff to Rishi Sunak, has landed a role at Anthropic, an AI company that collaborates with the UK government. Booth-Smith encountered Anthropic while working at No 10 and now joins as its "external affairs" chief.

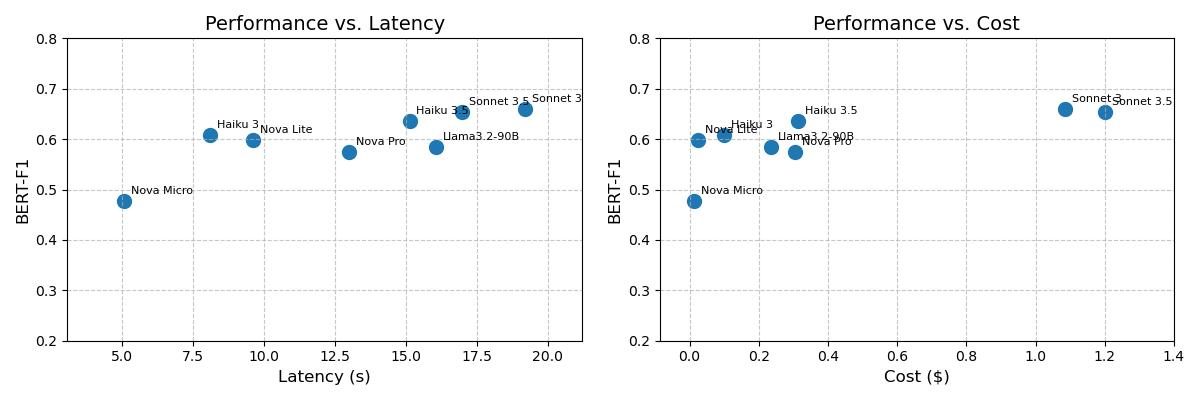

Amazon's vast operations require risk managers to oversee claims that average 75 documents per case. In response, an internal Amazon technology team developed an AI-powered claim-summarization solution that uses Amazon Nova foundation models to keep cost and latency down.
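One generic way to summarize a case with dozens of documents is a map-reduce pattern; it is assumed here for illustration, not taken from Amazon's solution. Documents are grouped into size-limited batches, each batch is summarized, and the partial summaries are summarized once more. The `summarize` callable stands in for a foundation-model call (for example, an Amazon Nova model via Bedrock); it, the helper names, and the 20,000-character budget are hypothetical.

```python
from typing import Callable, List


def batch_documents(docs: List[str], max_chars: int) -> List[List[str]]:
    """Group documents into batches whose combined length stays within max_chars.

    A single document longer than max_chars still gets its own batch.
    """
    batches, current, size = [], [], 0
    for doc in docs:
        if current and size + len(doc) > max_chars:
            batches.append(current)
            current, size = [], 0
        current.append(doc)
        size += len(doc)
    if current:
        batches.append(current)
    return batches


def summarize_claim(docs: List[str], summarize: Callable[[str], str],
                    max_chars: int = 20_000) -> str:
    """Map: summarize each batch. Reduce: summarize the partial summaries."""
    partials = [summarize("\n\n".join(batch)) for batch in batch_documents(docs, max_chars)]
    if len(partials) == 1:
        return partials[0]
    return summarize("\n\n".join(partials))


if __name__ == "__main__":
    # Stub model call: truncate text, standing in for a real LLM request.
    stub = lambda text: text[:40]
    case_docs = [f"Document {i}: details of the claim." for i in range(75)]
    print(summarize_claim(case_docs, stub, max_chars=500))
```

Batching trades the number of model calls against prompt size, which is where cost and latency are decided; if the combined partial summaries could exceed the budget, the reduce step would itself need batching.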