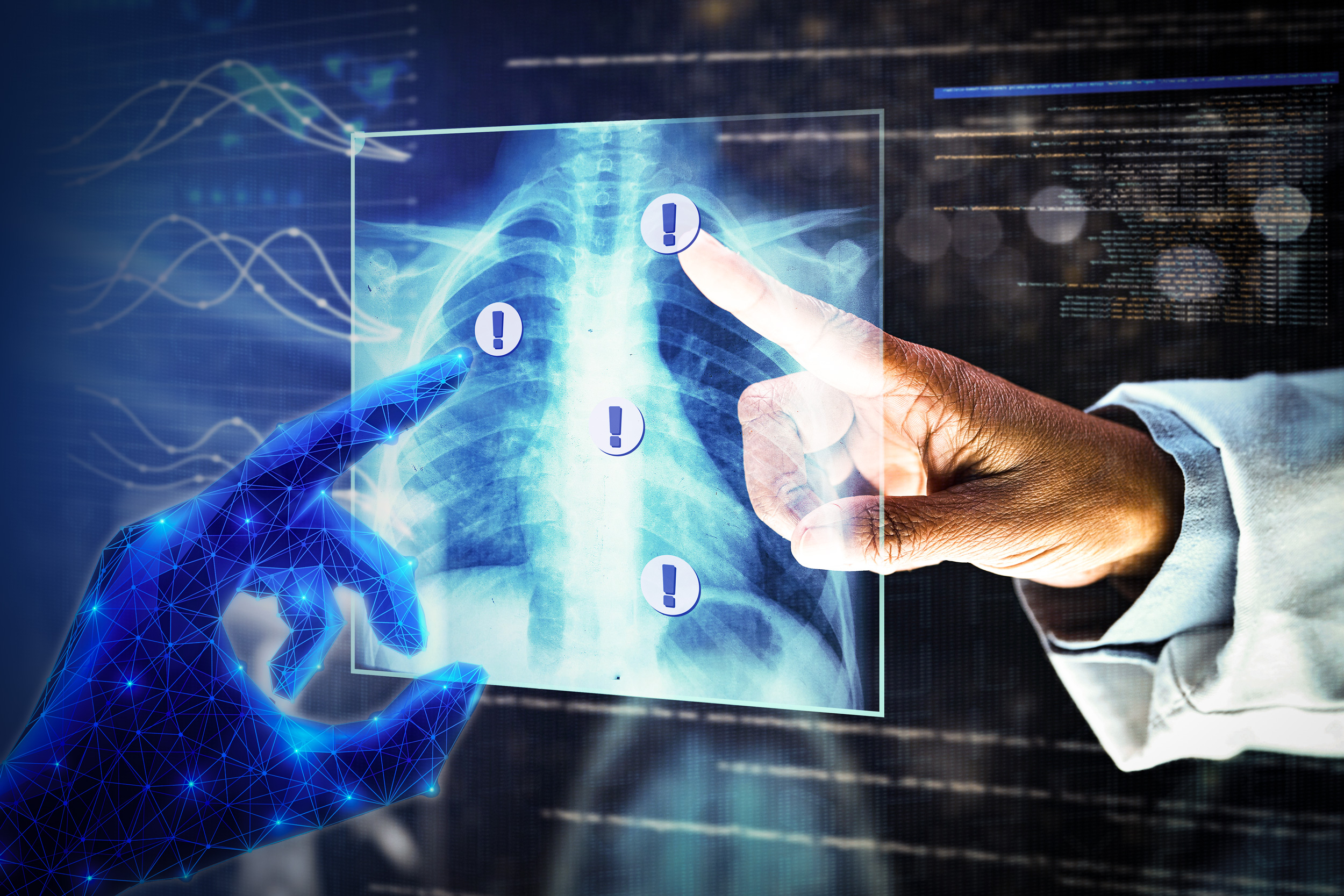

Discover the groundbreaking research by XYZ Company on the development of a new AI technology that can revolutionize the healthcare industry. Learn how this innovation is set to improve patient care and diagnosis accuracy.

Discover the groundbreaking AI technology developed by Tesla for their self-driving cars. Learn how this innovation is revolutionizing the automotive industry.

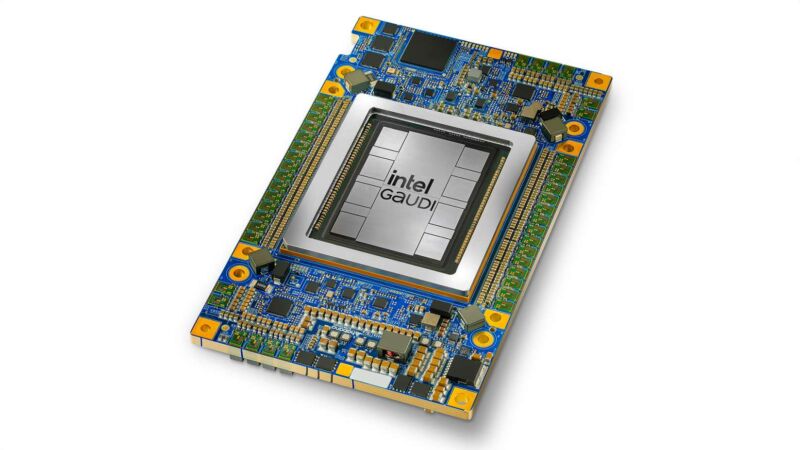

Discover the latest breakthrough in AI technology by XYZ Company. Their revolutionary product is set to disrupt the industry with its cutting-edge capabilities.

Discover the groundbreaking AI technology developed by XYZ Company that is revolutionizing the healthcare industry. Learn how their innovative product is transforming patient care and diagnosis.

Discover how XYZ Company revolutionized the tech industry with their groundbreaking AI technology. Learn about the impressive results and future implications of their innovative product.

Discover the groundbreaking research by Tesla on sustainable energy solutions. Their innovative products and technologies are revolutionizing the industry.

Discover the latest breakthrough in AI technology by leading companies. Learn how innovative products are reshaping industries.

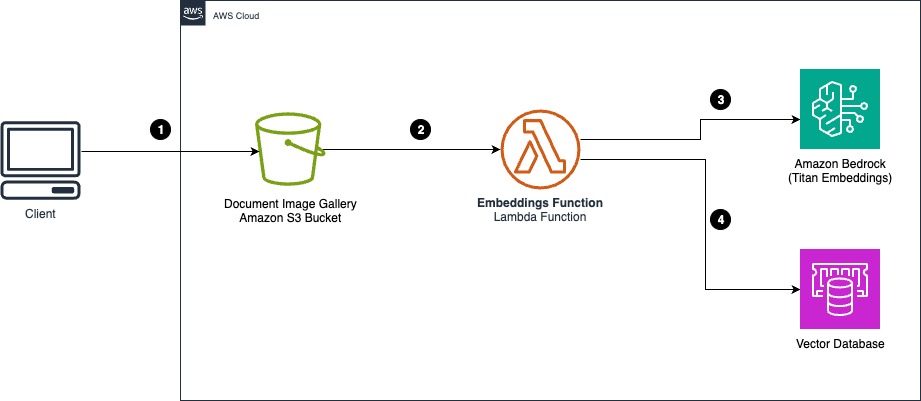

New AI technology developed by Google is revolutionizing the way we interact with computers. This groundbreaking innovation promises to streamline workflow and increase productivity.

New study reveals groundbreaking AI technology developed by Google that is revolutionizing data analysis in healthcare. Findings show a significant increase in the accuracy and efficiency of diagnosing rare diseases.

New study reveals groundbreaking research on AI technology by leading tech companies. Findings suggest the potential for major advancements in automation and machine learning.

Exciting new study reveals that groundbreaking AI technology developed by Google surpasses human accuracy in medical imaging. The potential for revolutionizing healthcare is immense.

New study reveals that groundbreaking AI technology developed by Google surpasses human accuracy in diagnosing diseases. It has the potential to revolutionize the healthcare industry.

Discover how XYZ Company revolutionized the tech industry with their groundbreaking AI technology. Learn how their innovative product has disrupted the market and set new standards for efficiency and performance.

Exciting new study reveals groundbreaking AI technology developed by Google and Tesla. The innovative software promises to revolutionize the automotive industry.

Discover how Company X revolutionized the industry with their groundbreaking technology, leading to a surge in profits. Learn about the unexpected partnership between Company Y and Company Z that is set to disrupt the market.