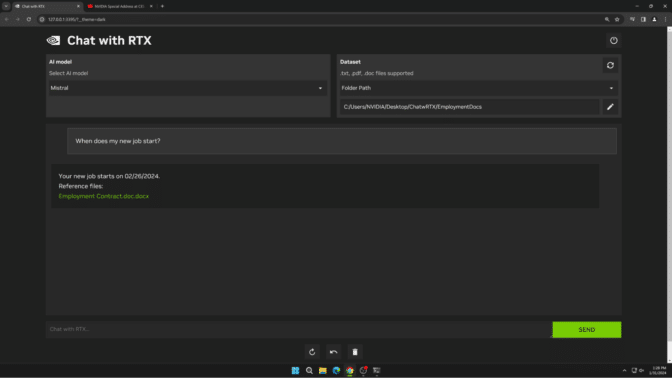

NVIDIA's GeForce RTX platform accelerates AI computing for over 100 million users and 500 applications. Generative AI opens new possibilities for content creation and automation.

A Guardian journalist uncovers a mysterious AI company wreaking havoc with deepfakes, leaving authorities puzzled. Is AI blurring the line between truth and deception, making it harder to distinguish reality from fiction?

Microsoft AI engineer Shane Jones warns management about a lack of safeguards in the company's AI image generator, highlighting its potential to produce harmful and offensive content. After repeated attempts led to no action, Jones escalates his concerns to the FTC and Microsoft's board of directors.

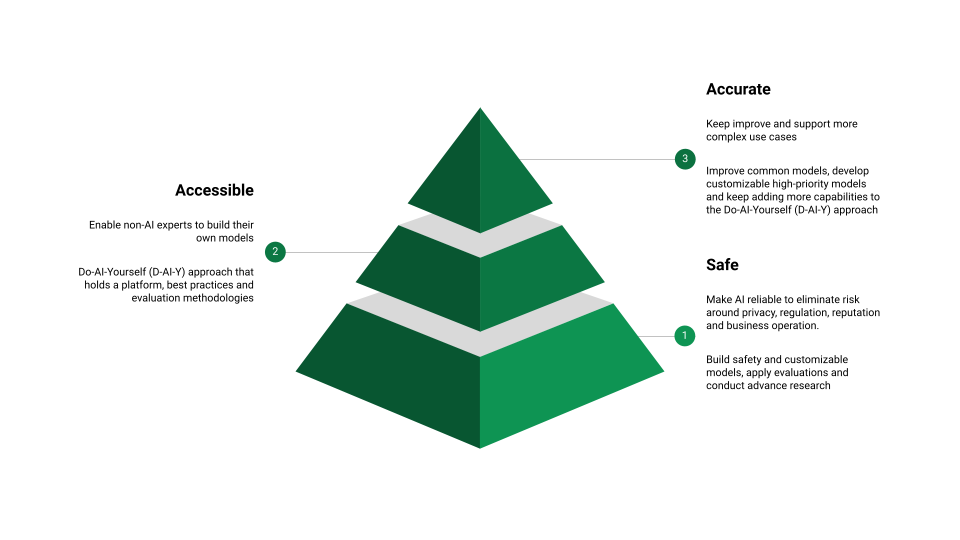

AI democratization allows non-experts to build AI solutions quickly. The Wix data science team pioneers impactful AI features while ensuring safety and improving accessibility for AI-driven products.

Researchers suggest Australian media companies may seek compensation from Meta for its use of online news in generative AI. Meta downplays the value of news, stating that only 3% of Facebook usage in Australia is news-related.

Environmental groups warn that AI won't solve the climate crisis and may instead increase energy use and spread disinformation. Big tech touts AI for tracking deforestation, pollution, and extreme weather, but critics raise concerns about its potential negative impact.

OpenAI counters Elon Musk's lawsuit by publishing emails that show Musk's support for creating a for-profit unit. The ChatGPT maker also shares correspondence suggesting a merger with Tesla.

Educators teaching grades 3-12 are using Writable, a ChatGPT-powered grading tool, to streamline grading and save time. Teachers review the AI-generated feedback before it reaches students, and the tool offers actionable suggestions and unique writing prompts.

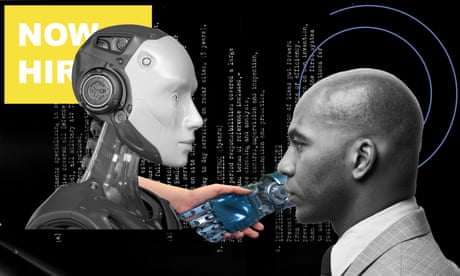

Employers are using AI to conduct job interviews, catching some candidates off guard. One candidate, Ty, describes an encounter with a Siri-like AI recruiter that illustrates the growing trend.

A practical guide to causal language modeling (CausalLM) with Hugging Face. Learn how decoder-only models predict each new token from the tokens that precede it.
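To make "predict each new token from the previous ones" concrete, here is a minimal, library-free sketch. It is not the Hugging Face API: `causal_mask`, `train_bigram`, and `greedy_generate` are hypothetical helpers written for illustration. The mask shows which earlier positions a decoder-only model is allowed to attend to, and the generation loop shows autoregressive decoding, where each predicted token is appended and fed back in. As a simplifying assumption, the toy "model" is a bigram counter that only looks at the last token, whereas a real decoder-only transformer conditions on the whole prefix.

```python
from collections import Counter, defaultdict


def causal_mask(n: int) -> list[list[bool]]:
    """mask[i][j] is True when position i may attend to position j, i.e. j <= i.

    This lower-triangular pattern is what makes a decoder-only model "causal":
    no position can see tokens that come after it.
    """
    return [[j <= i for j in range(n)] for i in range(n)]


def train_bigram(corpus: list[list[str]]) -> dict[str, Counter]:
    """Hypothetical toy stand-in for a trained model: counts next-token frequencies."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        for prev, nxt in zip(sentence, sentence[1:]):
            counts[prev][nxt] += 1
    return counts


def greedy_generate(model: dict[str, Counter], prompt: list[str],
                    max_new_tokens: int = 5, eos: str = "<eos>") -> list[str]:
    """Autoregressive greedy decoding: pick the most likely next token, append, repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        candidates = model.get(tokens[-1])  # .get avoids creating empty entries
        if not candidates:
            break  # the toy model has never seen this token followed by anything
        next_token = candidates.most_common(1)[0][0]
        if next_token == eos:
            break  # stop at the end-of-sequence marker
        tokens.append(next_token)
    return tokens


corpus = [
    ["the", "cat", "sat", "<eos>"],
    ["the", "cat", "ran", "<eos>"],
    ["the", "cat", "sat", "<eos>"],
]
model = train_bigram(corpus)
print(causal_mask(3))
print(greedy_generate(model, ["the"]))
```

Greedy decoding is used here only because it is the simplest strategy; real generation often samples from the predicted distribution instead.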

An article highlights the rise of vector databases as companies integrate AI, focusing on Retrieval-Augmented Generation (RAG) systems. Companies store text embeddings in vector databases for efficient similarity search, which raises privacy concerns about potential data breaches and unauthorized use.
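The retrieval step of a RAG system can be sketched in a few lines. This is an illustrative in-memory stand-in for a vector database, not any product's API: `InMemoryVectorStore`, `cosine`, and `build_prompt` are hypothetical names, and the three-dimensional embeddings are hand-written toy values rather than output from a real embedding model. Note that the store keeps the original text next to each embedding so it can be pasted into the prompt, which is one reason a breach of such a store can expose sensitive data.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical toy vector store: brute-force search over (id, text, embedding)."""

    def __init__(self) -> None:
        self._items: list[tuple[str, str, list[float]]] = []

    def add(self, doc_id: str, text: str, embedding: list[float]) -> None:
        # Raw text is stored alongside the vector so it can be returned later.
        self._items.append((doc_id, text, embedding))

    def search(self, query: list[float], k: int = 2) -> list[tuple[str, str]]:
        """Return the k (doc_id, text) pairs most similar to the query embedding."""
        scored = [(cosine(query, emb), doc_id, text) for doc_id, text, emb in self._items]
        scored.sort(key=lambda item: item[0], reverse=True)
        return [(doc_id, text) for _, doc_id, text in scored[:k]]


def build_prompt(question: str, retrieved: list[tuple[str, str]]) -> str:
    """Augment the question with retrieved passages before sending it to an LLM."""
    context = "\n".join(f"- {text}" for _, text in retrieved)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = InMemoryVectorStore()
store.add("refunds", "Refunds are issued within 14 days.", [0.9, 0.1, 0.0])
store.add("shipping", "Orders ship in 2 business days.", [0.1, 0.9, 0.1])
store.add("privacy", "Customer data is encrypted at rest.", [0.0, 0.2, 0.9])

hits = store.search([0.8, 0.2, 0.0], k=2)  # toy embedding of a refund question
print(build_prompt("How long do refunds take?", hits))
```

Production vector databases replace the brute-force loop with approximate nearest-neighbor indexes, but the retrieve-then-augment flow is the same.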

The nominees for Best Visual Effects at the 96th Academy Awards push boundaries with cutting-edge NVIDIA technology. Godzilla: Minus One reinvents the monster movie, while Guardians of the Galaxy Vol. 3 blends humor with breathtaking cosmic visuals.

Anthropic prompt engineer Alex Albert sparked controversy in the AI community with a tweet about Claude 3 Opus, a new large language model. According to the tweet, Opus showed possible "metacognition" during internal testing, raising questions about AI self-awareness.

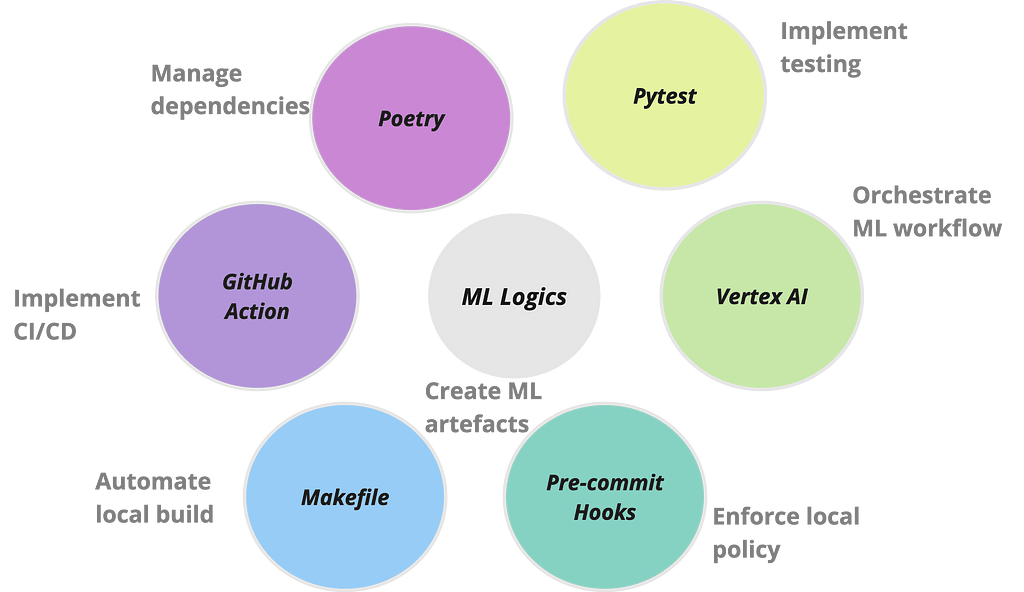

A guide to building scalable Kubeflow ML pipelines on Vertex AI by "jailbreaking" Google's prebuilt containers. The MLOps platform described simplifies the ML lifecycle with a modular architecture and integration with Google Vertex AI.

An AI mishap in a QSO ad produces bizarrely large and numerous fingers, drawing ridicule from creative workers. The pictured couple's distorted appearance and mismatched outfits raise eyebrows and questions about AI's impact on advertising.