AI-generated deepfake videos of doctors are being used to promote unproven supplements on TikTok and other platforms, spreading health misinformation. Full Fact uncovered hundreds of videos directing viewers to Wellness Nest, a US-based supplements company.

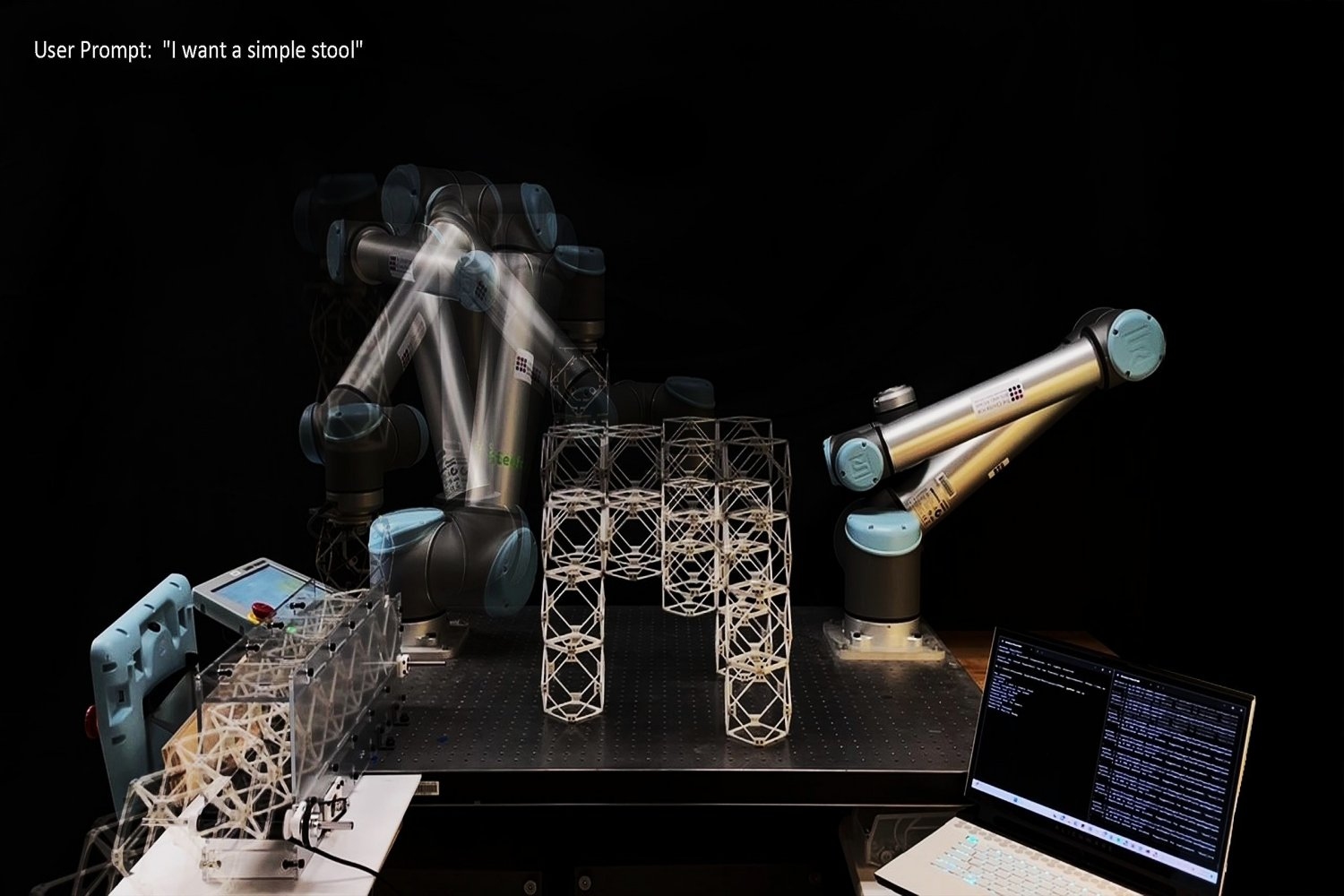

MIT researchers have developed a speech-to-reality system allowing a robotic arm to create objects from spoken prompts in minutes. This innovative technology combines natural language processing, 3D generative AI, and robotic assembly to make design and manufacturing accessible to all.

Fears are growing that the AI bubble could burst, with tech giants like Alphabet, Amazon, and Microsoft heavily invested. What will happen if the Magnificent Seven companies' AI investments collapse?

Pickle Robot Company's one-armed robots autonomously unload trailers, aiming to reduce warehouse injuries and improve efficiency. Founders Meyer and Eisenstein transitioned from consulting to robotics, using AI and machine learning to revolutionize supply chain automation.

Robots can make humans laugh by falling over, but a new project explores whether AI can make them genuinely funny. ChatGPT's jokes may be corny, but researchers are investigating whether robots could master stand-up comedy.

Dave Stewart of Eurythmics urges creatives to license music to AI platforms for new creations. AI analyzes songs to generate unique tracks based on user prompts.

The Nevada desert is now home to massive datacenters run by companies such as Switch, Google, Microsoft, and Apple. Tesla's gigafactory is also part of the growing business park.

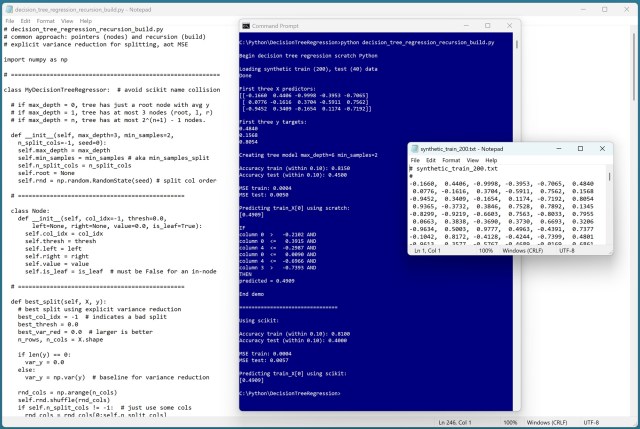

Implemented decision tree regression in Python, refactored the code for readability, and created a nested Node class for better structure. Simplified the parameters and eliminated recursion to make the MyDecisionTreeRegressor class more user-friendly.
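The structure described above can be sketched roughly as follows. This is an illustrative reconstruction, not the original author's code: only the names `MyDecisionTreeRegressor` and `Node` come from the summary, while the `max_depth` and `min_samples_split` parameters, the squared-error split criterion, and the explicit stack used in place of recursion are all assumptions.

```python
class MyDecisionTreeRegressor:
    """Minimal regression tree sketch (assumed design, pure Python)."""

    class Node:
        """Nested node class; a node is a leaf while `feature` is None."""

        def __init__(self, value):
            self.value = value      # mean target of the samples in this node
            self.feature = None     # index of the feature used to split
            self.threshold = None   # samples with x[feature] <= threshold go left
            self.left = None
            self.right = None

    def __init__(self, max_depth=3, min_samples_split=2):
        # Both hyperparameters are assumptions made for this sketch.
        self.max_depth = max_depth
        self.min_samples_split = min_samples_split
        self.root = None

    @staticmethod
    def _sse(values):
        """Sum of squared errors around the mean."""
        mean = sum(values) / len(values)
        return sum((v - mean) ** 2 for v in values)

    def _best_split(self, X, y, idx):
        """Return (sse, feature, threshold, left_idx, right_idx) or None."""
        best = None
        for f in range(len(X[0])):
            distinct = sorted({X[i][f] for i in idx})
            # Using each value except the largest as a threshold keeps
            # both sides of the split non-empty.
            for t in distinct[:-1]:
                left = [i for i in idx if X[i][f] <= t]
                right = [i for i in idx if X[i][f] > t]
                sse = (self._sse([y[i] for i in left])
                       + self._sse([y[i] for i in right]))
                if best is None or sse < best[0]:
                    best = (sse, f, t, left, right)
        return best

    def fit(self, X, y):
        self.root = self.Node(sum(y) / len(y))
        # An explicit stack replaces the usual recursive tree builder.
        stack = [(self.root, list(range(len(X))), 0)]
        while stack:
            node, idx, depth = stack.pop()
            if depth >= self.max_depth or len(idx) < self.min_samples_split:
                continue
            split = self._best_split(X, y, idx)
            if split is None:       # every feature is constant in this node
                continue
            _, node.feature, node.threshold, left, right = split
            node.left = self.Node(sum(y[i] for i in left) / len(left))
            node.right = self.Node(sum(y[i] for i in right) / len(right))
            stack.append((node.left, left, depth + 1))
            stack.append((node.right, right, depth + 1))
        return self

    def predict(self, X):
        predictions = []
        for x in X:
            node = self.root
            # Walk down iteratively until a leaf is reached.
            while node.feature is not None:
                if x[node.feature] <= node.threshold:
                    node = node.left
                else:
                    node = node.right
            predictions.append(node.value)
        return predictions
```

Calling `MyDecisionTreeRegressor(max_depth=2).fit(X, y).predict(X_new)` with lists of feature rows is the intended usage. The explicit stack avoids Python's recursion limit on deep trees, at the cost of slightly more bookkeeping.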

Water demand from datacentres in Sydney is projected to surpass Canberra's drinking water use within a decade, raising concerns amid Australia's AI boom. Experts warn of strain on water resources as investment in datacentres surges in Sydney and Melbourne.

Google's Nano Banana Pro AI tool has been criticized for generating 'white saviour' images featuring Black children in Africa in response to humanitarian aid prompts. Accusations of racial bias, and the appearance of charity logos in the visuals, have sparked controversy.

AI chatbot responses are persuasive but less accurate, according to a UK security report. The study, with 80,000 participants, reveals chatbots' persuasive power.

The US has earmarked billions for critical minerals for military use, diverting resources from sustainable technologies. The Pentagon is stockpiling minerals needed for climate tech, fueling a global arms race.

MIT researchers developed a dynamic approach for large language models (LLMs) to allocate computational effort based on question difficulty, improving efficiency and accuracy. This method allows smaller LLMs to outperform larger models on complex problems, potentially reducing energy consumption and expanding applications.
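To make the idea of difficulty-based compute allocation concrete, here is a minimal sketch. It is not the MIT method: it assumes a hypothetical `estimate_difficulty` callable that returns a score in [0, 1] and a hypothetical `generate` callable that samples one answer, and it swaps in simple majority voting over a variable number of samples as the way extra compute gets spent.

```python
from collections import Counter
from typing import Callable, Tuple


def allocate_budget(difficulty: float,
                    min_samples: int = 1,
                    max_samples: int = 16) -> int:
    """Map a difficulty score in [0, 1] to a number of samples (assumed rule)."""
    d = min(max(difficulty, 0.0), 1.0)  # clamp out-of-range scores
    return min_samples + round(d * (max_samples - min_samples))


def answer_adaptively(question: str,
                      estimate_difficulty: Callable[[str], float],
                      generate: Callable[[str], str],
                      min_samples: int = 1,
                      max_samples: int = 16) -> Tuple[str, int]:
    """Spend more samples on harder questions, then take a majority vote.

    Both callables are hypothetical stand-ins for a difficulty estimator
    and an LLM sampling call; neither refers to a real API.
    """
    n = allocate_budget(estimate_difficulty(question), min_samples, max_samples)
    candidates = [generate(question) for _ in range(n)]
    answer, _count = Counter(candidates).most_common(1)[0]
    return answer, n
```

Easy questions get a single sample while hard ones get up to `max_samples`, so the average cost tracks the mix of questions instead of the worst case.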

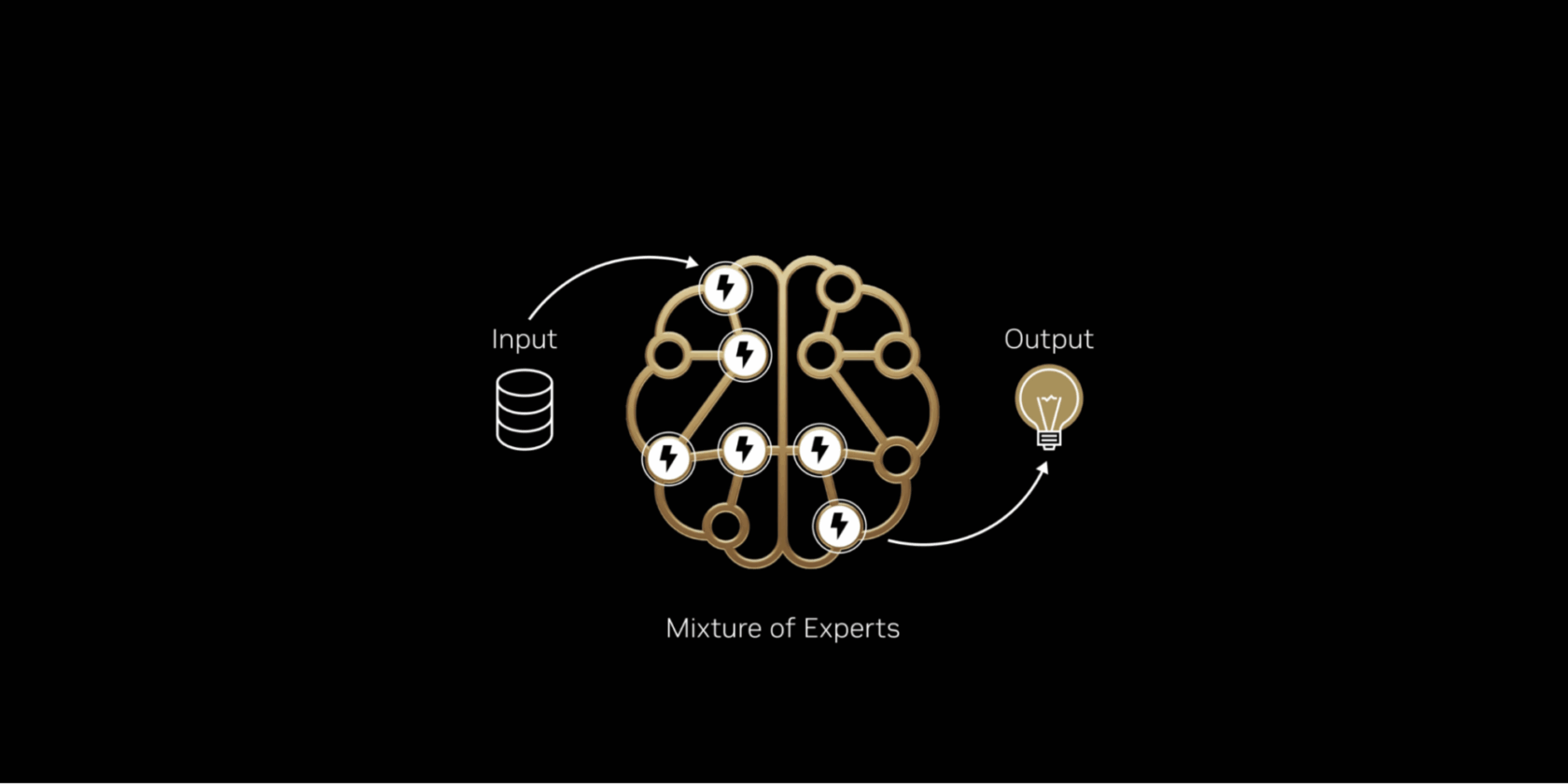

The top 10 most intelligent open-source models use a mixture-of-experts (MoE) architecture and run 10x faster on NVIDIA GB200 NVL72. MoE models like Kimi K2 Thinking and Mistral Large 3 deliver breakthrough performance on NVIDIA systems, and MoE is becoming the standard for frontier AI models.
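For readers unfamiliar with MoE, the core mechanism is a router that sends each input to only a few experts. The sketch below shows generic top-k routing in pure Python; it does not describe how Kimi K2 Thinking, Mistral Large 3, or any NVIDIA system is implemented, and the names `moe_forward`, `gate_weights`, and `experts` are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector,
                gate_weights: Sequence[Sequence[float]],
                experts: Sequence[Callable[[Vector], Vector]],
                top_k: int = 2) -> Tuple[Vector, List[int]]:
    """Route `x` to the `top_k` highest-scoring experts and mix their outputs.

    `gate_weights` holds one row per expert (a linear router, assumed here).
    Each expert is assumed to return a vector of the same length as `x`.
    """
    # Router score for each expert: dot product of its gate row with x.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)),
                    key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize the router scores over the chosen experts only.
    weights = softmax([logits[i] for i in chosen])
    output = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        expert_out = experts[i](x)
        output = [o + w * v for o, v in zip(output, expert_out)]
    return output, chosen
```

Because only `top_k` experts run per input, compute per token stays roughly constant even as the total number of experts, and therefore parameters, grows.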

AI-produced music is taking over charts, with fake bands and impersonations causing controversy. Record labels are fighting back against AI-generated tracks.