Kimaya Lecamwasam, a computational neuroscientist and musician, combines her love for music and neuroscience to explore the emotional impact of music on mental health, leading to innovative tools for well-being at the MIT Media Lab.

Project Blue, a massive datacenter in the Sonoran Desert, sparks concerns over water and energy usage, becoming the largest development in Pima County.

Quebec man fined $3,500 for using AI hallucinations in his legal defense; judge warns of integrity threat. Legal saga includes 'hijacked planes' and Interpol red alerts.

AI's rapid advancement in the workplace may create a "permanent underclass," predicts former OpenAI researcher Leopold Aschenbrenner. By 2027, AI could surpass human capacity, leading to job displacement for everyone not on the AI train.

MIT Music and Theater Arts honors late Professor Emerita Jeanne Shapiro Bamberger, a pioneer in using computers to teach music. Bamberger's legacy includes innovative computer languages and influential contributions to music education.

World Food Day highlights global hunger issues. MIT researcher Aouad's project aims to optimize food assistance programs in India.

AI's influence on society prompts debate on regulation vs. industry growth, with concerns over democracy and market manipulation. The constant influx of new AI products raises questions about industry dominance and societal impact.

MIT engineers have developed SpectroGen, an AI tool that accelerates material scanning, matching physical results with 99% accuracy. This innovation could revolutionize quality control in industries by generating spectral data a thousand times faster than traditional methods.

AI in UK schools is causing concerns as 1 in 4 students find it 'too easy' to find answers, limiting creativity and the learning of new skills. Oxford University Press research reveals 80% of students aged 13-18 regularly use AI for schoolwork, with only 2% not using it at all.

ChatGPT's latest version raises concerns over harmful responses to prompts about suicide, self-harm, and eating disorders, with GPT-5 giving more harmful answers than its predecessor GPT-4o. Campaigners highlight the need for improvement in AI safety measures to protect vulnerable individuals seeking support.

Watershed Bio's platform helps researchers analyze complex biological data without needing software engineering skills. Co-founder Jonathan Wang aims to unlock insights faster for scientists in academia and industry using cutting-edge tools on the cloud-based platform.

OpenAI to relax restrictions on ChatGPT, allowing customized personalities and erotic content for verified adult users. New version in December will feature more human-like responses and age-gating for adult content.

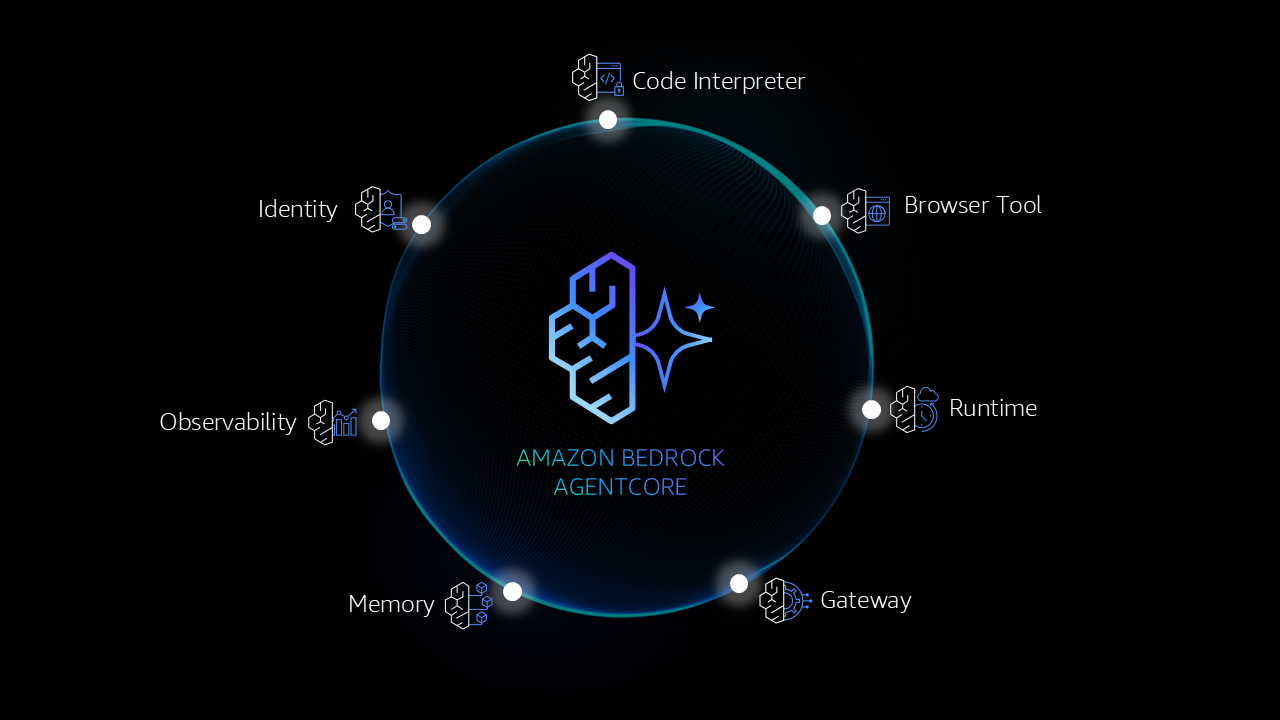

Amazon Bedrock AgentCore is a new platform that helps developers quickly transition AI agents from pilots to full-scale production. With support from major companies like Clearwater Analytics and Sony, AgentCore offers a secure, scalable, and reliable foundation for building, deploying, and operating agents with ease.

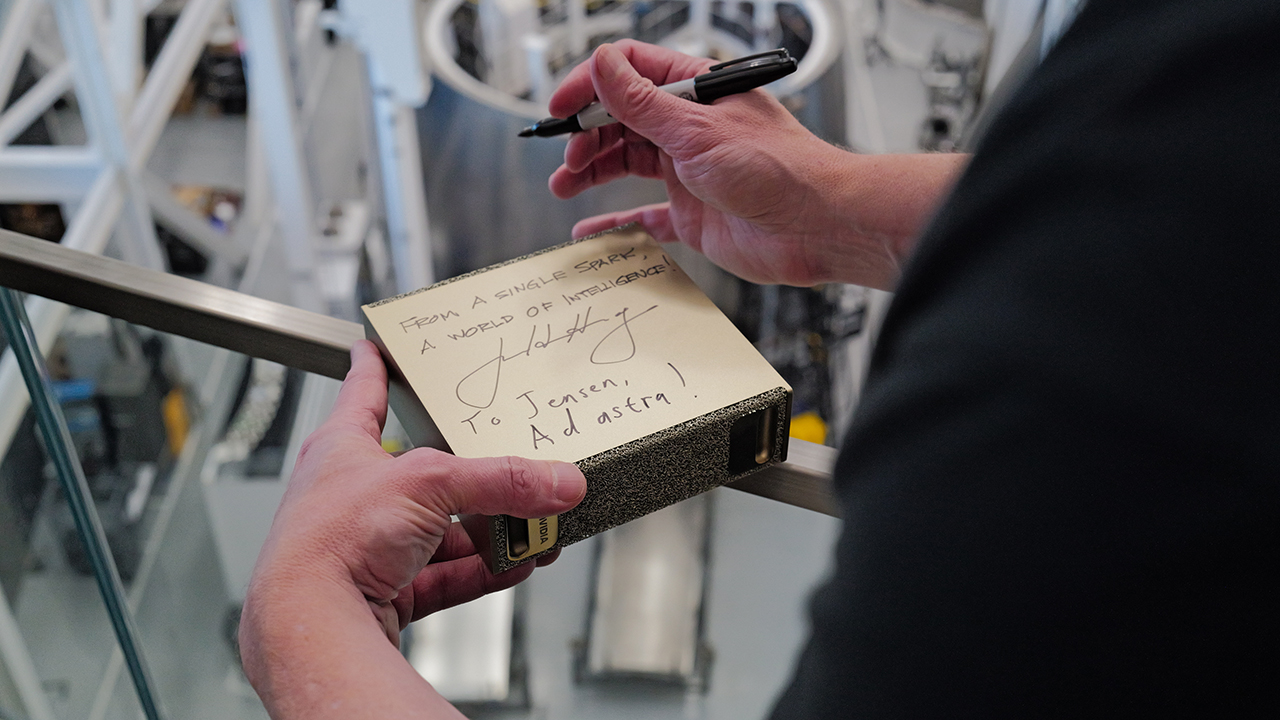

NVIDIA's DGX Spark, a compact supercomputer, delivers a petaflop of AI performance, empowering developers and creators beyond the data center. From SpaceX's Starbase to robotics labs, DGX Spark is putting supercomputer-class AI within reach for all.

Tech billionaire lectures on the Antichrist in San Francisco while man attacks Latter-day Saints in Michigan, sparking concerns. Matthew Avery Sutton warns of dangerous shift from pulpits to politics in American public life.