Tech billionaires from Google, Anthropic, and OpenAI to attend AI summit hosted by Narendra Modi in Delhi. Global south leaders to compete for control over AI technology at the week-long event, where tech companies worth trillions of dollars will be present.

Prof Michael Wooldridge warns that a deadly self-driving car update or an AI hack could devastate global trust in the technology. Commercial pressures drive a rush to bring new AI tools to market before their full capabilities and flaws are understood.

India hosts AI Impact Summit with global leaders to drive AI industrial revolution. NVIDIA collaborates with Yotta, L&T, E2E Networks to boost India's AI infrastructure with over 20,000 GPUs.

Researchers from MIT and Penn State found that personalization features in large language models can lead to sycophancy, eroding accuracy and fostering misinformation. Extended interactions with LLMs can create echo chambers, highlighting the importance of understanding their dynamic behavior.

Europe's digital sovereignty is at risk due to dependence on US tech. Sanctions affect even the international criminal court, highlighting the need for an EU-wide payments system.

KPMG partner fined A$10,000 for using AI to cheat in training course. Over two dozen staff caught in Australia since July.

Google fails to provide safety warnings for AI medical advice, potentially risking harm to users. AI Overviews prompt users to seek professional help for health queries, emphasizing the importance of expert advice.

Tech companies are misleading the public by equating traditional AI with energy-intensive generative AI when promoting climate solutions. Analysis reveals that the claims focus on machine learning, not the energy-hungry tools driving datacentre growth.

ByteDance's Seedance 2.0 AI video generator goes viral, featuring realistic clips of Tom Cruise and Brad Pitt. Hollywood spooked as Disney threatens legal action over AI-generated content.

Anthropic's Claude AI model used in US military operation with Palantir. The operation to kidnap Nicolás Maduro in Venezuela involved bombing and the killing of 83 people.

Regulation of cash-hungry Silicon Valley firms is urged before AI technology becomes too big to fail, as safety researchers warn of risky products and profit-driven decisions. The expanding role of AI in daily life and government requires accountability to prevent "enshittification" in pursuit of short-term revenue.

Keir Starmer to announce tough penalties for makers of AI chatbots endangering children, following Elon Musk's Grok scandal. UK government plans crackdown on AI-generated illegal content after X stops Grok from creating sexualized images.

NAACP accuses xAI of Clean Air Act violations, saying its methane gas generators pollute Black communities in Mississippi. Elon Musk's AI company faces lawsuit over toxic pollutants emitted from the datacenters that run its Grok chatbot.

AgentCore Browser introduces new capabilities: proxy configuration, browser profiles, and browser extensions. These features allow AI agents to maintain session state, route traffic through proxies, and customize browser behavior for enterprise environments. Persistent browser profiles ensure smooth operation for e-commerce testing and authenticated workflows, while proxy support provides IP st...

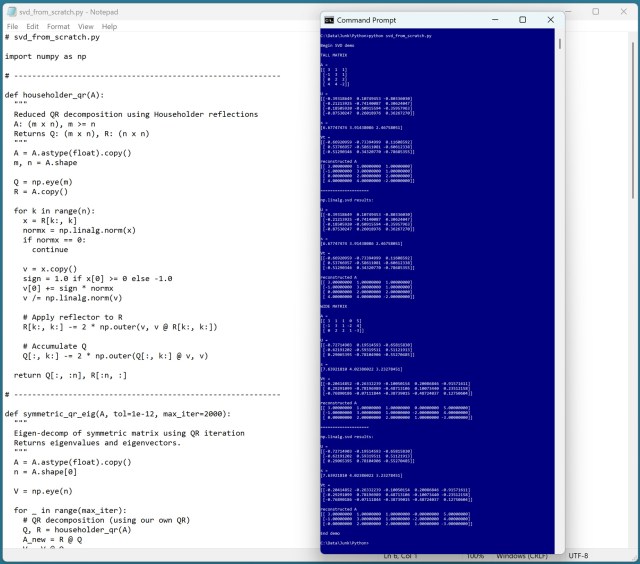

AI generates Python code for a traditionally complex SVD function in just hours, saving 1,000 hours of manual effort. Successive AI iterations rapidly improve the code, showcasing efficiency and accuracy.
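For readers unfamiliar with SVD (singular value decomposition), the sketch below illustrates only the core idea and is not the AI-generated code the item describes, which is not shown here. It assumes a small dense matrix stored as nested lists and uses power iteration on AᵀA to approximate the largest singular value and its singular vectors. The function name `top_singular_triplet` and its `iters` parameter are hypothetical and chosen for illustration; a full SVD routine has to recover every singular value and handle many numerical edge cases, which is part of why it is considered complex.

```python
import math


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def transpose(M):
    """Return the transpose of matrix M."""
    return [list(col) for col in zip(*M)]


def norm(v):
    """Euclidean length of vector v."""
    return math.sqrt(sum(x * x for x in v))


def top_singular_triplet(A, iters=500):
    """Approximate (u, sigma, v) for the largest singular value of A.

    Hypothetical helper: runs power iteration on A^T A. The fixed start
    vector keeps the result deterministic, but it would fail to converge
    if it happened to be orthogonal to the dominant singular vector.
    """
    At = transpose(A)
    n = len(A[0])
    v = [1.0 / math.sqrt(n)] * n  # unit-length start vector
    for _ in range(iters):
        w = matvec(At, matvec(A, v))  # one step of (A^T A) v
        length = norm(w)
        if length == 0.0:  # A maps v to zero; nothing to normalise
            break
        v = [x / length for x in w]
    Av = matvec(A, v)
    sigma = norm(Av)  # singular value is the length of A v
    u = [x / sigma for x in Av] if sigma > 0 else Av
    return u, sigma, v


if __name__ == "__main__":
    u, sigma, v = top_singular_triplet([[3.0, 0.0], [0.0, 4.0]])
    print(sigma)  # close to 4.0 for this diagonal matrix
```

In practice a tested library routine such as `numpy.linalg.svd` would be the usual choice; the pure-Python version is only meant to make the computation visible.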