Fashion enthusiast uses AI to transform chaotic closet into curated outfits with multi-step GPT setup, creating Pico Glitter. GPT-based fashion advisor helps manage wardrobe, providing cohesive outfit suggestions based on user's personal style rules and specific pieces owned.

US State Department uses AI to revoke visas of foreign students perceived as Hamas supporters. Trump's executive order targets pro-Palestinian protesters amid the ongoing Israel-Hamas conflict.

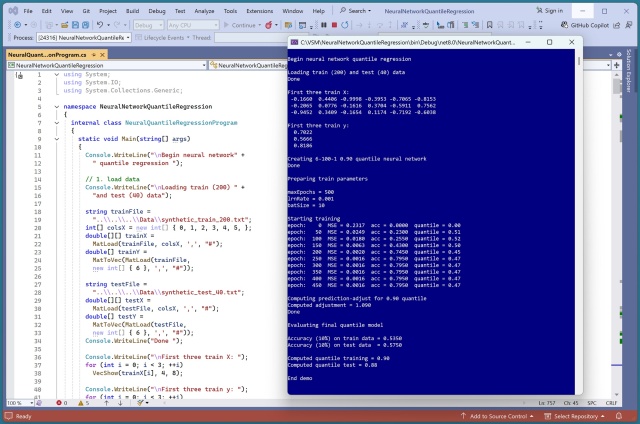

Implementing a neural network quantile regression system in PyTorch was challenging. Exploring C# for the same task proved even more difficult, with calibration issues.
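
For readers unfamiliar with quantile regression, the core idea can be sketched without any framework. The snippet below is a minimal illustration, not the article's PyTorch or C# code: it shows the pinball (quantile) loss that such systems typically minimize, plus a simple empirical coverage check of the kind used to spot calibration issues. The function names `pinball_loss` and `empirical_coverage` are hypothetical.

```python
def pinball_loss(y_true, y_pred, q):
    """Mean pinball (quantile) loss for target quantile q in (0, 1)."""
    if not 0.0 < q < 1.0:
        raise ValueError("q must be strictly between 0 and 1")
    total = 0.0
    for y, p in zip(y_true, y_pred):
        diff = y - p
        # Under-prediction (diff > 0) is weighted by q,
        # over-prediction (diff < 0) by (1 - q).
        total += max(q * diff, (q - 1.0) * diff)
    return total / len(y_true)


def empirical_coverage(y_true, y_pred):
    """Fraction of targets at or below the predicted quantile."""
    hits = sum(1 for y, p in zip(y_true, y_pred) if y <= p)
    return hits / len(y_true)
```

For a well-calibrated model predicting the 0.9 quantile, `empirical_coverage` on held-out data should land near 0.9; a large gap is one sign of the calibration trouble the summary mentions.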

AI brings Charles Darwin to life discussing evolution with students, transforms writing into images, and reimagines Luton as a car. Education Secretary Bridget Phillipson advocates for AI in schools, with Willowdown Primary School leading the way in embracing the digital revolution.

Tech companies rely on machine learning for critical applications, but undetected model drift can lead to financial losses. Effective model monitoring is crucial to identify issues early and ensure stable, reliable models in production.
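
One common way to catch drift early is to compare the distribution of a feature in production against a reference sample from training time. The sketch below uses the population stability index (PSI) as an example metric; the summary does not say which method the article uses, so the choice of PSI, the function name `population_stability_index`, the bin count, and the smoothing constant are all assumptions made for illustration.

```python
import math


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between a reference sample and a current sample of one feature."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to width 1.0
    # if the reference sample is constant.
    width = (hi - lo) / n_bins or 1.0

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = math.floor((v - lo) / width)
            # Values outside the reference range go to the edge bins.
            idx = min(max(idx, 0), n_bins - 1)
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))
```

A PSI of zero means the binned distributions match. Teams often alert above a rule-of-thumb threshold such as 0.2 or 0.25, though the right cutoff depends on the feature and the cost of a false alarm.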

NVIDIA researchers honored for groundbreaking contributions to film industry. Ziva VFX, Disney's ML Denoiser, and Intel Open Image Denoise revolutionize visual effects.

BBC News plans to use AI for personalized content, targeting under-25s on platforms like TikTok. Chief executive Deborah Turness emphasizes the need to adapt to changing news consumption habits.

GeForce NOW members can now hunt monsters in Monster Hunter Wilds and dive into Split Fiction's mind-bending adventures. Enjoy high-performance gaming in the cloud with NVIDIA technologies.

Generative AI is reshaping retail by enhancing customer service, streamlining product management, and driving personalized marketing. Adaptable interactions and automation let businesses offer tailored experiences and hand off complex tasks, improving efficiency and customer satisfaction.

Science photographer Felice Frankel discusses the impact of generative artificial intelligence on research communication in a recent opinion piece for Nature magazine. She emphasizes the importance of ethical visual representation and the need for researchers to be trained in visual communication to ensure accuracy and transparency.

The UK's CMA won't investigate Microsoft's partnership with ChatGPT maker OpenAI, citing a lack of control despite a $13bn investment. Microsoft's material influence over OpenAI falls short of triggering a formal inquiry by the competition watchdog.

US judge denies Musk's request to pause OpenAI's transition to for-profit model, fast-tracks dispute for fall trial. Judge states Musk does not meet high burden for preliminary injunction.

Creator of viral “Trump Gaza” video reacts to Trump sharing it on Truth Social. The video, made as political satire, depicts Gaza as a Dubai-style paradise and shows Trump with Netanyahu and Musk in a beachside resort town.

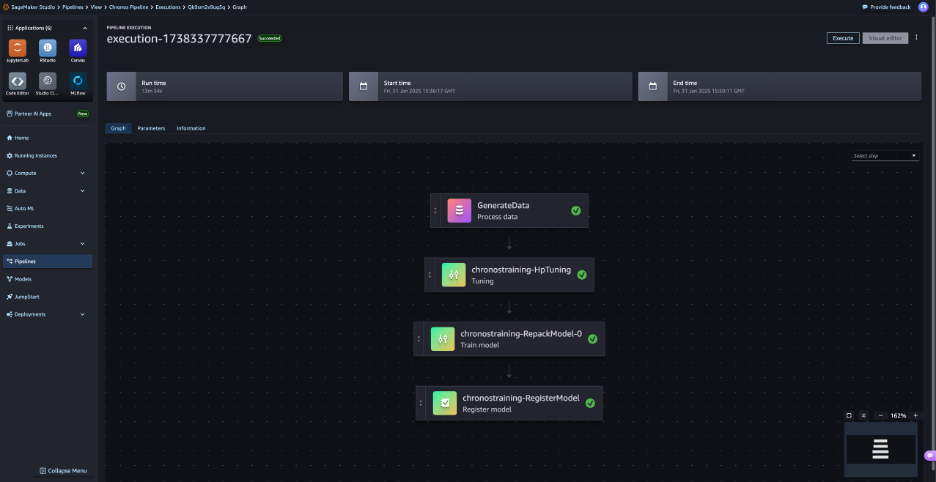

Chronos, a revolutionary time series model, utilizes large language model architectures to excel at zero-shot forecasts, outperforming task-specific models. By treating time series data as a language, Chronos streamlines development processes and offers accurate predictions with minimal data.
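
To make "treating time series data as a language" concrete, here is a rough sketch of how real-valued observations can be turned into discrete tokens: scale the series by its mean absolute value, then quantize into uniform bins. This is a simplified illustration and not Chronos's actual code; the function names, the bin count, and the `[-5, 5]` range are assumptions chosen for readability.

```python
import math


def tokenize_series(values, n_bins=10, low=-5.0, high=5.0):
    """Mean-scale a series and map each value to a uniform bin index."""
    # Scale by mean absolute value; fall back to 1.0 for an all-zero series.
    scale = sum(abs(v) for v in values) / len(values) or 1.0
    width = (high - low) / n_bins
    tokens = []
    for v in values:
        idx = math.floor((v / scale - low) / width)
        # Clamp values outside [low, high) to the edge bins.
        tokens.append(min(max(idx, 0), n_bins - 1))
    return tokens, scale


def detokenize(tokens, scale, n_bins=10, low=-5.0, high=5.0):
    """Map token ids back to approximate values using bin centers."""
    width = (high - low) / n_bins
    return [(low + (t + 0.5) * width) * scale for t in tokens]
```

Once a series is a sequence of integer ids, a language-model architecture can be trained to predict the next id, and sampled ids are mapped back to numbers with `detokenize`. The round trip is lossy: values in the same bin come back as the same bin center.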

More than half of UK executives lack an official AI plan, leading to productivity gaps between AI users and non-users. Microsoft survey highlights companies "stuck in neutral" on AI strategy.