Google DeepMind's AlphaGenome can analyze 1 million DNA letters, helping to identify the genetic drivers of disease and point the way to new treatments. The AI tool predicts how mutations affect gene control, offering insight into the biological "volume settings" that turn gene activity up or down.

UK technology secretary warns of job losses from AI deployment and plans to train 10 million Britons in AI skills to help the workforce adapt. Liz Kendall acknowledges concerns about potential redundancies in graduate entry-level jobs in industries such as law and finance.

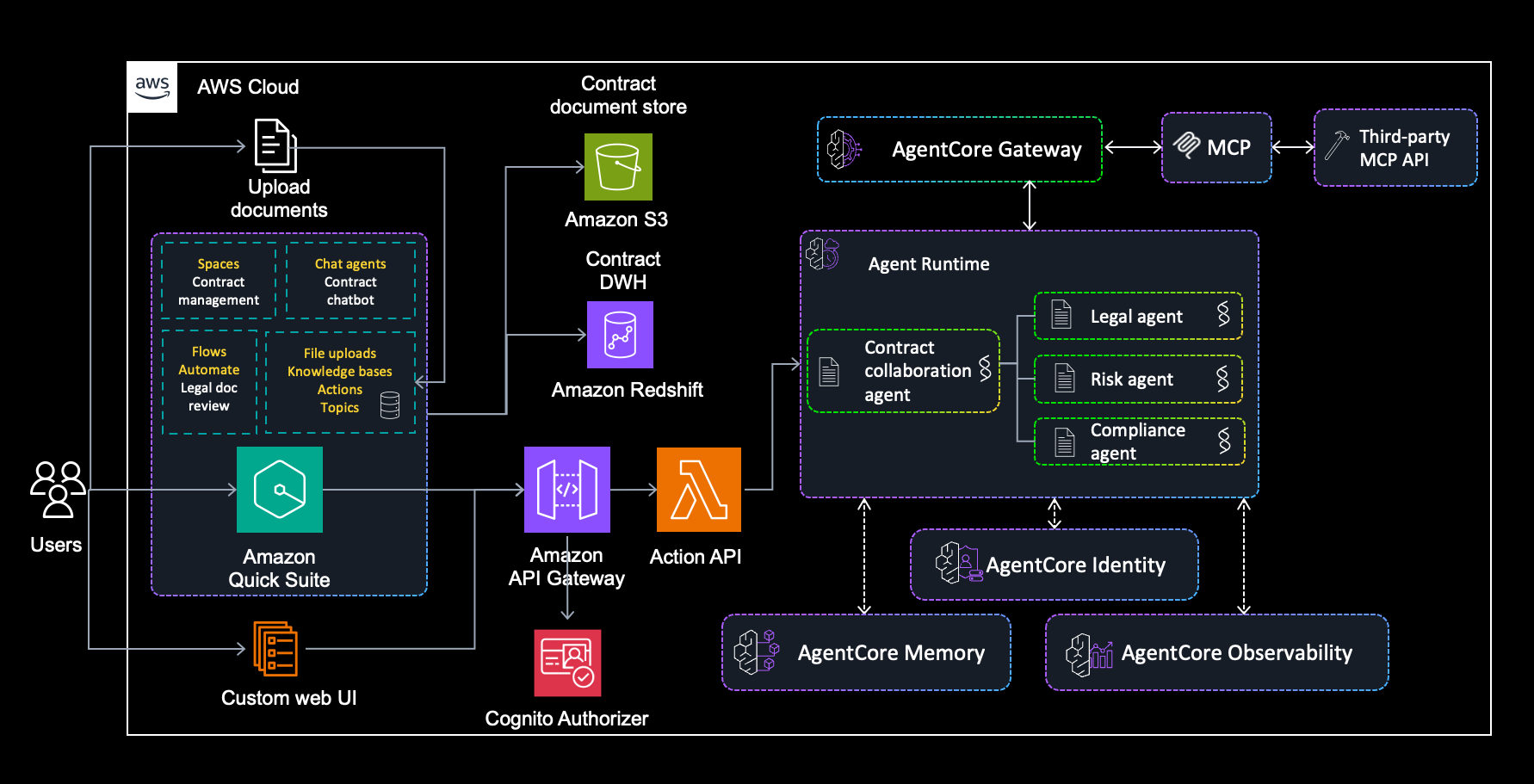

Amazon Quick Suite, augmented with Amazon Bedrock AgentCore, streamlines contract management with multi-agent collaboration for efficient analysis, risk assessment, compliance evaluation, and structured insights. The solution integrates specialized agents, such as legal, risk, and compliance agents, to automate contract processing and reduce review times from days to minutes, revolutionizing co...

The AI Doc explores AI's potential for catastrophe or opportunity with top experts like Sam Altman. Director Daniel Roher's personal anxiety drives a deep dive into AI's impact on filmmaking and society.

Ministers accept $1m from Meta for AI systems, raising concerns about UK's ties with 'Trump-supporting' tech firms. Mark Zuckerberg's company funds cutting-edge AI solutions for national security and defence.

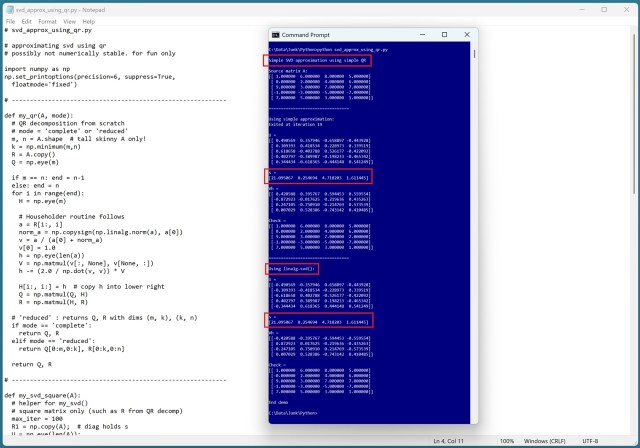

A simple yet slow SVD approximation technique using QR decomposition in Python. It breaks the complex SVD computation down into manageable sub-problems.
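One way such a scheme can work is sketched below. This is an illustration under stated assumptions, not necessarily the method the linked article uses: it repeatedly applies `numpy.linalg.qr` to a square matrix and to the transpose of the resulting triangular factor, accumulating the orthogonal factors until the remaining matrix is close to diagonal. The function name `svd_via_qr` and the fixed iteration count are hypothetical choices made for this example.

```python
import numpy as np

def svd_via_qr(A, iterations=200):
    """Approximate the SVD of a square matrix by alternating QR steps.

    Illustrative sketch only; far slower than np.linalg.svd.
    Returns U, s, Vt such that A is approximately U @ diag(s) @ Vt.
    """
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    U, V, S = np.eye(n), np.eye(n), A.copy()
    for _ in range(iterations):
        Q, R = np.linalg.qr(S)       # S = Q R
        U = U @ Q                    # accumulate left orthogonal factor
        Q2, R2 = np.linalg.qr(R.T)   # R^T = Q2 R2, so R = R2^T Q2^T
        V = V @ Q2                   # accumulate right orthogonal factor
        S = R2.T                     # invariant: A = U S V^T
    d = np.diag(S)
    signs = np.where(d < 0, -1.0, 1.0)
    # Move any negative signs into U so the singular values are non-negative.
    return U * signs, np.abs(d), V.T

# Build a test matrix with known singular values 5, 3, 1.
rng = np.random.default_rng(0)
U0, _ = np.linalg.qr(rng.standard_normal((3, 3)))
V0, _ = np.linalg.qr(rng.standard_normal((3, 3)))
A = U0 @ np.diag([5.0, 3.0, 1.0]) @ V0.T

U, s, Vt = svd_via_qr(A)
```

Convergence depends on how well separated the singular values are, which is one reason an approach like this is slow compared with library routines.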

Just Eat introduces AI voice assistant in UK app to simplify food choices, with global expansion planned. Customers can now receive personalized meal recommendations without having to browse menus, tackling 'choice overload'.

Tech leaders discuss AI's potential at Davos while AI startups face drama. Tesla's success in Texas is attributed to the state's lax autonomous driving rules.

Dario Amodei warns of AI power in 'The adolescence of technology', urging awareness of risks and societal impact. Britons fear job loss to AI in next 5 years, as Anthropic CEO questions humanity's readiness for AI advancement.

Over 70% of UK teens fear starting their careers in the current economic climate, and 1 in 4 young adults feel they may fail in life, citing AI and lack of work experience as key concerns, according to a King's Trust survey.

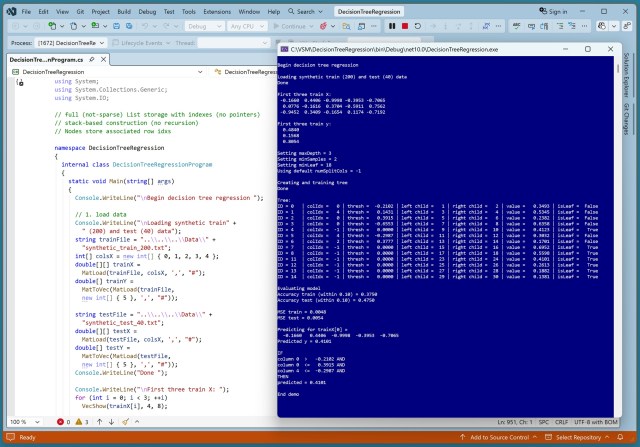

Decision tree regression predicts values using if-then rules in C#. A neural network generated the synthetic data for training and testing.
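The if-then idea can be illustrated with a minimal sketch. The linked article uses C#; the version below is in Python and is not the article's implementation. It assumes a simple greedy tree that picks the split minimizing the summed squared error and predicts the mean target at each leaf. The names `Node`, `build_tree`, and `predict`, and the tiny dataset, are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    value: float                    # mean target of training rows reaching this node
    feature: Optional[int] = None   # column used for the split; None marks a leaf
    threshold: float = 0.0
    left: Optional["Node"] = None
    right: Optional["Node"] = None

def _sse(ys):
    """Sum of squared errors around the mean (0 for an empty list)."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((v - mean) ** 2 for v in ys)

def build_tree(X, y, max_depth=3, min_samples=2):
    node = Node(value=sum(y) / len(y))
    if max_depth == 0 or len(y) < min_samples:
        return node
    best = None  # (error, feature index, threshold)
    for f in range(len(X[0])):
        values = sorted(set(row[f] for row in X))
        # Candidate thresholds are midpoints between adjacent distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            err = _sse(left) + _sse(right)
            if best is None or err < best[0]:
                best = (err, f, t)
    if best is None or best[0] >= _sse(y):
        return node  # no split reduces the error, so keep this node as a leaf
    _, f, t = best
    li = [i for i, row in enumerate(X) if row[f] <= t]
    ri = [i for i, row in enumerate(X) if row[f] > t]
    node.feature, node.threshold = f, t
    node.left = build_tree([X[i] for i in li], [y[i] for i in li], max_depth - 1, min_samples)
    node.right = build_tree([X[i] for i in ri], [y[i] for i in ri], max_depth - 1, min_samples)
    return node

def predict(node, row):
    # Each step is one rule: "if row[feature] <= threshold, go left, else go right".
    while node.feature is not None:
        node = node.left if row[node.feature] <= node.threshold else node.right
    return node.value

X = [[1.0], [2.0], [3.0], [10.0], [11.0], [12.0]]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
tree = build_tree(X, y)
```

On this toy data the tree learns a single rule, splitting at 6.5, so any input at or below that threshold maps to the mean of the low group and anything above it to the mean of the high group.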

NHS England to trial AI and robot-assisted care for faster lung cancer detection. All smokers and ex-smokers to be offered screening by 2030.

Georgia lawmakers propose first statewide moratorium on new datacenters in US. Maryland and Oklahoma also exploring bans amidst environmental concerns.

British AI startup Synthesia, known for realistic video avatars, sees valuation soar to $4bn. 70% of FTSE 100 companies are clients.

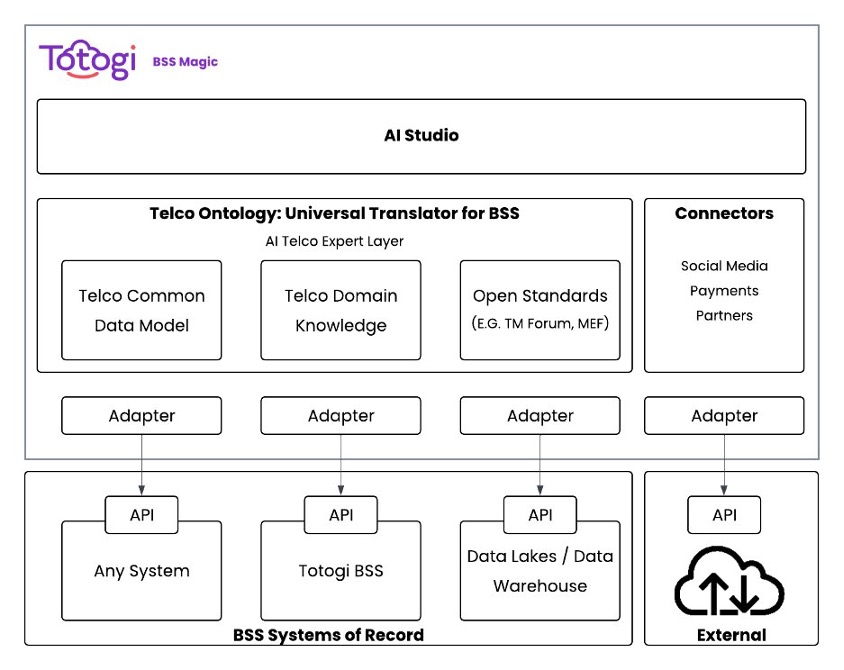

Totogi's BSS Magic streamlines telco change request processing with AI, cutting processing time from 7 days to hours. By leveraging AI and a multi-agent framework, Totogi helps telcos modernize systems without vendor dependencies or lengthy implementations.