Iron Mountain, which archives media-industry assets in its vaults, has found that 20% of hard drives from the 1990s are unreadable. The music industry faces especially complex challenges in preserving work stored on spinning disks, prompting a call to action from Iron Mountain's global director.

The AI image generator Flux can recreate a person's handwriting, raising ethical questions while also creating emotional connections. Some see it as a unique way to preserve personal memories and celebrate loved ones.

OpenAI introduces its "Strawberry" AI models, which tackle complex problems by breaking them down into logical steps. This step-by-step reasoning lets them outperform other AI models in science, coding, and math, making them a promising tool for challenging problems.

Researchers have found that chatting with an AI can change believers' minds about conspiracy theories, challenging the conventional wisdom that such beliefs are nearly impossible to dislodge. The results offer hope for correcting mistaken beliefs and combating dangerous misinformation.

NVIDIA Holoscan for Media simplifies integrating AI into live media, making it easier for developers to build cutting-edge applications. The platform addresses deployment and infrastructure challenges, giving media companies seamless connectivity and advanced AI capabilities.

Amazon Bedrock Agents lets developers build generative AI applications that interact with users in natural language. Agents combine large language models with other tools to handle complex queries, changing the way users interact with AI.
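To make the "LLM plus tools" idea concrete, here is a toy, plain-Python sketch of the orchestration pattern: a planner breaks a query into tool calls, the tools run, and their results are combined into an answer. It does not use the Amazon Bedrock API. `stub_llm_plan` is a hypothetical keyword-based stand-in for the model, and both tools are invented for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; a real agent would wrap APIs, databases, or knowledge bases.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id} has shipped."

def get_return_policy(_: str) -> str:
    return "Items can be returned within 30 days."

TOOLS: Dict[str, Callable[[str], str]] = {
    "order_status": get_order_status,
    "return_policy": get_return_policy,
}

def stub_llm_plan(query: str) -> List[Tuple[str, str]]:
    """Hypothetical stand-in for the LLM: split the query into (tool, argument) steps.
    A real agent would prompt a model to produce this plan."""
    steps = []
    if "order" in query and "#" in query:
        order_id = query.split("#")[1].split()[0]
        steps.append(("order_status", order_id))
    if "return" in query:
        steps.append(("return_policy", ""))
    return steps

def run_agent(query: str) -> str:
    # Execute each planned tool call in order and collect the observations.
    observations = [TOOLS[tool](arg) for tool, arg in stub_llm_plan(query)]
    # A real agent would hand these observations back to the LLM to compose the reply.
    return " ".join(observations) if observations else "Sorry, I can't help with that."

answer = run_agent("Where is order #123 and can I return it?")
print(answer)
```

The single compound question is answered by two separate tool calls, which is the kind of multi-step query an agent is meant to handle; in a real system the planning and answer-writing steps are done by the language model rather than by keyword matching.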

NaNoWriMo, the international writing organization, angered its community by appearing to support the use of AI, prompting resignations. The controversy highlights the wider debate over AI in creative writing, including arguments about classism and ableism.

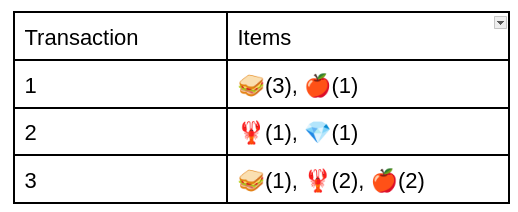

Market Basket Analysis focuses on item co-occurrence in transactions to guide optimization strategies in retail and marketing, but there is an alternative for finding high-value patterns. The standard approach, Frequent Itemset Mining, finds patterns by calculating support, but it does not consider item quantity or relevance.
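The support of an itemset is the fraction of transactions that contain every item in it. The sketch below assumes transactions arrive as item-to-quantity dictionaries and enumerates itemsets by brute force (a practical miner would use Apriori or FP-Growth to prune the search). The function names and sample data are hypothetical. Note how quantities are discarded when computing support, which is the limitation described above.

```python
from collections import Counter
from itertools import combinations

def itemset_support(transactions, max_size=2):
    """Support of every itemset up to max_size: the fraction of transactions containing it."""
    counts = Counter()
    for basket in transactions:
        # Only item presence matters; the quantities (dict values) are ignored.
        items = sorted(basket)
        for k in range(1, max_size + 1):
            for itemset in combinations(items, k):
                counts[itemset] += 1
    n = len(transactions)
    return {itemset: c / n for itemset, c in counts.items()}

def frequent_itemsets(transactions, min_support, max_size=2):
    """Keep only the itemsets whose support meets the min_support threshold."""
    support = itemset_support(transactions, max_size)
    return {s: v for s, v in support.items() if v >= min_support}

# Hypothetical transactions: item -> quantity purchased.
transactions = [
    {"bread": 1, "milk": 2},
    {"bread": 1, "butter": 1},
    {"bread": 2, "milk": 1, "butter": 1},
    {"milk": 1},
]

support = itemset_support(transactions)
frequent = frequent_itemsets(transactions, min_support=0.5)
print(frequent)
```

A basket with two loaves of bread counts exactly the same toward support as a basket with one, so surfacing high-value patterns would require a measure that also weighs quantity or value.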

In his latest book, Yuval Noah Harari challenges conventional wisdom about AI's impact and warns of apocalyptic scenarios. He argues that the free flow of information does not always lead to truth or political wisdom.

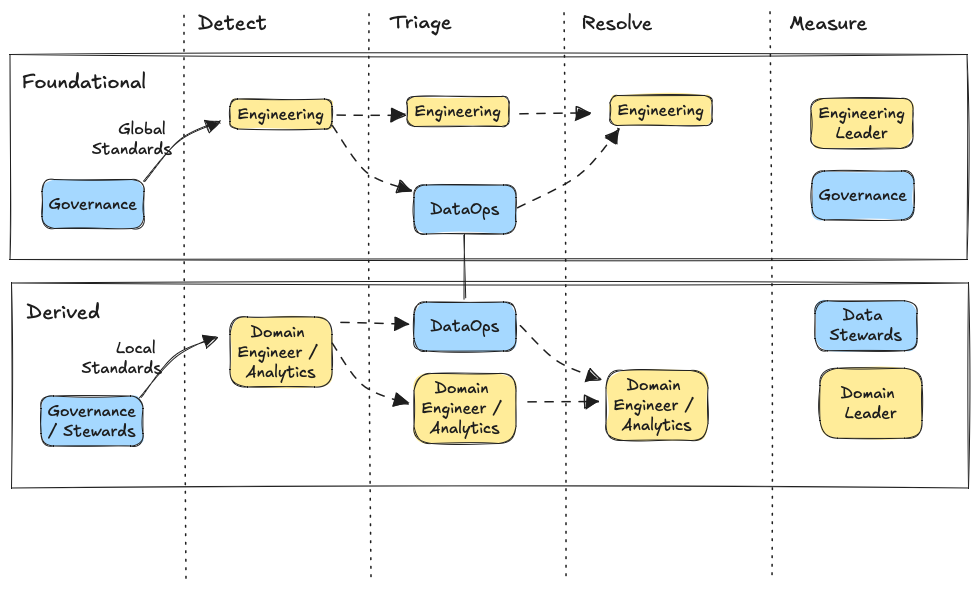

Enterprise data professionals often ask "who does what" in a data quality program. The work can be framed as a relay race through four stages: detection, triage, resolution, and measurement. For modern data teams in larger organizations, aligning ownership around valuable data products, both foundational and derived, is key to data quality success.

Taylor Swift endorses Kamala Harris for president, citing concerns about AI deepfakes. Her misinformation fears were sparked by AI-generated images, shared on Truth Social, that falsely showed her endorsing Donald Trump.

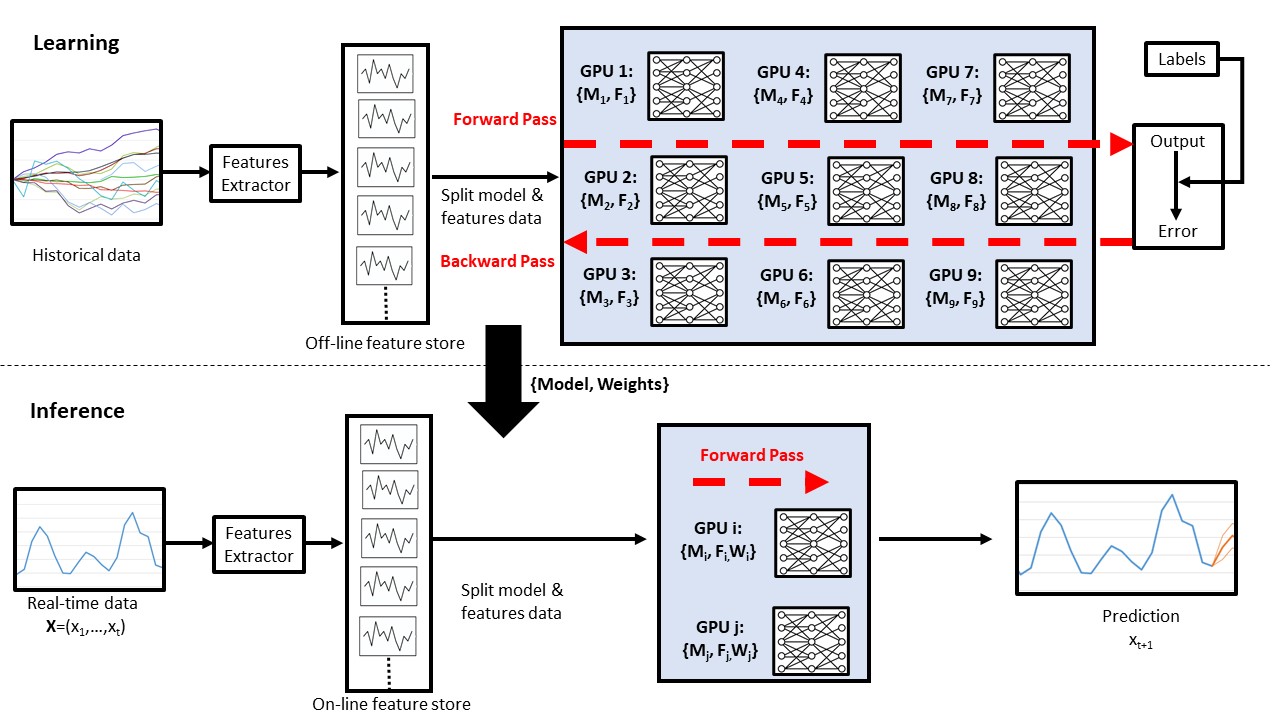

Financial services leaders are using data and accelerated computing to gain a competitive edge in areas such as quantitative research and real-time trading. Purpose-built accelerators such as GPUs are crucial for workloads ranging from basic data processing to advanced AI, enabling faster calculations and better customer experiences.

TechOps (technology operations) covers managing IT infrastructure and services. AWS generative AI solutions can boost productivity, speed up issue resolution, and improve the customer experience. In TechOps, generative AI helps with event management, incident documentation, and identifying recurring problems.

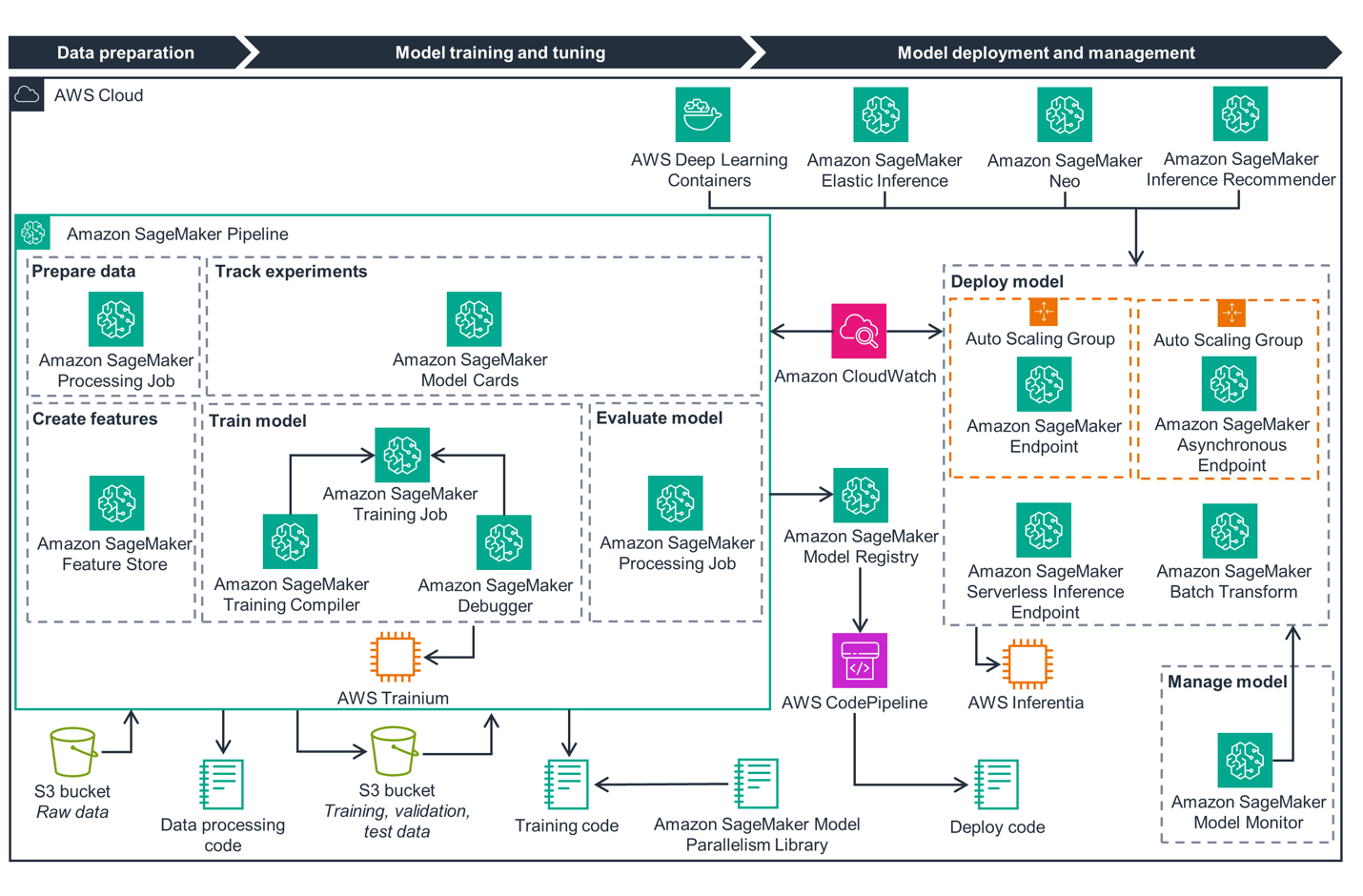

MLOps automates machine learning workflows, and AWS offers guidance on making ML workloads more sustainable by reducing both their cost and their carbon footprint. Key stages include data preparation, model training and tuning, and deployment management. Recommendations include optimizing data storage, using serverless architectures, and choosing the right storage type to reduce energy consumption and carbon impact.

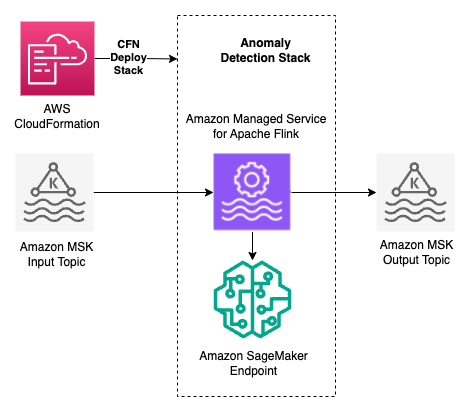

Time series data records values over time, and its patterns (such as trends and seasonality) can shift as new points arrive. Amazon Managed Service for Apache Flink can be used to run real-time anomaly detection on streaming data.
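One simple way to flag anomalies in a stream is a rolling z-score: keep a sliding window of recent values and flag any point that lies more than a threshold number of standard deviations from the window's mean. This is a minimal plain-Python sketch of that technique, not the method used by Amazon Managed Service for Apache Flink and not a Flink API. The class name, window size, threshold, and sample stream are illustrative assumptions. In an actual Flink job, per-key logic like this would typically be kept in keyed state.

```python
import math
from collections import deque

class RollingZScoreDetector:
    """Flags a value as anomalous if it is more than `threshold` standard
    deviations from the mean of the previous `window` values."""

    def __init__(self, window=20, threshold=3.0):
        self.values = deque(maxlen=window)
        self.threshold = threshold

    def update(self, x):
        is_anomaly = False
        # Only score once the window is full, so every point has enough history.
        if len(self.values) == self.values.maxlen:
            mean = sum(self.values) / len(self.values)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.values) / len(self.values))
            if std == 0:
                # A perfectly flat window: any change at all is unusual.
                is_anomaly = x != mean
            else:
                is_anomaly = abs(x - mean) / std > self.threshold
        self.values.append(x)  # deque drops the oldest value automatically
        return is_anomaly

# Hypothetical stream of sensor readings with one spike.
stream = [10, 11, 9, 10, 12, 10, 11, 9, 10, 11, 50, 10]
detector = RollingZScoreDetector(window=5, threshold=3.0)
anomalies = [i for i, x in enumerate(stream) if detector.update(x)]
print(anomalies)  # [10]
```

Because flagged points still enter the window, a large spike temporarily inflates the standard deviation and can mask anomalies that follow it closely; excluding flagged values from the window is one common variation.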