The Merlin Bird ID app identifies 1,300 bird species worldwide, delighting users like Natasha Walter in London. AI and machine learning make bird identification easy and enjoyable.

AI challenges human decision-making in everyday scenarios like navigation, raising the question of trust in machines vs. humans. Joseph de Weck explores this dilemma in the era of technology dominance.

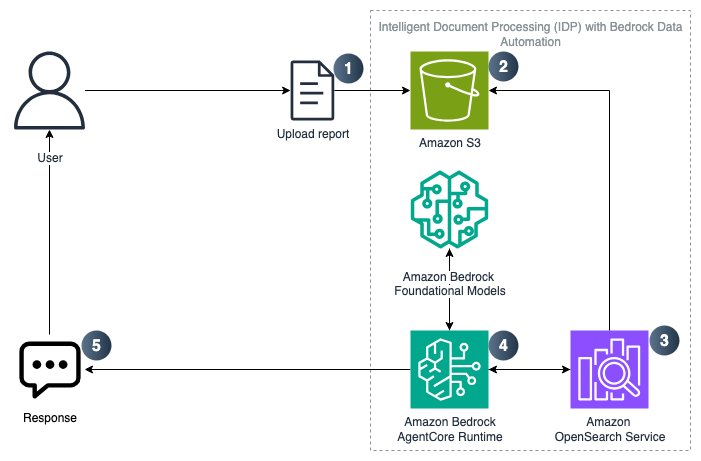

Intelligent Document Processing (IDP) streamlines data extraction from unstructured documents using the Strands SDK and Amazon Bedrock AgentCore. This solution leverages Amazon Bedrock Data Automation (BDA) for automated workflows, making IDP and retrieval-augmented generation (RAG) efficient and cost-effective.

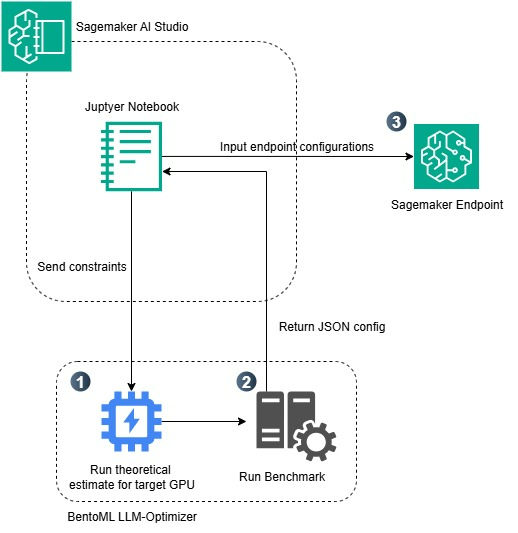

The rise of large language models makes AI integration easy, but self-hosting offers data sovereignty and model customization benefits. Amazon SageMaker AI simplifies infrastructure management for optimal performance with managed containers.

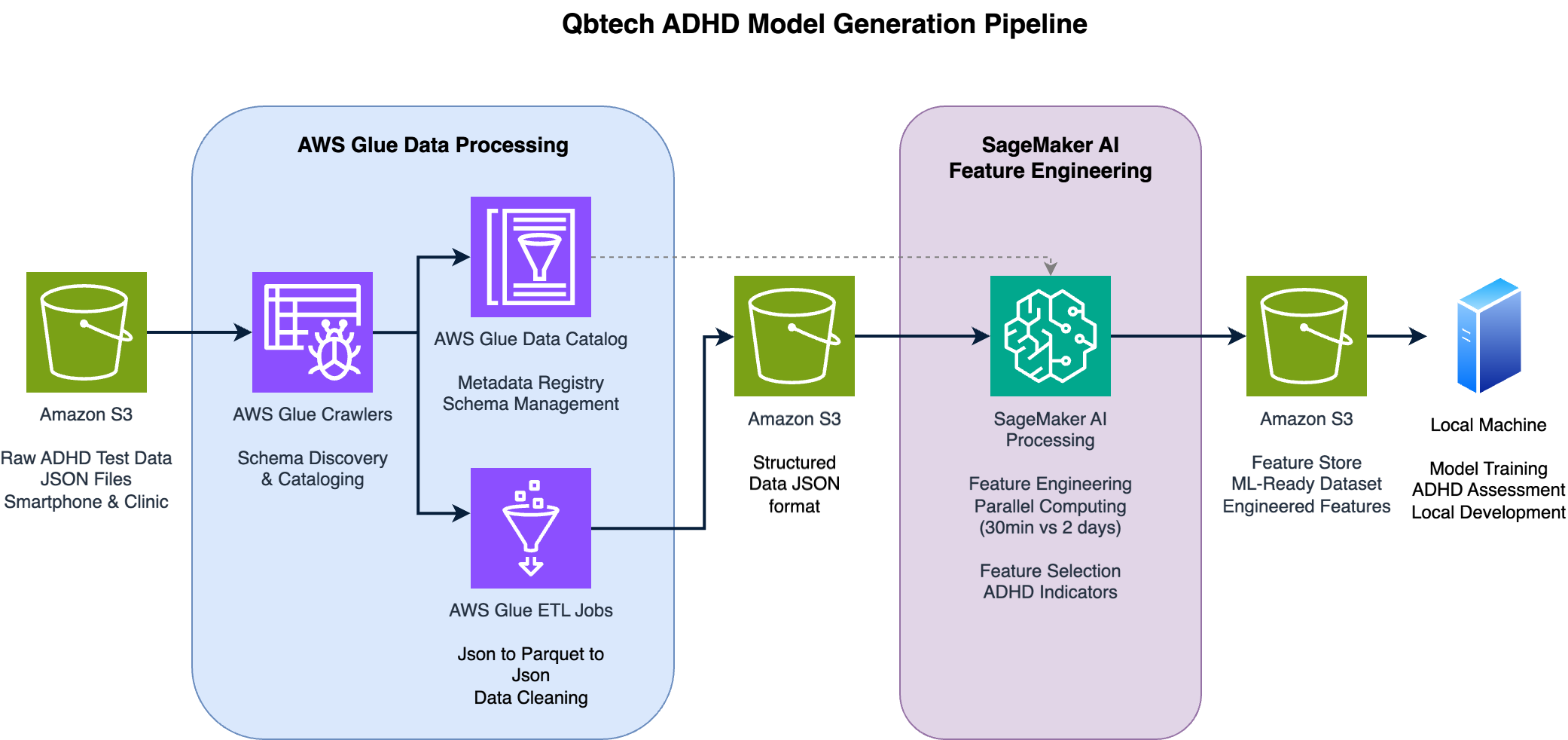

Qbtech enhances ADHD diagnosis with objective measurements and AI, launching QbMobile for smartphone-native assessments using AWS. The company streamlined its ML workflow with Amazon SageMaker AI and AWS Glue to democratize access to objective ADHD assessment.

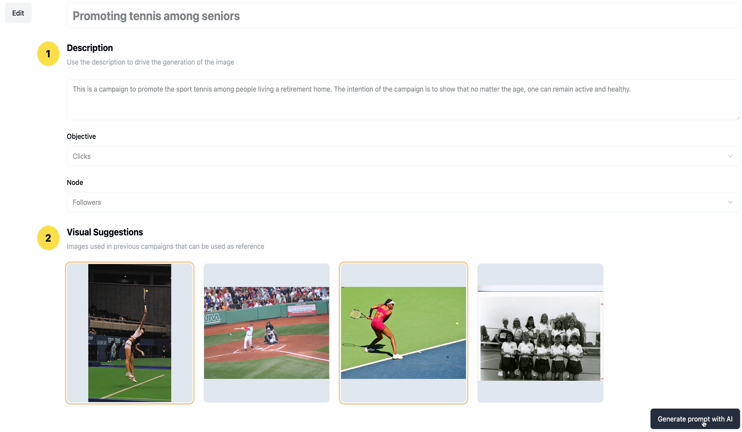

Marketing teams struggle with time constraints, high costs, and the need for creativity. Amazon Nova's generative AI offers a solution for rapid, efficient campaign creation. Bancolombia is using Amazon Nova to streamline its marketing visuals, highlighting the benefits of AI in modern campaigns.

Tech-bro vanity fuels AI hype that is distorting the global economy. ChatGPT's explosive growth prompts a $1.5tn investment in the future of US AI.

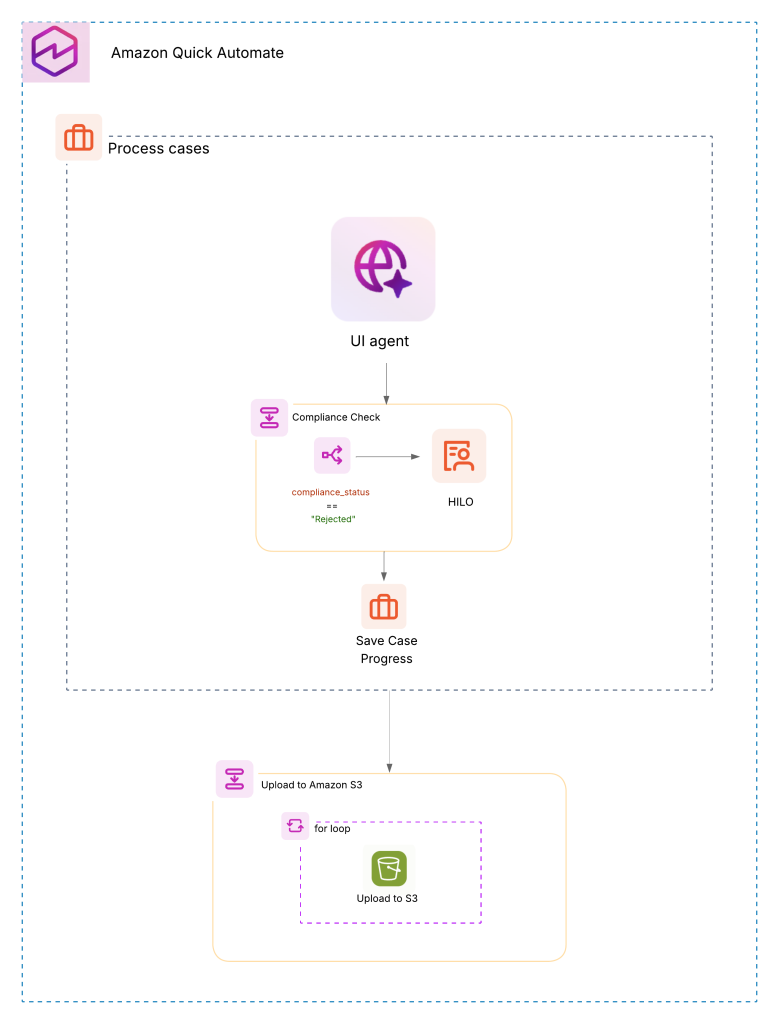

dLocal, Uruguay's first unicorn, revolutionized cross-border payments with its innovative compliance processes. By partnering with AWS and implementing Amazon Quick Automate, dLocal automated its merchant website review process, ensuring efficient and consistent policy enforcement at scale.

A recent study by NASA and SpaceX reveals new insights into the effects of microgravity on human health. Findings suggest potential advancements in space travel and long-term space missions.

Elon Musk's tumultuous year ran from political rise to controversial actions, while AI dominated tech and the economy in 2025. Musk's Doge, SpaceX, and Tesla were in the spotlight amid chaos and controversy.

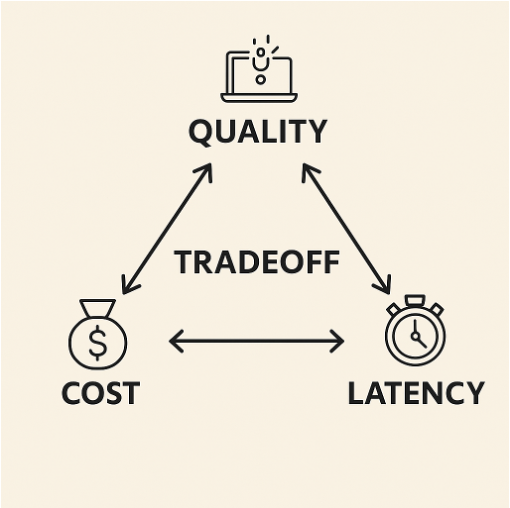

Organizations are seeking efficient AI prompting methods to balance quality, cost, and latency. Chain-of-Draft (CoD) offers a more efficient alternative to traditional prompting, reducing token usage by up to 75% and latency by over 78% while maintaining accuracy levels.
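As a rough illustration of how Chain-of-Draft differs from conventional step-by-step prompting, the sketch below builds the two kinds of prompt and parses a final answer. The instruction wording, the `####` separator, the helper names `build_messages` and `extract_answer`, and both sample responses are assumptions made for illustration: the responses are hand-written, not model output, and no model API is called.

```python
# Minimal sketch of Chain-of-Draft (CoD) versus chain-of-thought (CoT) prompting.
# All names and prompt wording here are illustrative assumptions.

SEPARATOR = "####"

COT_INSTRUCTION = (
    "Think step by step to answer the question. "
    f"Return the answer at the end of the response after the separator {SEPARATOR}."
)

# CoD asks for the same reasoning, but as terse drafts instead of full sentences.
COD_INSTRUCTION = (
    "Think step by step, but only keep a minimum draft for each thinking step, "
    "with 5 words at most. "
    f"Return the answer at the end of the response after the separator {SEPARATOR}."
)


def build_messages(question: str, style: str = "cod") -> list[dict]:
    """Build a chat-style message list for the chosen prompting style."""
    instructions = {"cot": COT_INSTRUCTION, "cod": COD_INSTRUCTION}
    if style not in instructions:
        raise ValueError(f"unknown style: {style!r}")
    return [
        {"role": "system", "content": instructions[style]},
        {"role": "user", "content": question},
    ]


def extract_answer(response: str) -> str:
    """Return the text after the last separator, or the whole response if absent."""
    _, sep, tail = response.rpartition(SEPARATOR)
    return tail.strip() if sep else response.strip()


question = (
    "Jason had 20 lollipops. He gave Denny some. "
    "Now Jason has 12. How many did he give to Denny?"
)
messages = build_messages(question, style="cod")

# Hand-written sample responses (NOT model output) to show the length difference.
cot_response = (
    "Jason started with 20 lollipops. After giving some to Denny he has 12 left. "
    "The number given away is the difference between the two amounts, "
    "so 20 minus 12 equals 8. #### 8"
)
cod_response = "20 - x = 12; x = 20 - 12 = 8. #### 8"

cot_words = len(cot_response.split())
cod_words = len(cod_response.split())
print(extract_answer(cod_response), cot_words, cod_words)
```

Word counts are only a crude proxy for tokens. Any real savings depend on the model and task, so they would need to be measured against the provider's reported token usage.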

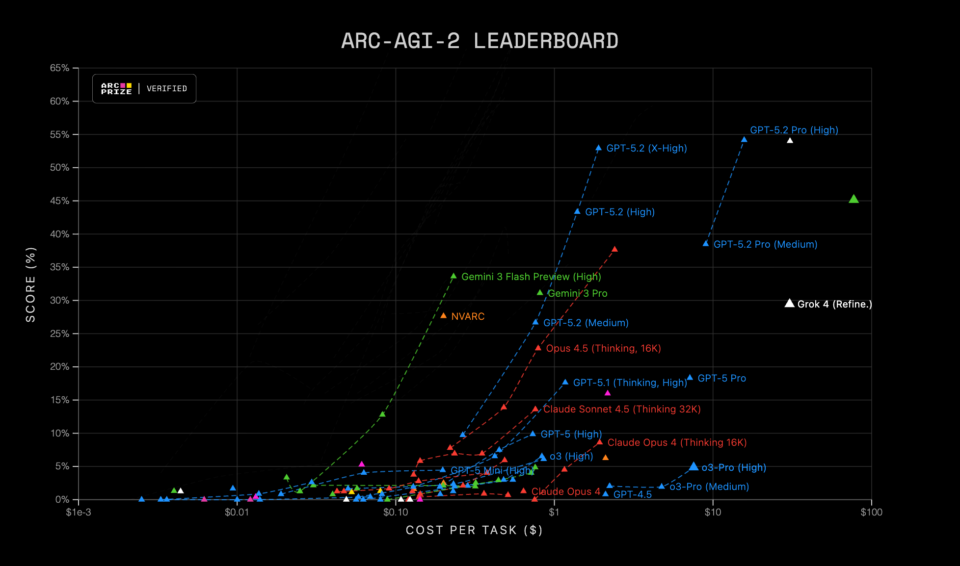

OpenAI launches GPT-5.2, achieving top scores on industry benchmarks with NVIDIA infrastructure. NVIDIA's advanced systems drive down costs and accelerate AI model development, leading to groundbreaking performance gains.

MIT's 2025 scientific breakthroughs in quantum, AI, and healthcare make headlines globally. MIT alumni have founded companies that generate $1.9 trillion annually, impacting millions of jobs.

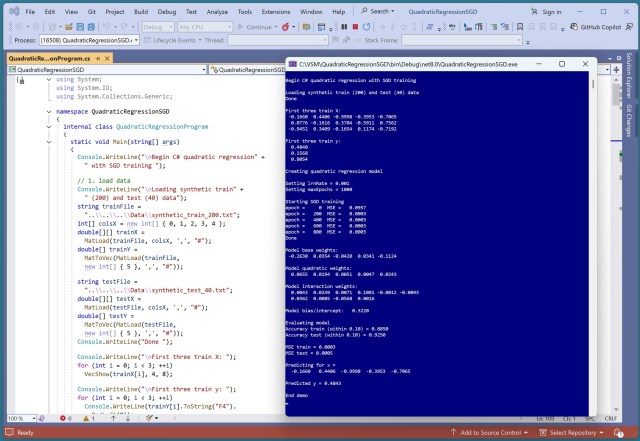

Linear regression is simple but struggles with non-linear data; quadratic regression extends it with squared terms to capture curved relationships. A C# demo showcases the accuracy of quadratic regression, using synthetic data generated by a neural network.
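To make the idea concrete, here is a minimal Python sketch of quadratic regression. It is not the C# demo: it assumes NumPy, fits by closed-form least squares rather than whatever training method the demo uses, and draws its synthetic data from a known quadratic function rather than a neural network. The helper names and the `true_coef` values are hypothetical.

```python
import itertools

import numpy as np


def quadratic_features(X: np.ndarray) -> np.ndarray:
    """Expand each row into [1, x_i, x_i^2, x_i * x_j for i < j]."""
    n, d = X.shape
    cols = [np.ones(n)]
    cols += [X[:, i] for i in range(d)]
    cols += [X[:, i] ** 2 for i in range(d)]
    cols += [X[:, i] * X[:, j] for i, j in itertools.combinations(range(d), 2)]
    return np.column_stack(cols)


def fit_quadratic(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Least-squares fit of a linear model on the expanded quadratic features."""
    coef, *_ = np.linalg.lstsq(quadratic_features(X), y, rcond=None)
    return coef


def predict_quadratic(coef: np.ndarray, X: np.ndarray) -> np.ndarray:
    return quadratic_features(X) @ coef


# Synthetic data from a known quadratic function plus a little noise (assumed setup).
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
# Order: bias, x0, x1, x0^2, x1^2, x0*x1
true_coef = np.array([0.5, 1.0, -2.0, 3.0, 0.0, 1.5])
y = quadratic_features(X) @ true_coef + rng.normal(0.0, 0.01, size=200)

coef = fit_quadratic(X, y)
quad_mse = float(np.mean((predict_quadratic(coef, X) - y) ** 2))

# Baseline: plain linear regression on the raw inputs for comparison.
A = np.column_stack([np.ones(len(X)), X])
lin_coef, *_ = np.linalg.lstsq(A, y, rcond=None)
lin_mse = float(np.mean((A @ lin_coef - y) ** 2))

print(f"quadratic MSE = {quad_mse:.6f}, linear MSE = {lin_mse:.6f}")
```

The model is still linear in its coefficients, which is why an ordinary least-squares solver works once the inputs are expanded. The interaction column `x0*x1` is an optional extension beyond the squared terms.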

Spotify, which has about 700m users, is investigating data scraping by Anna’s Archive. The leak could benefit AI companies.